Production PostmortemThe Razor Suicide

Unlike previous posts in this series, this is actually something that happened to our own production server today. It resulted in our website being inaccessible for a a couple of hours, and like most such stories, its root cause is tremedously simple, and through a series of unfortunate accidents, it had escalated to a major issue.

First, this post is dedicted to this book, which should be read by any self respecting developer whose code is expected to hit production.

This post is also written a few hours only after the incident was resolved. Before we actually implemented anything except a temporary workaround. I’ll probably have another post in a couple of days to talk about the steps we are going to take to alleviate a repeat of this incident.

The incident started innocently enough, when one of the guys on the team discovered that the startup time of a certain instance jumped by a lot. Investigating into why he realized that the issue was extremely slow responses from our server. That was a cause of triple concern, actually. First, why are we accepting such slow responses instead of time limiting them? Second, why are we making a remote syncronous call during startup? And third, why on Earth is our server so slow?

Logging into the server, it didn’t take long to see what the problem was. The www.RavenDB.net website (the code that runs the RavenDB website, not RavenDB itself) was consuming a lot of CPU and quite a bit of memory. In a bit to restore the other services which reside on the same box, we reset the process. Our main concern at the time was to restore service as soon as possible, and we planned on investigating further through the logs.

However, in a few minutes, the www.RavenDB.net website started consuming more and more resources. At that point, we started considering a DoS attach of some sort and looked a the logs. The logs did show a very high number of requests, much more than I would expect. But looking further into them, it looked like they were mostly bots of various kinds indexing our site.

Considering that this might be the case of Google hammering us, we configured a robots.txt on the site and waited to see if this would have an impact. It didn’t.

The next step was to take a process dump of the process, and then analyze it. During this period, we had to shut down www.RavenDB.net because it was killing all other services running on the server.

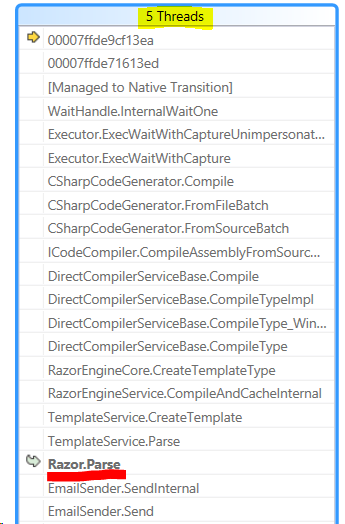

Looking at the dump in WinDBG, we started with the obvious commands.

- !runaway – to find out the thread cpu times

- switching to the busiest threads

- !clrstack - to see what it is doing

Honestly, this is a much nicer way of looking at this, though:

As you can see, the threads are currently actually doing parsing of a Razor template, and seems to be doing that on a fairly continous basis, consuming all system resources.

At that point, I started getting concerned to the well being of the poor guy’s inbox as a result of this code. That was the point where we actually did what should have probably been our first action, and looked at the error log of the website.

Previously, we looked at the live metrics and the request log, but didn’t consider looking into the error log for the system. The error log for the website, for today only, was 6GB in size, and was pretty full of errors such as:

- error: (221, 88) 'HibernatingRhinos.Orders.Common.EmailProcessing.EmailTemplates.RavenDBWebsite.Models.DownloadQuestionMailInput' does not contain a definition for 'Unsubscribe' and no extension method 'Unsubscribe' accepting a first argument of type

And at that point, we had enough to point a suspicious finger. We have an email that we send out, and we used to have a valid template. At some point, the code was changed and the Unsubscribe was removed. Nothing broke because the template is just a file, not actually compiling code. However, in production, when we tried to send the email, Razor would parse the text, fail compilation because of the missing member, and basically thorw a hissy fit.

Update: We investigated further, and it looks like the following was the actual “solution” to the outage:

The “solution” is in quotes, becasue this fixes the problem, but we need to still implement steps to ensure that something like that doesn’t repeat.

Unforuntately, at that point, we would consider this email as failing, and move on to the next one. That next one would also fail, and so would the next one, etc. Because all of them failed, they would get picked up again next time this run.

Once we knew where the problem was. The workaround was to deploy a version with no email sending. For this weekend, that will work. But come Sunday, someone is going to go over this piece of code with a veyr fine comb. I’ll post more about it once this actually roll around.

More posts in "Production Postmortem" series:

- (07 Apr 2025) The race condition in the interlock

- (12 Dec 2023) The Spawn of Denial of Service

- (24 Jul 2023) The dog ate my request

- (03 Jul 2023) ENOMEM when trying to free memory

- (27 Jan 2023) The server ate all my memory

- (23 Jan 2023) The big server that couldn’t handle the load

- (16 Jan 2023) The heisenbug server

- (03 Oct 2022) Do you trust this server?

- (15 Sep 2022) The missed indexing reference

- (05 Aug 2022) The allocating query

- (22 Jul 2022) Efficiency all the way to Out of Memory error

- (18 Jul 2022) Broken networks and compressed streams

- (13 Jul 2022) Your math is wrong, recursion doesn’t work this way

- (12 Jul 2022) The data corruption in the node.js stack

- (11 Jul 2022) Out of memory on a clear sky

- (29 Apr 2022) Deduplicating replication speed

- (25 Apr 2022) The network latency and the I/O spikes

- (22 Apr 2022) The encrypted database that was too big to replicate

- (20 Apr 2022) Misleading security and other production snafus

- (03 Jan 2022) An error on the first act will lead to data corruption on the second act…

- (13 Dec 2021) The memory leak that only happened on Linux

- (17 Sep 2021) The Guinness record for page faults & high CPU

- (07 Jan 2021) The file system limitation

- (23 Mar 2020) high CPU when there is little work to be done

- (21 Feb 2020) The self signed certificate that couldn’t

- (31 Jan 2020) The slow slowdown of large systems

- (07 Jun 2019) Printer out of paper and the RavenDB hang

- (18 Feb 2019) This data corruption bug requires 3 simultaneous race conditions

- (25 Dec 2018) Handled errors and the curse of recursive error handling

- (23 Nov 2018) The ARM is killing me

- (22 Feb 2018) The unavailable Linux server

- (06 Dec 2017) data corruption, a view from INSIDE the sausage

- (01 Dec 2017) The random high CPU

- (07 Aug 2017) 30% boost with a single line change

- (04 Aug 2017) The case of 99.99% percentile

- (02 Aug 2017) The lightly loaded trashing server

- (23 Aug 2016) The insidious cost of managed memory

- (05 Feb 2016) A null reference in our abstraction

- (27 Jan 2016) The Razor Suicide

- (13 Nov 2015) The case of the “it is slow on that machine (only)”

- (21 Oct 2015) The case of the slow index rebuild

- (22 Sep 2015) The case of the Unicode Poo

- (03 Sep 2015) The industry at large

- (01 Sep 2015) The case of the lying configuration file

- (31 Aug 2015) The case of the memory eater and high load

- (14 Aug 2015) The case of the man in the middle

- (05 Aug 2015) Reading the errors

- (29 Jul 2015) The evil licensing code

- (23 Jul 2015) The case of the native memory leak

- (16 Jul 2015) The case of the intransigent new database

- (13 Jul 2015) The case of the hung over server

- (09 Jul 2015) The case of the infected cluster

Comments

We've had a lot of similar issues with Razor Engine as well as some other hard to diagnose. As a result I decided instead of using runtime template parsing to generate everything upfront at the moment when one edits an email template. This solution won't work if you support updating email templates at run time. But if you don't support that and create the templates upfront then RazorEngine.Generator ( https://visualstudiogallery.msdn.microsoft.com/4cebc8ac-50dc-4381-8f2a-634f318f06df ) will help, it's a VS extension and after you install it you'll need to set custom tool for your razor files to RazorEngineGenerator.

With vanilla ASP.NET MVC, you could catch those errors by using ASP.NET pre-compilation in your build process (or Resharper while still in dev). Since you are using Razor Engine, I am keen to see how you fix it properly, especially as I am also using Razor Engine for email generation, albeit in a Windows service which won't flatten the website when it goes titsup.

The first thoughts when I read this is that this is an Razor bashing post ...

What you described, is not by any means a Razor issue. Razor behaves very well here, it' can't parse, it gives and error, as specific as possible. It's not even an ASP.Net issue, the fact the Razor views are just in time compiled on access, is a well known behavior. The fact that the Application Email Sender uses Dynamically compiled razor views should have been well known as well.

It is more of an technical implementation issue.

Visual Studio and tools (Resharper) all have support for refactoring razor views and compiling them. The fact that the solution was structured in such a way that model renaming was not detected during a release build (if not a debug one) kind of defeats the purpose of using a Strong Typed View Engine imho.

Another more important issue is the fact that there is an infinite retry in place. Infinite retries is an disaster waiting to happen in any application/system, and among the biggest anti patterns there are: From installers that infinitely retry to access files locked by other installers that wait for the firs ones to unlock some other files, to unending reboot cycles, to applications that infinitely try save a file, and others ...

People say multi threading is hard .... it's way easier than proper error handling ...

Nice post. I'd also say that the fact that you were not alerted to production errors is also a problem.

Don't you have any kind of tests on the code that generates the content of the mail body? That would have found the compile error in the razor view before it reached the production.

I would separate the process for sending emails from the website. One idea: introduce an asynchronous queue. Whenever you need to send an email, put a message on a queue somewhere. A listener on the queue would process the emails asynchronously and independently from any other process. This can be on a separate server and independently scaled. After a few retries, a failed message should go to a dead letter queue. You can monitor both queues in various ways. If anything goes wrong with sending emails, your main website is unaffected.

May this be the solution to ensure this does not repeat ?

http://stackoverflow.com/questions/20156472/how-can-i-make-builds-fail-in-vs-2013-if-theres-a-razor-c-error-in-a-cshtml-f

This project can help to find razor's problems at compile time https://github.com/RazorGenerator/RazorGenerator

I have to agree with Marc.

The fact that you were not alerted to production errors is IMHO the real problem. Everything can happen and the Razor suicide is only one possible error. Errors always happen, and you´ll never catch all (so may tests you cannot write), but the most important thing is, to get notified, when something goes really wrong. We scan our logs files or database tables with logs for errors regulary (batch/powershell/sql,.. scripts), and send simple status emails to an internal log email address. One person has to check them in the morning (during the cup of coffee) I can recommend this.

<MvcBuildViews>true</MvcBuildViews> http://haacked.com/archive/2011/05/09/compiling-mvc-views-in-a-build-environment.aspx/

I agree with JTE. I wish there were a UI for this, and I continually forget to set it for new projects since it defaults to false, but it can be a lifesaver.

A tool that my team and I use to prevent such scenarios is Resharper.

So first, we would see if the class had any uses for that Property and then Remove it. OR you could just check for Errors in the solution - even non-compiling errors like that would appear in ReSharper.

Comment preview