This is a sordid tale of chance and mystery and the nasty tricks that Murphy can play on you.

A few customers reported an error similar to the following one:

Invalid checksum for page 1040, data file Raven.voron might be corrupted, expected hash to be 0 but was 16099259854332889469

One such case might be a disk corruption, but multiple customers reporting it is an indication of a much bigger problem. That was a trigger for a STOP SHIP reaction. We consider data safety a paramount goal of RavenDB (part of the reason why I’m doing this Production Postmortem series), and we put some of our most experienced people on it.

The problem was, we couldn’t find it. Having access to the corrupted databases showed that the problem occurred at random. We use Voron in many different capacities (indexing, document storage, configuration store, distributed log, etc.) and these incidents happened across the board. That narrowed the problem to Voron itself, rather than bad usage of Voron. This reduced the problem space considerably, but not enough for us to be able to tell what was going on.

Given that we didn’t have a lead, we started by recognizing what the issue was and added more guards against it. In fact, the error itself came from a guard we had added, validating that the data on disk is the same data that we wrote to it. The error above indicates that the data has been corrupted, because the expected checksum doesn’t match the actual checksum computed from the data. This gives us an early warning system for data errors and prevents us from proceeding on erroneous data. We added this primarily because we were worried about physical disk corruption, but it turns out that this is also a great early warning system for when we mess up.
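To make the guard concrete, here is a minimal sketch of a per-page checksum check. This is not Voron's code: the page size, the header layout (checksum in the first 8 bytes), the use of `blake2b` as the 64-bit hash and all of the names are assumptions made purely for illustration.

```python
import hashlib
import struct

PAGE_SIZE = 8192      # assumed page size for this sketch
CHECKSUM_SIZE = 8     # assumed layout: the first 8 bytes hold a 64-bit checksum


class InvalidChecksumError(Exception):
    pass


def compute_checksum(payload: bytes) -> int:
    # Stand-in 64-bit hash; the real engine may well use a different function.
    digest = hashlib.blake2b(payload, digest_size=8).digest()
    return int.from_bytes(digest, "little")


def seal_page(payload: bytes) -> bytes:
    # Called on the write path: store the checksum in front of the payload.
    if len(payload) != PAGE_SIZE - CHECKSUM_SIZE:
        raise ValueError("payload must fill the page exactly")
    return struct.pack("<Q", compute_checksum(payload)) + payload


def validate_page(page_number: int, page: bytes) -> None:
    # Called on the read path: recompute and compare with the stored value.
    (expected,) = struct.unpack_from("<Q", page, 0)
    actual = compute_checksum(page[CHECKSUM_SIZE:])
    if expected != actual:
        raise InvalidChecksumError(
            f"Invalid checksum for page {page_number}, "
            f"expected hash to be {expected} but was {actual}"
        )
```

In this sketch, a page whose header is still all zeroes but whose body contains data fails with "expected hash to be 0", which is the same shape as the error the customers reported.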

The additional guards were primarily extra checks for the safety of the data at various locations in the pipeline. Given that we couldn’t reproduce the issue ourselves, and none of the affected customers were able to reproduce it either, we had no idea where to go from there. Therefore, we had one team that kept trying different steps to reproduce this issue and another team that added safety measures so the system would catch any such issue as early as possible.

The additional safety measures went into the codebase for testing, but we still didn’t have any luck in figuring out what was going on. We went from trying to reproduce this by running various scenarios to analyzing the code and trying to work out how it could happen. Everything pointed to it being completely impossible for this to happen, obviously.

We got a big break when the repro team managed to reproduce this error when running a set of heavy tests on 32-bit machines. That was really strange, because none of the incidents to date had happened on 32 bits.

It turns out that this was a really lucky break, because the problem wasn’t related to 32 bits at all. What was going on is that under 32 bits, we run in a heavily constrained address space, which, under load, can cause us to fail to allocate memory. If this happens at certain locations, it is considered a catastrophic error and requires us to close the database and restart it to recover. So far, this is pretty standard, and both the expected and the desired reaction. However, it looked like this sometimes caused an issue. This also tied in with some observations from customers about the state of the system when this happened (low memory warnings, etc.).

The very first thing we did was to test the same scenario on the codebase with the new checks added. Until then, the repro team had worked on top of the version that failed at the customers’ sites, to prevent any other code change from masking the problem. With the new checks, we were able to confirm that they actually triggered and caught the situation early. That was a great confirmation, but we still didn’t know what was going on. Luckily, we were able to add more and more checks to the system and run the scenario. The idea was to trip over a guard rail as early as possible, to allow us to inspect what actually caused it.

Even with a reproducible scenario, that was quite hard. We didn’t have a reliable method of reproducing it; we had to run the same set of operations for a while and hope to hit the scenario. That took quite a bit of time and effort. Eventually, we figured out the root cause of the issue.

In order to explain that, I need to give you a refresher on how Voron handles I/O and persistent data.

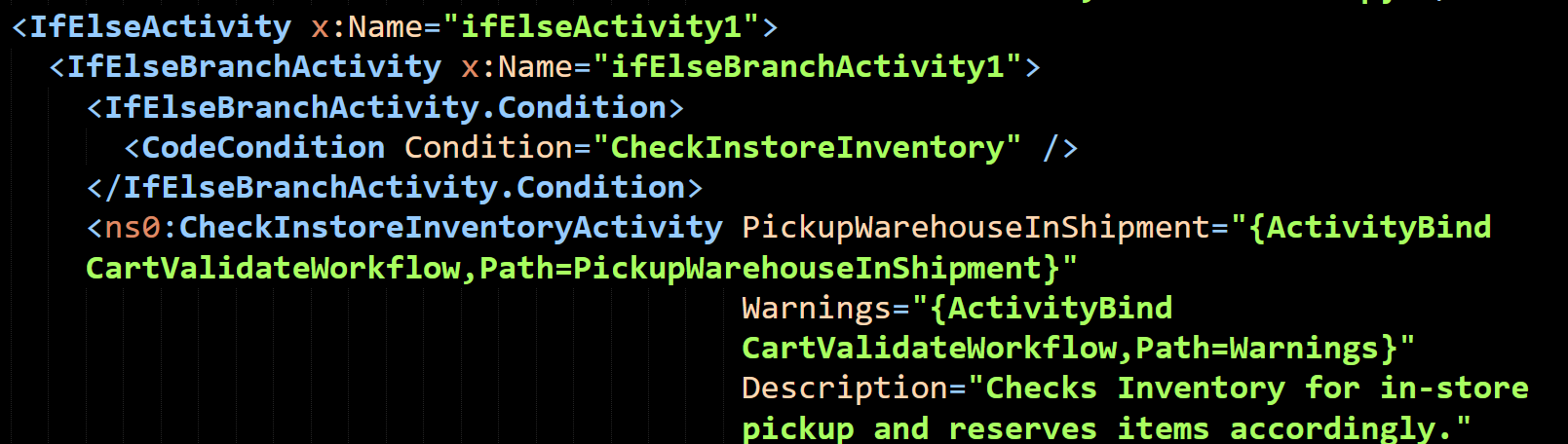

Voron uses an MVCC model, in which any change to the data is actually made on a scratch buffer. This allows us to have snapshot isolation at very little cost and gives us a drastically simplified model for working with Voron. Another important factor is the need to be transactional, which means that we have to make durable writes to disk. In order to avoid doing random writes, we use a Write Ahead Journal. For these reasons, I/O inside Voron is basically composed of the following operations:

- Scratch (MEM) – copy on write data for pages that are going to be changed in the transaction. Usually purely in memory. This is how we maintain the Isolated and Atomic aspects of ACID.

- Journal (WAL) – sequential, unbuffered writes that include all the modifications made by the transaction. This is how we maintain the Atomic and Durability aspects of ACID.

- Flush (MMAP) – copy data from the scratch buffers to the data file, which allows us to reuse space in the scratch file.

- Sync (FSYNC) – ensure that the data from a previous flush is stored on a durable medium, which allows us to delete old journal files.

In Voron 3.5, we had journal writes (which happen on each transaction commit) on one side of the I/O behavior and flush & sync on the other side. In Voron 4.0, we split it even further, meaning that journal writes, data flush and file sync are all separate operations which can happen independently.

Transactions are written to the journal file one at a time, until it reaches a certain size (usually about 256MB), at which point we’ll create a new journal file. Flush will move data from the scratch buffers to the data file, and sync will ensure that the data that was moved to the data file is durably stored on disk, at which point you can safely delete the old journals.
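The lifecycle described above can be sketched as a toy, single-threaded model. Everything here (the `ToyStore` class, its fields, the two-transactions-per-journal capacity) is made up for illustration and is not how Voron is actually structured; the point is only the rule that a journal may be deleted once every transaction in it has been both flushed and synced.

```python
from dataclasses import dataclass, field


@dataclass
class ToyStore:
    journal_capacity: int = 2  # transactions per journal file (toy value, not 256MB)
    journals: dict = field(default_factory=lambda: {0: []})  # journal number -> tx ids
    current_journal: int = 0
    scratch: dict = field(default_factory=dict)    # committed, not yet flushed
    data_file: dict = field(default_factory=dict)  # flushed, maybe not yet durable
    last_committed_tx: int = 0
    last_flushed_tx: int = 0
    last_synced_tx: int = 0

    def commit(self, writes: dict) -> int:
        # Journal write: roll over to a new journal file when the current one is full.
        if len(self.journals[self.current_journal]) >= self.journal_capacity:
            self.current_journal += 1
            self.journals[self.current_journal] = []
        self.last_committed_tx += 1
        self.journals[self.current_journal].append(self.last_committed_tx)
        self.scratch.update(writes)
        return self.last_committed_tx

    def flush(self) -> None:
        # Flush: move pages from the scratch buffers to the data file.
        self.data_file.update(self.scratch)
        self.scratch.clear()
        self.last_flushed_tx = self.last_committed_tx

    def sync(self) -> None:
        # Sync: everything flushed so far is now durable, so journals that
        # contain only synced transactions are no longer needed.
        self.last_synced_tx = self.last_flushed_tx
        for number, tx_ids in list(self.journals.items()):
            is_current = number == self.current_journal
            if not is_current and tx_ids[-1] <= self.last_synced_tx:
                del self.journals[number]
```

In this model, committing three transactions creates a second journal file, and the first one only goes away after a flush followed by a sync. A sync without a preceding flush deletes nothing.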

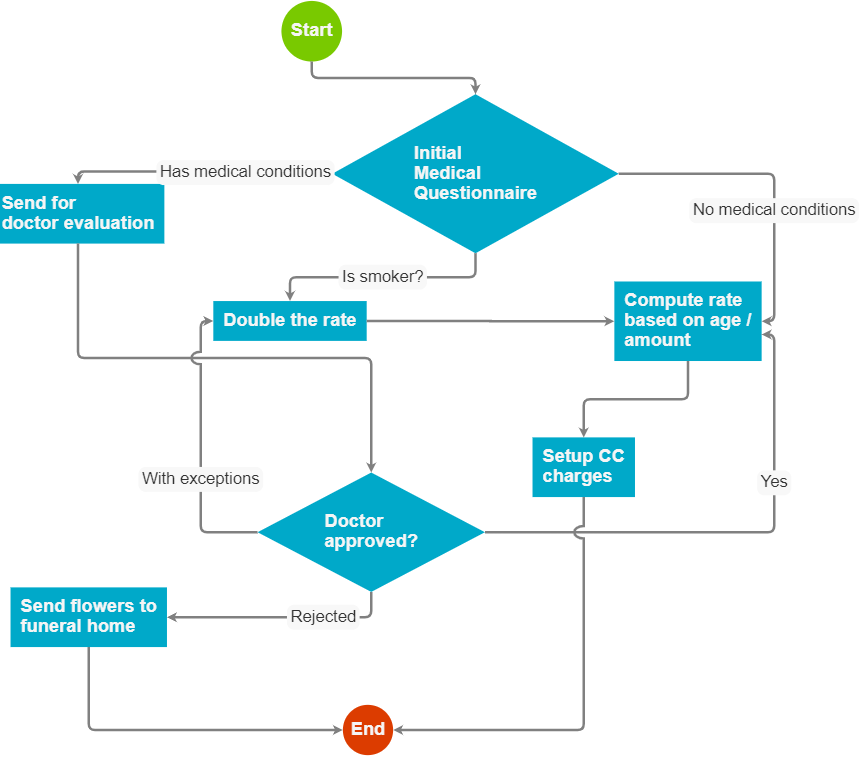

In order to trigger this bug, you needed to have the following sequence of events:

- Have enough transactions happen quickly enough that the flush / sync operations are lagging by more than a single file behind the transaction rate.

- Have a transaction start a new journal file while the flush operation was in progress.

- Have, concurrently, the sync operation carry out an operation that includes that last journal file. Sync can take a lot of time.

- Have another flush operation go on while the sync is in progress, which will move the flush target to the new journal file.

- Have the sync operation complete, having synced only some of the changes that came from that journal. Because the new flush (which we didn’t sync yet) has already moved on from that journal, we mistakenly believe that this journal file is completely done and delete it.

All of these steps are just the setup for the actual problem, mind you.

At this point, the stage is set for the issue, but we have yet to actually experience it. What has happened is that the persistent state (on disk) of the database is now suspect: if a crash happens, we will be missing the oldest journal, which still has transactions that haven’t been properly persisted to the data file.
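Here is a deterministic replay of that sequence, as a sketch. It is my reconstruction of the shape of the bug, not the actual Voron code: the two functions, their names and the transaction numbers are all hypothetical. The buggy variant decides based on where the flush is *now*, while the safe variant decides based on what the sync actually covered.

```python
def journals_to_delete_buggy(journals, flush_journal_now):
    # Assumed shape of the bug: any journal older than the one the flush
    # is currently working on is treated as completely done.
    return sorted(n for n in journals if n < flush_journal_now)


def journals_to_delete_safe(journals, synced_through_tx):
    # A journal is done only if its last transaction was covered by the sync.
    return sorted(
        n for n, tx_ids in journals.items()
        if tx_ids and tx_ids[-1] <= synced_through_tx
    )


# Journal 0 holds tx 1..4, journal 1 was just started and holds tx 5..6.
journals = {0: [1, 2, 3, 4], 1: [5, 6]}

# Step 1: a flush moved tx 1..2 to the data file; the sync starts and
# captures what it is going to make durable.
synced_through_tx = 2

# Step 2: while the (slow) sync is running, another flush moves tx 3..6
# to the data file, so the flush is now working on journal 1.
flush_journal_now = 1

# Step 3: the sync completes and decides which journals can be deleted.
buggy = journals_to_delete_buggy(journals, flush_journal_now)
safe = journals_to_delete_safe(journals, synced_through_tx)

# Transactions that lose their only durable copy under the buggy rule.
lost = [tx for n in buggy for tx in journals[n] if tx > synced_through_tx]
```

Under the buggy rule journal 0 is deleted and tx 3 and 4 exist only in a data file that hasn't been synced yet. Under the safe rule nothing is deleted until a later sync covers them.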

Once you have set up the system properly, you still aren’t done in terms of reproducing this issue. We now have a race: the next flush / sync cycle is going to fix this issue. So you need to have a restart of the database within a very short period of time.

For additional complexity, the series of steps above will cause a problem, but even if you crash in just the right location, there are still some mitigating circumstances. In many cases, you are modifying the same set of pages in multiple transactions, and if the transactions that were lost because of the early deletion of the journal file touched pages that were modified again in later transactions, those later transactions will fill in the missing details and there will be no issue. That was one of the things that made it so hard to figure out what was going on. We needed a very specific set of timings between three separate threads (journal, flush, sync) to create the hole, then another race to restart the database at this point, before Voron fixes itself in the next cycle, all happening just at the stage where Voron moves between journal files (typically every 256MB of compressed transactions, so not very often at all) and with just the right mix of writes to different pages in transactions that span multiple journal files.
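The masking effect is easier to see with a small example. This is a toy model of recovery (replay the surviving journals, in order, on top of whatever is durable in the data file); the `recover` function, the page names and the transaction contents are all invented for the illustration.

```python
def recover(durable_pages, journals):
    # Replay every surviving journal, oldest first, over the durable pages.
    pages = dict(durable_pages)
    for number in sorted(journals):
        for _tx_id, writes in journals[number]:
            pages.update(writes)
    return pages


# What made it to disk before the crash: both pages as of tx 2.
durable = {"A": "tx2", "B": "tx2"}

# The journal that was deleted too early: tx 3 wrote page A, tx 4 wrote page B.
journal_0 = [(3, {"A": "tx3"}), (4, {"B": "tx4"})]

# Case 1: the next journal rewrites both pages, which hides the hole.
journal_1_masking = [(5, {"A": "tx5", "B": "tx5"})]
# Case 2: the next journal rewrites only page A, so page B stays stale.
journal_1_exposing = [(5, {"A": "tx5"})]

masked = recover(durable, {1: journal_1_masking})
masked_expected = recover(durable, {0: journal_0, 1: journal_1_masking})

exposed = recover(durable, {1: journal_1_exposing})
exposed_expected = recover(durable, {0: journal_0, 1: journal_1_exposing})
```

In the first case the recovered state is identical with or without the missing journal. In the second, page B comes back as of tx 2 instead of tx 4, and that is the kind of hole that eventually surfaces as corruption.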

These are some pretty crazy requirements for reproducing such an issue, but as the saying goes: One in a million is next Tuesday.

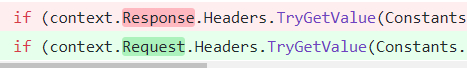

What made this bug even nastier was that we didn’t catch it earlier. We take the consistency guarantees of Voron pretty seriously and we most certainly have code to check if we are missing transactions during recovery. However, we had a bug in this case. Because obviously there couldn’t be a transaction previous to Tx #1, we aren’t checking for a missing transaction at that point. At least, that was the intention of the code. What was actually executing was a check for missing transactions on every transaction except for the first transaction on the first journal file read during recovery. So instead of skipping the check just on Tx #1, we skipped it on the first tx of every recovery.
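The difference between what was intended and what was executing looks roughly like this. Again, a sketch under assumptions: the function names, the exception type and the idea that the data file records the last transaction persisted to it (`last_persisted_tx`) are mine, chosen to illustrate the shape of the check.

```python
class MissingTransactionError(Exception):
    pass


def check_buggy(last_persisted_tx, journal_tx_ids):
    # What was effectively running: the first transaction seen during
    # recovery is never compared against anything (last_persisted_tx is
    # ignored), whatever its number is.
    previous = None
    for tx_id in journal_tx_ids:
        if previous is not None and tx_id != previous + 1:
            raise MissingTransactionError(f"gap between tx {previous} and tx {tx_id}")
        previous = tx_id


def check_intended(last_persisted_tx, journal_tx_ids):
    # The intent: only Tx #1 is exempt, because nothing can precede it.
    # Every other transaction must follow something we already know about.
    previous = last_persisted_tx
    for tx_id in journal_tx_ids:
        if tx_id != 1 and tx_id > previous + 1:
            raise MissingTransactionError(f"gap between tx {previous} and tx {tx_id}")
        previous = max(previous, tx_id)
```

With the data file durable through tx 2 and the oldest surviving journal starting at tx 5, `check_buggy(2, [5, 6])` passes silently, while `check_intended(2, [5, 6])` raises because tx 3 and 4 are nowhere to be found.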

Of course, this is the exact state that we have caused in this bug.

Sigh.

We added all the relevant checks, tightened the guard rails a few more times to ensure that a repeat of this issue will be caught very early and provided a lot more information in case of an error.

Then we fixed the actual problems and subjected the database to what in humans would be called enhanced interrogation techniques. Hammers were involved, as well as an irate developer with a penchant for pulling the power cord at various stages just to see what would happen.

We have released the fix in the RavenDB 4.1.4 stable release and we encourage all users to upgrade as soon as possible.