One of the most important aspect of a database engine is that it needs to support durability. Now, that is a personal opinion as a database author, but I consider this to be a pretty important consideration when selecting a database. Other database engines disagree, from pure in memory databases that lose all the data on restart to databases that makes “best effort” and will work as long as they don’t crash in the wrong place.

Because there are several different metrics for what durable means, I’ll provide several levels of possible durability requirements.

- None – if the application / server restarts, all the data is lost. Typically is used for in memory databases, explicitly giving up durability for performance.

- Try – the data is written to disk, but no attempt is made to make sure that it is coherent / up to date. Typically is used to startup an in memory / near in memory database from cold state.

- Crash – if the database has confirmed a write, and then immediately crashed, the data is still there. Most databases try to get to this level.

- Power loss – if the database has confirmed a write, even complete power loss of the entire machine will still keep the written data. This is where you wanna be.

Note that in all those cases, I’m talking about single node databases, distributed stuff is a lot more complex, so I’ll not touch it here.

This is when talking about durability, but there is also the notion of atomicity, in other words, a transaction that is composed of multiple operations should either be there complete (and certain if confirmed to the client) or not be there at all (rollback), there should never be a situation where some of the records went in, and some didn’t.

Finally, there is the paranoid mode for durability, in which you don’t trust the hardware, so you write to multiple locations, hash it and validate. Personally, at that level, I think that this is the purpose of the file system to verify that, and this is where the responsibility of the database ends, but if you are stuck with a poor file system choice (like FAT a decade or two ago), that was certainly something that you’ll have to consider.

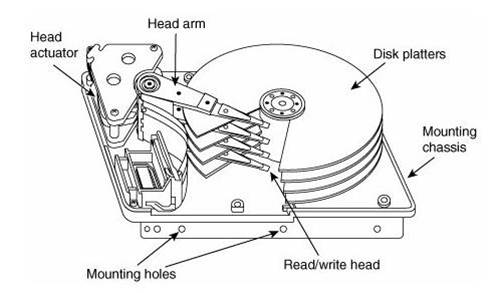

At any rate, one of the major problems with gaining durability is that you have to pay so much for it. In order to actually be durable, you have to write your changes to the actual disk platter, and that is something that might actually require physical parts to move, so that is something that is very costly. How costly? A high end (15,000 RPM) hard disk can do a theoretical maximum of 250 such writes per second, and that is an extremely theoretical number. In most cases, even on high end hard disks, you’ll see a maximum of a 100 – 150 per second. You can probably double or triple that for high end SSD drive, but those are still very poor numbers, compared to the number of operations you can do in memory and in the CPU in that time frame.

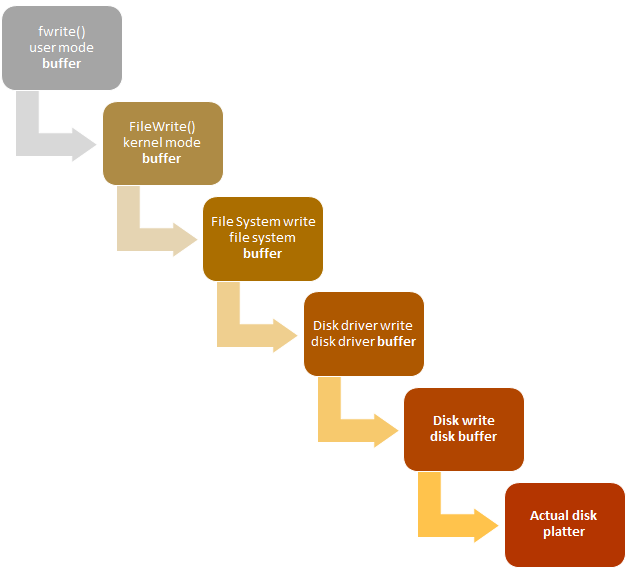

That puts a pretty hard limit on the number of times you can hit the disk, but that is not something that we can tolerate, so the process of making a write in most operation systems, looks like this:

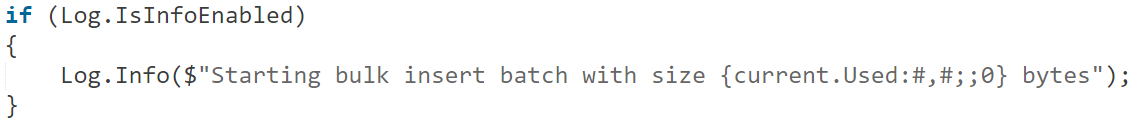

Notice the number of buffers in the middle? Each of them is going to gather the I/O as it can before issuing a (large) write to the layer below them. With the idea that this way, we can amortize the cost of going to the physical disk among many different writes. It works, it works quite well, in fact, to the point where most of the time, you don’t really think how slow the hardware is really is.

But for databases, this is a horrendous problem. To start with, if I’m issuing a write to the disk, I have no idea when this actually hit the platter. For fun, all through this chain (which is actually simplified), each component is free to re-order the writes in any way it feels like it, so we can’t even rely on the order of the writes to ensure consistency. No, in order to protect itself from data loss / corruption in the presence of power loss, we have to tell the operating system to actually skip all those layers and flush directly to disk.

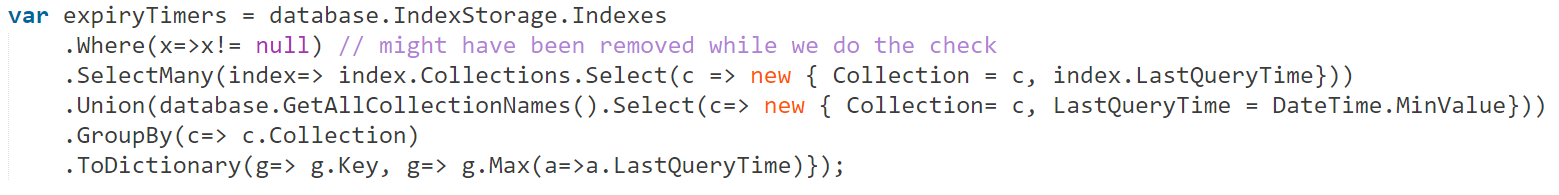

As I said, this is expensive to do. In particular, the normal way to do to make your writes, and then to to call fsync(fd) in order to flush those changes down the chain, all the way to the disk. This has a few issues, however. In particular, note that in the gap between the file system and the disk driver, we’ll lose the correlation between writes made to a particular file and any other writes made to that device. That end result is that the fsync command forces us to flush the entire disk driver buffer (and the disk buffers, etc). In production systems, that can be hundreds of MB that were innocently sitting there, slowly being written to disk, and suddenly you have this disruptive fsync that need to be written, so everything is flushed to disk, and the fsync (which you expect to be short, because you wrote on only a few dozen KB) now takes a minute, because it is actually flushing 100 MB writes from totally different process.

This post is getting long enough, so I’ll defer the actual discussion on how databases actually achieve durability to the next one, in the meantime, just consider the complexities involved in making sure that the data is on the disk, and how much of the design of modern databases is spent in optimizing just this part.