I was pointed to this blog post which talks about the experience of using RavenDB in production. I want to start by saying that I love getting such feedback from our users, for a whole lot of reasons, not the least of which is that it is great to hear what people are doing with our database.

Alex has been using RavenDB for a while, so he had the chance to use both RavenDB 3.5 and 4.2. That is good from my perspective, because it means that he got to see what the changes were and how they impacted his routine usage of RavenDB. I’m going to call out (and discuss) some of the points that Alex raises in the post.

Speaking about .NET integration:

Raven’s .NET client API trumps MongoDB .NET Driver, CosmosDB + Cosmonaut bundle and leaves smaller players like Cassandra (with DataStax C# Driver), CouchDB (with MyCouch) completely out of the competition.

When I wrote RavenDB 0.x, way before the 1.0 release, it took two months to build the core engine, and another three months to build the .NET client. Most of that time went on Linq integration, by the way. Yes, it literally took more time to build the client than the database core. We put a lot of effort into that. I was involved for years in the NHibernate project and I took a lot of lessons from there. I’m very happy that it shows.

Speaking about technical support:

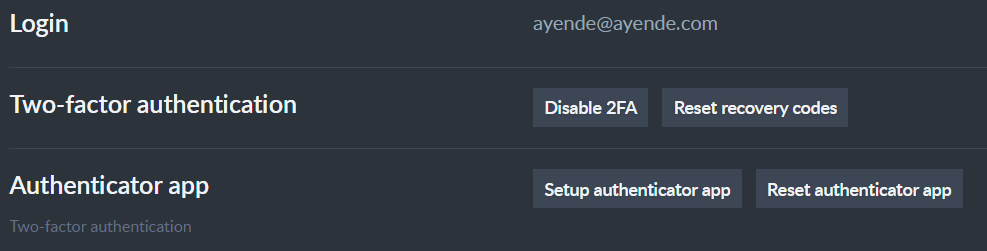

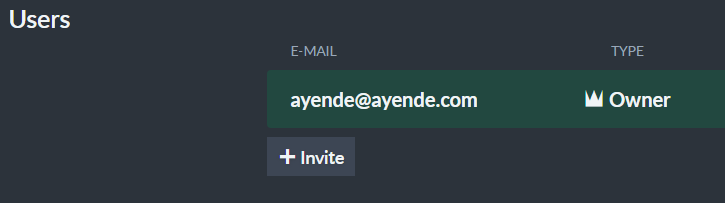

RavenDB has a great technical support on Google Groups for no costs. All questions, regardless of the obtained license, get meaningful answers within 24 hours and quite often Oren Eini responds personally.

Contrary to the Google Groups, questions on StackOverflow are often neglected. It’s a mystery why Raven sticks to a such archaic style of tech support and hasn’t migrated to StackOverflow or GitHub.

I care quite deeply about the quality of our support, to the point where I’ll often field questions directly, as Alex notes. I have an article on LinkedIn that talks about my philosophy in that regard, which may be of interest.

As to Alex’s point about Stack Overflow vs. Google Groups, the key difference is the way we can discuss things. On Stack Overflow, the focus is on an answer, but that isn’t our usual workflow when providing support. Here is a question that would fit Stack Overflow very well: there is a well-defined problem with all the details, and we are able to provide an acceptable answer in a single exchange. That kind of interaction, however, is quite rare. It is far more common to need a lot of back and forth, and we tend to try to give a complete solution, not just answer the initial question.

Another issue is that Stack Overflow isn’t moderated by us, which means that we would be subject to rules that we don’t necessarily want to adhere to. For example, we get asked similar questions all the time. On Stack Overflow those would be marked as duplicates and closed, but we want to actually answer people.

GitHub issues are a good option for this kind of discussion, but they tend to cause people to raise issues, and one of the reasons that we have the Google Group is to create discussion. I guess it comes down to the different communities that spring up around each communication medium.

Speaking about documentation:

RavenDB does have the official docs, which are easily navigable and searchable. Works well for beginners and provides good samples to start with, but there are gaps here and there, and it has far less coverage of the functionality than many popular free and open source projects.

…

Ultimately, as a developer, I want to google my question and it’s acceptable to have the answer on a non-official website. But if you’re having a deep dive with RavenDB, it’s unlikely to find it in the official docs, nor StackOverflow, nor GitHub.

Documentation has always been a chore for me. The problem is that I know what the software does, so it can be hard to even figure out what we need to explain. This year we have hired a couple more technical writers specifically to address the missing pieces in our documentation. I think we are doing quite well at this point.

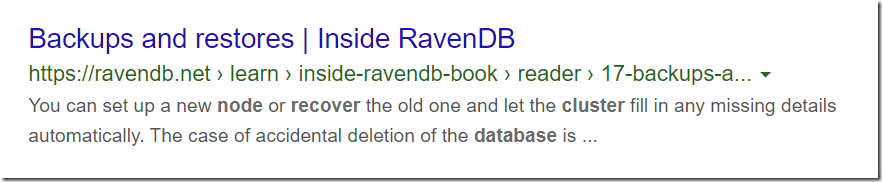

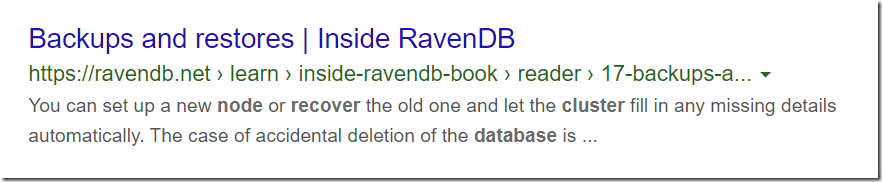

What wasn’t available at the time of this post and is available now is the book. All of the details about RavenDB that you could care about, and more, are covered there. It is also indexed by Google, so in many cases your Google search will point you to the right section in the book that may answer your question.

I hope that these actions have covered the gaps that Alex noted in our documentation. And there is also this blog, of course.

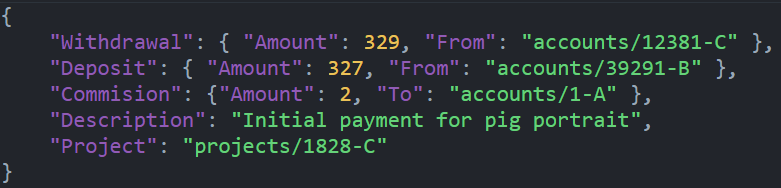

Speaking about issues that he had run into:

It’s reliable and does work well. Unless it doesn’t. And then a fix gets promptly released (a nightly build could be available within 24 hours after reporting the issue). And it works well again.

…

All these bugs (and others I found) have been promptly fixed.

… stability of the server and the database integrity are sacred. Even a slight risk of losing it can keep you awake at night. So no, it’s a biggy, unless the RavenDB team convinces me otherwise.

I didn’t include the list of issues that Alex pointed to on purpose. The actual issues don’t matter that much, because he is correct. From Alex’s perspective, RavenDB ought to Just Work, and anything else is our problem.

We spend a lot of time ensuring the quality of RavenDB. I had a two-part discussion with Jeffrey Palermo about just that, and you might be interested in this keynote that goes into some of the challenges that are involved in making RavenDB.

One of the issues that he raised was RavenDB crashing (or causing Windows to crash) because of a bug in the Windows Kernel that was deployed in a hotfix. The hotfix was quietly patched some time later by Microsoft, but in the meantime, RavenDB would crash. And a user would blame us, because we crashed.

Another issue (RavenDB upgrade failing) was an intentional choice on our part, however. We had a bug that could cause data corruption in some cases. We fixed it, but we still had to deal with the potentially problematic state of existing databases. We chose to be conservative and ask the user to take an explicit action in this case, to prevent data loss. It isn’t ideal, I’m afraid, but I believe that we have done the best that we could after fixing the underlying issue. When in doubt, we pretty much always have to favor preventing data loss over availability.

Speaking about Linq & JS support:

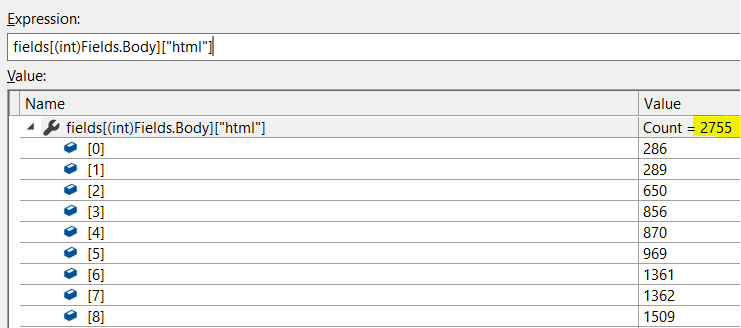

let me give you a sense of how often you’ll see LINQ queries throwing NotSupportedException in runtime.

…

But in front of us a special case — a database written in the .NET! There is no need in converting a query to SQL or JavaScript.

I believe that I mentioned already that the initial Linq support for RavenDB literally took more time than building RavenDB itself, right? Linq is an awesome feature, for the consumer. For the provider, it is a mess. I’m going to quote Frans Bouma on this:

Something every developer of an ORM with a LINQ provider has found out: with a LINQ provider you're never done. There are always issues popping up due to e.g. unexpected expressions in the tree.

Now, as Alex points out, RavenDB is written in .NET, so we could technically use something like Serialize.Linq and support any arbitrary expression easily, right?

Not really, I’m afraid, and for quite a few reasons:

- Security – you are effectively allowing a user to send arbitrary code to be executed on the server. That is never going to end up well.

- Compatibility – we want to make sure that we are able to change our internals freely. If we are forced to accept (and then execute) code from the client, that freedom is limited.

- Performance – issuing a query in this manner means that we’ll have to evaluate the query on each document in the relevant collection. A full table scan. That is not a feature that RavenDB even has, and for a very good reason.

- Limited to .NET only – we currently have clients for .NET, JVM, Go, Python, C++ and Node.js. Having features just for one client is not something that we want; it really complicates our lives.

We think about queries in terms of RQL, which is abstract in nature and doesn’t tie us down with regard to how we implement them. That means that we can use features such as automatic indexes, build fast queries, etc.
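To make the contrast concrete, here is a minimal sketch of the "translate, don’t execute" approach. It is not the RavenDB client’s code: the node types (`Field`, `Const`, `Compare`, `And`, `Call`) and the `to_query` function are hypothetical, and the query text it emits is only RQL-like. The point is that the server receives an abstract query plus parameters, and anything the translator doesn’t recognize fails on the client instead of being shipped as code.

```python
from dataclasses import dataclass
from typing import Any, Union

# Hypothetical expression nodes for illustration; not part of any RavenDB client.
@dataclass
class Field:
    name: str

@dataclass
class Const:
    value: Any

@dataclass
class Compare:
    op: str          # one of the operators in ALLOWED_OPS
    left: Field
    right: Const

@dataclass
class And:
    left: "Expr"
    right: "Expr"

@dataclass
class Call:
    # Stands in for an arbitrary method call the translator does not understand.
    method: str

Expr = Union[Compare, And, Call]

ALLOWED_OPS = {"=", "!=", "<", "<=", ">", ">="}

def to_query(collection: str, expr: Expr):
    """Translate a whitelisted expression tree into query text plus parameters."""
    params = {}

    def visit(node):
        if isinstance(node, Compare) and node.op in ALLOWED_OPS:
            key = f"p{len(params)}"
            params[key] = node.right.value   # values travel as parameters, not code
            return f"{node.left.name} {node.op} ${key}"
        if isinstance(node, And):
            return f"({visit(node.left)} and {visit(node.right)})"
        # Anything else is rejected on the client, never sent to the server.
        raise NotImplementedError(f"cannot translate {type(node).__name__}")

    return f"from {collection} where {visit(expr)}", params

query, params = to_query(
    "Employees",
    And(Compare("=", Field("City"), Const("London")),
        Compare(">", Field("Age"), Const(30))),
)
print(query)
print(params)
```

The rejection branch is the analogue of the `NotSupportedException` that Alex mentions: it is the price of never executing client-supplied code on the server.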

Speaking about RQL:

Alex points out some issues with RQL as well. The first issue relates to the difference between a field existing and having a null value. RavenDB makes a distinction between these states: a field can have a null value or it can be missing entirely. This is similar to the behavior of NULL in SQL, which often creates the same kind of confusion. The added wrinkle with RavenDB is that a schema isn’t required, so different documents can have different fields, and that gives us an additional level. A field can have a value, be null, or not exist, and we reflect that in our queries. Unfortunately, while the behavior is well defined and documented, just like NULL behavior in SQL, it can be surprising to users.
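The three states are easy to see with plain documents. The sketch below uses ordinary Python dictionaries rather than RavenDB itself; the `MISSING` sentinel, the `field_state` helper and the sample documents are all made up for the illustration.

```python
# Hypothetical sentinel meaning "the field is not present in the document".
MISSING = object()

docs = [
    {"id": "users/1", "nickname": "Al"},   # field has a value
    {"id": "users/2", "nickname": None},   # field exists, value is null
    {"id": "users/3"},                     # field does not exist at all
]

def field_state(doc, field):
    """Classify a field as 'value', 'null' or 'missing'."""
    value = doc.get(field, MISSING)
    if value is MISSING:
        return "missing"
    if value is None:
        return "null"
    return "value"

states = {doc["id"]: field_state(doc, "nickname") for doc in docs}

# "Is null" and "does not exist" select different documents.
null_ids = [d["id"] for d in docs if field_state(d, "nickname") == "null"]
missing_ids = [d["id"] for d in docs if field_state(d, "nickname") == "missing"]
print(states, null_ids, missing_ids)
```

A query that only checks for null would skip `users/3`, which is exactly the kind of surprise described above.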

Another issue that Alex brings up is that negation queries aren’t supported directly. This is because of the way we process queries, and it is one of the ways we ensure that users are aware of the impact of a query. With a negation query, we have to first match all documents and then exclude all those that match the negation. For a large number of documents, that can be expensive. Ideally, the user has a way to limit the scope of the matches that are being negated, which can really help performance.
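A toy model shows why the scope matters. This is a sketch over an in-memory inverted index, not how RavenDB’s indexes are implemented; the `index` layout and both function names are assumptions made for the example.

```python
# A toy inverted index: (field, term) -> set of matching document ids.
all_ids = set(range(1, 1001))
index = {
    ("status", "archived"): {i for i in all_ids if i % 10 == 0},
    ("owner", "alex"): set(range(1, 21)),
}

def not_equal_unscoped(term):
    """Negation alone: start from every document, then exclude the matches."""
    candidates = set(all_ids)
    return candidates - index[term], len(candidates)

def not_equal_scoped(scope_term, term):
    """Negation within a positive match: start from the scope only."""
    candidates = set(index[scope_term])
    return candidates - index[term], len(candidates)

unscoped, unscoped_cost = not_equal_unscoped(("status", "archived"))
scoped, scoped_cost = not_equal_scoped(("owner", "alex"), ("status", "archived"))
print(len(unscoped), unscoped_cost)
print(len(scoped), scoped_cost)
```

In this model the unscoped negation has to consider all 1,000 documents, while the scoped one only considers the 20 that the positive clause already matched.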

Speaking about safe by default:

RavenDB is a lot less opinionated than it used to be. Alex rightfully points that out. As we got more users, we had to expand what you could do with RavenDB. It still pains me to see people do things that are going to be problematic in the end (extremely large page sizes are one good example), but our users demanded that. To quote Alex:

I’d preferred a slowed performance in production and a warning in the server logs rather than a runtime exception.

Our issue with this approach is that no one looks at the logs, and that this usually comes to a head at 2 AM, resulting in a support call from the ops team about a piece of software that broke. Because of this, we have removed many such limits, turning them into alerts instead, and the very few that remain (mostly just the number of requests per session) can be controlled globally by the admin directly from the Studio. This ensures that the ops team can do something if you hit the wall, and of course, you can also configure this globally from the client side easily enough.
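For readers who haven’t run into it, this is roughly what a requests-per-session guard looks like. The sketch is a stand-in, not the RavenDB client: the `Session` class, the `GLOBAL_SETTINGS` dictionary, the error type and the default of 30 are all assumptions chosen for the illustration.

```python
class TooManyRequestsError(RuntimeError):
    """Raised when a session exceeds its request budget (hypothetical type)."""

# Stands in for a globally configured value; 30 is an arbitrary choice here.
GLOBAL_SETTINGS = {"max_requests_per_session": 30}

class Session:
    def __init__(self, max_requests=None):
        # A per-session override wins; otherwise fall back to the global setting.
        if max_requests is None:
            max_requests = GLOBAL_SETTINGS["max_requests_per_session"]
        self.max_requests = max_requests
        self.request_count = 0

    def load(self, doc_id):
        self._count_request()
        return {"id": doc_id}  # pretend this came from the server

    def _count_request(self):
        self.request_count += 1
        if self.request_count > self.max_requests:
            raise TooManyRequestsError(
                f"session made {self.request_count} requests, "
                f"limit is {self.max_requests}"
            )

session = Session(max_requests=2)
session.load("orders/1")
session.load("orders/2")
try:
    session.load("orders/3")   # third request goes over the budget
    hit_limit = False
except TooManyRequestsError:
    hit_limit = True
print(hit_limit)
```

Raising the global value changes the budget for every new session without touching application code, which is the escape hatch the ops team needs at 2 AM.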

As a craftsman, it pains me to remove those limits, but I have to admit that it significantly reduced the number of support calls that we had to deal with.

Conclusion:

Overall, I can say RavenDB is a very good NoSQL database for .NET developers, but the ”good” is coming with a bunch of caveats. I’m confident in my ability to develop any enterprise application with RavenDB applying the Domain-driven design (DDD) philosophy and practices.

I think that this is really the best one could hope for. I think that Alex’s review is honest and to the point. Moreover, it is focused and detailed. That makes it very valuable, because he got to his conclusions not from a brief tour of RavenDB but from actually holding it up in the trenches.

Thanks, Alex.