The last production postmortem that I blogged about in real time was almost a year ago. This is something that makes me very happy, considering the uptick we see in RavenDB usage. All the efforts we put into making RavenDB more stable, predictable and robust has been paying off. The “downside” of that is that I have less interesting stories to tell, of course, but I’ll live with that.

Today’s story, however, is about the nastiest of problems. An occasional slow down in production that cause RavenDB to halt for about 5 seconds. The killer is that this is something that would only reproduce after several weeks of running, and it isn’t consistent. Once in a while, without any consistency, RavenDB would appear to stop processing requests for a period of a few seconds, and then resume normally. Those kind of bugs are the worst, because it is very hard to narrow down exactly what is going on, even before we get to trying to figure out the root cause.

We quickly ruled out the usual suspects. There was no high CPU, swapping to disk or slow I/O that can explain it. We tested the underlying hardware and it seemed fine as well. The problem would usually be quickly fixed if you restarted RavenDB, but sometimes that wasn’t enough. Restarting the whole server was required to get back to the baseline performance. Note that usually, RavenDB performed just fine, it is just that occasionally it would pause.

This naturally made us suspect that we have some issue with the GC causing pauses, but it didn’t make sense. Our allocation rates weren’t high and we didn’t have that big of a managed heap. In short, pretty much all avenues of investigation looked like they were closed to us.

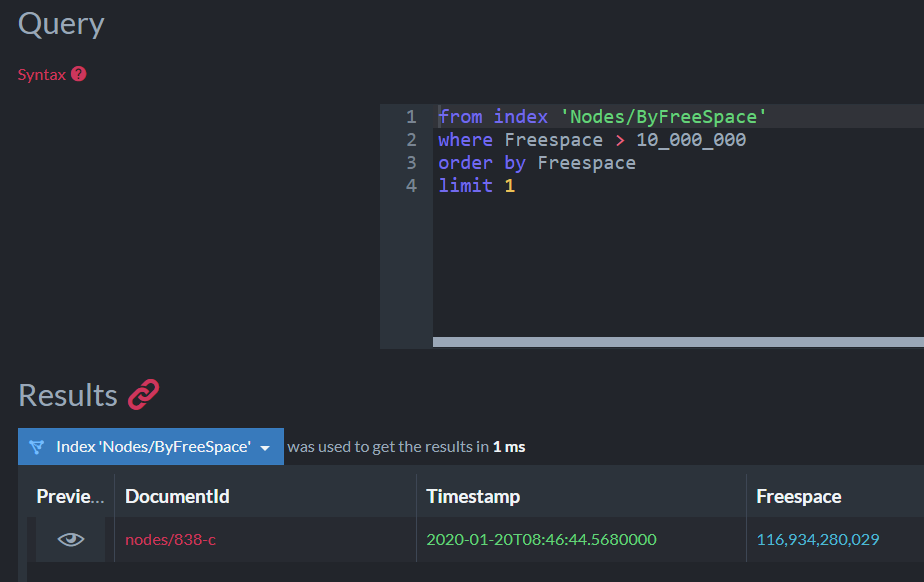

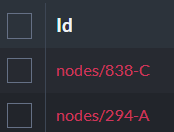

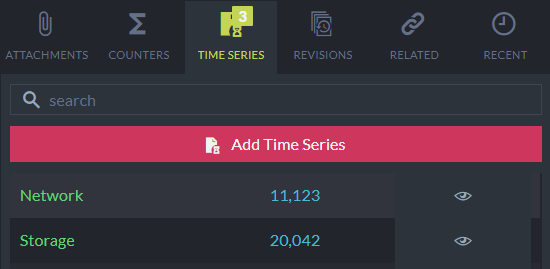

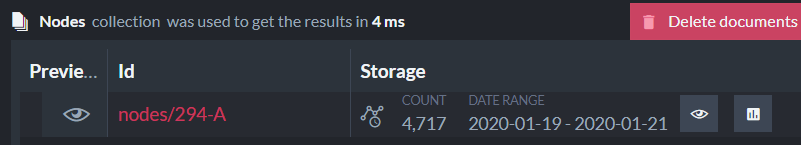

We took several dumps of the process state and inspected what was going on there. Pretty much all indications pointed to there being an issue with the GC, but we couldn’t figure out why. Then we started to analyze the dump file in more detail, here is everything in the dump that was over 100MB:

The total size of the managed heap was just over 8GB, in a system with 64GB of RAM. So nothing really that interesting. The number of strings was high, I’ll admin, much higher than what we’ll usually find in a RavenDB process, but this database instance was doing heavy indexing, so that was probably the reason for this.

But pay very close attention to the second item from the end. That is about 800 MB (!!) of ThreadLocal<WeakReference>.LinkedSlotVolatile array. And that was suspicious. We looked into this a bit more and discovered that we had this tidbit:

00007f8203682ce0 50062 2002480 System.Threading.ThreadLocal`1[[System.WeakReference, System.Private.CoreLib]]

To start with, that isn’t too bad. We have 2MB or so of ThreadLocal<WeakReference> instances, no big deal. But look at the instance count (which is the second column). We had over 50,000 of those. And that didn’t seem right at all.

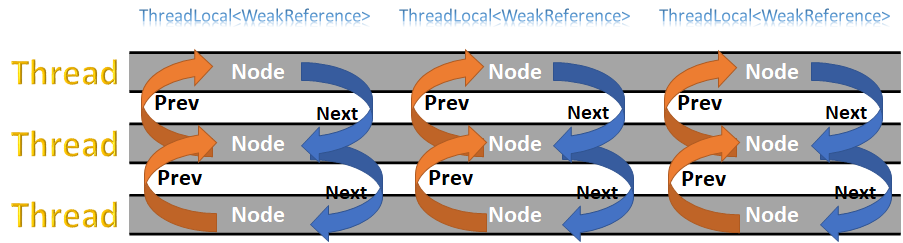

We started to investigate how ThreadLocal<T> works, and we found that it is really interesting. Here is the in memory structure of a ThreadLocal<T>. The internal structure is quite interesting:

Each ThreadLocal<T> instance has an id, which is generated sequentially. For each thread, there is a static thread local array that is allocated to store the values for this thread. The id of the ThreadLocal instance is used to index into this array. The array is for the local thread, but all the values across all threads for a particular ThreadLocal are held together as a doubly linked list.

Note that ThreadLocal has a trackAllValues constructor parameter that does not affect this behavior at all. It simply control whatever you are allowed to call the Values property, not whatever the thread local instance will track all the values.

Due to reasons that I’ll get to later, we created a lot of ThreadLocal instances. That means that we had instance ids in the high tens of thousands. When allocating the thread static array, the ThreadLocal will allocate an array that can hold its id (to the next power of two). So if we have a ThreadLocal with id of 50,062, it will allocate an array with 65,536 elements. That would explain the amount of memory that we saw in the memory dump and is interesting all on its own.

It did not explain the problem with the GC. At least, not yet. As we looked further into this issue, we noticed that this problem only occurred on very large database instances. Ones that had dozens of databases and many indexes. One of the ways that RavenDB ensure isolation of components is to have them each run in a different thread. In those machines,we have had processes that run with thousands of threads, usually in the range of 3000 to 6000.

Combine what we know about ThreadLocal and the number of threads, and you might start to see the problem. Not all ThreadLocals are used in all threads, but when they do, we need to allocate an array that is 65,536 elements in each of the threads. That translate to a total size that is measured in hundreds of millions.

That explains the size, again, but what about the GC speed? I wrote a small isolated test to see what this looks like and I was able to reproduce this on its own. That was really interesting, but I didn’t think that the issue was with ThreadLocal directly. Rather, the problem was with the lattice like structure that we have here. Because of this, I decided to check what it would cost for the GC to run on such a system without dealing with intermediaries.

Here is what this looks like:

On my machine, this code results in GC taking over 200ms each time on a heap that is less than 0.5 GB in size. Given how the GC works, it makes sense. And that means that the accidental lattice structure that we create using ThreadLocal is at the root of our troubles. The question is why do we have so many of them.

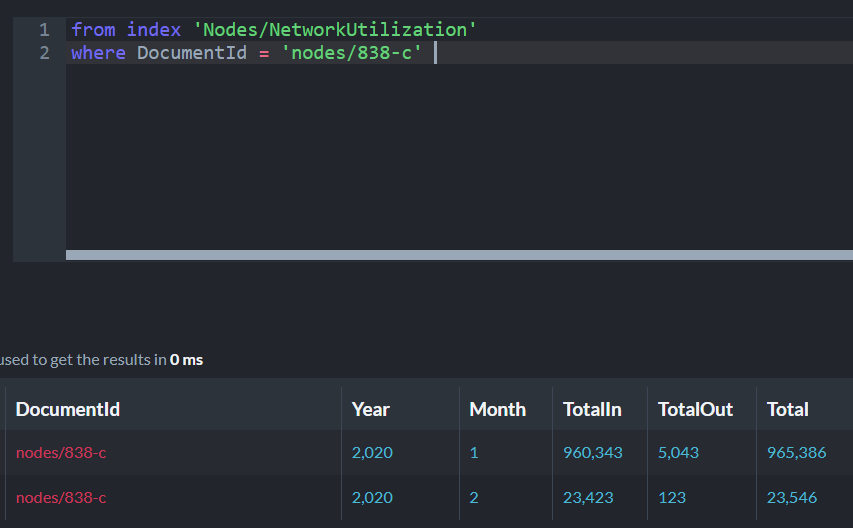

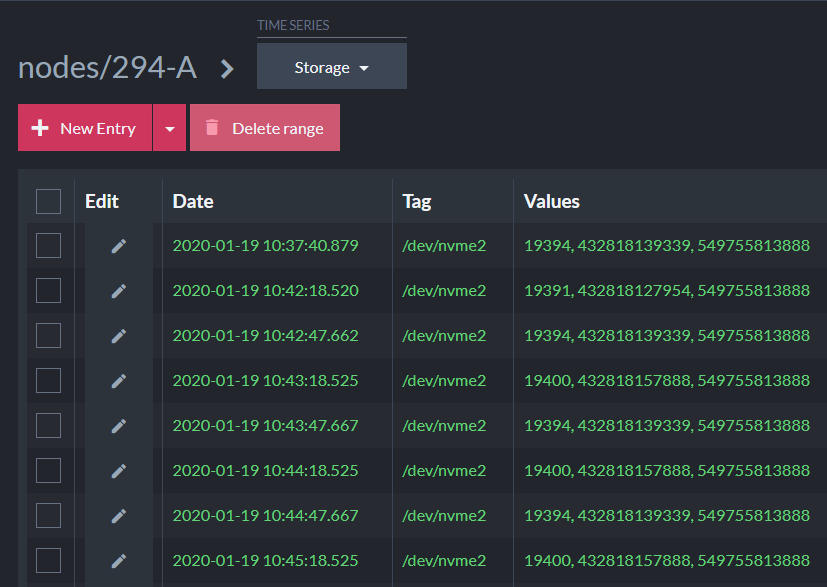

Internally, inside Lucene, there is a ThreadLocal<WeakReference> that is being used when you use a particular feature. This is used once per segment, so it isn’t too bad. However, consider what happens over time in a process that have thousands of indexes and is constantly busy?

Each indexing run will create a segment, and each one of them will have a ThreadLocal instance. At the same time, we also have a lot of threads, which create this exact scenario. The problem slowly accumulate over time. As you have more and more indexing runs, you’ll have more and more such instances and you’ll get to bigger and bigger arrays on each thread. This explains why we are able to see the issue only on instances that have been running for weeks, and then, only on those instances that run a particular set of queries that make use of this feature.

We reported the issue to the .NET team and I’m very curious about what the end result will be here. On our end, we are going to have to revamp how we are handling this type of situation. We have a plan of action already and we’ll see over the next week or so how it plays out in production load.