For most developers, Behavior Driven Development is a synonym for Given-When-Then syntax and the Cucumber-like frameworks that support it. In this session, we will step back from DSLs like Gherkin and show that BDD, in its essence, is a mental approach to writing software based on a specification that describes business scenarios. Additionally, we will use RavenDB to provide an easy and effortless way to implement integration tests based on the BDD approach. Finally, we will show how the BDD approach can be introduced in your projects without the need to learn any new frameworks.

Another code review comment, this time about an error message being raised:

The comment I made here is that this error message lacks a very important detail: how do I fix this? In this case, the maximum number of connections is controlled by a configuration setting, so it is paramount to tell the user that, along with the actual configuration entry to use.
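As a minimal sketch of what such an actionable message could look like (in Python, for illustration only): the setting name `Server.MaxConnections` and the exception type are hypothetical and are not taken from the code under review.

```python
class TooManyConnectionsError(Exception):
    """Raised when the connection limit is reached."""


# Hypothetical configuration key, used for illustration only.
MAX_CONNECTIONS_SETTING = "Server.MaxConnections"


def check_connection_limit(current: int, maximum: int) -> None:
    """Raise an error that says what went wrong and how to fix it."""
    if current >= maximum:
        raise TooManyConnectionsError(
            f"Cannot accept a new connection: {current} connections are open "
            f"and the maximum is {maximum}. To allow more connections, "
            f"increase the '{MAX_CONNECTIONS_SETTING}' configuration setting."
        )
```

The message states the current value, the limit, and the name of the setting that controls it, so whoever reads it knows the next step.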

If we give them all the information, we save the support call that would follow. If the user doesn’t read the error message, the support engineer likely will, and will be able to close the ticket within moments.

I just went over the following pull request, where I found this nugget:

I admittedly have very firm views on the subject of error handling. The difference between good error handling and the merely mediocre can be ten times more lines of code, but also a hundred times less troubleshooting in production.

And a flat out rule that I have is that whenever you use the Exception.Message property, you are discarding valuable information. This is a pretty horrible thing to do.

You should never use the Message property; always use ToString(), so you’ll get the full details. Yes, that is relevant even if you are showing it to the user. If you are showing an exception message to the user, you have let it bubble up all the way, so you might as well give the full details.
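The same loss of information can be shown outside .NET. The sketch below is a Python analogy, not the code from the pull request: `str(exc)` plays the role of `Message`, and `traceback.format_exception` plays the role of `ToString()`. The function names and the file path are made up for the example.

```python
import traceback


def load_settings(path: str) -> dict:
    """Read a settings file, wrapping low-level failures."""
    try:
        with open(path, encoding="utf-8") as f:
            return {"raw": f.read()}
    except OSError as e:
        # Wrap the low-level error; the original is kept as __cause__.
        raise RuntimeError("Could not load settings") from e


def describe(exc: BaseException) -> tuple:
    """Return (message only, full details) for an exception."""
    message_only = str(exc)
    full_details = "".join(
        traceback.format_exception(type(exc), exc, exc.__traceback__)
    )
    return message_only, full_details
```

The message alone says only "Could not load settings". The full details also carry the underlying `FileNotFoundError`, the offending path, and the call stack, which is what you need when troubleshooting.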

I’ll start by saying that this is not something that is planned in any capacity. I ran into this topic recently and decided to dig a little deeper. This post is mostly about the results of my research.

If you run a file sharing system, you are going to run into a problem very early on. Quite a lot of the files that people are storing are shared, that is a good thing. Instead of storing the same file multiple times, you can store it once, and just keep a reference counter. That is how RavenDB internally deals with attachments, for example. Two documents that have the same attachment (content, not the file name, obviously) will only have a single entry inside of the RavenDB database.

When you run a file sharing system, one of the features you really want to offer is the ability to save space in this manner. Another one is not being able to read the users’ files. For many reasons, that is a highly desirable property. For the users, because they can be assured that you aren’t reading their private files. For the file sharing system, because it simplifies operations significantly (if you can’t look at the files’ contents, there is a lot that you don’t need to worry about).

In other words, we want to store the users’ files, but we want to do that in an encrypted manner. It may sound surprising that the file sharing system doesn’t want to know what it is storing, but it actually does simplify things. For example, if you can look into the data, you may be asked (or compelled) to do so. I’m not talking only about something like a government agency doing that, but even about feature requests such as “do a virus scan on my files”. If you literally cannot do that, and it is something that you present as an advantage to the user, that is much nicer to have.

The problem is that if you encrypt the files, you cannot know if they are duplicated. And then you cannot use the very important storage optimization technique of de-duplication. What can you do then?

This is where convergent encryption comes into play. The idea is that we’ll use an encryption system that will give us the same output for the same input even when using different keys. To start with, that sounds like a tall order, no?

But it turns out that it is quite easy to do. Consider the following symmetric key operations:

One of the key aspects of modern cryptography is the use of a nonce, which ensures that for the same message and key, we’ll always get a different ciphertext. In this case, we need to go the other way around: ensure that for different keys, the same content will give us the same output. Here is how we can utilize the above primitives for this purpose:

In other words, we have a two step process. First, we compute the cryptographic hash of the message, and use that as the key to encrypt the data. We use a static nonce for this part, more on that later. We then take the hash of the file and encrypt that normally, with the secret key of the user and a random nonce. We can then push both the ciphertext and the nonce + encrypted key to the file sharing service. Two users, using different keys, will always generate the same output for the same input in this model. How is that?

Both users will compute the same cryptographic hash for the same file, of course. Using a static nonce completes the cycle and ensures that we’re basically running the same operation. We can then push both the encrypted file and our encrypted key for that to the file sharing system. The file sharing system can then de-duplicate the encrypted blob directly. Since this is the identical operation, we can safely do that. We do need to keep, for each user, the key to open that file, encrypted with the user’s key. But that is a very small value, so likely not an issue.
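The two-step process can be sketched in Python as follows. This is an illustration under loud assumptions: the "cipher" here is a toy keystream built from SHA-256, standing in for the real symmetric primitives, and it provides no authentication. The function names are made up for the example, and none of this should be used to protect real data.

```python
import hashlib
import os


def keystream_xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || nonce || counter).

    Illustration only. A real system would use a vetted cipher instead.
    """
    out = bytearray()
    for block_start in range(0, len(data), 32):
        counter = (block_start // 32).to_bytes(8, "big")
        pad = hashlib.sha256(key + nonce + counter).digest()
        chunk = data[block_start:block_start + 32]
        out.extend(b ^ p for b, p in zip(chunk, pad))
    return bytes(out)


# Zeroed nonce for the content encryption (the key is derived from the content).
STATIC_NONCE = bytes(16)


def convergent_encrypt(user_key: bytes, message: bytes):
    """Return (ciphertext, random nonce, wrapped content key)."""
    # Step 1: the hash of the message is the key that encrypts the message.
    content_key = hashlib.sha256(message).digest()
    ciphertext = keystream_xor(content_key, STATIC_NONCE, message)
    # Step 2: encrypt the content key normally, with the user's secret key.
    nonce = os.urandom(16)
    wrapped_key = keystream_xor(user_key, nonce, content_key)
    return ciphertext, nonce, wrapped_key


def convergent_decrypt(user_key, ciphertext, nonce, wrapped_key) -> bytes:
    """Unwrap the content key with the user's key, then decrypt the blob."""
    content_key = keystream_xor(user_key, nonce, wrapped_key)
    return keystream_xor(content_key, STATIC_NONCE, ciphertext)
```

Two users with different `user_key` values get byte-identical `ciphertext` for the same message, which is what lets the server de-duplicate the blob. Only the small `nonce` and `wrapped_key` pair differs per user.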

Now, what about this static nonce? The whole point of a nonce is that it is a value that you use once. How can we use a static value here safely? The problem that nonce is meant to solve is that with most ciphers, if you XOR the output of two cipher texts, you’ll get the difference between them. If they were encrypted using the same key and nonce, you’ll get the result of XOR between their plain texts. That can have catastrophic impact on the security of the system. To get anywhere here, you need to encrypt two different messages with the same key and nonce.
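To see why reusing a key and nonce is dangerous, here is a small Python sketch. The keystream function is a toy stand-in built from SHA-256 (an assumption for the example, not a real cipher), but the cancellation it shows applies to any cipher that XORs a keystream with the plaintext.

```python
import hashlib


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings of equal length."""
    return bytes(x ^ y for x, y in zip(a, b))


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream derived from SHA-256; stands in for a real stream cipher."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return out[:length]


key, nonce = b"k" * 32, bytes(16)
p1 = b"attack at dawn!!"
p2 = b"retreat at noon!"

# Two different messages encrypted with the SAME key and nonce.
c1 = xor_bytes(p1, keystream(key, nonce, len(p1)))
c2 = xor_bytes(p2, keystream(key, nonce, len(p2)))

# The keystream cancels out, leaking the XOR of the two plaintexts.
leak = xor_bytes(c1, c2)
```

Anyone who knows (or can guess) one of the plaintexts can XOR it with `leak` and recover the other, without ever learning the key.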

In this case, however, that cannot happen. Since we use cryptographic hash of the content as the key, we know that any change in the message will ensure that we have a different key. That means that we never reuse the key, so there is no real point in using a nonce at all. Given that the cryptographic operation requires it, we can just pass a zeroed nonce and not think about it further.

This is a very attractive proposition, since it can amount to massive space savings. File sharing isn’t the only scenario where this is attractive. Consider the case of a messaging application, where you want to forward messages from one user to another. Memes and other viral content are a common scenario here. You can avoid having to re-upload the file many times, because even in an end to end encryption model, we can still avoid sharing the file contents with the storage host.

However, this leads to one of the serious issues that we have to cover for convergent encryption. For the same input, you’ll get the same output. That means that if an adversary knows the source file, they can tell if a user has that file. In the context of a messaging application, that can spell trouble. Consider the following image, which is banned by the Big Meat industry:

Even with end to end encryption, if you use convergent encryption for media files, you can tell that a particular piece of content is accessed by a user. If this is a unique file, the server can’t really tell what is inside it. However, if this is a file that has a known source, we can detect that the user is looking at a picture of salads and immediately snitch to Big Meat.

This is called a confirmation of file attack, and it is the most obvious problem for this scenario. The other issue is that, by using convergent encryption, you may allow an adversary to guess at unknown values in a file whose structure is otherwise known.

Let’s consider the following scenario. I have a service where users upload their data using convergent encryption. Given that many users may share the same file, we allow any user to download a file using:

GET /file?id=71e12496e9efe0c7e31332533cb53abf1a3677f2a802ef0c555b08ba6b8a0f9f

Now, let’s assume that I know what typical files are stored in the service. For example, something like this:

Looking at this form, there are quite a few variables that you can plug here, right? However, we can generate options for all of those quite easily, and encryption is cheap. So we can speculate on the possible values. We can then run the convergent encryption on each possibility, then fetch that from the server. If we have a match, we figured out the remaining information.
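A rough Python sketch of that guessing loop follows. Everything specific in it is an assumption for illustration: the form template, the field names, and `stored_id`, which stands in for "run the convergent encryption and derive the ID the server stores the blob under". The point is only that the ID depends on nothing but the plaintext, so an attacker can compute it offline.

```python
import hashlib
from itertools import product


def stored_id(plaintext: bytes) -> str:
    """Stand-in for 'convergently encrypt, then derive the storage ID'.

    Any deterministic function of the plaintext alone has the same weakness.
    """
    return hashlib.sha256(b"convergent:" + plaintext).hexdigest()


# Hypothetical known form with two unknown fields.
TEMPLATE = "Employee: {name}\nSalary: {salary}\n"


def guess_contents(known_ids, names, salaries):
    """Try every candidate and report those the server already stores."""
    hits = []
    for name, salary in product(names, salaries):
        candidate = TEMPLATE.format(name=name, salary=salary).encode()
        if stored_id(candidate) in known_ids:
            hits.append((name, salary))
    return hits
```

In a real attack, the membership check against `known_ids` would be a request to the download endpoint. A hit reveals the values of the fields that were supposed to be secret.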

Consider this another aspect of password cracking, using a set of algorithms that are quite explicitly meant to be highly efficient, so they lend themselves to offline work.

That is enough on the topic, I believe. I don’t have any plans of doing something with that, but it was interesting to figure out. I actually started this looking at this feature in WhatsApp:

It seems that this is a client-side enforced policy, rather than something that is handled via the protocol itself. I initially thought that this was done via convergent encryption, but it looks like it is just a counter in the message; the client side then shows the warning and applies the limits.

Recently we had to tackle a seriously strange bug. A customer reported that under a specific set of circumstances, when loading the database with many concurrent requests, they would get an optimistic concurrency violation from RavenDB.

That is the sort of error that we look at and go: “Well, that is what you expect it to do, no?”

The customer code looked something like this:

As you can imagine, this code is expected to deal with concurrency errors, since it is explicitly meant to run from multiple threads (and processes) all at once.

However, in the scenario that the user was running, there were two separate types of workers. One type was accessing the same set of locks, and there you might reasonably expect to get a concurrency error. The second type ran in the main thread only, and there should be no contention on that at all. Yet the user was getting a concurrency error on that particular lock, which shouldn’t happen.

Looking at the logs, it was even more confusing. Leaving aside that we could only reproduce this issue when we had a high contention rate, we saw some really strange details. Somehow, we had a write to the document coming out of nowhere?

It took a while, but we finally figured out what the root cause was. RavenDB internally does not execute transactions independently. That would be far too costly. Instead, we use a process called transaction merging.

Here is what this looks like:

The idea is that we need to write to the disk to commit the transaction. That is an expensive operation. By merging multiple concurrent transactions in this manner, we are able to significantly reduce the number of disk writes, increasing the overall speed of the system.

That works great, until you have an error. The most common error, of course, is a concurrency error. At this point, the entire merged transaction is rolled back and we will execute each transaction independently. In this manner, we suffer a (small) performance hit when running into such an error, but the correctness of the system is preserved.
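The merge-then-fall-back behavior described above can be sketched in Python. This is a simplified model under stated assumptions, not RavenDB’s implementation: the "database" is a plain dict with immutable values, a transaction is a callable that mutates it and may raise, and the names `run_merged` and `ConcurrencyError` are made up for the example.

```python
class ConcurrencyError(Exception):
    """Raised by a transaction whose optimistic concurrency check fails."""


def run_merged(store: dict, transactions) -> list:
    """Run transactions as one merged unit; on failure, retry them one by one.

    Returns one result per transaction: None on success, or the exception.
    """
    snapshot = dict(store)
    try:
        for tx in transactions:
            tx(store)
        # Happy path: a single "commit" (one disk write) covers the whole batch.
        return [None] * len(transactions)
    except ConcurrencyError:
        # Roll back the entire merged transaction.
        store.clear()
        store.update(snapshot)

    # Slow path: execute each transaction independently on the clean state.
    results = []
    for tx in transactions:
        before = dict(store)
        try:
            tx(store)
            results.append(None)
        except ConcurrencyError as e:
            store.clear()
            store.update(before)
            results.append(e)
    return results
```

Note that on the slow path every transaction runs a second time. Any state a transaction captured during the first (rolled back) attempt is stale at that point, which is the kind of trap the rest of this post is about.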

The problem in this case would only happen when we had enough load to start doing transaction merging. Furthermore, it would only happen if the actual failure would happen on the second transaction in the merged sequence, not the first one.

Internally, each transaction does something like this:

Remember that aside from merging transactions, a single transaction in RavenDB can also have multiple operations. Do you see the bug here?

The problem was that we successfully executed the transaction batch, but the next one in the same merged transaction failed. RavenDB did the right thing and executed the transaction batch again independently (this is safe to do, since we rolled back the previous transaction and are operating on a clean slate).

The problem is that the transaction batch itself, on the other hand, had its own state. In this case, the problem was that when we replied back to the caller, we used the old state, the one that came from the old transaction that was rolled back.

The only state we return to the user is the change vector, which is used for concurrency checks. What happened was that we got the right result, but with the old change vector. Everything worked, and the actual state of the database was fine. The only way to discover this issue is if you continue to make modifications to the same document in the same session. That is a rare scenario, since you typically discard the session after you save its changes.

In any other scenario, you’ll re-load the document from the server, which will give you the right change vector and make everything work.

Like all such bugs, when we look back at it, this is pretty obvious, but to get to this point was quite a long road.

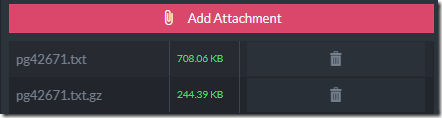

Following my previous post, which mentioned that you can save significantly on disk space if you store a plain text attachment using gzip, we got a feature request:

Perhaps in future attachments could have built-in compression as well?

The answer to that is no, but I thought that it is worth a post to explain why not.

Let’s consider the typical types of attachments that you’ll store in RavenDB. Based on experience, we usually see:

- PDF files

- Word / Excel / Power Point

- Images (JPEG, PNG, GIF, etc)

- Videos

- Designs (floor plans, CAD / DWG, etc)

- Text files

Aside from the text files, pretty much all the data you’ll store as an attachment is already compressed. In fact, you’ll be hard pressed today to find any file format that does not already have built-in compression.

Compressing already compressed data is… suboptimal. It will not usually lead to significant space savings and can actually make the file size larger. It also burns CPU cycles unnecessarily.
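A quick way to see this for yourself is the Python sketch below. It uses pseudo-random bytes as a stand-in for an already compressed file (an assumption for the example: well-compressed data looks statistically close to random) and compares that against repetitive plain text.

```python
import random
import zlib

# Highly repetitive plain text: the best case for compression.
text = b"All happy families are alike; each unhappy family is unhappy in its own way.\n" * 2000

# Pseudo-random bytes stand in for an already compressed file (JPEG, ZIP, ...).
n = 100_000
already_compressed_like = random.Random(42).getrandbits(8 * n).to_bytes(n, "big")


def ratio(data: bytes) -> float:
    """Compressed size divided by original size (lower is better)."""
    return len(zlib.compress(data, 9)) / len(data)


text_ratio = ratio(text)
random_ratio = ratio(already_compressed_like)
```

The text shrinks to a small fraction of its size, while the random-looking input stays at roughly its original size: the CPU time is spent for essentially no gain.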

It is better to shift the responsibility to the users in this case, since they have a lot more information about what they actually put into RavenDB and won’t have to guess.

In distributed systems, the term Byzantine fault tolerance refers to working in an environment where the other nodes in the system are going to violate the invariants held by the system. Sometimes, that is because of a bug, sometimes because of a hardware issue (Figure 11) and sometimes that is a malicious action.

A user called us to let us know about a serious issue: they have 100 documents in their database, but the index reports that there are 105 documents indexed. That was… puzzling. None of the avenues of investigation we tried helped us. There wasn’t any fanout on the index, for example.

That was… strange.

We looked at the index in more detail and we noticed something really strange. There were document IDs that were in the index that weren’t in the database, and they were all at the end. So we had something like:

- users/1 – users/100 – in the database

- users/101 – users/105 – not in the database (but present in the index)

What was even stranger was that the values for the documents that were already in the index didn’t match the values from the documents. For that matter, neither did the last indexed etag, which is how RavenDB knows what documents to index.

Overall, this is a really strange thing, and none of that is expected to happen. We asked the user for more details, and it turns out that they don’t have much. The error was reported in the field, and as they described their deployment scenario, we were able to figure out what was going on.

In certain cases, their end users will want to “reset” the system. The manner in which they do that is to shut down their application and then delete the RavenDB folder. Since all their state is in RavenDB, that brings them back up in a clean state. Everything works, and this is a documented manner in which they are working.

However, the instruction they give is simply “Delete the database files”, and the actual task is carried out by the end user.

A RavenDB database internally is composed of a few directories, for data and indexes. What would happen in the case where you deleted just the database file, but kept the index files? In that case, RavenDB will just accept that this is the case (soft delete is a thing, so we have to accept that) and open the index file. That index file, however, came from a different database, so a lot of invariants are broken. I’m actually surprised that it took this long to find out that this is problematic, to be honest.

We explained the issue, and then we spent some time ensuring that this will never be an invisible error. Instead, we will validate that the index is coming from the right source, or error explicitly. This is one bug that we won’t have to hunt ever again.
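One way such a validation could work is sketched below in Python. This is not how RavenDB does it (the post doesn’t say); the file names, the JSON headers, and the idea of stamping the index with a database ID are all assumptions made for the example.

```python
import json
import uuid
from pathlib import Path


class IndexMismatchError(Exception):
    """The index on disk was built from a different database."""


def create_database(db_dir: Path) -> str:
    """Create a database directory stamped with a fresh unique ID."""
    db_dir.mkdir(parents=True, exist_ok=True)
    database_id = str(uuid.uuid4())
    (db_dir / "db.json").write_text(json.dumps({"database_id": database_id}))
    return database_id


def create_index(index_dir: Path, database_id: str) -> None:
    """Create an index directory that remembers which database it belongs to."""
    index_dir.mkdir(parents=True, exist_ok=True)
    header = {"source_database_id": database_id}
    (index_dir / "index.json").write_text(json.dumps(header))


def open_index(index_dir: Path, database_id: str) -> dict:
    """Open an index, failing loudly if it came from another database."""
    header = json.loads((index_dir / "index.json").read_text())
    if header["source_database_id"] != database_id:
        raise IndexMismatchError(
            f"Index at {index_dir} was built for database "
            f"{header['source_database_id']}, but the current database is "
            f"{database_id}. Delete the index directory so it can be rebuilt."
        )
    return header
```

With a check like this, deleting only the database files and recreating the database produces an explicit error on startup, instead of an index that silently disagrees with the data.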

A user called us with a strange bug report. He said that the SQL ETL process inside of RavenDB was behaving badly. It would write the data from the RavenDB server to the MySQL database, but then it would immediately delete it.

From the MySQL logs, the user showed:

2021-10-12 13:04:18 UTC:20.52.47.2(65396):root@ravendb:[1304]:LOG: execute <unnamed>: INSERT INTO orders ("id") VALUES ('Tab/57708-A')

2021-10-12 13:04:19 UTC:20.52.47.2(65396):root@ravendb:[1304]:LOG: execute <unnamed>: DELETE FROM orders WHERE "id" IN ('Tab/57708-A')

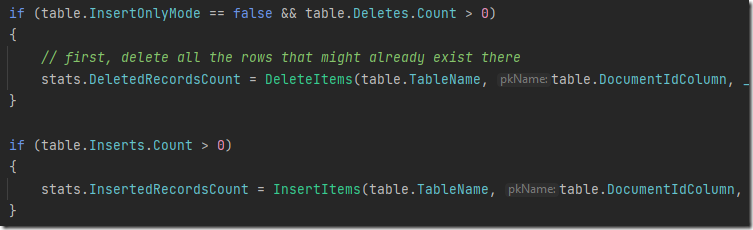

As you can imagine, that isn’t an ideal scenario for ETL processes. It is also something that should absolutely not happen. RavenDB issues the delete before the insert, obviously. In fact, for your viewing pleasure, here is the relevant piece of code:

It doesn’t get any clearer than that, right? We issue any deletes we have, then we send the inserts. But the user insisted that they are seeing this behavior, and I tend to trust them. But we couldn’t reproduce the issue, and the code in question dates to 2017, so I was pretty certain it is correct.

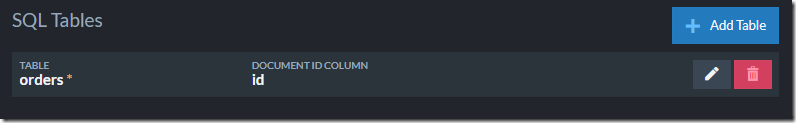

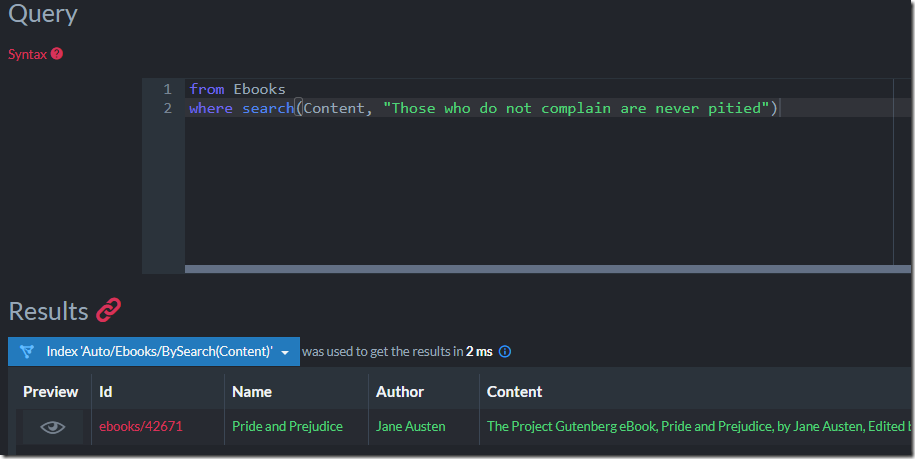

Then I noticed the user’s configuration. To define a SQL ETL process in RavenDB, you need to define what tables we’ll be writing to as well as deciding what data to send to those tables. Here is what this looked like:

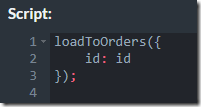

And here is the script:

Do you see the problem? It might be better to use an overload, which makes it clearer:

In the table listing, we had an “orders” table, but the script sent the data to the “Orders” table.

RavenDB is a case insensitive database, but in this case, what happened behind the scenes is that the code used a case sensitive dictionary to keep track of the tables that we are working with. That meant that what we did was roughly:

And basically, the changes_by_table dictionary had two tables in it. One from the table definitions and one from the script. When we validate that the tables from the script are fine, we do that using case insensitive comparison, so that passed properly.

To make it worse, the order of items in a dictionary is not predictable. If the iteration order had been the other way around, everything would have appeared to work just fine.
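The shape of the bug can be reproduced in a few lines of Python. This is a sketch of the idea only, not the RavenDB code: `group_changes` and its parameters are hypothetical, and unlike the dictionary in the original code, a Python `dict` iterates in insertion order.

```python
def group_changes(declared_tables, script_writes, normalize=lambda name: name):
    """Group rows by table name.

    declared_tables: table names from the ETL table definitions.
    script_writes: (table_name, row) pairs produced by the script.
    normalize: how table names are turned into dictionary keys.
    """
    changes_by_table = {normalize(table): [] for table in declared_tables}
    for table, row in script_writes:
        changes_by_table.setdefault(normalize(table), []).append(row)
    return changes_by_table


writes = [("Orders", {"id": "Tab/57708-A"})]

# Case sensitive keys: "orders" and "Orders" become two separate tables.
buggy = group_changes(["orders"], writes)

# Case insensitive keys: both names map to the same entry.
fixed = group_changes(["orders"], writes, normalize=str.casefold)
```

In the buggy version, the work is split across two entries that refer to the same SQL table, so whatever is done for one entry can undo what was done for the other.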

We fixed the bug, but I found it really interesting that three separate people (very experienced with the codebase) had a look and couldn’t figure out how this bug can happen. It wasn’t in the code, it was in what wasn’t there.

A scenario came up from a user that was quite interesting to explore.

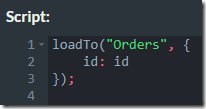

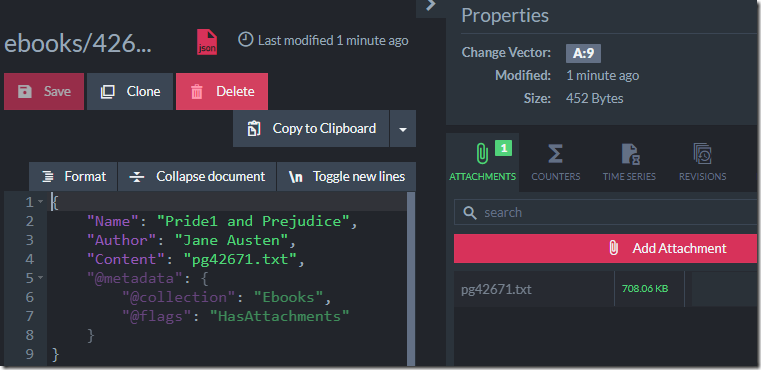

Let us assume that we want to put the Gutenberg Project inside of RavenDB. An initial attempt at doing that would look like this:

I’m skipping a lot of the details, but the most important field here is the Content field. That contains the actual text of the book.

On last count, however, the size of the book was around 708KB. When storing that as a single field inside of RavenDB, on the other hand, RavenDB will notice that this is a long field and compress it. Here is what this looks like:

The 738.55 KB is the size of the actual JSON, the 674.11 KB is the size after a quick compression cycle, and the 312KB is the actual size that this takes on disk. RavenDB is actively trying to help us.

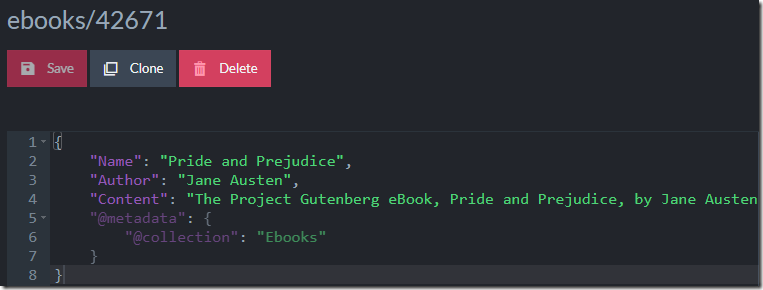

But let’s take the next step: we want to be able to query the content of the book using full text search. Here is what this will look like:

Everything works, which is great. But what is going on behind the scenes?

Even a single text field that is large (100s of KB or many MB) puts a unique strain on RavenDB. We need to manage that as a single unit, and it significantly bloats the size of the parent document and makes it more expensive to work with.

This is interesting because usually, we don’t actually work all that much with the field in question. In the case of the Pride and Prejudice book, the content is immutable and not really relevant for the day to day work with the document. We are better off moving this elsewhere.

An attachment is a natural way to handle this. We can move the content of the book to an attachment. In this way, the text is retained, we can still work and process that, but it is sitting on the side, not making our life harder on each interaction with the document.

Here is what this looks like, note that the size of the document is tiny. Operations on that size would be much faster than a multi MB document:

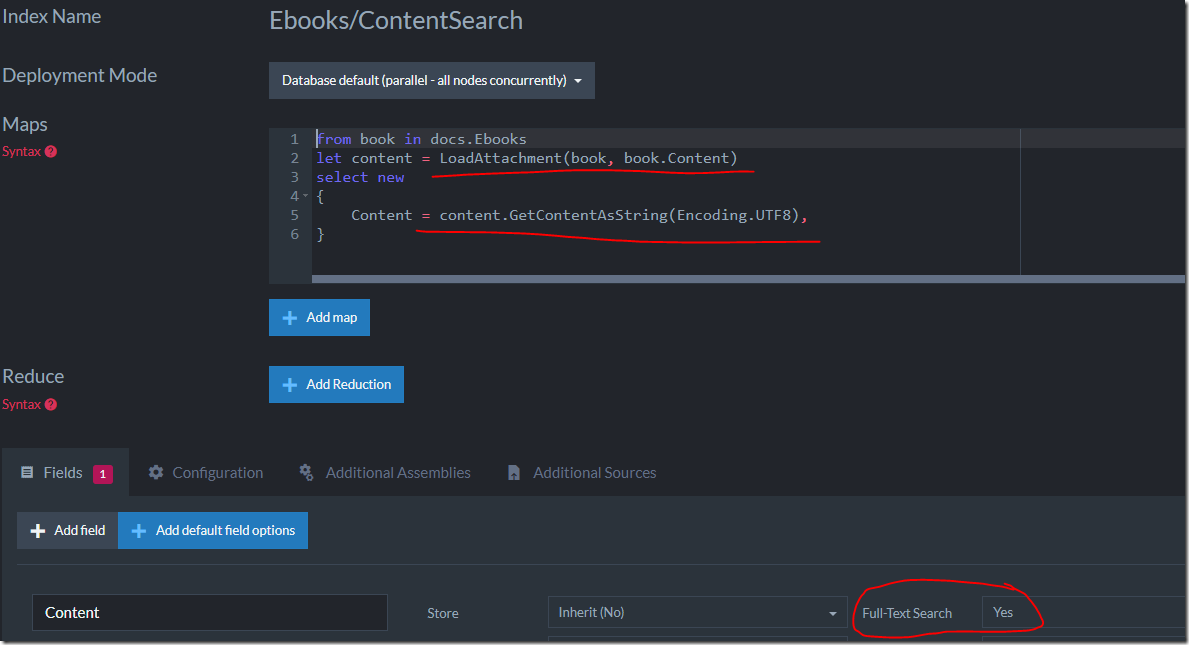

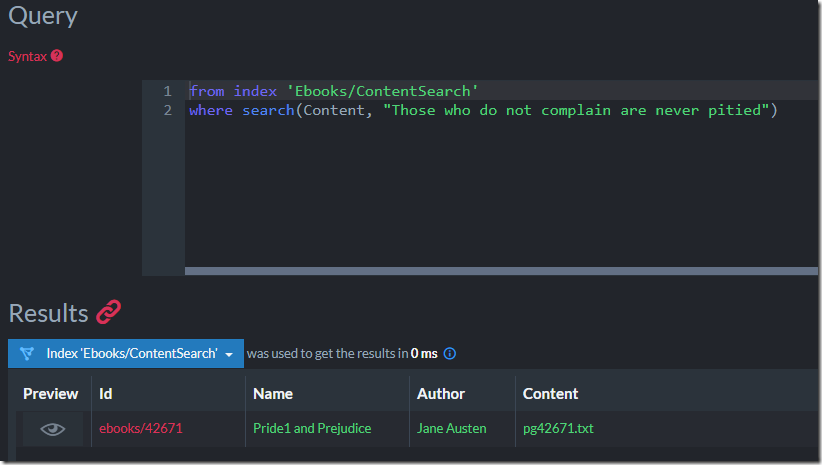

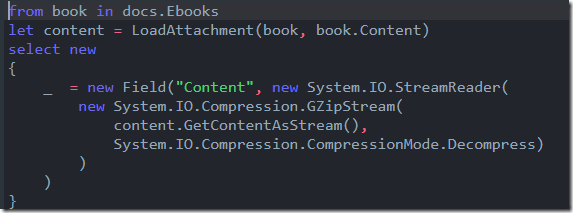

Of course, there is a disadvantage here: how can we index the book’s contents now? We still want that. RavenDB supports that scenario explicitly, so let’s define an index to do just that:

You can see that I’m loading the content attachment and then accessing its content as string, using UTF8 as the encoding mechanism. I tell RavenDB to use full text search on this field, and I’m off to the races.

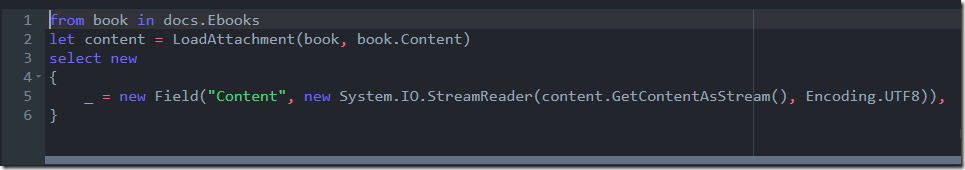

Of course, we could stop here, but why? We can do even better. When working with large text fields, an index such as the one above will force us to materialize the entire field as a single value. For very large values, that can put a lot of pressure in terms of memory usage.

But RavenDB supports more than that. Instead of processing a very large string in one shot, we can do that in an incremental fashion, avoiding big value materialization and the memory pressure associated with that. Here is what you can write:

That tells RavenDB that we should process the field in a streaming fashion.

Here is why it matters:

[Comparison images: Value Materialized | Streaming Value]

When we are working on a document that has a < 1 MB attachment, it probably doesn’t matter all that much (although using 25% of the memory is nice), but it matters a lot more when you are working with larger texts.
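The difference between the two approaches can be illustrated outside of RavenDB with a short Python sketch. It is a conceptual model only (the tokenizer, function names, and chunk size are assumptions for the example), not what the index does internally.

```python
import io
import re

WORD = re.compile(r"\w+")


def tokens_materialized(stream) -> list:
    """Read the whole value into memory, then tokenize it."""
    text = stream.read().decode("utf-8")  # the entire value lives in memory
    return WORD.findall(text.lower())


def tokens_streaming(stream, chunk_size: int = 4096):
    """Tokenize incrementally, holding only one small chunk at a time."""
    reader = io.TextIOWrapper(stream, encoding="utf-8")
    tail = ""  # a word that may continue in the next chunk
    while True:
        chunk = reader.read(chunk_size)
        if not chunk:
            break
        text = tail + chunk
        # Hold back the trailing run of word characters: it may be incomplete.
        split_at = re.search(r"\w*\Z", text).start()
        tail = text[split_at:]
        for word in WORD.findall(text[:split_at].lower()):
            yield word
    if tail:
        yield tail.lower()
```

Both functions produce the same terms. The streaming version never holds more than a chunk (plus at most one partial word) in memory, regardless of how large the attachment is.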

We can take this one step further still! Instead of storing the attachment text as is, we can compress it, like so:

And then in the index, we’ll decompress on the fly:

Note that throughout all of that, the queries that you send are exactly the same, we are just taking 20% of the disk space and 25% of the memory that we used to.
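The compress-on-write, decompress-on-read idea looks roughly like this in Python. It is a sketch using the standard `gzip` module, not the RavenDB client or index code; the function names are made up for the example.

```python
import gzip
import io


def compress_attachment(text: str) -> bytes:
    """Compress the book text before storing it as an attachment."""
    return gzip.compress(text.encode("utf-8"))


def iter_lines_decompressed(blob: bytes):
    """Decompress on the fly, yielding one line of text at a time."""
    with gzip.open(io.BytesIO(blob), mode="rt", encoding="utf-8") as reader:
        for line in reader:
            yield line
```

The reader decompresses incrementally as lines are consumed, so this combines with the streaming approach: neither the compressed nor the decompressed text has to be materialized in full by the consumer.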

After spending so much time building my own protocol, I decided to circle back a bit, go back to TLS itself, and see if I can get the same thing from it that I built on my own. As a reminder, here is what we achieved:

Trust established between nodes in the system via a back channel, not Public Key Infrastructure. For example, I can have:

On the client side, I can define something like this:

Server=northwind.database.local:9222;Database=Orders;Server Key=6HvG2FFNFIifEjaAfryurGtr+ucaNgHfSSfgQUi5MHM=;Client Secret Key=daZBu+vbufb6qF+RcfqpXaYwMoVajbzHic4L0ruIrcw=

Can we achieve this using TLS? At first glance, that doesn’t seem to be possible. After all, TLS requires certificates, but we don’t have to give up just yet. One of the (new) options for certificates is Ed25519, which is a key pair scheme that uses 256-bit keys. That is also similar to what I have used in my previous posts, behind the covers. So the plan is to do the following:

- Generate key pairs using Ed25519 as before.

- Distribute the knowledge of the public keys as before.

- Generate a certificate using those keys.

- During the TLS handshake, trust only the keys that we were explicitly told to trust, disabling any PKI checks.

That sounds reasonable, right? Except that I failed.

To be rather more exact, I couldn’t generate a valid X509 certificate from Ed25519 key pair. Using .NET, you can use the CertificateRequest class to generate certificates, but it only supports RSA and ECDsa keys. Safe sizes for those types are probably:

- RSA – 4096 bits (2048 bits might also be acceptable) – key size on disk: 2,348 bytes.

- ECDsa – 521 bits - key size on disk: 223 bytes.

The difference between those and the 32-byte key for Ed25519 is pretty big. It isn’t much in the grand scheme of things, for sure, but it matters. The key issue (pun intended) is that this is large enough to make it awkward to use the value directly. Consider the connection string I listed above. The keys we use here are small enough that we can just write them inline (the simplest and most obvious thing to do). The keys for either of the more commonly used RSA and ECDsa are too big for that.

Here is an ECDsa key, for example:

MIHcAgEBBEIBuF5HGV5342+1zk1/Xus4GjDx+FR rbOPrC0Q+ou5r5hz/49w9rg4l6cvz0srmlS4/Ysg H/6xa0PYKnpit02assuGgBwYFK4EEACOhgYkDgYY ABABaxs8Ur5xcIHKMuIA7oedANhY/UpHc3KX+SKc K+NIFue8WZ3YRvh1TufrUB27rzgBR6RZrEtv6yuj 2T2PtQa93ygF761r82woUKai7koACQZYzuJaGYbG dL+DQQApory0agJ140T3kbT4LJPRaUrkaZDZnpLA oNdMkUIYTG2EYmsjkTg==

And here is an RSA key:

MIIJKAIBAAKCAgEApkGWJc+Ir0Pxpk6affFIrcrRZgI8hL6yjXJyFNORJUrgnQUw i/6jAZc1UrAp690H5PLZxoq+HdHVN0/fIY5asBnj0QCV6A9LRtd3OgPNWvJtgEKw GCa0QFofKk/MTjPimUKiVHT+XgZTnTclzBP3aSZdsROUpmHs2h4eS9cRNoEnrC1u YUzaGK4OeQNLCNi1LyB6I33697+dNLVPoMJgfDnoDBV12KtpB6/pLjigYgIMwFx/ Qyx9DhnREXYst/CLQs8S/dmF+opvghhdhiUUOUwqGA/mIIbwtnhMQFKWCQXEk7km 5hNg/fyv/qwqvTkqQTZkJdj0/syPNhqnZ9RurFPkiOwPzde8I/QwOkEoOXVMboh4 Ji3Y6wwEkWSwY/9rzUK2799lzTmZlvUu2ZxNZfKxQ84vmPUCvP288KXOCU4FxIUX lujBu7aXUORtQE9oZxBSxqCSqmCEb7jGwR3JOpFlUZymK7W0jbY4rmfZL8vcDYdG r0msuXD+ggVjYzpHI7EH5MtQXYJZ2aKan5ZpSL/Lb0HsjkDLrsvMi+72FcwXH+5P Q1E30uxs5y9xOTSqff9T9x6KPAOwIpmrv4Bc3J0NgEgWiKxG9nM1+f8FkKlCRino rrF9ZrC+/l/vc67xye+Pr1tLvEFT5ARu/nR1JH/Lv/CsAU9y51wOPqD6dQUCAwEA AQKCAgBJseTWWcnitqFU8J62mM94ieCL8Q3WYZlP7Zz38lfySeCKeZRtWa/zsozm XEQY0t7+807pHPLs0OhMHlFv1GQKj09Wg4XvWWgqvLOSucC7QZ6cLfNUoUNhCxGp dbnAKGuXN9wwx7NBBljl5V4Ruf//UgxRw7YuklWk0ZjoUSrGGDX3siOtaZ17Nxwf NAB8qWKWwzSgquUmEH+kr4HeZorSRfC/+ntEUaa6y5T28g7Vosb4NYgLxJqiN3te 3B0yY6O3N4bZkyQ6TEblSdua7LCsPUCjbdi6LlZg664RDQqIcVATkwzVC14A95Mj tjkzqzU5ttxpkmP21cHdX6847QcpERgQ7NzAbjrU5UH8aBOsetaZo/1yDr5U13ah YcAq9XX6tLeAA0rUsnXKAWBQswtWIU0jXBuRRSE7xDXv+82SWEoPqZMSAv77p+uc AeogN+zzZPPet/AOERKLcGC9WoC/HT7q/H3zFAsRPoKY6qMfLFntdosc0lmRxvHv b9NXBzKdDuOiUXhdRMhL5Yld8ivvHuwRnPfcZycplSFrA9E5xo/S3RQj+Re9L0yR 8tNzjl+lcgtk8Q0CSJl6eW2Fjja5ZrvDD8qL97+WFqHR7LTTqZ7TmiT7u1MXW1Il wTuccWCQ85BzxpRbyzPXLdsxMgPCmjicX/23+srOXAk2z42bOQKCAQEAzM1Mocnd w0uoETHZH0VX29WaKVqUAecGtrj+YNujzmjLy2FPW10njgBfZgkVQETjFxUS9LBZ xv/p6fCio3NgXh3q7O/kWLxojuR8JB7n4vxoKGBwinwzi1DHp37gzjp/gGdr4mG9 8b7UeFJY8ZPz0EoXcPr3TL+69vOoLieti/Ou9W7HbpDHXYLKclFkJ0d/0AtDNaM7 kCNvI7HgC5JvCCOdGatmbB09kniQjtvE4Wh4vOg/TtH1KoKGXbC8JnjHNRjJtgqU 1mhbq36Eru8iOVME9jyHAkSPqphqeayEUdeP3C1Bc2xmrlxCQALZrAfH37ZWcf44 UuOO5TMnf5HTLwKCAQEAz9F59/xlVDHaaFpHK6ZRTQQWh6AVBDKUG2KDqRFAGQik 6YqQwJFGSo1Z+FjXzidGEHkqH6KyGtSxS6dTgqqfTC96P1rdrBab5vgdXpfSa/0S Qke2sH3eZ1vWJe95AD7AuVfsN/6IXIBHP5fWjXthGuo6U3vkkNjdjJGNxjfuMuug 
SbxjjVV6kZI6gwX2gfTQDKUT+yRjEnqGAyCcFeZXwWGryF1IseOFaNB2ATVKSqn9 oXI7AaI3ZRX3SyfOfyo3TaZEXabS1tfEg4JwIGNpx8WvRxb/X7WZi46be8u0ya4L BDJ6ZIOBf7lpvaI1Dr3dzCuPqjGQ3V/xPwGy5D8+CwKCAQEAuFVUUw6pjn0LIabX QQEd6hzgq7X+H5Q8A7yQIMewMTk7rKvCTH6U+oe1VdZ5DSazqvPp4tjThXyTol9X U3ymUS/mYiotQf0asvpODgjPOAttCGJ9CPhvQEaN3WEioBwg5IaxoMnOt8bF4CJm MdG0ElaNsMACVE8BzgJS7nACEURcxkVWNVsURkNRSgGd/oipLqzkamOoWby67MrN 2DyNuSqs3QzbnBXZdHsVya9fDm8EtSroyF3Lp95hZ/SJ9KqiylSsQTBW9IBrefjf HcDY8fWaMrMZ5V2mXarfsvInCq7VqhwFnAkGhos9ifXGy8MZEG9CcUmakmiFFiCr vXOYOwKCAQADL9Yr/F3dbapIwWGoBLPod3CVAdpwpwnoZZlZRV9zQtOslShlG5U1 XXeMvGgKzEVhyUnhFFCg4rQZUeaQ8Wbh9zRrtkwB8JLRduqUYcWjTE00YP8nM7bu ZNUi3cpAO7Ye4X9I2Ilkyb7N9dkfcE3r6L2ePB8kLX8wQacn7AGmHEDoAJCSQUZQ 5yooijXehk+OchWdW1B9nw1hDOX33AFqgMHun6eWusN3+QJmQFf0TykJicPn4YHx 9eVF7MVY49/XO/5+ZSmEi+iCj8SCaqPboWdvsqWV5SYGotg1jMkn8phOpyuDURTy TXiWpN8la7n0AJMCbCIpkugTLEZ/A41DAoIBAAr73RhOZWDi40D6g+Z2KLHMtLdn xHMEkT0bzRZYlr0WGQpP/GPKJummDHuv/fRq2qXhML7yh7JK8JFxYU94fW2Ya1tx lYa5xtcboQpBLfDvvvI4T4H1FE4kXeOoO46AtZ6dFZyg3hgKlaJkR+pFPLr5Aeak w9+6UCK8v72esoKzCMxQzt3L2euYRt4zTKL3NnrgS7i5w56h2UvP1rDo3P0RVoqc knS1ToamVL2JaPnf/g+gUUVZyya9pyu9RP8MIcd1cvnxZec8JaN89WWnsA2JJbPw stYBnWMvLFabPtPXVcsLrWMEmLFI2yn+fU4YTviwRSs/SrprXDdsqZO2xd8=

Note that in both cases, we are looking at the private key only. As you can imagine, this isn’t really something viable. We will need to store that separately, load it from a file, etc.

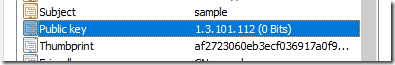

I tried generating Ed25519 keys using the built-in .NET API as well as the Bouncy Castle one. Bouncy Castle is a well known cryptographic library that is very useful. It also supports Ed25519. I spent quite some time trying to get it to work. You can see the code here. Unfortunately, while I’m able to generate a certificate, it doesn’t appear to be valid. Here is what this looks like:

Using RSA, however, did generate viable certificates, and didn’t take a lot of code at all:

We store the actual key in a file, and we generate a self signed certificate on the fly. Great. I did try to use the ECDsa option, which generates a much smaller key, but I ran into severe issues there. I could generate the key, but I couldn’t use the certificate; I ran into a host of issues around permissions, somehow.

You can try to figure out more details from this issue, what I took from that is that in order to use ECDsa on Windows, I would need to jump through hoops. And I don’t know if Ed25519 will even work or how to make it.

As an aside, I posted the code to generate the Ed25519 certificates, if you can show me how to make it work, it would be great.

So we are left with using RSA, with the largest possible key. That isn’t fun, but we can make it work. Let’s take a look at the connection string again, what if we change it so it will look like this?

Server=northwind.database.local:9222;Database=Orders;Server Key Hash=6HvG2FFNFIifEjaAfryurGtr+ucaNgHfSSfgQUi5MHM=;Client Key=client.key

I marked the pieces that were changed. The key observation here is that I don’t need to hold the actual public key here, I just need to recognize it. That I can do by simply storing the SHA256 hash of the public key, which ensures that I always have the same length, regardless of what key type I’m using. For that matter, I think that this is something that I want to do regardless, because if I do manage to fix the other key types, I could still use the same approach. All SHA256 hashes have the same length, obviously.
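As a small illustration of the fixed-length property, here is a Python sketch. Exactly which bytes of the public key get hashed (for example, its DER encoding) is an assumption left open here, and the function names are made up for the example.

```python
import base64
import hashlib
import hmac


def key_fingerprint(public_key_bytes: bytes) -> str:
    """Base64-encoded SHA-256 hash of an encoded public key."""
    digest = hashlib.sha256(public_key_bytes).digest()
    return base64.b64encode(digest).decode("ascii")


def is_trusted(public_key_bytes: bytes, expected_fingerprint: str) -> bool:
    """Check a presented public key against the fingerprint we were given."""
    actual = key_fingerprint(public_key_bytes)
    # Constant-time comparison, to avoid leaking how many characters matched.
    return hmac.compare_digest(actual, expected_fingerprint)
```

Whether the input is a 32-byte key or a multi-kilobyte one, the fingerprint is always 44 base64 characters, which is the same length as the `Server Key Hash` value in the connection string above.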

After all of that, what do we have?

We generate a key pair and store it, and we let the other side know about the public key hash as the identifier. Then we dynamically generate a certificate with the stored key. Let’s say that we do that once per startup. That certificate is going to be different on each run, but we don’t actually care; we can safely authenticate the other side using the (persistent) key pair by validating the public key hash.

Here is what this will look like in code from the client perspective:

And here is what the server is doing:

As you can see, this is very similar to what I ended up with in my secured protocol, but it utilizes TLS and all the weight behind it to achieve the same goal. A really important aspect of this is that we can actually connect to the server using something like openssl s_client -connect, which can be really nice for debugging purposes.

However, the weight of TLS is also an issue. I failed to successfully create Ed25519 certificates, which was my original goal. I couldn’t get it to work using ECDsa certificates and had to use RSA ones with the biggest keys. It was obvious that a lot of those issues are because we are running on a particular operating system, which means that this protocol is still subject to the whims of the environment. I have also not done everything that is required to ensure that there will not be any remote calls as part of the TLS handshake in this case; that can actually be quite complex to ensure, to be honest. Given that these are self signed (and pretty bare-bones) certificates, there shouldn’t be any, but you know what they say about assumptions.

The end goal is that we are now able to get roughly the same experience using TLS as the underlying communication mechanism, without dealing with certificates directly. We can use standard tooling to access the server, which is great.

Note that this doesn’t address something like browser access, which will not be trusted, obviously. For that, we have to go back to Let’s Encrypt or some other trusted CA, and we are back in PKI land.