Also known as: Please check the subtitle of this blog.

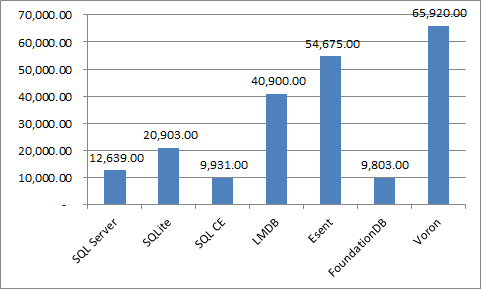

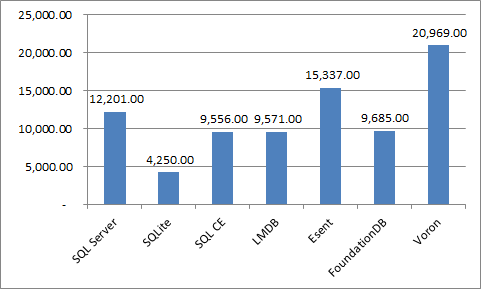

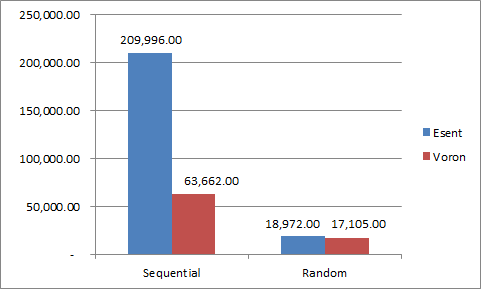

This post is in response to this one. Kelly took offence at this post about Voron performance. In particular, it appears that the major issues are:

> This benchmark doesn’t actually provide much useful information. It is too short and compares fully featured DBMS systems to storage engines. I always stress very much that people never make decisions based on benchmarks like this.

> These paint the fully featured DBMS systems in a negative light that isn’t a fair comparison. They are doing a LOT more work. I’m sure the FoundationDB folks will not be happy to know they were roped into an unfair comparison in a benchmark where the code is not even available.

This isn’t a benchmark. This is just an interim step along the way of developing Voron. It is a way for us to see where we stand and where we need to go. A benchmark includes full details about what you did (machine specs, running environment, full source code, etc.). This is just us putting stress on our machine and comparing where we are at. And yes, we could have done it in isolation, but that wouldn’t really give us any major advantage. We need to see how we compare to other databases.

And yes, we compare apples to oranges here when we compare a low level storage engine like Voron to SQL Server. I am well aware of that. But that isn’t the point, and it is for the same reason that we are currently running a lot of micro benchmarks rather than the 48 hour ones we have in the pipeline.

I am trying to see how users will evaluate Voron down the road. A lot of the time, that means users doing micro benchmarks to see how good we are. Yes, those aren’t very useful, but they are a major way people make decisions. And I want to make sure that we come out in a good light under that scenario.

With regard to Foundation DB, I am sure they are as happy about it as I am about them making silly claims about RavenDB transaction support. And the source code is available if you really want it. In fact, Foundation DB is in there because we had an explicit customer request, and because they contributed the code for that.

Next, let us move to something else equally important. This is my personal blog. I publish here things that I do on a daily basis. And if I am currently in a performance boost stage, you’re going to be getting a lot of details on that. Those are the results of performance runs; they aren’t benchmarks. They don’t get anywhere beyond this blog. When we put the results on ravendb.net, or something like that, then it will be a proper benchmark.

And while I fully agree that making decisions based on micro benchmarks is a silly way to go about doing so, the reality is that many people do just that. So one of the things that I’m focusing on is exactly those things. It helps that we currently see a lot of places to improve in those micro benchmarks. We already have a plan (and code) to see how we do on a 24 to 48 hour benchmark, which would also allow us to see all sorts of interesting things (mixed reads & writes, what happens when you go beyond physical memory size, longevity issues, etc.).