This is a fictitious example of such a bug, but this would probably sound familiar.

Jane was soundly sleeping around 3AM when the phone started ringing off the hook. Dazed and not quite in full possession of her faculties, she picked up the phone and heard Tom from Operations screaming that the Northwind System was gone. It wasn’t just one application that died, it was all of them, and Operations had no idea what is going on, any CxO in the company was pissed and they were bleeding money and reputation by the second.

While driving to work, Jane took part in a conference call trying to figure out what happened. The following things became clear:

- There had been no changes to the production environment in the last week.

- The last change was in the reporting system, which was humming along for the last two weeks or so.

- Database servers were pegged by ridiculous amounts of queries from all applications.

- Cache servers were up and functioning.

- The production logs verbosity was adjusted and it seemed that every single query was a cache miss.

It became clear that something cause every single application to skip the cache and go directly to the database, but no one knew what.

Upon arriving at the office, they run into more puzzlement, frustration and anger. The problem was definitely related to the cache, but there was absolutely no explanation for that. The cache code was written several years ago, it was a pretty complex piece of code, and it was tested in production for almost as long.

The first suspicion fell on some sort of communication breakage between the application servers and the cache servers, but that was quickly ruled out. Looking at the logs, it was clear that the problem was 100% cache misses causing every single query to hit the database, which responded by throwing up its hands and sulking, refusing to talk to anyone.

The problem was absolutely non reproducible in the test or development environment.

The team decided to appropriate a single production server and run their tests on it. In production, it was absolutely reproducible, but no one could figure out why. Network traces from that server showed that it never even tried to connect to the cache servers for reading the queries, however, it would happily connect to the cache servers for writing the queries.

The root problem was found, then, somehow, there was a problem in reading data from the cache servers. Three developers and at least one IT guy were willing to swear on the religion of your choice that this is absolutely not possible, but evidence suggested otherwise.

Installing a kernel level network trace was suggested. After installing the trace and rebooting the machine, the team hovered over the admin head while they started up the application again and watched the network trace from the kernel. This time, however, they could see it reading from the cache servers.

Not only that, but it looked like the application was functioning normally! Installing the kernel trace fixed the problem! Since this was an issue of production being down, they tried the same method on a second server, and that came back up normally as well. Every single IT guy in the building was suddenly busy installing a kernel network trace, and lo and behold, the entire system came back up.

By that time, it was close to 4 PM of the following day, and just about everyone was exhausted. The CTO sent everyone whom and scheduled a meeting at 9 AM to discuss what happened.

At 9 AM the following day, no one could really suggest a working hypothesis for what actually happened. The Administration Module Team Lead suggested that their system has run into some threading bug in the network stack and installing the kernel level trace had changed the timing just enough to make the problem go away. The Reporting Module Team Lead snapped that it is not possible to have a network bug that only affect reading from a particular socket. The IT group insisted that there were no modifications to the production environment, all changed firewall rules in the last month were reviewed, and they couldn’t do that.

The CTO closed the meeting by quoting the damage that this downtime has done to the company, and directed everyone to investigate it further. No actual smoking gun could be found, and eventually the matter was dropped. One of the results of this change was an added emphasis on the production worthiness of all the parts of the system. Traces and logs were enhanced, circuit breakers were introduced and in general the team thought that they are pretty safe. The kernel trace fixed the problem, of course, but next time they will have a lot more information about what is going on.

Four months later…

The production issue that brought down the site was almost forgotten by this time, the system was running along happily for months, the changes to make the system more robust in production were already there, and everyone was happy.

Until the 3AM call on Thursday’s night. While driving back to work on empty roads, Jane had a strong sense of deja-vu. Again, the problem was that the system was hammering the database, again the log showed 100% cache misses. Again, there was no significant change to the production environment.

This time, the team already knew who the culprit was, and the caching system was given a thorough overview. No one could really find something wrong with it, but even more logging was added, and the change was pushed to production, where it didn’t seem to do any good at all.

Trying to ignore the CxO screaming match in the nearby conference room, the Infrastructure team tried to figure out what was wrong exactly. Jane thought that the problem was with the cache invalidation logic. The caching system used a fairly complex system to decide whatever a value in the cache was fresh enough or not.

Disabling that piece of the system was risking stale data scenarios, but stale data was better than no data. They commented that part out and push that to production. Unfortunately, it didn’t change one iota, and the system kept producing cache misses.

That was patently impossible, of course, but there was it. The IT group, in the meantime, came to the rescue. Trevor, the new guy on the team, decided to try deploying the application to a brand new server that was waiting to be provisioned. On the new server, the system worked, and that server did not have the kernel network trace installed.

Acting on instinct, they tried uninstalling the kernel network trace on one of the existing production servers. Like magic, that server came back to life and started working normally seconds after the reboot was completed. A rolling uninstallion of the magical kernel trace fixed the issue.

But this was being the second failure this year, and both could be traced to the same component. The development team was at a loss to explain what was going on there. For the next month or so, no developer dared walk by the executives part of the building. External consultants were brought in to do an in depth study of the code base, trying to find the problem. The consultants couldn’t really find anything to blame, but they didn’t trust the existing home grown cache servers. The company bought a commercial cache package, to the tune of 2,000 $ per CPU, replacing their caching backend.

For a while, it seemed like that would do it…

Jane woke up a few seconds before 3 AM, two months after the previous incident. It was Thursday again, and the phone was ringing. After briefly considering running away to the Sahara, she picked up the phone. It was the exact same scenario.

After arriving at the office, Jane didn’t bother to join the already violent row in the conference room, she sat down on her desk and brought up the Caching Module. She sat down and added a logging statement after each line in the codebase. Then she called Operations and had them push the new caching module to production.

That change didn’t fix the problem, but it gave Jane an exception. And that exception just wasn’t possible.

System.IndexOutOfRangeException: Index was outside the bounds of the array.

at System.Array.InternalGetReference(Void* elemRef, Int32 rank, Int32* pIndices)

at System.Array.GetValue(Int32 index)

at Northwind.CachingModule.CacheSelector.GetServer()

at Northwind.CachingModule.Cache.Get(string key)

That didn’t make any sort of sense, but at least she had a single method to check for.

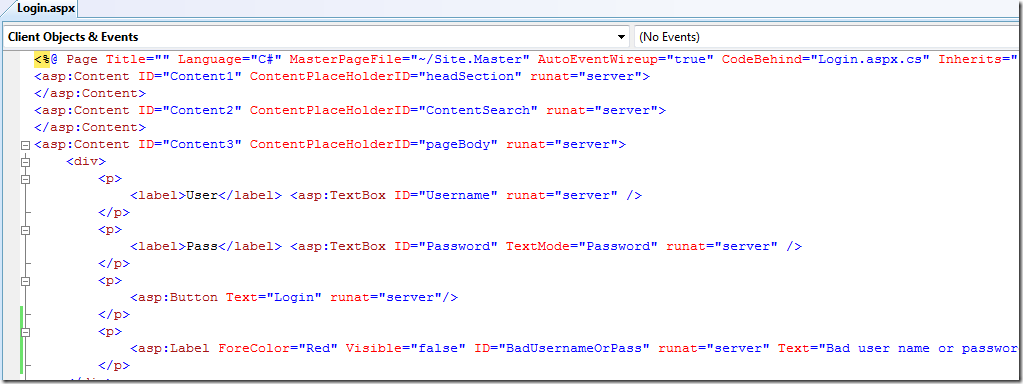

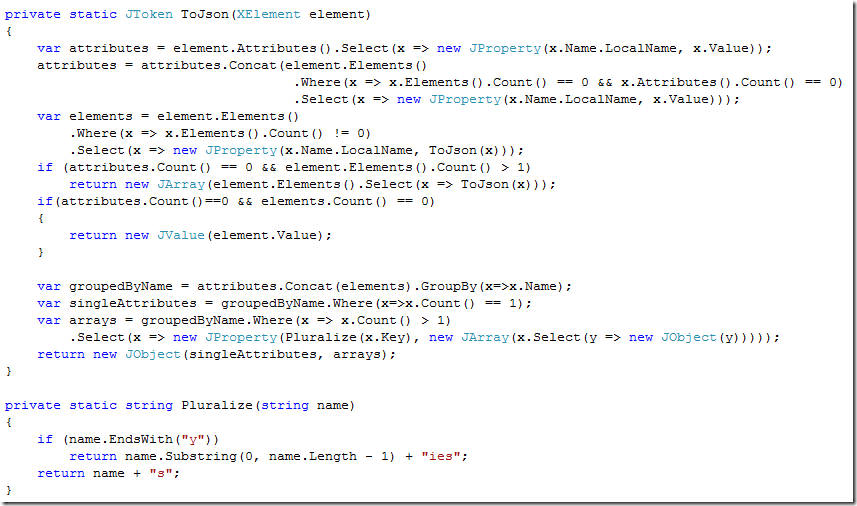

private CacheServer GetServer()

{

if(aliveAndWellServes.Count == 0)

return null;

var server = aliveAndWellServes[Environment.TickCount % aliveAndWellServes.Length];

if(server.IsAlive == false)

{

aliveAndWellServes.Remove(server);

server.Dispose();

}

return server;

}

The idea was to spread the load between the cache servers, so there wouldn’t be a single cache server being hit repeatedly. Somehow, that method was failing, when it failed, it caused the caching system generic error handler to consider this as a downed cache server, and produce a cache miss.

Jane pushed another update to production, this time adding pervasive logging to the GetServer method. The output said it all.

Alive and well servers: 4

Tick count: -137538094

Server index: -2

Looking at the documentation of the Environment.TickCount, it all became clear. That method will return a negative number if the system has been running for over 25 days. When that happened, the cache implementation would fail, leading to overload on the database and closing the entire system down.

The reason that the error was patches meant that the servers were occasionally rebooted, so you had to wait until enough time passed since the last reboot. Installing and uninstalling the kernel trace resulted in a reboot, which temporarily fix the problem.

An interesting final tidbit about this error. No one mentioned to the executives that all source control systems come with a blame feature.