You might have noticed that over the last day we had duplicate posts. When I first saw that, I was shocked. There was absolutely no reason for this to have happened, and it looked as though the index or the database had somehow been corrupted.

We started investigating and were able to reproduce the issue locally using the database export. That was a great relief, because it meant that at least we could debug it. Once we could do that, we found out what was going on. And basically, there was no bug. It was a configuration error (actually, two of them) that caused the problem.

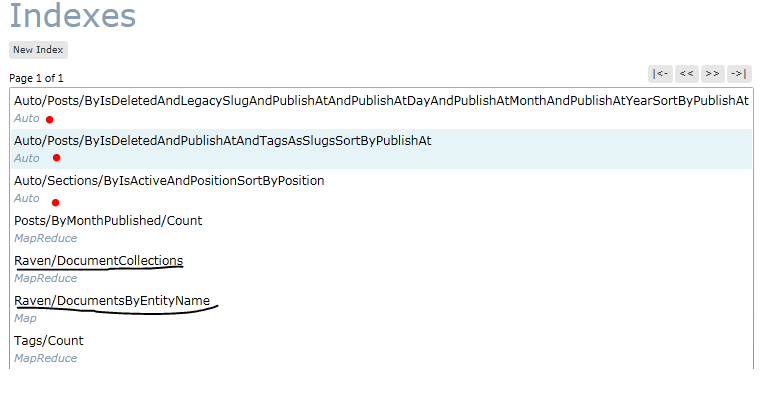

We accidentally enabled versioning for the blog’s database. You can read more about RavenDB’s versioning bundle if you want, but basically, it keeps copies of modified documents so you have an audit trail. So far, so good, and no one noticed anything. But then we removed versioning from the blog’s database, since, after all, we didn’t need it there in the first place.

The problem is that, in the meantime, the versioning bundle had created all of those additional documents. While it was active, it hid those documents (since they were there for historical purposes only), but once we removed it, all of those historical documents showed up. The reason we saw duplicate posts was that we really did have duplicate posts; they were just historical revisions of the same post.

Why did we have so many? Whenever someone comments, we update the post’s CommentCount field, so most posts had as many historical copies as they had comments.
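To make the mechanism concrete, here is a toy in-memory model of what happened. It is a hypothetical sketch, not RavenDB’s storage format or API: `ToyStore`, `comment_on`, and the `posts/1/revisions/N` id scheme are all made up for illustration. It assumes that, while versioning is on, each update first saves a copy of the previous version and that queries hide those copies. Turning the flag off removes the filter, and the copies appear as "duplicate" posts, one per comment.

```python
from dataclasses import dataclass, field


@dataclass
class ToyStore:
    """Hypothetical in-memory document store; a toy model, not RavenDB's API."""
    versioning_enabled: bool = False
    docs: dict = field(default_factory=dict)             # doc id -> document
    _revision_count: dict = field(default_factory=dict)  # doc id -> copies saved

    def put(self, doc_id: str, doc: dict) -> None:
        # Assumption: with versioning on, every update first saves a copy of
        # the previous version under a derived id like "posts/1/revisions/1".
        if self.versioning_enabled and doc_id in self.docs:
            n = self._revision_count.get(doc_id, 0) + 1
            self._revision_count[doc_id] = n
            self.docs[f"{doc_id}/revisions/{n}"] = dict(self.docs[doc_id])
        self.docs[doc_id] = dict(doc)

    def query(self) -> list:
        # While versioning is on, the historical copies are hidden from queries.
        # Once it is turned off, nothing filters them out any more.
        return [doc for doc_id, doc in self.docs.items()
                if not (self.versioning_enabled and "/revisions/" in doc_id)]


def comment_on(store: ToyStore, post_id: str) -> None:
    # Each new comment bumps CommentCount, i.e. one more update of the post.
    post = dict(store.docs[post_id])
    post["CommentCount"] += 1
    store.put(post_id, post)


store = ToyStore(versioning_enabled=True)  # versioning enabled by accident
store.put("posts/1", {"Title": "Hello", "CommentCount": 0})
for _ in range(3):
    comment_on(store, "posts/1")

print(len(store.query()))  # prints 1: only the current post is visible
store.versioning_enabled = False           # versioning removed
print(len(store.query()))  # prints 4: the 3 historical copies show up too
```

The design choice that matters here is that hiding the copies is the bundle’s job, not the data’s: the extra documents exist either way, and only the filter decides whether you see them.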

Fixed now, and I apologize for the trouble.

As a general point of interest, bundles allow great flexibility in the database, but they are designed to be part of the database from the start. Adding and removing bundles on the fly is not something that is going to just work, as we have just re-learned.

I apologize for the problem. It was a simple misconfiguration that caused all the historical records to show up when they shouldn’t have. Nothing to see here, move along.

One of the things people kept bugging me about is that I am not building applications any longer; another annoyance was my current setup for this blog.
