In my last post I dove into the Epoch implementation. The Epoch is explained very nicely in the paper, and the code follows the paper pretty closely. The code makes sense, but I still lack the proper… feeling for how it is actually being used. The Epoch allows you to register code that will be executed when the epoch is updated, which is the key to how FASTER is making progress, but while I can see that this is being called from the allocators, I haven’t really grokked it yet. I’m going to go back to the faster.h file and see what I can glean from there.

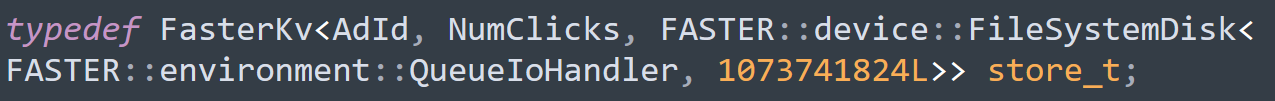

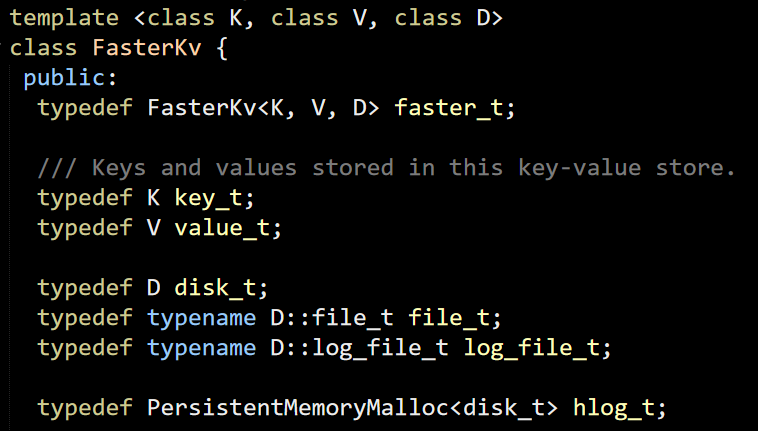

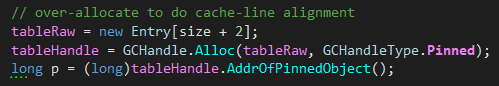

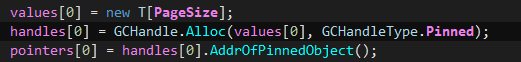

Because of the heavy template usage, it is kinda hard to figure out what exactly is going on, so I’m going to look at some of the examples and try to figure out what they are doing. Here is one instance of this class:

AdId and NumClicks are just two ways to provide operations on 8-byte keys and values. I like these examples because they provide a good use case to talk about FASTER usage.

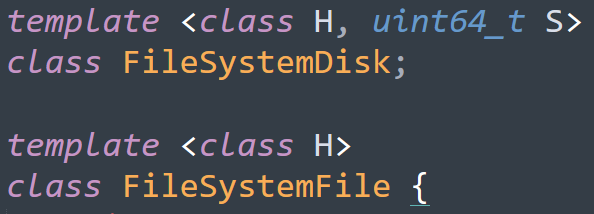

This code leads me to the FileSystemDisk, which is defined as:

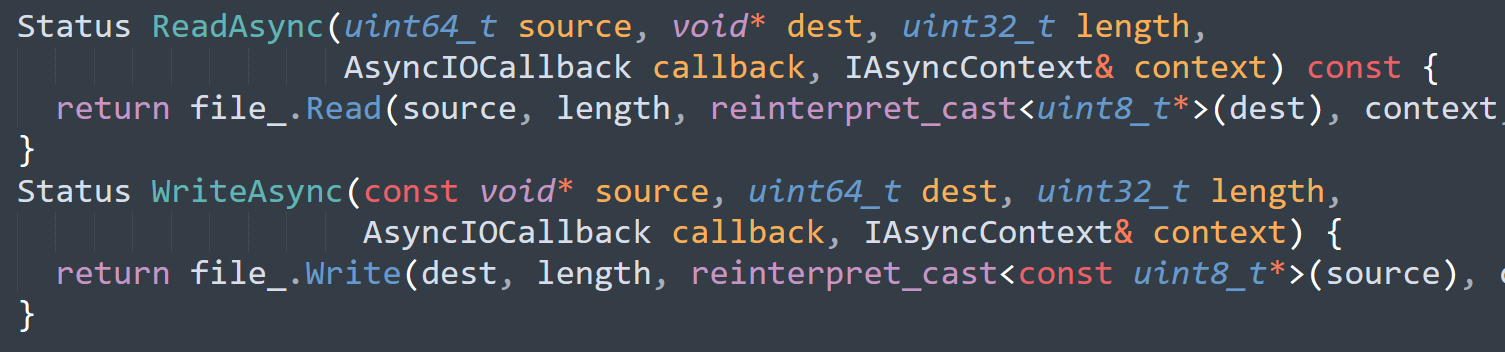

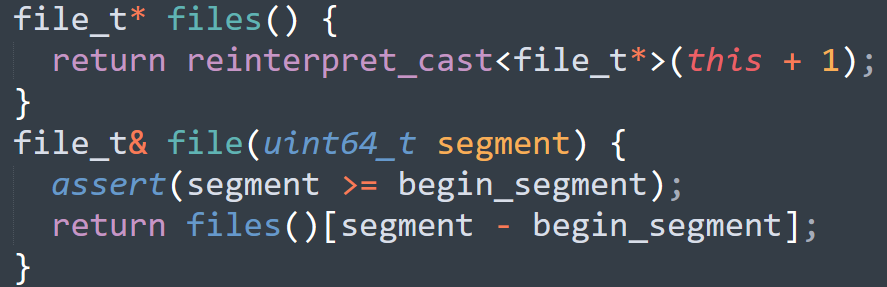

In the FileSystemFile, we have this code:

This is pretty simple, but I was quite amused by this, because this is the C# API sneaking up again in the C++ code. There is also this:

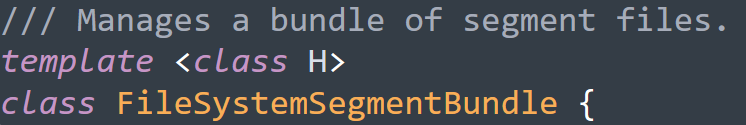

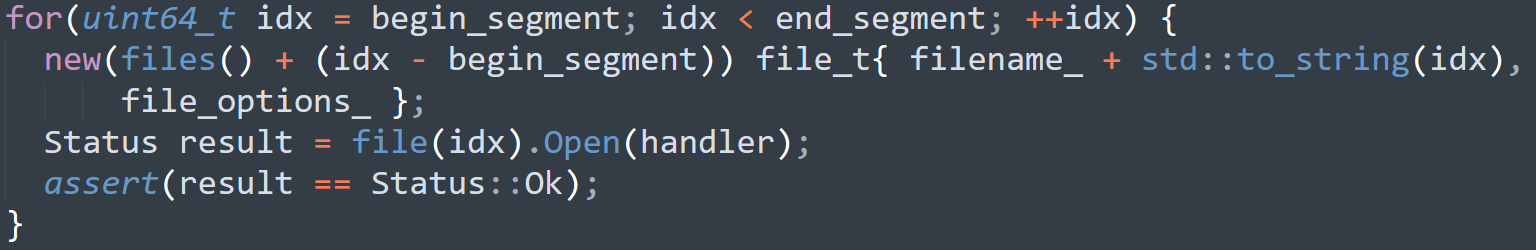

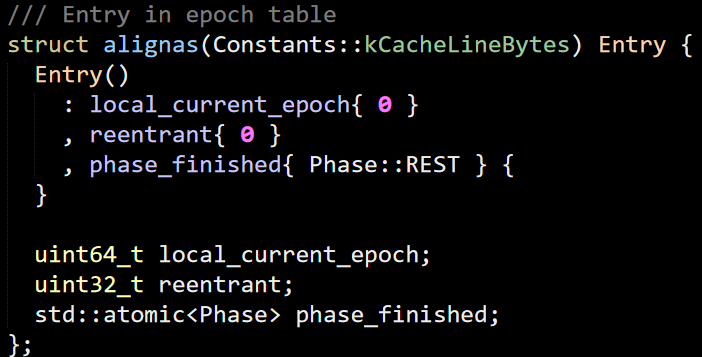

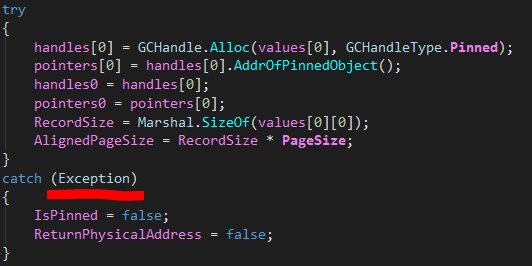

I’m not sure what this bundle is, though. I ran into this code in the class:

This is… not nice, in my eyes. Based on this code, whoever allocated this instance also allocated a buffer large enough to include more data there. This is fairly common, since you want to work with such data together, but I find it ugly / risky because it means that there are multiple locations that need to be aware of it. I would like it better if they just passed the pointer explicitly. That would avoid this snippet:

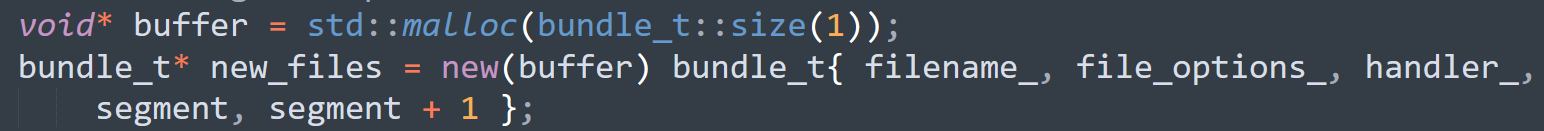

Which I find pretty annoying to read. What is stranger is that to use this, you have to write (bundle_t is a typedef for FileSystemSegmentBundle):

I get what is going on here, but I just find it really awkward to handle. There are multiple places where you need knowledge of this allocation pattern and I don’t believe that the benefit of placing all of the data together is that important. For that matter, given the importance of not using new explicitly in modern C++, I’m sure that there are other ways to achieve the same goal that would be more natural.
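To make the complaint concrete, here is a minimal sketch of the two shapes I have in mind. None of this is FASTER's code: `File`, `TrailingBundle`, `ExplicitBundle` and the helper functions are hypothetical names I made up for illustration. The first variant assumes the files live in the same allocation right after the object, so both the allocator and the accessor have to know the layout. The second just owns its files explicitly.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdlib>
#include <new>
#include <type_traits>
#include <vector>

// Hypothetical stand-in for a file handle (not FASTER's FileSystemFile).
struct File {
  uint64_t segment;
};

// Variant A: the object assumes its files sit directly after it in memory.
struct TrailingBundle {
  uint64_t begin_segment;
  uint64_t end_segment;

  // Only valid if the allocator reserved room for the files after `this`.
  File* files() { return reinterpret_cast<File*>(this + 1); }

  File& file(uint64_t segment) {
    assert(segment >= begin_segment && segment < end_segment);
    return files()[segment - begin_segment];
  }
};

static_assert(alignof(File) <= alignof(TrailingBundle),
              "trailing files must be suitably aligned");
static_assert(std::is_trivially_destructible<File>::value,
              "the sketch frees the block without running destructors");

// The caller has to know the layout too: header plus count * File.
TrailingBundle* MakeTrailingBundle(uint64_t begin, uint64_t end) {
  std::size_t count = static_cast<std::size_t>(end - begin);
  void* raw = std::malloc(sizeof(TrailingBundle) + count * sizeof(File));
  if (raw == nullptr) throw std::bad_alloc{};
  TrailingBundle* bundle = new (raw) TrailingBundle{begin, end};
  for (std::size_t i = 0; i < count; ++i) {
    new (&bundle->files()[i]) File{begin + i};
  }
  return bundle;
}

void FreeTrailingBundle(TrailingBundle* bundle) {
  // Everything is trivially destructible, so releasing the block is enough.
  std::free(bundle);
}

// Variant B: the files are owned explicitly, at the cost of one more allocation.
struct ExplicitBundle {
  uint64_t begin_segment;
  std::vector<File> files;

  File& file(uint64_t segment) { return files.at(segment - begin_segment); }
};

ExplicitBundle MakeExplicitBundle(uint64_t begin, uint64_t end) {
  ExplicitBundle bundle{begin, {}};
  for (uint64_t s = begin; s < end; ++s) bundle.files.push_back(File{s});
  return bundle;
}
```

Variant A saves an allocation and keeps everything on adjacent cache lines, which may well be why it was chosen. Variant B costs an extra pointer hop, but the layout knowledge lives in exactly one place.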

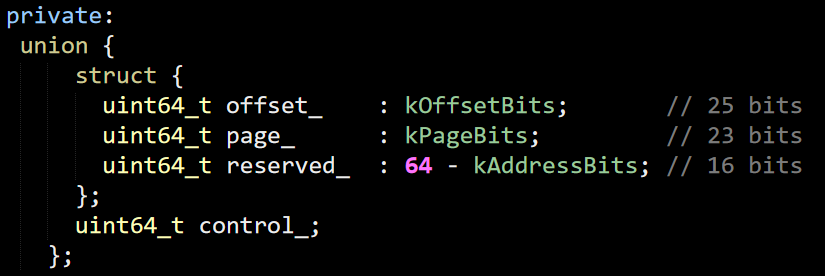

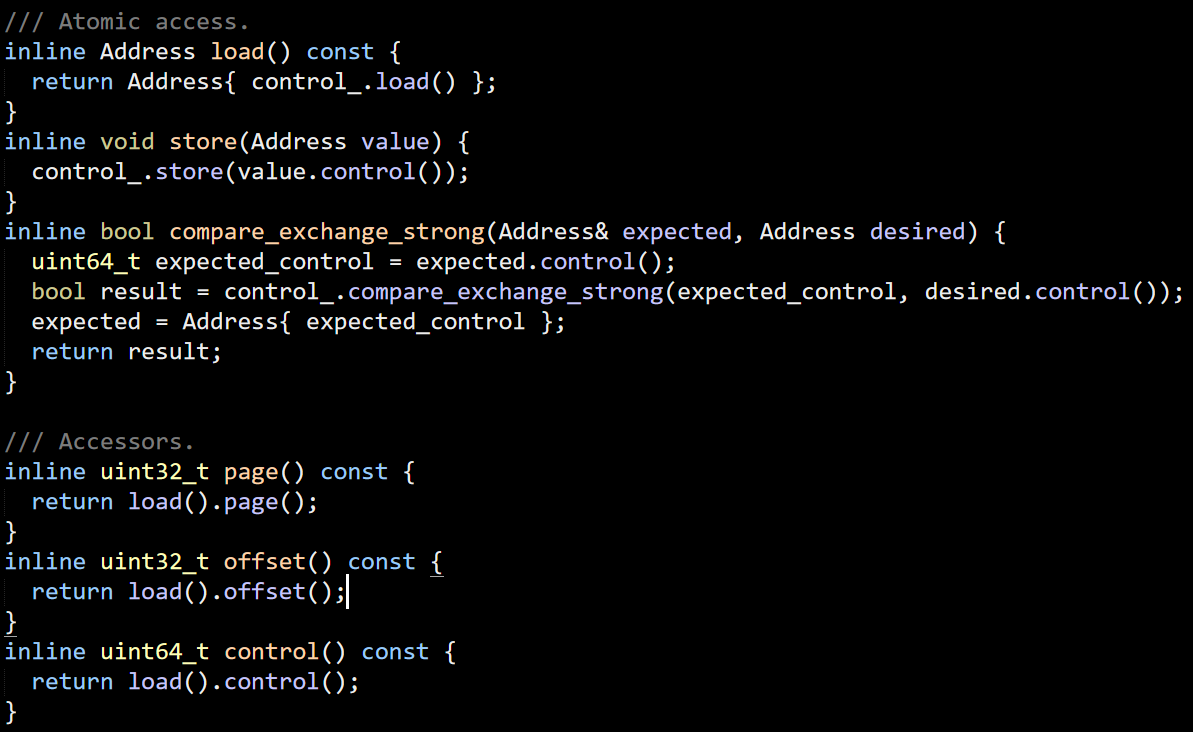

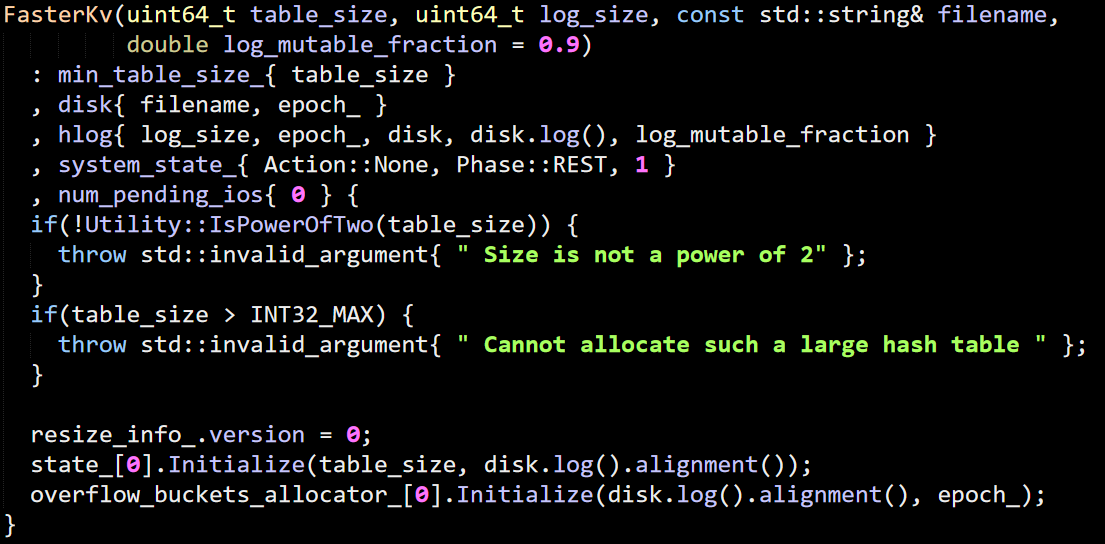

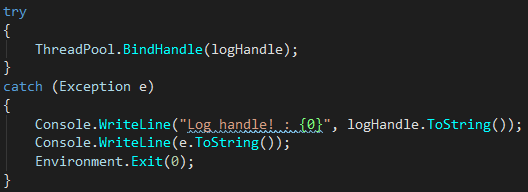

Going through the code, we now have:

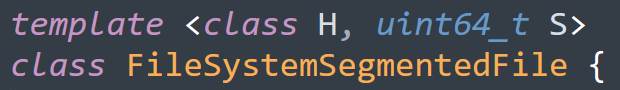

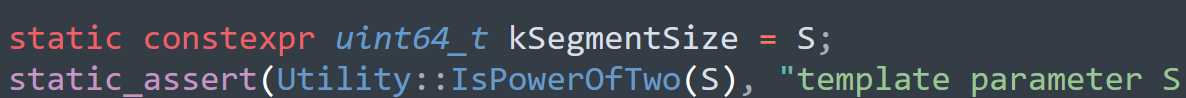

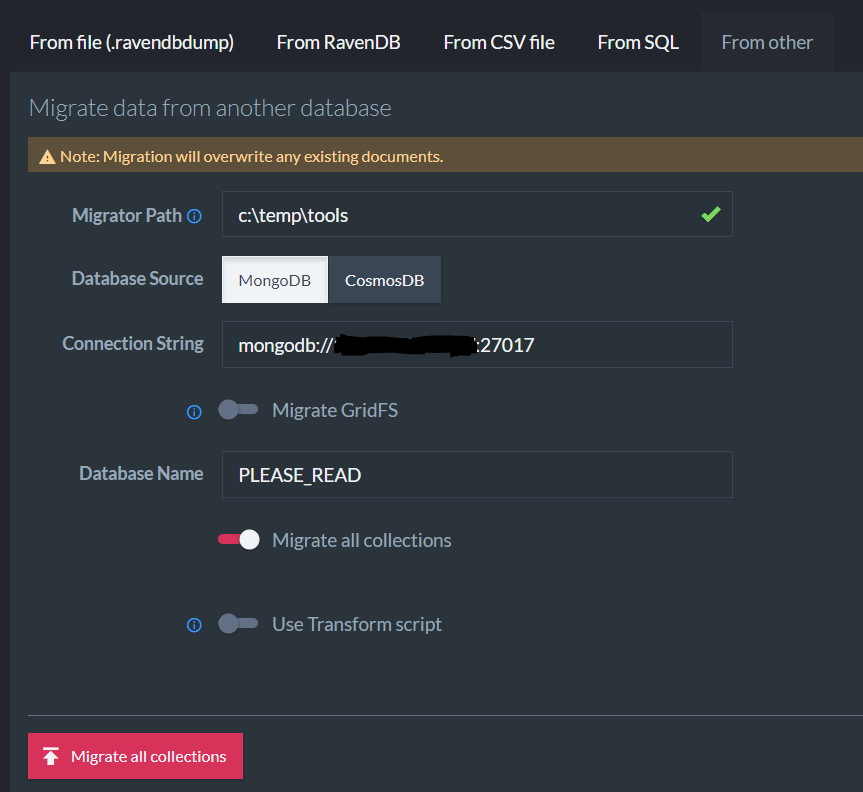

I decided to make this post about the file system usage, because there is a lot of pretty complex code here that I would like to understand. I finally figured out what the S is. It is the segment size:

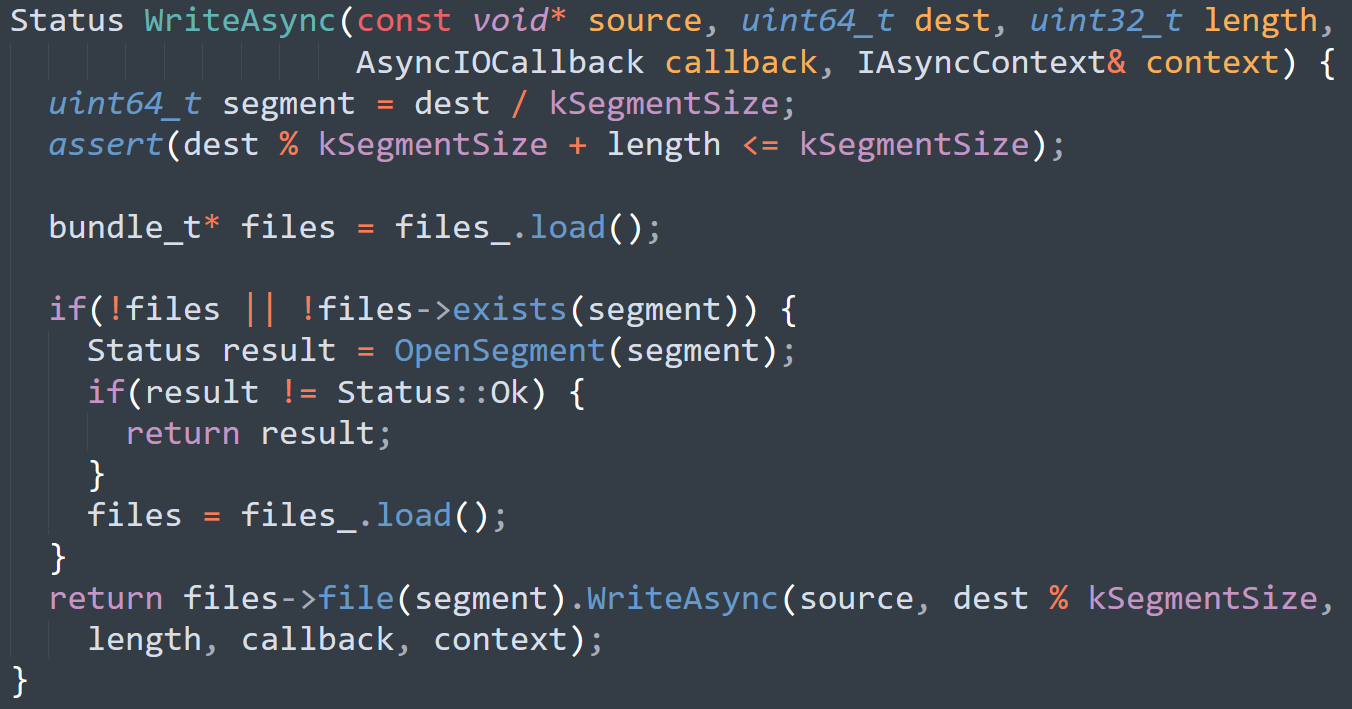

This means that the earlier definition of FasterKv basically defined a segment size of 1 GB. I’m not sure what these segments are, though. I’m pretty sure that this is how they manage time-based expiration, but I’m not certain. Following upward from the creation of a new segment, we have WriteAsync, like so:

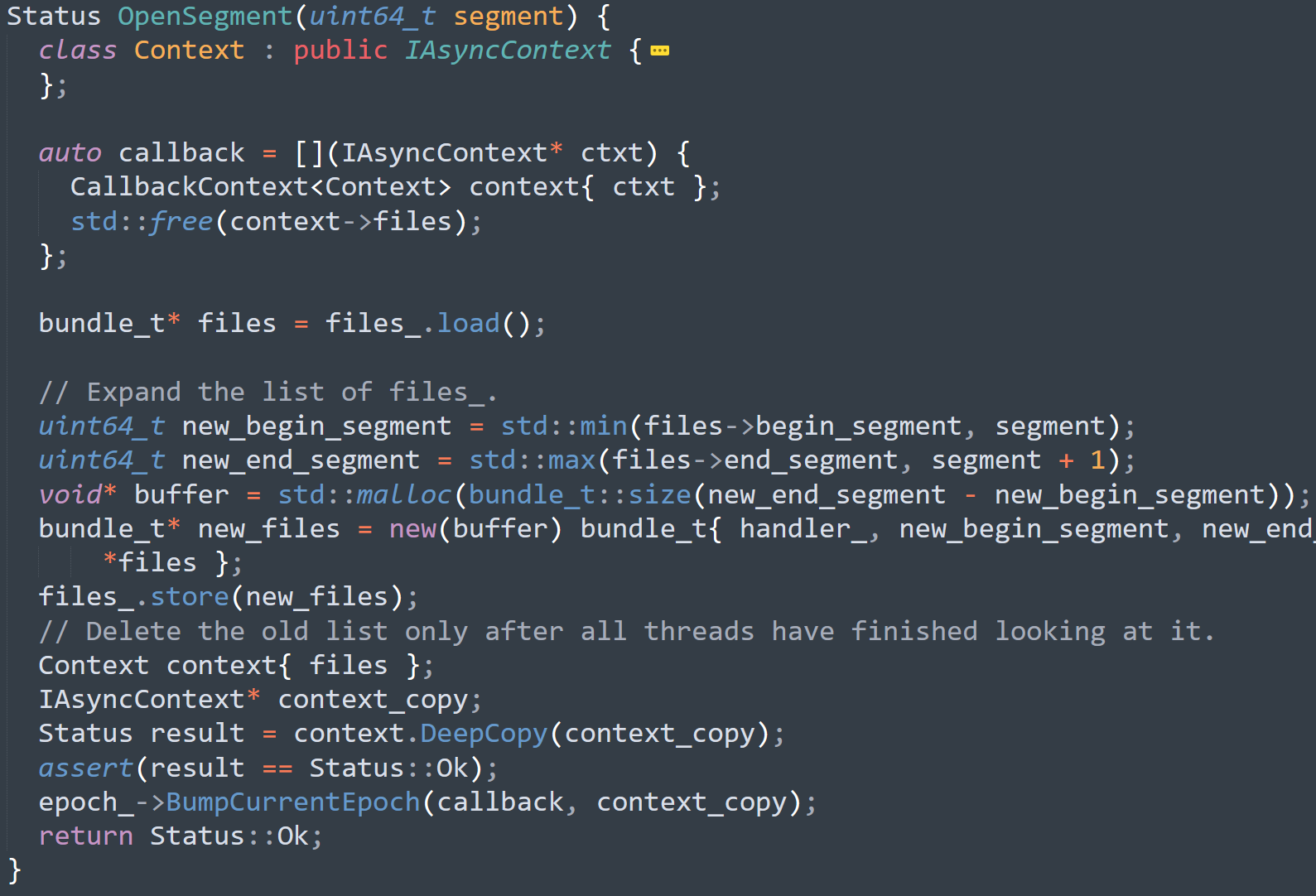

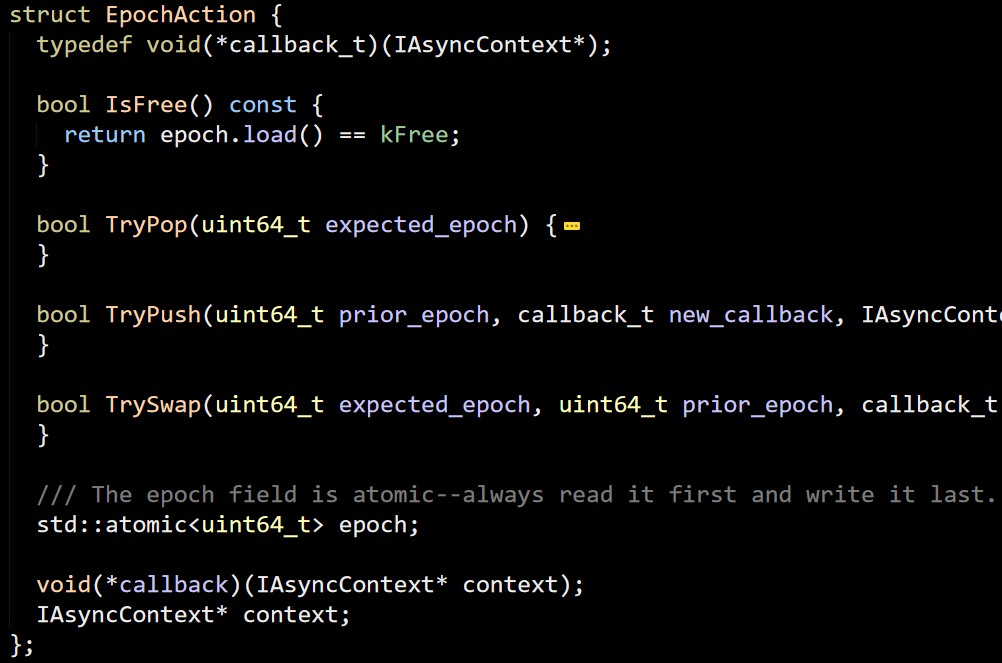
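As a side note, this is how I picture the relationship between a log address, the segment size and the file that ends up being written. It is a minimal sketch under my own assumptions, not the code from the screenshots: `Locate` and `SegmentedLog` are hypothetical names, and I'm assuming a plain divide / modulo by the segment size, with segments opened lazily on first write.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>

// 1 GB, standing in for the S template parameter.
constexpr uint64_t kSegmentSize = 1ULL << 30;

struct SegmentLocation {
  uint64_t segment;  // which file the address falls into
  uint64_t offset;   // position inside that file
};

// Assumed mapping: the segment number is the address divided by the segment size.
inline SegmentLocation Locate(uint64_t address) {
  return SegmentLocation{address / kSegmentSize, address % kSegmentSize};
}

// Toy in-memory "disk": each segment is a map from offset to the data written there.
class SegmentedLog {
 public:
  // Writes `data` at `address`; a single write must not cross a segment boundary.
  void Write(uint64_t address, const std::string& data) {
    SegmentLocation loc = Locate(address);
    assert(loc.offset + data.size() <= kSegmentSize);
    // operator[] creates the segment on first use, playing the role of "open segment".
    segments_[loc.segment][loc.offset] = data;
  }

  std::size_t open_segments() const { return segments_.size(); }

 private:
  std::map<uint64_t, std::map<uint64_t, std::string>> segments_;
};
```

With a 1 GB segment size, address `kSegmentSize + 42` lands at offset 42 of segment 1, and the first write past each 1 GB boundary is the one that has to open a new file.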

You can see that the segment number is basically just the file id, and if the file does not already exist, we call OpenSegment on it. Afterward, we call WriteAsync on that specific file. I’ll look into how that works in a bit, this isn’t that interesting at the moment. Right now I want to dig into OpenSegment. I removed some error handling here, but the gist of it is clear.

The actual code also handles threading and errors, which I omitted. You can see that it creates the new files, copying them from the existing value. Then it creates a context that holds the old files and passes it to BumpCurrentEpoch.

When FASTER is sure that no one else is looking at this epoch, it will call the callback and delete / dispose the old files. This is a nice way to ensure consistency. LMDB does something similar with its transactions’ table. So now we know that whenever we write at a 1GB boundary, FASTER will generate a new epoch.
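Here is a toy model of that idea, mostly to convince myself that I understand it. It is a single-threaded sketch with no atomics, so it is nothing like FASTER's real LightEpoch. `ToyEpoch`, `Refresh` and `Release` are hypothetical names, and I'm assuming that a callback registered when the epoch is bumped becomes safe to run once every registered thread has moved past the epoch that was current at that time.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <functional>
#include <limits>
#include <map>
#include <utility>
#include <vector>

class ToyEpoch {
 public:
  // A thread announces that it now works under the current epoch.
  void Refresh(int thread_id) {
    local_[thread_id] = current_;
    Drain();
  }

  // A thread stops looking at shared state entirely.
  void Release(int thread_id) {
    local_.erase(thread_id);
    Drain();
  }

  // Advance the epoch; `action` runs once nobody can still see the prior one.
  uint64_t BumpCurrentEpoch(std::function<void()> action) {
    uint64_t prior = current_++;
    pending_.push_back(std::make_pair(prior, std::move(action)));
    Drain();
    return current_;
  }

 private:
  void Drain() {
    // Oldest epoch any registered thread may still be observing.
    uint64_t oldest = std::numeric_limits<uint64_t>::max();
    for (const auto& entry : local_) oldest = std::min(oldest, entry.second);

    // Collect the ready actions first, so that a callback which touches
    // the epoch again cannot invalidate the loop below.
    std::vector<std::function<void()>> ready;
    std::vector<std::pair<uint64_t, std::function<void()>>> waiting;
    for (auto& p : pending_) {
      if (p.first < oldest) {
        ready.push_back(std::move(p.second));
      } else {
        waiting.push_back(std::move(p));
      }
    }
    pending_ = std::move(waiting);
    for (auto& action : ready) action();
  }

  uint64_t current_ = 1;
  std::map<int, uint64_t> local_;  // thread id -> epoch it last refreshed to
  std::vector<std::pair<uint64_t, std::function<void()>>> pending_;
};
```

In this model, the "dispose the old files" callback stays parked for as long as some thread is still sitting on the old epoch, and it fires on the first `Refresh` or `Release` that moves the last such thread forward.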

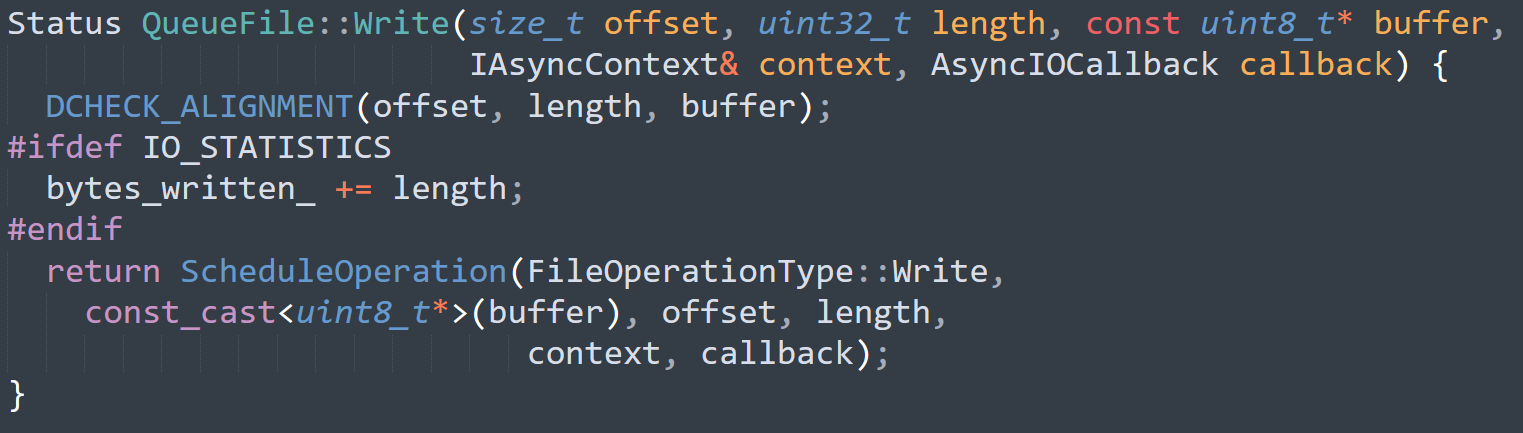

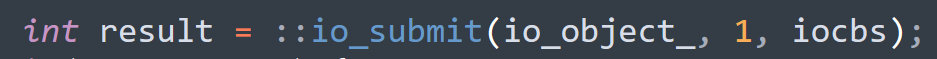

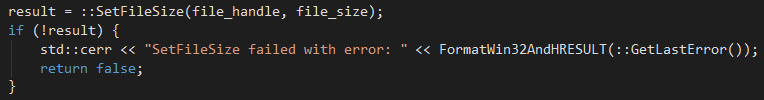

What about the actual writing? Here is what this looks like (the Linux impl):

On Linux, this ends up being:

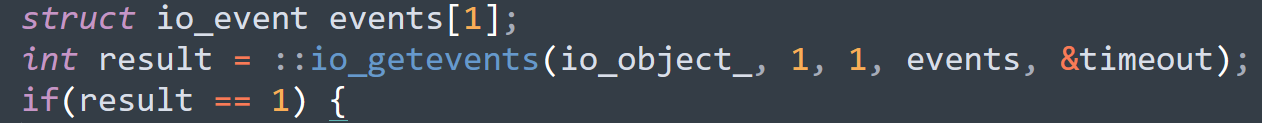

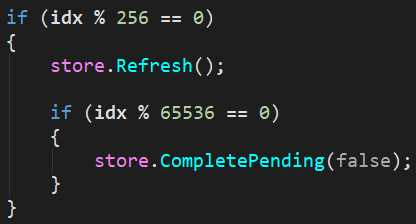

This is then checked in TryComplete:

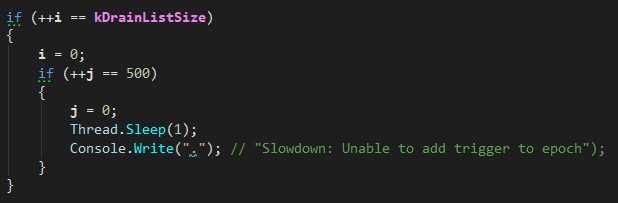

This is called from FasterKv.CompletePending(), which seems to be called occasionally by FASTER. On Windows, this is using async I/O and callbacks to handle this.
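The polling side of this is easy to model without any real I/O. The sketch below is my own simplification, not FASTER's handler: `ToyIoQueue` and `SimulateKernelCompletion` are hypothetical, and the "kernel" is just a second queue. The point is the shape of the API: `TryComplete` never blocks, handles at most one finished request, and the completion callbacks only run when someone bothers to poll.

```cpp
#include <cassert>
#include <deque>
#include <functional>
#include <utility>

class ToyIoQueue {
 public:
  using Callback = std::function<void(int bytes)>;

  // Queue a request; its callback runs later, from TryComplete().
  void Submit(Callback on_done) { in_flight_.push_back(std::move(on_done)); }

  // Stand-in for the kernel finishing the oldest in-flight request.
  void SimulateKernelCompletion(int bytes) {
    assert(!in_flight_.empty());
    completed_.push_back(Event{std::move(in_flight_.front()), bytes});
    in_flight_.pop_front();
  }

  // Non-blocking poll: handles at most one finished request.
  bool TryComplete() {
    if (completed_.empty()) return false;
    Event ev = std::move(completed_.front());
    completed_.pop_front();
    ev.on_done(ev.bytes);
    return true;
  }

  // Drain everything that has finished so far; returns how many were handled.
  int CompletePending() {
    int handled = 0;
    while (TryComplete()) ++handled;
    return handled;
  }

 private:
  struct Event {
    Callback on_done;
    int bytes;
  };

  std::deque<Callback> in_flight_;
  std::deque<Event> completed_;
};
```

The trade-off compared to the Windows callback model is that a finished write just sits there until the next poll, so how often the caller polls decides how stale the pending state gets.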

Okay, this is already long enough, but I got a handle on how FASTER is writing to disk, even though I don’t know yet what it is doing with that. I also saw an actual use of Epoch that made sense (clearing old data once no one is looking at that).