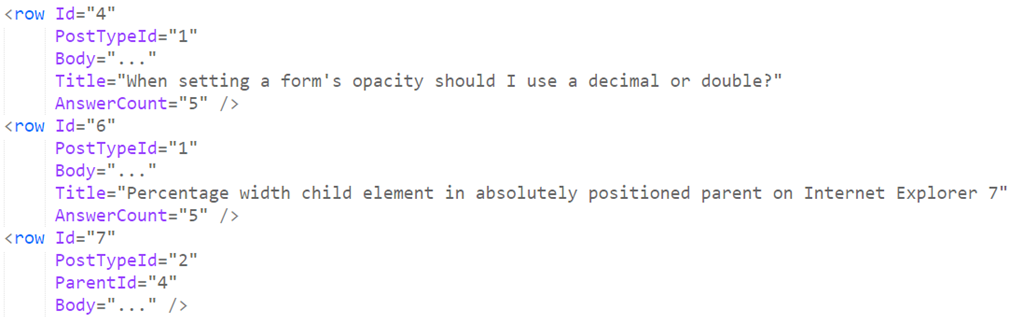

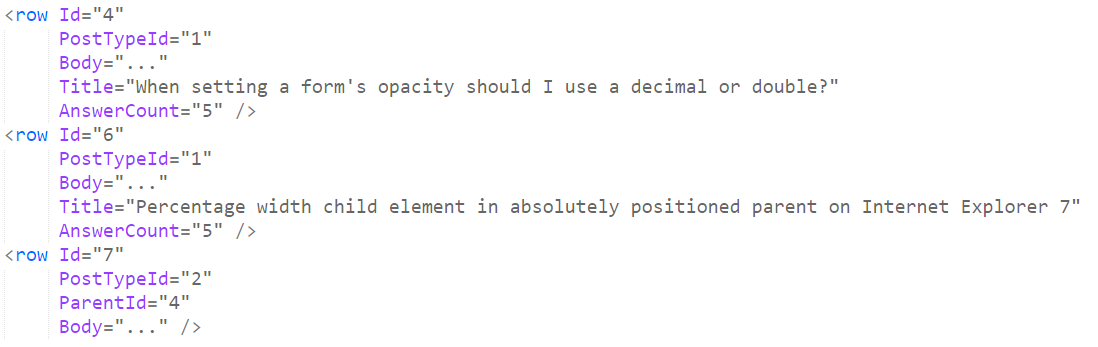

As I mentioned, given a 45 GB file containing all the StackOverflow questions since 2008, I wanted to transform items like this:

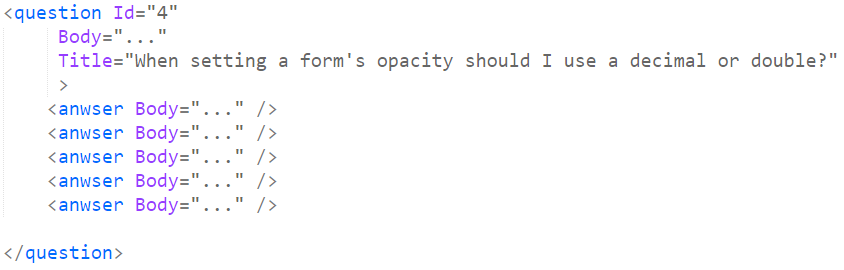

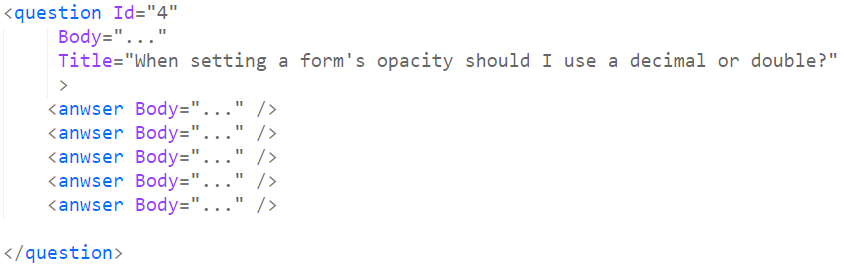

Into this:

This turn out to be rather tricky to do. The file is sorted by the row id, but there are tricks here:

- You can’t assume that all the answers to a question are located near the question itself.

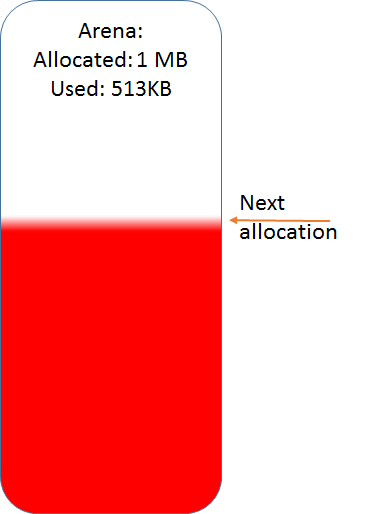

Ideally, we could keep a dictionary of the questions and their answers until we have all the answers to the question, they write it all out. The problem here is that a question might have answered years after it was asked, which means that the answer id would place it very far from the question in the file, which means that we have to keep the question in memory for a very long time. In practice, this happens quite frequently, and it plays havoc with trying to limit the memory utilization for this approach.

- While you would expect that answers will always have a row id that is higher than their questions, that isn’t always the case.

Let us take this question, about Haskell monads, which has the following answer.

The answer id is: 2388

The question id is: 44965

That means that if you scan the file sequentially, you are going to find the answers before their questions, somethings a lot before (I’m guessing that this happens if you have edits to a question, so a popular question might get edited long after it was asked & had answers).

The next thing I tried was to group all the questions together, then process them. Because I can’t do that in memory, I tried writing them to disk directly:

- /questions/44965/q

- /questions/44965/2388

The idea is that I can write them out to the file system, and then traverse the file system to gather all of them. The problem is that we are talking about over 32 million questions & answers, that isn’t really going to work for us using most normal file systems.

So I tried writing it all to a zip file, which was successful, but resulted in a zip file that was 21GB in size, and was very good in ensuring that nothing could open it before I run out of patience.

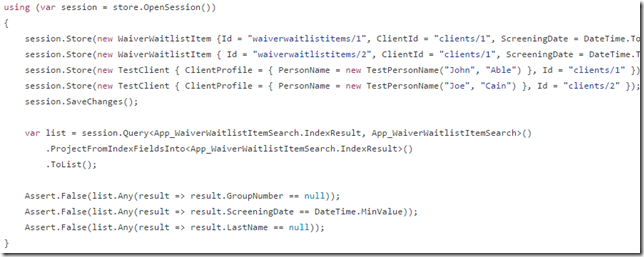

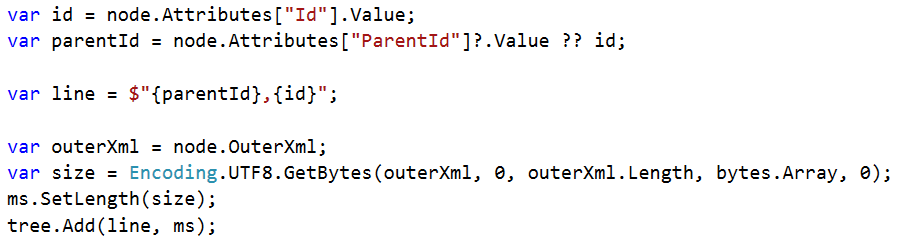

Eventually I just gave us and used Voron to handle it, here are the relevant sections:

Remember that Voron stores everything in a B+Tree, and allows to do ordered operations, so once this is done, all I need to do is traverse the tree, get all the records that have the same parent id, and voila, I have the grouping I wanted.

If I wanted to do it without the use of a database (or Voron), I would probably go with the following manner:

- Read each entry from the source data, and write it to another file, noting its location.

- Write “parent id, id, location” to a separate index file

- Sort the index file.

- Start reading from the index file as long as I have the same parent id, and merge those questions.

Why separate file? Because XmlReader does buffering, so it will be really hard to just in the file from just reading, by writing it out, we have the proper position to seek to.

Note that this will require a lot of random I/O, but that is pretty much just what it has to be.

We spent some time recently looking into a lot of our old design decisions. Some of them make very little sense today (json vs. blittalbe as a good example), but made perfect sense at the time, and were essential to actually getting the product out.

We spent some time recently looking into a lot of our old design decisions. Some of them make very little sense today (json vs. blittalbe as a good example), but made perfect sense at the time, and were essential to actually getting the product out.