Playing with the StackOverflow datasets : All the wrong ways

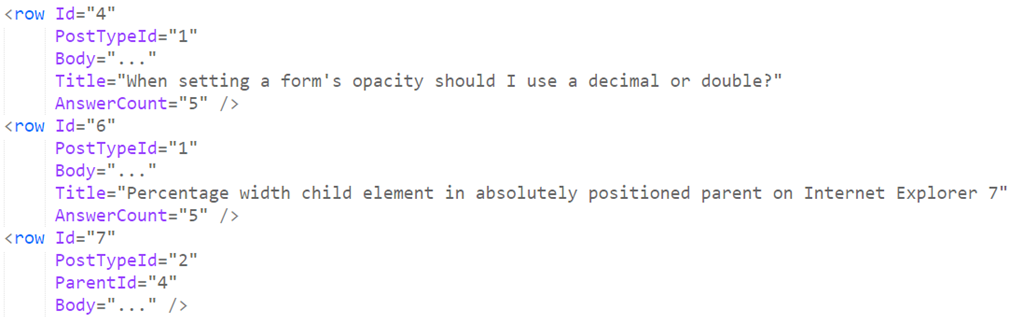

As I mentioned, given a 45 GB file containing all the StackOverflow questions since 2008, I wanted to transform items like this:

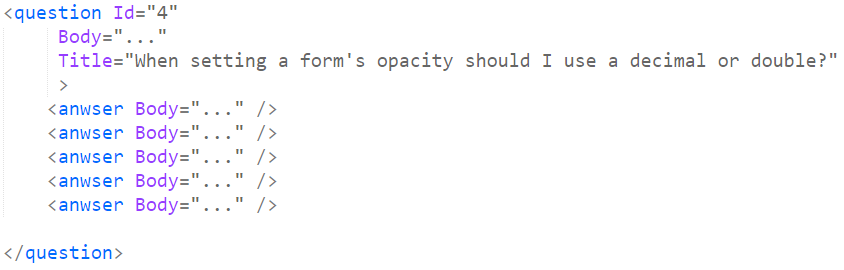

Into this:

This turn out to be rather tricky to do. The file is sorted by the row id, but there are tricks here:

- You can’t assume that all the answers to a question are located near the question itself.

Ideally, we could keep a dictionary of the questions and their answers until we have all the answers to the question, they write it all out. The problem here is that a question might have answered years after it was asked, which means that the answer id would place it very far from the question in the file, which means that we have to keep the question in memory for a very long time. In practice, this happens quite frequently, and it plays havoc with trying to limit the memory utilization for this approach.

- While you would expect that answers will always have a row id that is higher than their questions, that isn’t always the case.

Let us take this question, about Haskell monads, which has the following answer.

The answer id is: 2388

The question id is: 44965

That means that if you scan the file sequentially, you are going to find the answers before their questions, somethings a lot before (I’m guessing that this happens if you have edits to a question, so a popular question might get edited long after it was asked & had answers).

The next thing I tried was to group all the questions together, then process them. Because I can’t do that in memory, I tried writing them to disk directly:

- /questions/44965/q

- /questions/44965/2388

The idea is that I can write them out to the file system, and then traverse the file system to gather all of them. The problem is that we are talking about over 32 million questions & answers, that isn’t really going to work for us using most normal file systems.

So I tried writing it all to a zip file, which was successful, but resulted in a zip file that was 21GB in size, and was very good in ensuring that nothing could open it before I run out of patience.

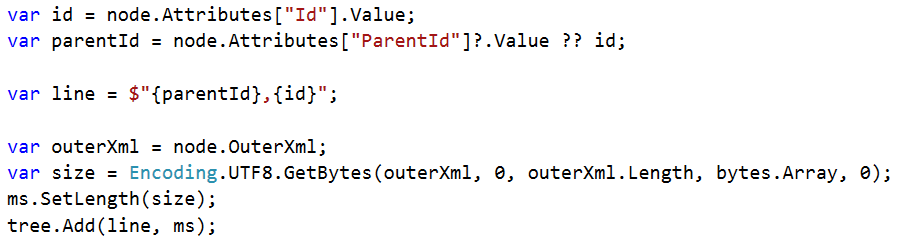

Eventually I just gave us and used Voron to handle it, here are the relevant sections:

Remember that Voron stores everything in a B+Tree, and allows to do ordered operations, so once this is done, all I need to do is traverse the tree, get all the records that have the same parent id, and voila, I have the grouping I wanted.

If I wanted to do it without the use of a database (or Voron), I would probably go with the following manner:

- Read each entry from the source data, and write it to another file, noting its location.

- Write “parent id, id, location” to a separate index file

- Sort the index file.

- Start reading from the index file as long as I have the same parent id, and merge those questions.

Why separate file? Because XmlReader does buffering, so it will be really hard to just in the file from just reading, by writing it out, we have the proper position to seek to.

Note that this will require a lot of random I/O, but that is pretty much just what it has to be.

Comments

It seems that You cannot use a Biztalk server :-)

The max message size is about 100MB and 1GB (routing only) https://msdn.microsoft.com/en-us/library/aa560481.aspx

I would have like to work with XSLT transforms again :-)

Carsten, Yes, typo :-) Hard to fix in an image, though. I actually double checked the number of periods you have there :-)

Carsten, I'm afraid to ask, but how would you do that in XSLT?

It might be done in XSLT because XSLT is Turing Complete https://en.wikipedia.org/wiki/XSLT

I think the process is like your solution. You might use XSLT to select and make the index files and later sort and merge. I guess that one template is too complicated, so you embed XSLT in a traditional programming language. According to my above Biztalk link the XML use a factor of 10 more storage in memory. I guess you need at least 500 GB internal memory to do a single transform.

I learned XSLT 16 years ago I was just wondering if BizTalk could solve this kind of problems today using some clever algoritms behind the scene in order to scale. It seems the focus is still on Business Documents.

No, editing a question does not change its id. What happened in that case is that a question was merged with an older question, which moves its answers. The revision history of the answer you linked to says so:

https://gist.github.com/wqweto/d4964ed6f3cd2106b4c73cd08109b753

Took 20 min on my 840 EVO drive to process latest Posts.xml and peaked at 3.5GB

Why not store them directly in ravendb if the parent doesn't exist generate a stub when the real one pops patch the stub

Tal, That would require us to make 32 million requests to RavenDB, since we can't really work with bulk insert when we need editing documents.

That would take too long. And the reason we wanted the data is to have a dump file in RavenDB format that we can test bulk load scenarios

It will take about 3 hours and it is a good benchmark for load and patch :)

Tal, Not the kind of test that we are aiming for here.

I have uploaded my XSLT-code here: https://github.com/cahan0506/xslt-Ayende-Challenge

I used the following link for online testing: http://www.freeformatter.com/xsl-transformer.html

I do the XSLT in 3 steps - (a) select and align nodes, (b) sort, (c) merge

I don't expect the solution to be fast or slim. There might be a market opportunity concerning XSLT-tranforms of big files. StackOverflow reports crash already from 60MB, so I am not going to try my solution on any big files.

Since XSLT is a declarative language, I will blame the people who wrote the XSLT query optimizer :-)

The "write one file per question" approach is a valid approach as long as you don't store too many files in a single directory. You should organize files on a directory tree structure. For example you could store question id 1234567 on \1\234\567.xml. I did a proof of concept: I generated 15 million small xml files following that schema, the files per second rate remained constant at about 1,850 files per second from the beginning to the end of the process.

I once had a problem with a lot of folders and files in Windows. NTFS uses a scalable B-Tree implementation but the problem is that Windows Explorer freezes.

Comment preview