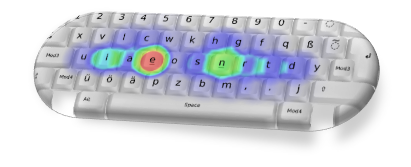

A customer asked for an interesting feature. Given two servers that need to replicate data between themselves, and the following network topology:

- The Red DB is on the internal network, it is able to connect to the Gray DB.

- The Gray DB is on the DMZ, it is not able to connect to the Red DB.

They are set up (with RavenDB) to replicate to one another. With RavenDB, this is done by each server opening a TCP connection to the other and sending all the updates this way.

Now, this is a simple example of a one-way network topology, but there are many other cases where two nodes need to talk to each other and only one of them is able to connect to the other. However, once a TCP connection is established, communication is bidirectional.

The customer asked if we could add a configuration to support reversing the communication mode for replication. Instead of the source server initiating the connection, the destination server will do that, and then the source server will use the already established TCP connection henceforth.

This works, at least in theory, but there are many subtle issues that you’ll need to deal with:

- This means that the source server (now confusingly the one that accepts requests) is not in control of sending the data. Conversely, it means that the destination side must always keep the connection open, retrying immediately if there was a failure and never getting a chance to actually idle. This is a minor concern.

- Security is another problem. Replication is usually set up by the admin on the source server, but now we have to set it up on both ends, and make sure that the destination server has the ability to connect to the source server. That might carry with it more permissions than we want to grant to the destination (such as the ability to modify data, not just get it).

- Configuration is now more complex, because replication has a bunch of options that need to be set. We now need to set these on the source server, then somehow have the destination server let the source know which replication configuration it is interested in. What happens if the configuration differs between the two nodes?

- Failover in a distributed system made of distributed systems is hard. So far we have talked about nodes, but this isn't actually the case. The Red and Gray DBs may be clusters in their own right, each composed of multiple independent nodes. When using replication in the usual manner, the source cluster will select a node to be in charge of the replication task, and this node will replicate the data to a node on the other side. This can have multiple failure modes: a source node can be down, a destination node can be down, etc. That is all handled today, but it would need to be handled anew for the other side.

- Concurrency is yet another issue. Replication is currently controlled by the source, so it can assume a certain sequence of operations. If the destination can initiate the connection, it can initiate multiple connections (or different destination nodes can open connections to the same or different source nodes at the same time). The result is a sequential code path that suddenly needs to deal with concurrency, locking, etc.

In short, even though it looks like a simple feature, the amount of complexity it brings is quite high.

Luckily for us, we don’t need to do all that. If what we want is just to have the connection be initiated by the other side, that is quite easy. Set things up the other way, at the TCP level. We’ll call our solution Routy, because it’s funny.

First, you’ll need a Routy service at the destination node. This will just open a TCP connection to the source node. Because this is initiated by the destination, this works fine. This TCP connection is not sent directly to the DB on the source. Instead, it is sent to the Routy service on the source, which will accept the connection and keep it open.

On the source side, you’ll configure the database to connect to the local Routy service instead of connecting to the destination directly. At this point, the Routy service on the source has two TCP connections: one that came from the source database and one that came from the remote Routy service on the destination. It will basically copy the data between the two sockets, and we’ll have a connection to the other side. On the destination side, the Routy service will start getting data from the source, at which point it will initiate its own connection to the database on the destination, ending up with two connections of its own that it can then tie together.

From the point of view of the databases, the source server initiated the connection and the destination server accepted it, as usual. From the point of view of the network, this is a TCP connection that came from the destination to the source, and then a bunch of connections locally on either end.
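To make the moving parts concrete, here is a minimal sketch of the two Routy halves in Python. Routy is not a real tool, so every name here (`pump`, `splice`, `source_side`, `destination_side`) is made up for illustration. The sketch handles a single connection and deliberately skips the retry and error handling a real service would need.

```python
import socket
import threading

CHUNK = 64 * 1024

def pump(src, dst):
    """Copy bytes from src to dst until src reaches EOF, then half-close dst."""
    try:
        while True:
            data = src.recv(CHUNK)
            if not data:
                break
            dst.sendall(data)
    except OSError:
        pass  # a real service would log this and decide whether to retry
    finally:
        try:
            dst.shutdown(socket.SHUT_WR)
        except OSError:
            pass

def splice(a, b):
    """Tie two connected sockets together until both directions are done."""
    worker = threading.Thread(target=pump, args=(a, b), daemon=True)
    worker.start()
    pump(b, a)
    worker.join()
    a.close()
    b.close()

def source_side(tunnel_listener, db_listener):
    """Source half: runs next to the source database."""
    tunnel, _ = tunnel_listener.accept()  # dialed by the destination Routy
    db, _ = db_listener.accept()          # dialed by the local source database
    splice(db, tunnel)

def destination_side(source_routy_addr, local_db_addr):
    """Destination half: runs next to the destination database."""
    tunnel = socket.create_connection(source_routy_addr)
    first = tunnel.recv(CHUNK)            # block until the source starts talking
    if not first:
        tunnel.close()
        return
    db = socket.create_connection(local_db_addr)
    db.sendall(first)                     # forward the bytes we already consumed
    splice(tunnel, db)
```

The source half takes two already-listening sockets, one for the tunnel and one for the local database. The destination half only ever dials out, which is the whole point: the only connection that crosses the network boundary is the one the destination opened.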

You can write such a Routy service in a day or so, although the error handling is probably the trickiest part. However, you probably don’t need to do that. This is called TCP reverse tunneling, and you can just use SSH to do that. There are also many other tools that can do the same.
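As a rough illustration of the SSH route, the snippet below builds the reverse tunnel command you would run on the destination machine. It relies on OpenSSH's `-R` (remote forward) and `-N` (no remote command) options. The host name, user, and port numbers are placeholders, not values from any real deployment, and `reverse_tunnel_command` is a hypothetical helper.

```python
import shlex

def reverse_tunnel_command(source_host, listen_port, db_port, user="tunnel"):
    """Build the ssh argv to run on the destination machine."""
    return [
        "ssh",
        "-N",  # forwarding only, do not run a remote command
        # Listen on listen_port on the source host and forward each
        # connection back to db_port on the destination's localhost.
        "-R", f"{listen_port}:localhost:{db_port}",
        f"{user}@{source_host}",
    ]

cmd = reverse_tunnel_command("source.example.com", 9090, 38888)
print(shlex.join(cmd))
# prints: ssh -N -R 9090:localhost:38888 tunnel@source.example.com
```

With this running, the source database would be pointed at `localhost:9090` on its own machine. By default OpenSSH binds the remote listener to the loopback interface only, which fits this setup. To launch it from Python you could pass `cmd` to `subprocess.run`.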

Oh, and you might want to talk to your network admin first. It is possible that this would be easier if they just changed the firewall settings. And if they won’t do that, remember that this is effectively doing the same thing, so there might be a good reason for the restriction.