This is a story about a bug that frustrated, annoyed, and nearly drove me mad. It also cost me ridiculous amount of time. The problem? The communication from the profiled application to the profiler with NH Prof is done using a Protocol Buffers network stream. The problem? It kept failing. Now, networks are unreliable, but they are not that unreliable, especially since all my tests are focused on local machines scenario.

I tried quite hard to create a very reliable system, but I was growing extremely frustrated, it failed, sometimes, in a very unpredictable manner, and in ways that looked like the entire idea is broken. To add insult to injury, any isolated test that I run worked perfectly. Out of ideas and nearly out of of my mind, I turned to the old adage: “If it doesn’t work, kick it, if it still doesn’t work, kicker it harder” and created a stress test that basically run several of the problematic tests in a loop until I could finally reproduce it in a more or less consistent fashion.

It was fairly clear that I am getting a lot of errors when reading the data, and for a while, I focused on that, thinking that I wasn’t handling the connection properly. But even after I did a lot of work on hardening the connection code, it still failed quite often. Now it didn’t fail on reads, it failed on missing data that the tests expected to be there.

After a while I started concentrating on the writing side, I changed the code to write the data to a file, rather than a network stream. At that point, I was pretty sure that my file system was working properly, and I intended to do analyses of the file as I load it. Imagine my surprise when I found out that the file, too, was corrupt. That made me abandon the reading-cause-the-error scenario, and cause a total focus on the writing part. After a while, I managed to get a clue, in the form of the following error:

System.IndexOutOfRangeException: Index was outside the bounds of the array.

at Google.ProtocolBuffers.CodedOutputStream.WriteRawByte(Byte value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 530

at Google.ProtocolBuffers.CodedOutputStream.WriteRawByte(UInt32 value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 534

at Google.ProtocolBuffers.CodedOutputStream.SlowWriteRawVarint32(UInt32 value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 464

at Google.ProtocolBuffers.CodedOutputStream.WriteRawVarint32(UInt32 value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 480

at Google.ProtocolBuffers.CodedOutputStream.WriteTag(Int32 fieldNumber, WireType type) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 458

at Google.ProtocolBuffers.CodedOutputStream.WriteMessage(Int32 fieldNumber, IMessage value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 220

at HibernatingRhinos.NHibernate.Profiler.Appender.Messages.LoggingEventMessage.Types.StackTraceInfo.WriteTo(CodedOutputStream output) in C:\NHProf\HibernatingRhinos.NHibernate.Profiler.Messages.cs:line 560

at Google.ProtocolBuffers.CodedOutputStream.WriteMessage(Int32 fieldNumber, IMessage value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 222

at HibernatingRhinos.NHibernate.Profiler.Appender.Messages.LoggingEventMessage.WriteTo(CodedOutputStream output) in C:\NHProf\HibernatingRhinos.NHibernate.Profiler.Messages.cs:line 843

at Google.ProtocolBuffers.CodedOutputStream.WriteMessage(Int32 fieldNumber, IMessage value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 222

at HibernatingRhinos.NHibernate.Profiler.Appender.Messages.MessageWrapper.WriteTo(CodedOutputStream output) in C:\NHProf\HibernatingRhinos.NHibernate.Profiler.Messages.cs:line 1830

at Google.ProtocolBuffers.CodedOutputStream.WriteMessage(Int32 fieldNumber, IMessage value) in C:\OSS\Buffy\ProtocolBuffers\CodedOutputStream.cs:line 222

at Google.ProtocolBuffers.MessageStreamWriter`1.Write(T message) in C:\OSS\Buffy\ProtocolBuffers\MessageStreamWriter.cs:line 26

at HibernatingRhinos.NHibernate.Profiler.Appender.NHibernateProfilerAppender.WriteToProfilerWithRetries(IEnumerable`1 wrappers, Int32 retryCount) in C:\NHProf\NHibernateProfilerAppender.cs:line 150

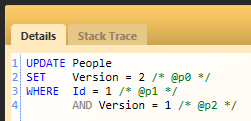

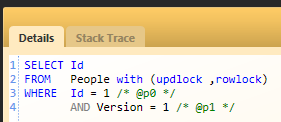

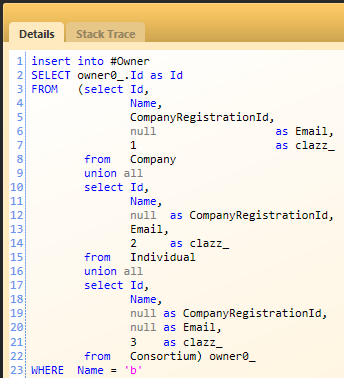

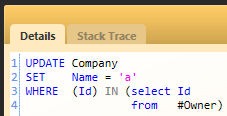

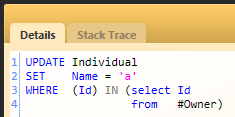

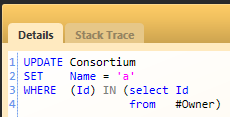

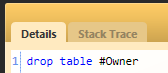

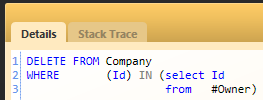

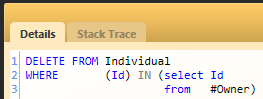

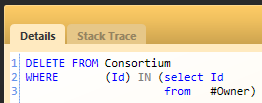

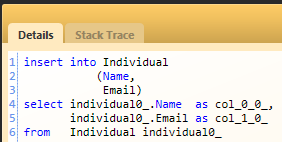

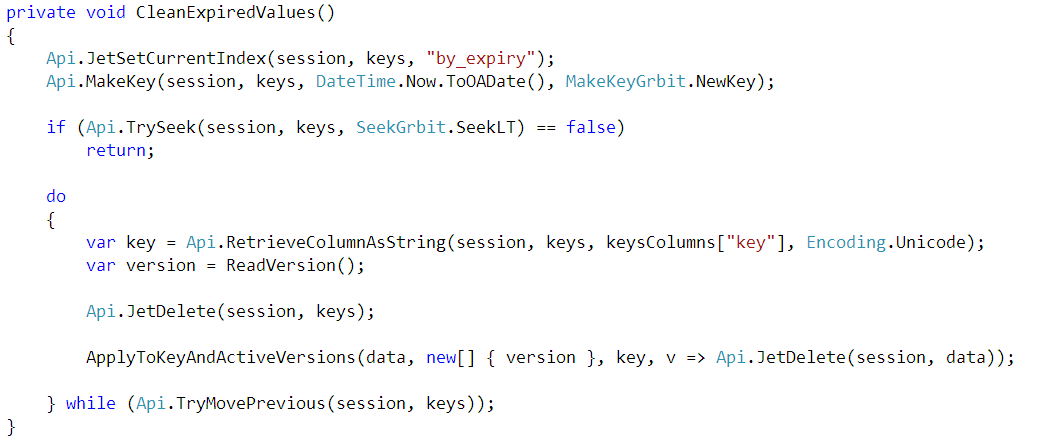

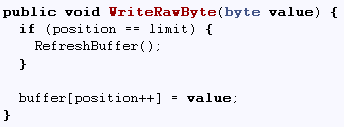

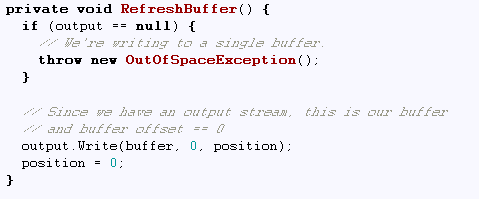

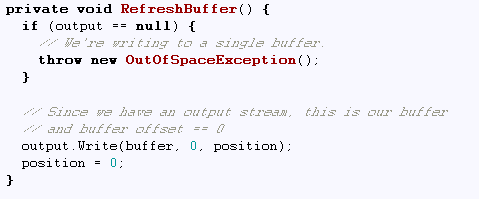

Ah ha! I thought, I know what is wrong, "select() is broken”. With that in mind, I looked at the relevant source file, and tried to figure out what the bug was. Here are the relevant sections:

Looking at that piece of code, it was very clear where the error was. Do you see it?

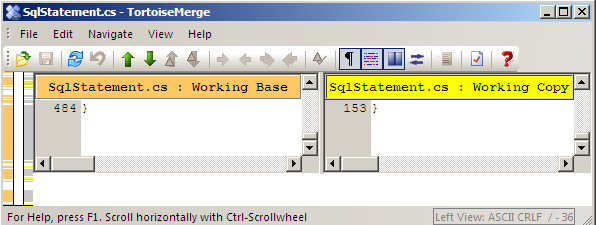

I won’t blame you if you don’t, the error is clear because it is not there. This is perfectly legal code, with no option for errors. Since we did get an error, it is clear that something is broken. And the problem is at a higher level. Usually, in such scenarios, it is a race condition that cause a violation of invariants. And that is the case here. Looking at the code, it was clear what the problem was, I was synchronizing access to the stream, but I wasn’t synchronizing access to the writer.

In this case, we actually got a real error, that pointed me very quickly to the real issue. In most scenarios, what actually happened was silent data corruption at the write end, which I interpreted as problems with the use of the unreliable network.

Again, yuck, and I feel really stupid.