NHibernate – Coarse Grained Locks

One of the challenges that DDD advocates face when using an OR/M is the use of Coarse Grained Locks on Aggregate Roots. I’ll leave the discussion of why you would want to do that to Fowler and Evans, but it seems that a lot of people run into a lot of problems with this.

It is actually not complicated at all. All you have to do is call:

session.Lock(person, LockMode.Force);

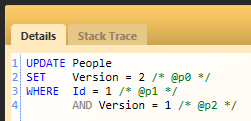

This will make NHibernate issue the following statement:

This allows us to update the version of the aggregate even if it wasn’t actually changed.

Of course, if you want a physical lock, you can do that as well:

session.Lock(person, LockMode.Upgrade);

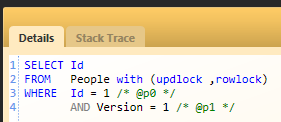

Which would result in:

Pretty easy, even if I say so myself.

Comments

OK, but what the guy was asking for is to mark the aggregate root as dirty when one of its children becomes dirty (automatically?)... Even so, I think the guy will be happy to find an answer after almost a year.

The linked guy says that without root locking in ORM you can only write toy projects, not real world applications. I don't agree at all:

I have written several real-world applications on top of an ORM and without explicit locking.

You don't have to use an ORM for root-level locking. Actually, db locks are useless for scenarios involving user interaction. User interactions can last very long and span several database transactions, and you don't want to hold a db lock for that long.

Ayende, the session.Lock functionality is useful for eager locking or for enforcing the order of record locking to avoid deadlocks, but it won't solve the problem of a guy who wants to lock the document during modification so other users cannot modify it simultaneously. So even if NH supports db record locking, it's necessary to develop some other application-level locking mechanism.

I don't see that this solves the real problem, the idea with a coarse grained lock is you are locking the entire aggregate which might involve multiple tables, and as far as I know NHibernate doesn't take account of this.

It doesn't need to.

If you lock the aggregate, you lock the entire thing, you don't have to lock each individual part.

Hey guys, I'm 'the linked guy' ;) lol

Just to clarify my thoughts on the subject:

I know about the 'session.Lock' trick. It's acceptable as long as you don't forget to force a version increment whenever an object is modified within the aggregate. This is still a manual, error-prone implementation, I think.

I wasn't talking about pessimistic locking but about optimistic locking. IMHO, you can apply a coarse-grained lock using NHibernate by simply using optimistic locking on the entity acting as aggregate root ... (most of the time) no optimistic locking is needed on other entities inside the aggregate ... as long as your ORM always increments the version of the aggregate root whenever there is a change anywhere in the aggregate.

What I had tried was to configure 'cascades' from the aggregate root to child entities (top-to-bottom) but also from the child entities back to the aggregate root (bottom-to-top) ... but NHibernate didn't update the version in the root if there was only a change in a child entity. It would be nice if this could work, as it would allow us to define coarse-grained locks in the mapping directly.

What do you think?
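A minimal sketch of what optimistic locking on the root alone buys you, assuming every change inside the aggregate commits through the root's version. It does not use NHibernate: `RootVersionTable` and `AggregateEdit` are hypothetical in-memory stand-ins, and the SQL in the comment only indicates the kind of versioned update an ORM would typically issue.

```csharp
using System;
using System.Collections.Generic;

public class StaleAggregateException : Exception
{
    public StaleAggregateException(string message) : base(message) { }
}

// Hypothetical stand-in for the root's table: only the version column matters here.
public class RootVersionTable
{
    private readonly Dictionary<int, int> versions = new Dictionary<int, int>();

    public void Insert(int rootId) { versions[rootId] = 1; }

    public int Read(int rootId) { return versions[rootId]; }

    // Mimics a versioned update such as:
    //   UPDATE Root SET Version = Version + 1 WHERE Id = @id AND Version = @expected
    public int BumpVersion(int rootId, int expectedVersion)
    {
        if (versions[rootId] != expectedVersion)
            throw new StaleAggregateException("Root " + rootId + " was changed by someone else");
        versions[rootId] = expectedVersion + 1;
        return versions[rootId];
    }
}

// Hypothetical unit of work: it may touch any child, but it always
// commits through the version the root had when the aggregate was loaded.
public class AggregateEdit
{
    private readonly RootVersionTable table;
    private readonly int rootId;
    private readonly int versionAtLoad;

    public AggregateEdit(RootVersionTable table, int rootId)
    {
        this.table = table;
        this.rootId = rootId;
        this.versionAtLoad = table.Read(rootId);
    }

    public int Commit()
    {
        return table.BumpVersion(rootId, versionAtLoad);
    }
}
```

If two `AggregateEdit` instances are created against the same root before either commits, the first `Commit()` moves the root from version 1 to 2 and the second throws `StaleAggregateException`, even when the two edits touched different children. That is the coarse-grained behaviour being asked for here.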

@ayende

Sorry I get you, you are suggesting any time you change an object in the aggregate you increment the version of the aggregate root.

Yes.

@ayende

Ahh ok, I think people would find it useful if it was supported without needing the back references to get to the aggregate root.

Colin,

DDD says that you always go through the root when you want to do something, so that is not a problem.

@ayende

"Transient references to the internal members can be passed out for use within a single operation only. Because the root controls access, it cannot be blindsided by changes to the internals. This arrangement makes it practical to enforce all invariants for objects in the Aggregate and for the Aggregate as a whole in any state change."

So it's possible to hold a non-root reference and change its state, or cause its state to change.

Colin,

Precisely, you have to get the root anyway, so at that time, mark it.

@ayende DRY

Also, it's a really nasty bug in production if you happen to forget to put that lock in.

How can you forget it? It is part of the LoadForModification method

what LoadForModification method?

What you call when you want to load an aggregate with the intention to modify it or its children. :-)
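A rough sketch of such a method, under stated assumptions. `IAggregateSession`, `AggregateRepository` and `InMemorySession` are hypothetical names introduced here so the example runs without a database; with NHibernate, the forced increment would be the `session.Lock(root, LockMode.Force)` call from the post.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical seam over the ORM session, so the sketch runs without a database.
public interface IAggregateSession
{
    T Get<T>(object id) where T : class;
    void ForceVersionIncrement(object entity);
}

public class Person
{
    public int Id { get; set; }
    public int Version { get; set; }
}

// Hypothetical repository: the single entry point for write use cases.
public class AggregateRepository
{
    private readonly IAggregateSession session;

    public AggregateRepository(IAggregateSession session)
    {
        this.session = session;
    }

    // Loads the root and marks it as changed in one step, so callers
    // that intend to modify the aggregate cannot skip the version bump.
    public T LoadForModification<T>(object id) where T : class
    {
        var root = session.Get<T>(id);
        if (root == null)
            throw new InvalidOperationException("No aggregate with id " + id);

        // With NHibernate this is where session.Lock(root, LockMode.Force) would go.
        session.ForceVersionIncrement(root);
        return root;
    }
}

// In-memory stand-in, used only to exercise the sketch.
public class InMemorySession : IAggregateSession
{
    private readonly Dictionary<object, object> store = new Dictionary<object, object>();

    public void Add(object id, object entity) { store[id] = entity; }

    public T Get<T>(object id) where T : class
    {
        object entity;
        return store.TryGetValue(id, out entity) ? entity as T : null;
    }

    public void ForceVersionIncrement(object entity)
    {
        var person = entity as Person;
        if (person != null) person.Version++;
    }
}
```

The point of the design is that the version bump lives in exactly one place. It does nothing for code that obtains the root some other way.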

you just moved the problem...

what happens now when someone calls just GetById() and starts modifying it? Do we now build in some mechanism that forces the aggregate and all of its children to be readonly?

also if I have an object can I then upgrade it to a writable version?

No, I did not.

You can't protect people from doing stupid stuff, so I don't try.

Think about the implications of trying to do something like that in any other way, and you'll see the problem (mostly select N^2+1)

Build a proxy that holds a pointer to the root (or even a proxy of the root).

In that proxy build interceptors that automatically dirty the aggregate root.
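One way this idea could look, as a sketch only. It assumes a modern .NET runtime where `System.Reflection.DispatchProxy` is available, children exposed through interfaces, and a deliberately naive rule that any property setter counts as a mutation. `IAggregateRoot`, `IOrderLine`, `Order`, `OrderLine` and `RootDirtyingProxy<T>` are hypothetical names, and wiring such a proxy into NHibernate's own proxying is not shown.

```csharp
using System;
using System.Reflection;

public interface IAggregateRoot
{
    bool IsDirty { get; }
    void MarkDirty();
}

public interface IOrderLine
{
    int Quantity { get; set; }
}

public class OrderLine : IOrderLine
{
    public int Quantity { get; set; }
}

public class Order : IAggregateRoot
{
    public bool IsDirty { get; private set; }
    public void MarkDirty() { IsDirty = true; }
}

// Wraps a child object and holds a pointer to its root. Every call goes
// through Invoke, which dirties the root before forwarding a setter call.
public class RootDirtyingProxy<T> : DispatchProxy where T : class
{
    private T target;
    private IAggregateRoot root;

    public static T Wrap(T target, IAggregateRoot root)
    {
        // DispatchProxy.Create builds a type that implements T and derives from this class.
        object proxy = Create<T, RootDirtyingProxy<T>>();
        var typed = (RootDirtyingProxy<T>)proxy;
        typed.target = target;
        typed.root = root;
        return (T)proxy;
    }

    protected override object Invoke(MethodInfo targetMethod, object[] args)
    {
        // Naive mutation rule (an assumption): property setters change state.
        if (targetMethod.IsSpecialName &&
            targetMethod.Name.StartsWith("set_", StringComparison.Ordinal))
        {
            root.MarkDirty();
        }
        return targetMethod.Invoke(target, args);
    }
}
```

With `IOrderLine line = RootDirtyingProxy<IOrderLine>.Wrap(new OrderLine(), order);`, reading `line.Quantity` leaves `order.IsDirty` false, while assigning to it sets `order.IsDirty` to true. Methods that mutate state without being setters are not caught by this rule.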

btw: If you are requiring people to actually take actions on such cross cutting concerns you really better have a good way of detecting/handling production failures.

Developers (even good ones) will forget something like this, especially given the subtlety of it. The code will appear to work without, in some cases the code will always work without until some other developer comes and adds some code that now suddenly causes the versioning to be needed (eg an operation that spans objects within the root).

It's a cop-out to say "I don't protect people from doing stupid stuff". By the same logic I could ask: why don't you update versions on objects in code all the time?

The issue here is of protecting against Murphy vs. protecting against Machiavelli...

Not at all (and I saw where you got that quote from). When the cost of Murphy is huge it is irresponsible to let it happen.

There is no valid reason not to do this automatically. I imagine the interceptors/proxy could be written in < 1 day. I will do it when I have time and/or a pressing need in actual work.

The problem with your way is that we can't rationalize that it's actually being done. If we get your way to the point that we could actually rationalize about it, we have a code explosion in all of our domain objects (how do we know that code was written?).

More in general when I brought up this issue originally it was because there are lots of people "doing DDD" with nhibernate already who were putting themselves into a lot of trouble (that they didn't see) because they were not properly handling aggregate root consistency. The symptoms of these problems would be occasionally finding invalid aggregate roots in the database .... not cool (especially when you load objects 100 times more often to interact with them than to change/display their data).

Greg,

a) Yes, I know you do. And you also saw my reaction to that. It is a great way to summarize how I think about such things.

b) As for no valid way, there is a very simple reason, it _makes things hard_. Moreover, it is an open case for SELECT N+1 and the Law of Unintended Consequences.

c) that is a bug, your point?

b) What select n+1 problem? You seem so certain there is one. I have already said there is not (and explained how I would do it), but you keep saying there is. Perhaps you should explain why you believe this?

c) Perhaps you just work on systems where failures like those are acceptable. I mean, hey, a developer just goes back and fixes the bug, right? I have worked on many systems where they were not. I worked on one where any such failure would result in a $100,000 penalty to the corporation running the system.

Let's say we have 1000 writing cases ... we only need a .1% failure rate to have a sneaky bug that will later cost us $100,000 ... more likely we will have an order of magnitude more, knocking us down to .01% failure (you don't think humans will tend to fail, even at something they are good at, 1 time in 10,000?). What makes this type of bug particularly bad is that it will likely pass all of your QA testing (even stress testing) and later rear its head in production, because it is a statistically improbable event.

b) How are you going to walk back to the root aggregate without causing this problem? Especially when you have deep graphs. Especially if you try to do this in an automated fashion.

c) Um, not really. It is much easier to work on systems where bugs are not critical, but I also worked on systems where the main business of the system was monetary transactions.

Having a bug there is... bad, you might say.

And the way you ensure that your bugs are low is by setting up code standards, code reviews, unit testing, etc.

As for the math that you do. Need I remind you of NASA's bugs?

It doesn't really matter what QA process you have, or how strict you try to be. Bugs will still be there.

b) Remember we are talking about DDD here. How did you get to those objects? There is no issue.

@Ayende those are ... expensive ways of ensuring there are no bugs. The easy way of ensuring there are no bugs is to not let bugs happen. Given 10,000 writing use cases, which do you think will be cheaper for ensuring quality: my way, which doesn't allow problems to happen, or your way, where the only way to find them is code reviews after every change?

b) Then if you got the object _anyway_, I fail to see the issue.

LoadForModification will do it for you, simple.

re: bugs - I still don't see your point, show me the problem in LoadForModification.

If you don't use that, it is a bug, it will be caught easily & early by any number of ways.

Remember, Murphy vs Machiavelli

We are having the discussion of allowing bugs and relying on other mechanisms to find them, or disallowing them completely.

This is an architectural discussion. You hold the proposition that in this case we should always go the route of code reviews etc to find the bugs. What I am saying is that this is not the case, there are many situations where the code review etc process is too risky and/or expensive to use.

An easier way of looking at this would be by applying a stereotypical ROI equation to it.

What is our investment? About 1-2 days of time (guessing); we can call it an M using normal sizing.

What is our return? Not having to catch all of this information in a code review.

What is the cost of doing it? Let's say 40 hours * programmer cost.

What is the probability of a failure without it? Let's say .01%.

What is the cost of a failure?

The math from there is pretty simple and you will find for an extraordinary number of applications it makes fiscal sense to implement in an automatic fashion.
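Worked through with the figures already quoted in this thread (10,000 writing use cases, a .01% chance per use case of forgetting the lock, a $100,000 penalty per failure, 40 hours of implementation), the arithmetic might look like the following. Treating every forgotten lock as exactly one penalty is a simplifying assumption, and the hourly rate $r$ is left as a variable because no rate is given.

$$
\begin{aligned}
E[\text{loss}] &= N \cdot p \cdot C_f = 10{,}000 \times 0.0001 \times \$100{,}000 = \$100{,}000 \\
C_{\text{auto}} &= 40\,\text{h} \times r \\
C_{\text{auto}} < E[\text{loss}] &\iff r < \frac{\$100{,}000}{40\,\text{h}} = \$2{,}500/\text{h}
\end{aligned}
$$

Under these assumptions the automatic approach pays for itself at any hourly rate below $2,500. The calculation leaves out any ongoing cost of living with the automatic mechanism.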

Greg

Greg,

You are making a mistake by trying to make this into a general statement vs. a unique case.

I am talking here specifically about coarse grain locking, and as I said, I think that the way to do that would be to provide a LoadForModification method for that purpose. Since we have this method, I fail to see how you could get an error.

If your domain is write only, then you are done.

If your domain is read/write, then you need to have other options as well (LoadForReading), which may return immutable instances.

I used the general ROI logic in order to show the failure in your ideas. Are you suggesting that ROI does not apply to coarse grain locking? I believe you would be taking the burden of proof to show why it doesn't apply as it applies to pretty much any other feature we are adding.

Even if your domain is write only you would still need LoadForReading(). I have also already explained the immense cost in creating such a thing as it would leak to every domain object. Seriously go actually try your suggestion.

Anyways the conversation has come back in a circle to what I already predicted you would say and already argued against. There is no point in continuing.

Greg,

I honestly can't figure out where you are going with that.

I disagree with your math in general because you are ignoring a very real cost of the impact of the rigidity of the structure, but that is beside the point.

About coarse grain locking, if you want to enforce this distinction, all you need to do is offer just one approach that forces you to do that.

And no, it won't impact any domain object

The 'rigidity of the structure' because I use a proxy to automatically dirty the root for me as opposed to doing it by hand? As opposed to the rigidity of you making a programmer remember to dirty things manually? Are you serious? The only rigidity might be that you are following the aggregate root pattern, but if you aren't, this would be a bit of a moot discussion, no?

re 'And no, it won't impact any domain object ' :

You said that you want a readonly version of the domain object. How on earth do you intend to do this without something in the domain object to at least say what is mutating state? There are quite often methods on domain objects that do not mutate state and are perfectly valid to call in a readonly state; consider the double dispatch pattern.

You are confusing two issues.

a) the general architecture principle. Most of the time when this is applied, it enforces heavyweight constraints on the dev & architecture. I have seen it time & again limiting choices and making things harder than they should be

b) readonly domain objects are very simple, you don't save them to the DB, hence they are read only, they can't affect anything except the current request.

"a) the general architecture principle. Most of the time when this is applied, it enforces heavyweight constraints on the dev & architecture. I have seen it time & again limiting choices and making things harder than they should be"

Dude seriously WTF are you talking about? There is less complexity in having aggregate roots automatically dirty than having to sprinkle calls throughout my code to dirty the aggregate root manually, period!

The only 'constraints' of doing this automatically are that you have to follow the aggregate root pattern ... but then again the people who want this are already doing that, so there are NO ADDITIONAL CONSTRAINTS. Are there additional constraints vs using a typical nhibernate 'model' that is just a bunch of bi-directional relationships from everything to everything? Sure, but the people who want this have already taken those constraints upon themselves. Automatically dirtying the aggregate root does not add any new constraints.

"b) readonly domain objects are very simple, you don't save them to the DB, hence they are read only, they can't affect anything except the current request."

So it's fine that they can be mutated during a request even though they are readonly? Wow, that could lead to some 'interesting' situations.

I have dealt with many systems that make 'decisions' like this and I can tell you it's much easier to ensure that things cannot happen than to try to figure out the giant ball of spaghetti when they do.

If you want to continue this you can feel free to email me, but it's a waste of my time to continue here.

You are purposefully trying to twist what I am saying. I never said that I want to sprinkle stuff all over the code to manage that manually.

As for the complexity, I suggest trying to implement that on the types of models that NHibernate supports, you will quickly see the problem.

You say so now, but trying to track references which may or may not be bidirectional, query only, or queryable through multiple paths is not a trivial task. And that is even assuming that you limit yourself to very simple domain model approaches.

And I can tell you the same, I have seen such attempts made many times, with horrendous results for the project.

Trying to ensure that things can't go wrong is a losing proposition. I prefer to make sure that the easiest thing is the right thing, not the only thing.
