I recently had a fascinating support call, talking about how to optimize a very big model and an access pattern that basically required to have the entire model in memory for performing certain operations.

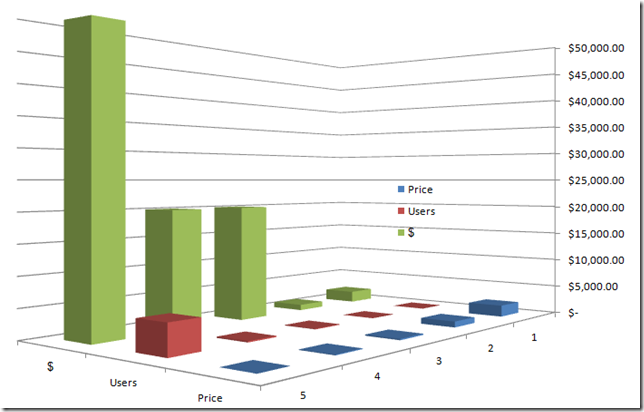

A pleasant surprise was that it wasn’t horrible (when I get called, there is usually a mess), which is what made things interesting. In the space of two hours, we managed to:

- Reduced number of queries by 90%.

- Reduced size of queries by 52%.

- Increased responsiveness by 60%, even for data set an order of magnitude.

My default answer whenever I am asked when to use NHibernate is: Whenever you use a relational database.

My strong recommendation at the end of that support call? Don’t use a relational DB for what you are doing.

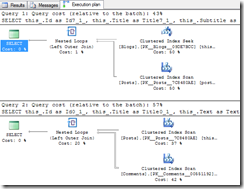

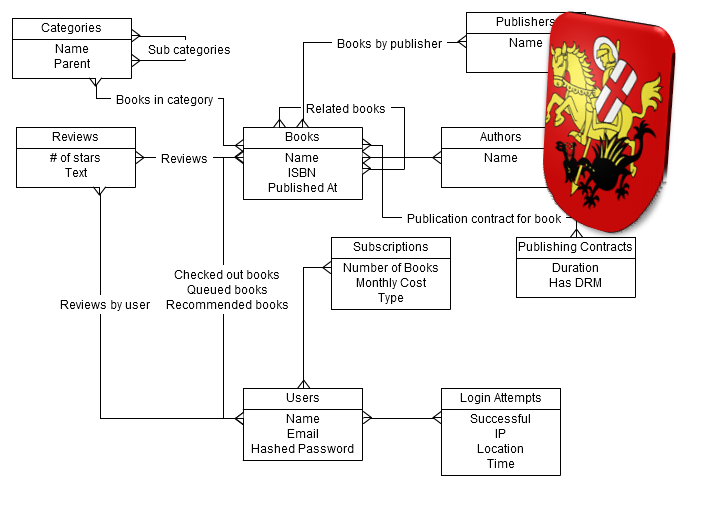

The ERD just below has absolutely nothing to do with the support call, but hopefully it will help make the example. Note that I dropped some of the association tables, to make it simpler.

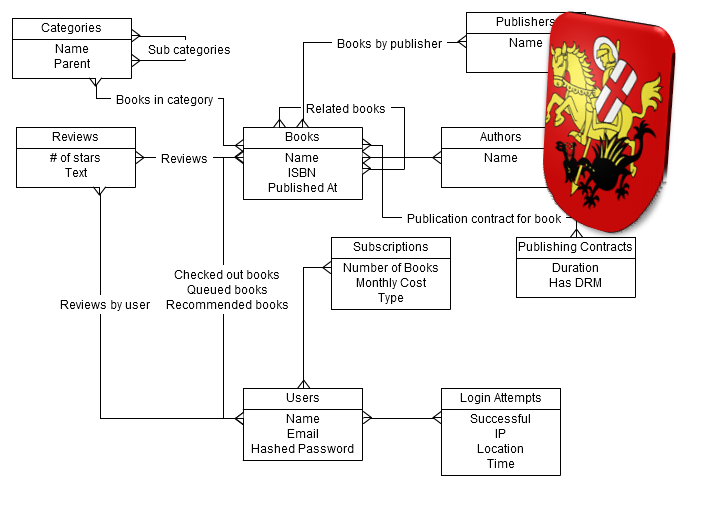

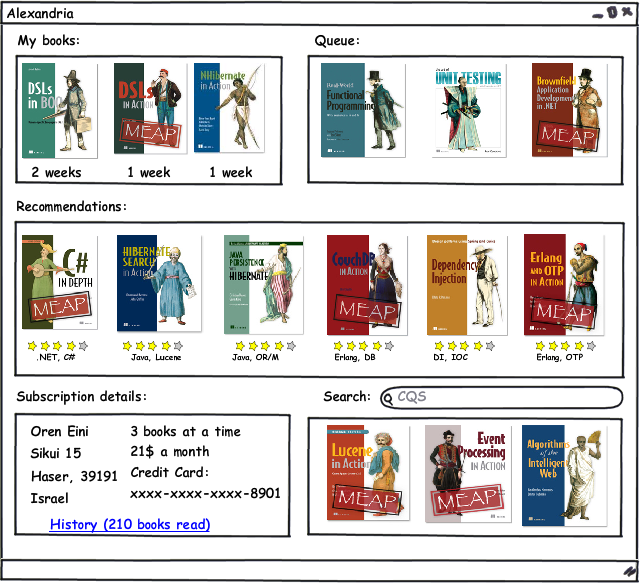

And the scenario we have to deal with is this one:

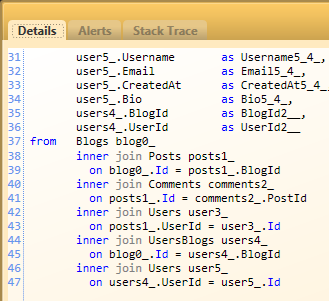

Every single table in the ERD is touched by this screen. Using a relational database, I would need something like the following to get all this data:

SELECT *

FROM Users

WHERE Id = @UserID

SELECT *

FROM Subscriptions

WHERE UserId = @UserId

AND GETDATE() BETWEEN StartDate AND EndDate

SELECT MIN(CheckedBooks.CheckedAt),

Books.Name,

Books.ImageUrl,

AVG(Reviews.NumberOfStars),

GROUP_CONCAT(', ',Authors.Name),

GROUP_CONCAT(', ',Categories.Name)

FROM CheckedBooks

JOIN Books

ON BookId

JOIN BookToAuthors

ON BookId

JOIN Authors

ON AuthorId

JOIN Reviews

ON BookId

JOIN BooksCategories

ON BookId

JOIN Categories

ON CategoryId

WHERE CheckedBooks.UserId = @UserId

GROUP BY BookId

SELECT Books.Name,

Books.ImageUrl,

AVG(Reviews.NumberOfStars),

GROUP_CONCAT(', ',Authors.Name),

GROUP_CONCAT(', ',Categories.Name)

FROM Books

JOIN BookToAuthors

ON BookId

JOIN Authors

ON AuthorId

JOIN Reviews

ON BookId

JOIN BooksCategories

ON BookId

JOIN Categories

ON CategoryId

WHERE BookId IN (SELECT BookID

FROM QueuedBooks

WHERE UserId = @UserId)

GROUP BY BookId

SELECT Books.Name,

Books.ImageUrl,

AVG(Reviews.NumberOfStars),

GROUP_CONCAT(', ',Authors.Name),

GROUP_CONCAT(', ',Categories.Name)

FROM Books

JOIN BookToAuthors

ON BookId

JOIN Authors

ON AuthorId

JOIN Reviews

ON BookId

JOIN BooksCategories

ON BookId

JOIN Categories

ON CategoryId

WHERE BookId IN (SELECT BookID

FROM RecommendedBooks

WHERE UserId = @UserId)

GROUP BY BookId

SELECT Books.Name,

Books.ImageUrl,

AVG(Reviews.NumberOfStars),

GROUP_CONCAT(', ',Authors.Name),

GROUP_CONCAT(', ',Categories.Name)

FROM Books

JOIN BookToAuthors

ON BookId

JOIN Authors

ON AuthorId

JOIN Reviews

ON BookId

JOIN BooksCategories

ON BookId

JOIN Categories

ON CategoryId

WHERE Books.Name LIKE @search

OR Categories.Name LIKE @search

OR Reviews.Review LIKE @search

GROUP BY BookId

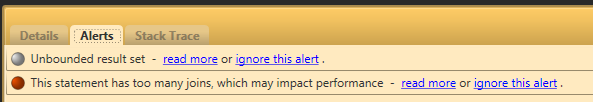

Yes, this is a fairly simplistic approach, without de-normalization, and I would never perform searches in this manner, but… notice how complex things are getting. For bonus points, look at the forth query, the queued books are ordered, try to figure out how we can get the order in a meaningful way. I shudder to thing about the execution plan of this set of queries. Even if we ignore the last one that does full text searching in the slowest possible way. And this is just for bringing the data for a single screen, assuming that magically it will show up (you need to do a lot of manipulation at the app level to make this happen).

The problem is simple, our data access pattern and the data storage technology that we use are at odds with one another. While relational modeling dictate normalization, our actual data usage means that we don’t really deal with a single-row entity, with relatively rare access to associations, which is the best case for OLTP. Nor are we dealing with set based logic, which is the best case for OLAP / Relational based queries.

Instead, we are dealing an aggregate that spans multiple tables, mostly because we have no other way to express lists and many to many associations in a relational database.

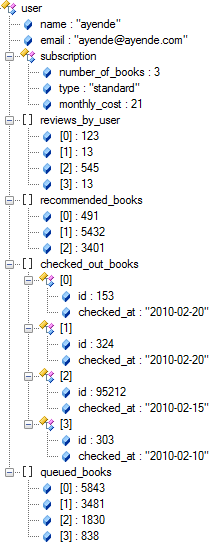

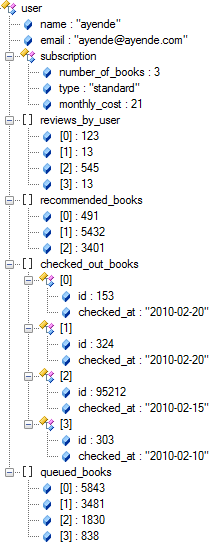

Let us see how we could handle things if we were using a document or key/value database. We would have two aggregates, User and Book.

GetUser(userId) –> would result in:

We can now issue another query, to bring the associated books. GetBooks(153, 1337) would result in:

Note that the entire data structure is different, we haven’t just copied the normalized relational model, we have a totally different model. An aggregate (similar to DDD’s aggregate) is a single entity that contains anything except other aggregates. References to other aggregates are allowed (from user to all the books), but most of the entity’s data is stored as a single value.

That has several interesting implications. First, we need two queries to get the data for the screen. One to get the user’s data, and the second to get the books that we need to display. Reducing remote calls is something that you really care about, and simplifying the queries to mere query by ids is going to have a significant effect as well.

By changing the data storage technology, we also enforced a very rigid aggregate boundary. Transactions becomes much simpler as well, since most transactions will now modify only a single aggregate, which is a single operation, no matter how many actual operations we perform on that aggregate. And by tailoring the data structure that we use to match our needs, we have natural aggregate boundaries.

The end result is a far simpler method of working with the data. It may mean that we have to do more work upfront, but look at the type of work we would have to do in order to try to solve our problems using the relational model. I know what model I would want for this sort of a problem.