Fun with a non relational databases

My post showing a different approach for handling data got a lot of traffic, and a lot of good comments. But I think that there is some misunderstanding with regards to the capabilities of NoSQL databases, so I am going to try to expand on those capabilities in this post.

Instead of hand waving, and since I am thinking about this a lot lately, we will assume that we are talking about DivanDB (unreleased version), which has the following API:

- JsonDoc[] Get(params Id[] ids);

- Set(params JsonDoc[] docs);

- JsonDoc[] Query(string indexName, string query);

DivanDB is a simple Document database, storing documents as Json, and using Lucene as a indexer for the data. An index is defined by specifying a function that creates it:

var booksByAuthor = from doc in documents

where doc.type == “book”

from author in doc.authors

select new { author };

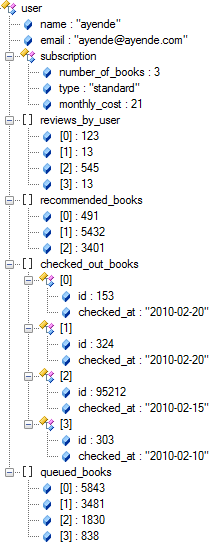

And the data looks like this:

Indexing is done at write time, you can think about those indexes as materialized views.

It appears that people assumes that just because you aren’t using an RDBMS, you can’t use queries. Here are a few options to show how you can do so.

Books by author:

Query(“booksByAuthor”, “author:weber”);

Books by category:

Query(“booksByCategory”, “category:scifi”);

How is the books by category index defined? I think you can guess.

var booksByCategory = from doc in documents

where doc.type == “book”

from category in doc.categories

select new { category };

What other queries did people brought up?

Who has book X in their queue?

Query(“usersByQueuedBooks”, “book:41”);

And the view?

var usersByQueuedBooks = from doc in documents

where doc.type == "user"

from book in doc.queues_books

select new { book };

I’ll leave the implementation of Who has book X checked out as an exercise for the reader.

What about deletes?

Using this system, it looks like deletes might be really expensive, right? Well, that depends on what exactly you want here.

My default approach would be to consider exactly what you want, as Udi pointed out, in the real world, you don’t delete.

But it is actually fairly easy to support something like this cheaply. It is all about defining the association and letting the DB handle this (although I am not fond of the syntax I came up with):

var books = from book in documents

where book.type == "book"

select new { book = book.id };

var checkedOutBooks = from user in documents

where user.type = "user"

from book in user.checked_out

select new { book };var queuedBooks = from user in documents

where user.type = "user"

from book in user.queued_books

select new { book };

FK_DisallowDeletion(books, checkoutBooks);

FK_RemoveElement(books, queuedBooks);

Under the cover this creates indexers and can check out those at delete / insert time.

However, I would probably not implement this for Rhino DivanDB, mostly because I agree with Udi.

Comments

How does the query interface handles paging, ordering and aggregation?

Getting N - M books matching a query?

Getting N top rated products under categories that are direct or indirect child of category X?

Getting books with more than 1 author?

Hi Ayende,

I see that the read operations use a JsonDoc entity...

What about serializing/deserializing the json docs into .NET entities?

Are you leaving this out because of performance concerns?

Javi

Bunter,

Paging is implemented in overload for Query.

Query(“booksByAuthor”, “author:weber”, N, M);

Ordering for paging is something that I need to think about, probably something like:

Query(“booksByAuthor”, “author:weber”, N, M, function(x,y) {

});

Aggregation isn't supported, because it isn't something that you can do in a doc db. Well, you can, but it provides interesting challenges and stops working when you try to think about distributed db.

Since you can't really do aggregation in a distributed fashion easily, I might not do it.

I actually have a couple of interesting ideas about how to implement this, though.

Why Json? Because I am thinking about very low level API, not user facing ones.

There are two options, the easy one is to include the entire category hierarchy in the book.

The idea is that it means that I can pull the entire list in a single request, vs. having to do hierarchical stuff.

In that is the case, you would need a view like:

var booksByCategory = from doc in docs

Query("booksByCategory", "name:foo");

Another approach, which I am not overly fond of, is to support hierarchy in the view generation, something like (highly tentative):

var booksByCategory = from doc in docs.include_matching(d=>d.category && d.type == "book")

Which would build the same index, but without requiring the book to carry all the hierarchy, but since you generally need the entire hierarchy anyway, it doesn't really make sense not to have it.

Final option is to generate the categories key as:

1.2 Books > Sci Fi

1.2.3 Books > Sci Fi > Military

And in this case you can do this sort of queries very easily because of the nature of the queries.

I read Udi's article and agree with his example, however does that really extend to never delete anything?

Surely it means never delete anything that is not really deleted, but merely changed state (as in Udi's examples.) Sometimes Delete really does make sense. A poor example I have now is, "What if I add an item to be persisted that I realise later shouldn't exist." A pretty poor example I admit... I will try to think of a better one...

I am quite excited at the prospect of using a .Net based object graph database, and this project seems the closest so far to my needs. I would definitely vote in favour of implementing delete!

Ok, you don't delete but you mark objects with 'bad' or 'not actual anymore' marks. Anyway, you have to fix the indexes...

I must admit, I would really like to see this project work out to be a good, in-process document database for small to mid-size applications.

However, my question is this: Is your end goal to "compete" in the same space as CouchDB or MongoDB? Or is this more an experiment that might yield a useful tool?

Hi Ayende,

The first thing that popped in my head was: What if you only want to show a list of books with the average user rating? Let's say you have a fairly large number of books, and some books with a lot of reviews/ratings. To show a listing you wouldn't necessarily want to fetch all those books with all of their reviews. That's a lot of overhead and data you wouldn't use in that view.

The second thing I thought was: Cool, even uses Lucene. Very nice.

-josh

I think anonymous types might be a bit more nice to look at as far as the query API.

@Josh,

As Oren said Aggregation isn't supported nor is it a problem that I believe he's trying to solve. In the case of the average user rating, I think you would fire off an event/message/command to the system when a user provided a rating on a book. The interface responds saying thanks for you feedback. Meanwhile the system queues the event and processes it computing the new average based on the new data. This new aggregate value is stored in a data structure for queries that need that use that average. That value may be stored in the doc db with the book and as such it can be queried using lucene. Or it might be stored in a sql table that has book ids and averages, it doesn't really matter.

The main problem people usually have is that the users feedback is not instantly available in the view. And to paraphrase Udi "So What?". it will be soon enough and the user who provided the feedback isn't waiting on the interface while this new aggregate is calculated.

Some will say that computing the average should not take long. True, but what if I would rather compute the average review of this book by users who like the same books as me? This is a much more interesting question and can not be calculated in a few milliseconds.

@Jordan

Small to mid-size applications only? Why not large databases? I think large databases is where nosql shows its superiority over rdbms.

Vadim,

The issue isn't with deletion, the issue is with references to deleted documents.

The DB won't handle that automatically for you

Jordan,

I would like to use it where I would use Couch / Mongo, yes. I think that this is very feasible.

Josh,

At that point, you include the average user rating inside the document, and index that. From there, it is a very simple issue

iLude,

The question is, how do you define instantly.

My current testing shows latency of ~25 - 50 ms between update & index sync. That is with zero perf work and on tough conditions.

@Oren,

I was referring to the time it takes to compute the average not the time that it takes for the index to be updated after you have the the new data ready for writing to the data store.

Computing the average of all ratings on a book is straight forward and shouldn't take a lot of time regardless of the size of your dataset. But if you want to find users who share similar taste in books to the current user and show their average rating for the currently viewed book, the answers going to take a bit more time to figure out.

My point was that figuring out the answers to these questions is not the job of the data store. Sure SQL can be used to figure out these answers. But it only scales so far.

iLude,

Agreed, in any such scenario that i am aware of, this is the job of background tasks

There is a big company well-entrenched in this kind of approach to databases. They have derived answers to many of the questions raised in this discussion, and developed a very mature product. This company is named FileMaker.

I have worked a few engagements in which company needs for BI has surpassed the abilities of FileMaker, prompting us to build "replication" bridges to data warehouse.

the "replication" approach is a viable answer to the problems with aggregation and general reporting, however if your primary goal was "NOSQL" well then you have only halfway succeeded.

Comment preview