Continuing the discussion of how to handle failures in a sharded cluster, we are back to the question of what to do when one node in the cluster goes down. What should the system's behavior be in such a scenario?

In my previous post, I discussed one option:

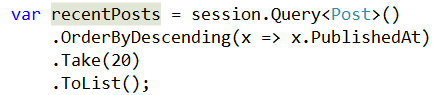

    ShardingStatus status;
    var recentPosts = session.Query<Post>()
        .ShardingStatus(out status)
        .OrderByDescending(x => x.PublishedAt)
        .Take(20)
        .ToList();

I said that I really don’t like this option, but deferred the discussion of exactly why.

Basically, the entire problem boils down to a very simple fact: manual memory management doesn’t work.

Huh? What is the relation between handling failures in a cluster and manual memory management? Oren, did you get your wires crossed again and continue a different blog post altogether?

Well, no. It is the same basic principle. Requiring users to add a specific handler for this results in several scenarios, none of them ideal.

First, what happens if we don’t specify this? We are back to either “ignore & swallow the error” or “throw and kill the entire system”.

Let us assume that we go with the first option: the developer has a way to get the error if they want it, but if they don’t care, we will just ignore it. The problem with this approach is that it is entirely certain that developers will not add the check, at least not before the first time a node fails in production and the system simply ignores the failure and shows the wrong results.

The other option, throwing an exception if the user didn’t ask for the sharding status and we have a failing node, is arguably worse. We now have a ticking time bomb: if a node goes down, the entire system goes down. The reason I say this is worse than the previous option is that the natural inclination of most developers is to simply stick the ShardingStatus() call in there and “fix” the problem. Of course, this is basically the same as the first option, but this time the API actually led the user down the wrong path.

Second, this is forcing a local solution on a global problem. We are trying to force the user to handle errors at a place where the only thing that they care about is the actual business problem.

Third, this alternative doesn’t handle scenarios where we are doing other things, like loading by id. How would you get the ShardingStatus from this call?

    session.Load<Post>("tri/posts/1");

Anything that you come up with is likely to introduce additional complexity and make things much harder to work with.

As I said, I intensely dislike this option. A much better alternative exists, and I’ll discuss this in the next post…