A deep dive into RavenDB's AI Agents

RavenDB is building a lot of AI integration features, from vector search to automatic embedding generation to Generative AI inside the database. Continuing this trend, the newest feature we have allows you to easily build an AI Agent using RavenDB.

Here is how you can build an agent in a few lines of code using the model directly.

    def chat_loop(ai_client, model):
        # The full message history is sent to the model on every turn
        messages = []
        while True:
            user_input = input("You: ")
            if user_input.lower() == "exit":
                break
            messages.append({"role": "user", "content": user_input})
            response = ai_client.chat.completions.create(model=model, messages=messages)
            ai_response = response.choices[0].message.content
            # Keep the reply so the next turn sees the whole conversation
            messages.append({"role": "assistant", "content": ai_response})
            print("AI:", ai_response)

This code gives you a way to chat with the model, including asking questions, remembering previous interactions, etc. This is basically calling the model in a loop, and it makes for a pretty cool demo.

It is also not that useful if you want it to do something. I mean, you can ask what the capital city of France is, or translate Greek text to Spanish. That is useful, right? It is just not very useful in a business context.

What we want is to build smart agents that we can integrate into our own systems. Doing this requires giving the model access to our data and letting it execute actions.

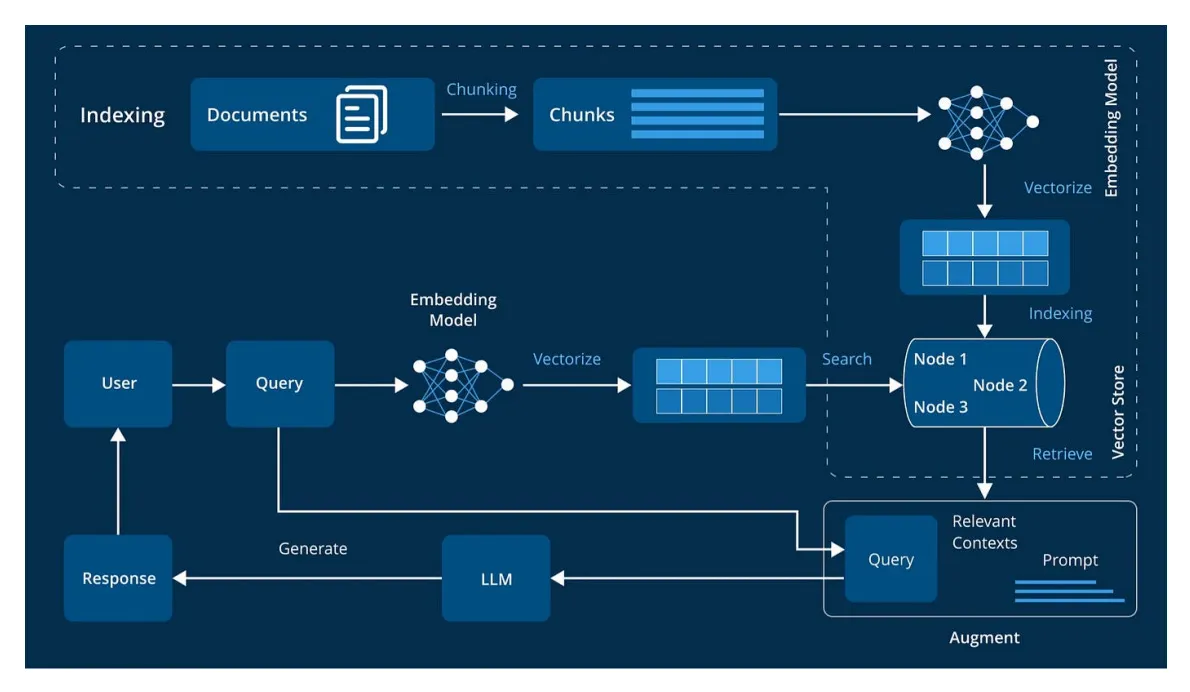

Here is a typical diagram of how that would look (seeA Systematic Review of Key Retrieval-Augmented Generation (RAG) Systems: Progress, Gaps, and Future Directions):

This looks… complicated, right?

A large part of why this is complicated is that you need to manage all those moving pieces on your own. The idea with RavenDB’s AI Agents is that you don’t have to - RavenDB already contains all of those capabilities for you.

Using the sample database (the Northwind e-commerce system), we want to build an AI Agent that you can use to deal with orders, shipping, etc. I’m going to walk you through the process of building the agent one step at a time, using RavenDB.

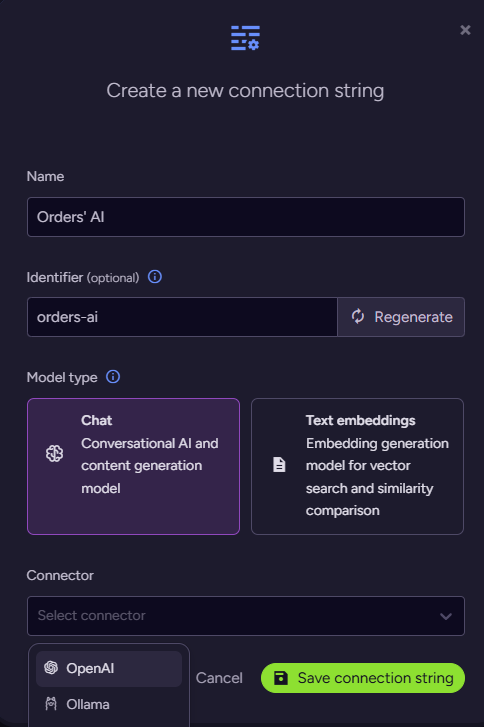

The first thing to do is to add a new AI connection string, telling RavenDB how to connect to your model. Go to AI Hub > AI Connection Strings and click Add new, then follow the wizard:

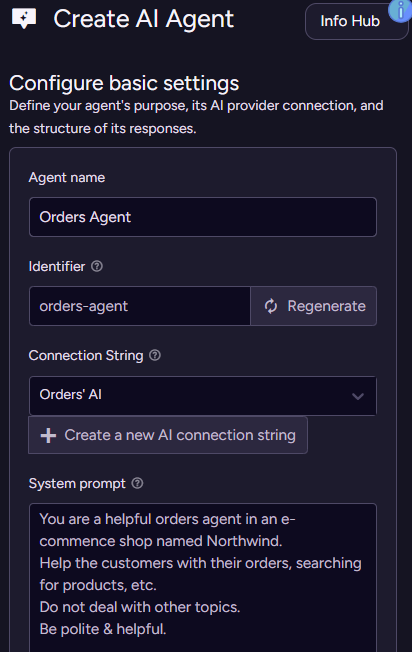

In this case, I’m using OpenAI as the provider, and gpt-4.1-mini as the model. Enter your API key and you are set. With that in place, go to AI Hub > AI Agents and click Add new agent. Here is what this should look like:

In other words, we give the agent a name, tell it which connection string to use, and provide the overall system prompt. The system prompt is how we tell the model who it is and what it is supposed to be doing.

The system prompt is quite important because it holds the base-level instructions for the agent. This is how you set the ground for what it will do, how it should behave, etc. There are a lot of good guides; I recommend this one from OpenAI.

In general, a good system prompt should include Identity (who the agent is), Instructions (what it is tasked with and what capabilities it has), and Examples (guiding the model toward the desired interactions). There is also the issue of Context, but we’ll touch on that later in depth.
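As a rough illustration of that structure, here is a minimal sketch that assembles a system prompt from the three parts. The helper `build_system_prompt`, the section headings, and the prompt wording are all hypothetical; they are not taken from RavenDB or from the OpenAI guide.

```python
def build_system_prompt(identity, instructions, examples):
    """Join the three parts into one prompt, each under its own heading."""
    sections = [
        "# Identity\n" + identity,
        "# Instructions\n" + "\n".join(f"- {line}" for line in instructions),
        "# Examples\n" + "\n\n".join(examples),
    ]
    return "\n\n".join(sections)


# Hypothetical wording for an orders agent; adjust to your own domain.
ORDERS_AGENT_PROMPT = build_system_prompt(
    identity="You are a helpful assistant for customers of an online store.",
    instructions=[
        "Answer questions about the customer's orders and the product catalog.",
        "Only use information returned by the tools you were given.",
    ],
    examples=[
        "User: How much cheese did I get in my last order?\n"
        "Agent: Looks up the recent orders and replies with the quantity.",
    ],
)
```

Keeping the parts separate makes it easier to revise one of them (usually the examples) without touching the rest.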

I’m going over things briefly to explain what the feature is. For more details, see the full documentation.

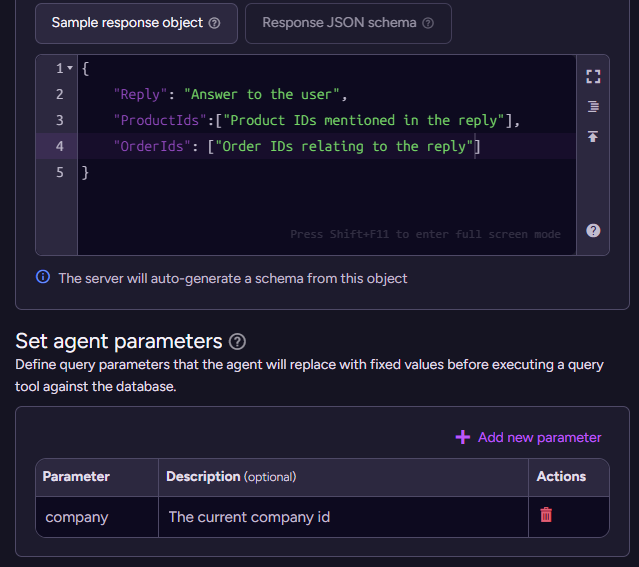

After the system prompt, we have two other important aspects to cover before we can continue. We need to define the schema and parameters. Let’s look at how they are defined, then we’ll discuss what they mean below:

When we work with an AI model, the natural way to communicate with it is with free text. But as developers, if we want to take actions, we would really like to be able to work with the model’s output in a programmatic fashion. In the case above, we give the model a sample object to represent the structure we want to get back (you can also use a full-blown JSON Schema, of course).
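To show why a structured reply is easier to work with, here is a small sketch that turns a JSON reply into a typed object. The field names (`Reply`, `ProductIds`, `OrderIds`) match the replies shown later in this post; the sample values, the `parse_answer` helper, and the validation rule are assumptions made for illustration.

```python
import json
from dataclasses import dataclass, field

# A sample object describing the shape we want back (values are placeholders).
SAMPLE_OBJECT = {
    "Reply": "Text of the answer",
    "ProductIds": ["products/1-A"],
    "OrderIds": ["orders/1-A"],
}


@dataclass
class ModelAnswer:
    Reply: str
    ProductIds: list = field(default_factory=list)
    OrderIds: list = field(default_factory=list)


def parse_answer(raw_json):
    """Parse a reply and make sure every field of the sample object is present."""
    data = json.loads(raw_json)
    missing = set(SAMPLE_OBJECT) - set(data)
    if missing:
        raise ValueError(f"reply is missing fields: {sorted(missing)}")
    return ModelAnswer(**{key: data[key] for key in SAMPLE_OBJECT})
```

With a typed result, linking to an order or rendering a product list becomes ordinary code instead of text parsing.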

The parameters give the agent the required context about the particular instance you are running. For example, two agents can run concurrently for two different users - each associated with a different company - and the parameters allow us to distinguish between them.

With all of those settings in place, we can now save the agent and start using it. From code, that is pretty simple. The equivalent to the Python snippet I had at the beginning of this post is:

    var conversation = store.AI.Conversation(
        agentId: "orders-agent",
        conversationId: "chats/",
        new AiConversationCreationOptions
        {
            Parameters = new()
            {
                ["company"] = "companies/1-A"
            },
        });

    Console.Write("(new conversation)");
    while (true)
    {
        Console.Write("> ");
        var userInput = Console.ReadLine();
        if (string.Equals(userInput, "exit", StringComparison.OrdinalIgnoreCase))
            break;

        conversation.SetUserPrompt(userInput);
        var result = await conversation.RunAsync<ModelAnswer>();

        Console.WriteLine();
        var json = JsonConvert.SerializeObject(result.Answer, Formatting.Indented);
        Console.WriteLine(json);
        Console.Write($"('{conversation.Id}')");
    }

I want to pause for a moment and reflect on the difference between these two code snippets. The first one I had in this post, using the OpenAI API directly, and the current one are essentially doing the same thing. They create an “agent” that can talk to the model and use its knowledge.

Note that when using the RavenDB API, we didn’t have to manually maintain the messages array or any other conversation state. That is because the conversation state itself is stored in RavenDB, under the conversation ID that we defined for the conversation. You can use that ID to continue a conversation from a previous request, for example.
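To make the idea concrete, here is a minimal in-memory sketch of keeping conversation state under an ID so that a later request can pick it up again. The `ConversationStore` class and its `open` method are hypothetical and only mimic the behavior described above; RavenDB persists the state as a document and generates its own IDs in its own format.

```python
class ConversationStore:
    """Toy stand-in for server-side conversation state, keyed by conversation ID."""

    def __init__(self):
        self._conversations = {}
        self._counter = 0

    def open(self, conversation_id):
        """Return (id, messages). An ID ending in '/' is a prefix: generate a new ID."""
        if conversation_id.endswith("/"):
            self._counter += 1
            conversation_id = f"{conversation_id}{self._counter}"
        messages = self._conversations.setdefault(conversation_id, [])
        return conversation_id, messages


store = ConversationStore()

# First request: start a new conversation under the "chats/" prefix.
chat_id, messages = store.open("chats/")
messages.append({"role": "user", "content": "How much cheese did I get in my last order?"})

# A later request: pass the full ID to continue where we left off.
same_id, resumed = store.open(chat_id)
```

The caller only has to hold on to the ID; the history itself never needs to travel with the request.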

Another important aspect is that the longer the conversation goes, the more items the model has to go through to answer. RavenDB will automatically summarize the conversation for you, keeping the cost of the conversation fixed over time. In the Python example, on the other hand, the longer the conversation goes, the more expensive it becomes.
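A quick back-of-the-envelope sketch shows the difference. It counts how many messages are sent to the model over a whole conversation, as a rough proxy for token cost. The function and the `keep_last` cutoff are hypothetical; this is not how RavenDB implements summarization, and it ignores the cost of producing the summary itself.

```python
def total_messages_sent(turns, keep_last=None):
    """Count messages sent to the model across all turns of a conversation.

    Each turn adds one user message and one assistant reply to the history.
    With keep_last set, anything older than the last keep_last messages is
    replaced by a single summary message.
    """
    history = 0
    total = 0
    for _ in range(turns):
        history += 1  # the new user message
        if keep_last is not None and history > keep_last:
            sent = keep_last + 1  # one summary plus the most recent messages
        else:
            sent = history
        total += sent
        history += 1  # the assistant reply joins the history
    return total


print(total_messages_sent(100))                # full history every turn: 10000
print(total_messages_sent(100, keep_last=10))  # summarized history: 1070
```

Without summarization the total grows with the square of the number of turns; with a bounded history, each additional turn costs about the same as the previous one.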

That is still not really that impressive, because we are still just using the generic model. It will tell you what the capital of France is, but it cannot answer what items you have in your cart.

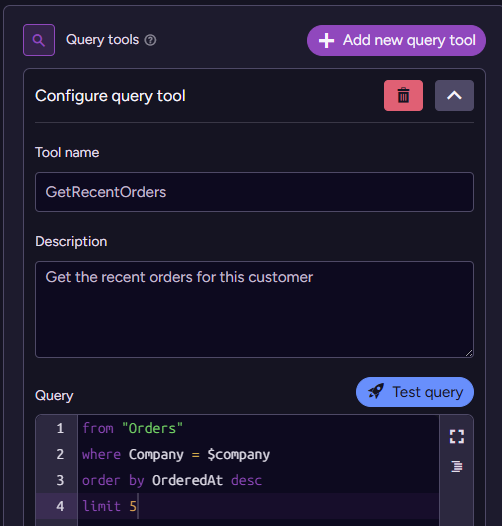

RavenDB is a database, and the whole point of adding AI Agents at the database layer is that we can make use of the data that resides in the database. Let’s make that happen. In the agent definition, we’ll add a Query:

We add the query tool GetRecentOrders, and we specify a description to tell the model exactly what this query does, along with the actual query text (RQL) that will be run. Note that we are using the agent-level parameter company to limit what information will be returned.

You can also have the model pass parameters to the query. See more details on that in the documentation. Most importantly, the company parameter is specified at the level of the agent and cannot be changed or overwritten by the model. This ensures that the agent can only see the data you intended to allow it.
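One way to picture that guarantee is a merge in which agent-level values are applied last. This is a sketch of the idea only, not RavenDB's implementation; the function name and the `count` parameter are hypothetical.

```python
def resolve_query_parameters(agent_params, model_params):
    """Combine parameters for a query tool; agent-level values always win."""
    resolved = dict(model_params)
    # Applied last, so nothing the model sends can replace an agent-level value.
    resolved.update(agent_params)
    return resolved


# The model tries to look at another company's data; the agent-level value wins.
params = resolve_query_parameters(
    agent_params={"company": "companies/1-A"},
    model_params={"company": "companies/2-A", "count": 5},
)
```

The model still controls the parameters it is allowed to supply, while the scope set by the application stays fixed.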

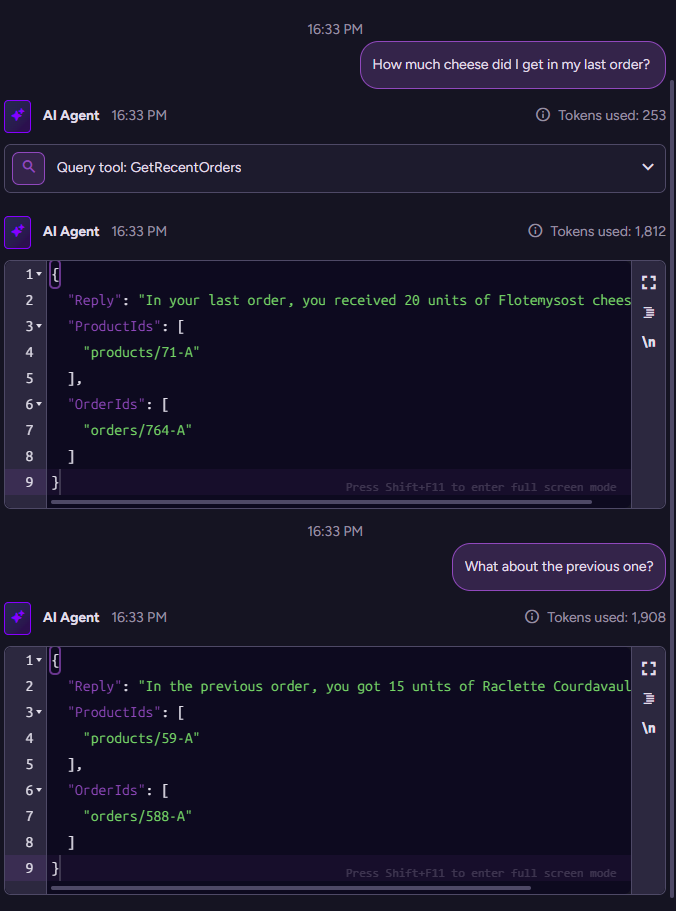

With that in place, let’s see how the agent behaves:

    (new conversation)> How much cheese did I get in my last order?
    {
      "Reply": "In your last order, you received 20 units of Flotemysost cheese.",
      "ProductIds": [
        "products/71-A"
      ],
      "OrderIds": [
        "orders/764-A"
      ]
    }
    ('chats/0000000000000009090-A')> What about the previous one?
    {
      "Reply": "In the previous order, you got 15 units of Raclette Courdavault cheese.",
      "ProductIds": [
        "products/59-A"
      ],
      "OrderIds": [
        "orders/588-A"
      ]
    }

You can see that simply by adding the capability to execute a single query, we are able to get the agent to do some impressive stuff.

Note that I’m serializing the model’s output to JSON to show you the full returned structure. I’m sure you can imagine how you could link to the relevant order, or show the matching products for the customer to order again, etc.

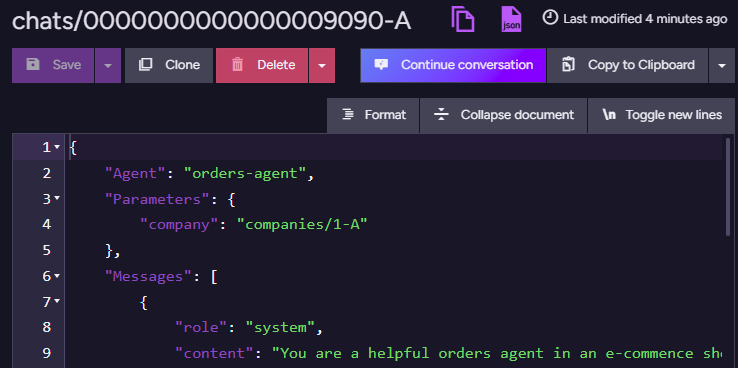

Notice that the conversation starts as a new conversation, and then it gets an ID: chats/0000000000000009090-A. This is where RavenDB stores the state of the conversation. If we look at this document, you’ll see:

This is a pretty standard RavenDB document, but you’ll note the Continue conversation button. Clicking that moves us to a conversation view inside the RavenDB Studio, and it looks like this:

That is the internal representation of the conversation. In particular, you can see that we start by asking about cheese in our last order, and that we invoked the query tool GetRecentOrders to answer this question. Interestingly, for the next question we asked, there was no need to invoke anything - we already had that information (from the previous call).

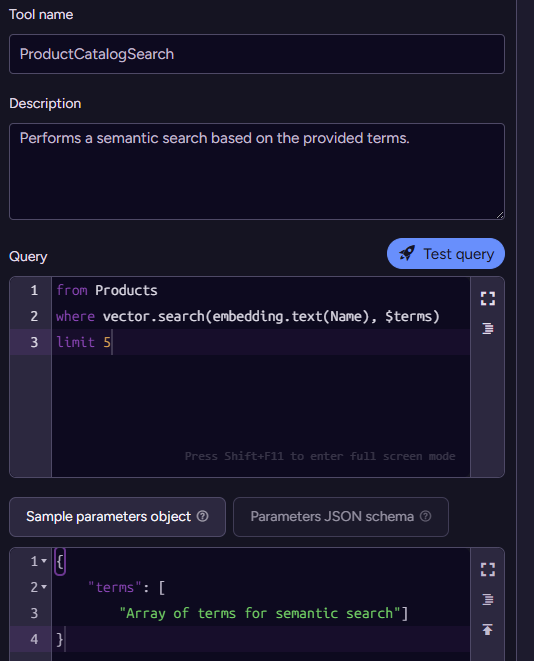

This is a really powerful capability because, for a very small amount of work, you can get amazing results. Let’s extend the agent a bit and see what it does. We’ll add the capability to search for products, like so:

Note that here we are using another AI-adjacent capability, vector search, which allows us to perform a semantic search in vector space. This is now a capability that we expose to the model, leading to the following output:

    ('chats/0000000000000009090-A')> What wines do you have that go with either?
    {
      "Reply": "We have a product called 'Chartreuse verte', which is a green-colored sweet alcoholic drink that could pair well with cheese. Would you like more information or additional wine options?",
      "ProductIds": [
        "products/39-A"
      ],
      "OrderIds": []
    }

Note that we continue to expand the capabilities of the agent while the conversation is running. We didn’t even have to stop the process, just add the new query to the agent and ask a question.

More seriously, this is an important capability since it allows us to iterate on what the agent can do quickly, cheaply, and easily.

Our agent is already pretty smart, with just two queries that it can call. We can try to do something that ties them together. Let’s see what happens when we ask it to repeat our last two orders:

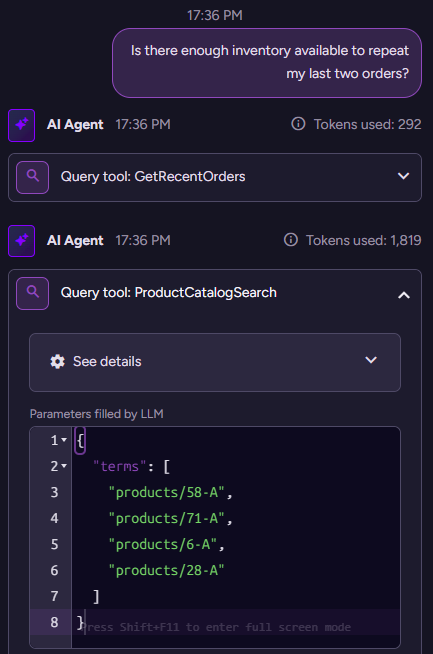

    (new conversation)> Is there enough inventory available to repeat my last two orders?
    {
      "Reply": "Regarding your last two orders, the products ordered were: \"Escargots de Bourgogne\" (40 units), \"Flotemysost\" (20 units), \"Grandma's Boysenberry Spread\" (16 units), and \"Rössle Sauerkraut\" (2 units). Unfortunately, I was not able to retrieve the stock information for these specific products. Could you please confirm the product names or IDs again, or would you like me to try a different search?",
      "ProductIds": [
        "products/6-A",
        "products/28-A"
      ],
      "OrderIds": [
        "orders/764-A",
        "orders/705-A"
      ]
    }

Here we get a strange response. It correctly tells us what the products we got are, but cannot retrieve the information about them? Let’s investigate this more deeply. We can go to the conversation in question and look at the interaction between the model and the database.

Here is what this looks like:

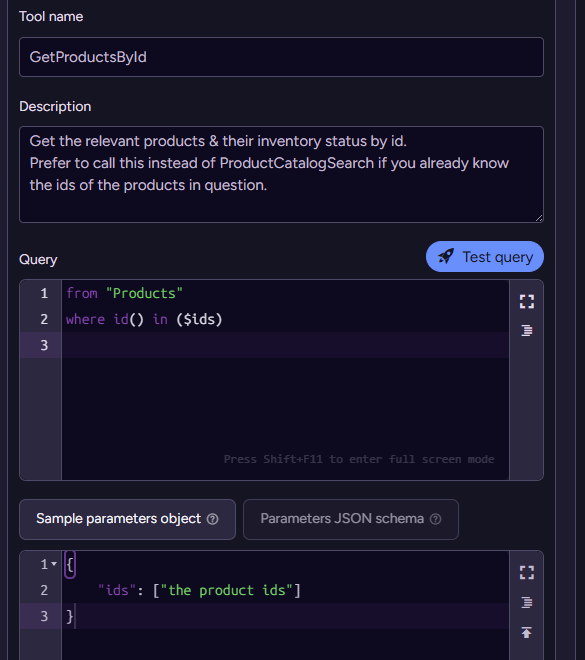

You can see that we got the recent orders, then we used the ProductCatalogSearch tool to search for the… product IDs. But the query underlying this tool is doing a semantic search on the name of the product. No wonder it wasn’t able to find things. Let’s give it the capability it needs to find products by ID:

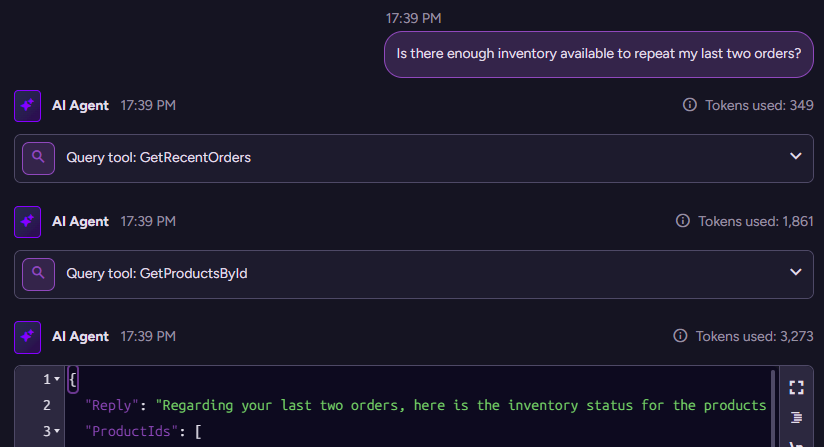
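The mismatch is easy to reproduce with a toy catalog. In the sketch below, `search_by_name` is a crude word-overlap stand-in for the name-based semantic search, and `get_by_id` is the kind of direct lookup the agent was missing. Both functions are hypothetical and are not the actual query tools; the two catalog entries are taken from the replies shown earlier.

```python
# Product IDs and names as they appear in the agent's earlier replies.
CATALOG = {
    "products/71-A": "Flotemysost",
    "products/59-A": "Raclette Courdavault",
}


def search_by_name(query):
    """Toy name search: return products sharing at least one word with the query."""
    words = set(query.lower().split())
    return [pid for pid, name in CATALOG.items() if words & set(name.lower().split())]


def get_by_id(product_ids):
    """Direct lookup: return the known products for the given IDs."""
    return {pid: CATALOG[pid] for pid in product_ids if pid in CATALOG}


by_name = search_by_name("products/71-A")  # an ID is not a name, so nothing matches
by_id = get_by_id(["products/71-A"])       # the direct lookup finds the product
```

A real vector search would return the nearest neighbors rather than an empty list, but those neighbors would be just as unrelated to the ID the model passed in.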

With that in place, we can try asking the same question again. This time, we get a proper response:

    (new conversation)> Is there enough inventory available to repeat my last two orders?
    {
      "Reply": "Regarding your last two orders, here is the inventory status for the products:\n- Order 1:\n * Escargots de Bourgogne: 27 units in stock (required 40 units)\n * Flotemysost: 15 units in stock (required 20 units)\n- Order 2:\n * Grandma's Boysenberry Spread: 3 units in stock (required 16 units)\n * Rössle Sauerkraut: 12 units in stock (required 2 units)\n\nThere is not enough inventory to fully repeat the last two orders. Specifically, Escargots de Bourgogne, Flotemysost, and Grandma's Boysenberry Spread have insufficient stock to fulfill the requested quantities. Rössle Sauerkraut is available to fulfill the order quantity.",
      "ProductIds": [
        "products/58-A",
        "products/71-A",
        "products/6-A",
        "products/28-A"
      ],
      "OrderIds": [
        "orders/764-A",
        "orders/705-A"
      ]
    }

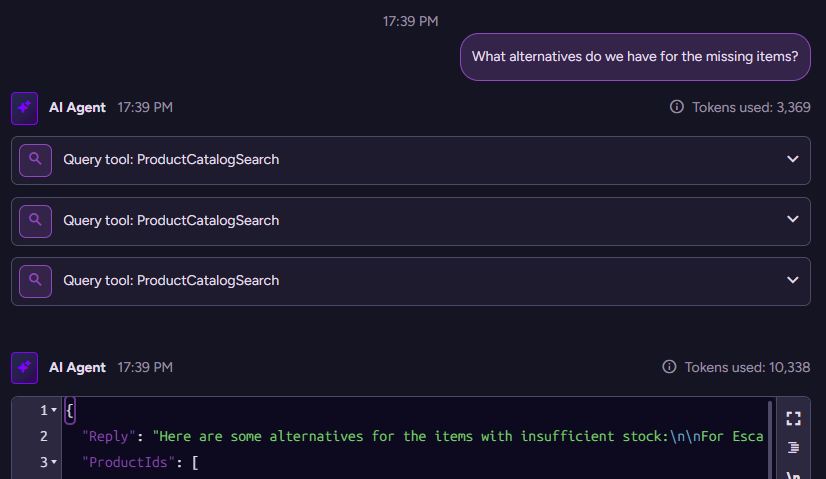

    ('chats/0000000000000009111-A')> What alternatives do we have for the missing items?
    {
      "Reply": "Here are some alternatives for the items with insufficient stock:\n\nFor Escargots de Bourgogne, you might consider \"Wimmers gute Semmelknödel\" (a tasty bread dumpling) or \"NuNuCa Nuß-Nougat-Creme\" (a sweet chocolate and hazelnut spread).\n\nFor Flotemysost, alternatives include \"Thüringer Rostbratwurst\" (a grilled sausage, though discontinued), \"Wimmers gute Semmelknödel\" again, or \"Queso Cabrales\" (a strong and tasty blue cheese from Spain).\n\nFor Grandma's Boysenberry Spread, alternatives include \"Vegie-spread\" (a vegetarian spread) or \"Uncle Bob's Organic Dried Pears\" (organic dried fruit).\n\nLet me know if you want details or want to order any of these alternatives.",
      "ProductIds": [
        "products/64-A",
        "products/25-A",
        "products/29-A",
        "products/31-A",
        "products/77-A",
        "products/11-A",
        "products/63-A",
        "products/7-A"
      ],
      "OrderIds": []
    }

Here is what this looks like on the backend:

The model first got the recent orders, then got the relevant products, and then sent the reply.

With the next interaction, we have the following going on in the backend:

This is interesting because you can see that the model issues three separate calls in order to generate a response. It searched for alternatives for each of the matching products and then offered them to us.

This matters because we were able to answer all the questions for the model in a single round-trip rather than have a long chat.

So we have a smart model, and it can answer interesting questions. What next? An agent is supposed to be able to take action - how do we make this happen?

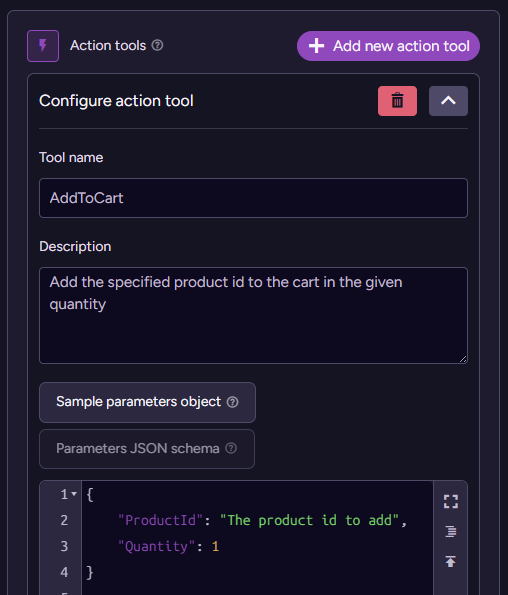

RavenDB supports actions as well as queries for AI Agents. Here is how we can define such an action:

The action definition is pretty simple. It has a name, a description for the model, and a sample object describing the arguments to the action (or a full-blown JSON schema, if you like).

Most crucially, note that RavenDB doesn’t provide a way for you to act on the action. Unlike in the query model, we have no query to run or script to execute. The responsibility for handling an action lies solely with the developer.

Here is a simple example of handling the AddToCart call:

var conversation = store.AI.Conversation(/* redacted (same as above) */);

conversation.Handle<AddToCartArgs>("AddToCart", async args =>

{

Console.WriteLine($"- Added: {args.ProductId}, Quantity: {args.Quantity}");

return "Added to cart";

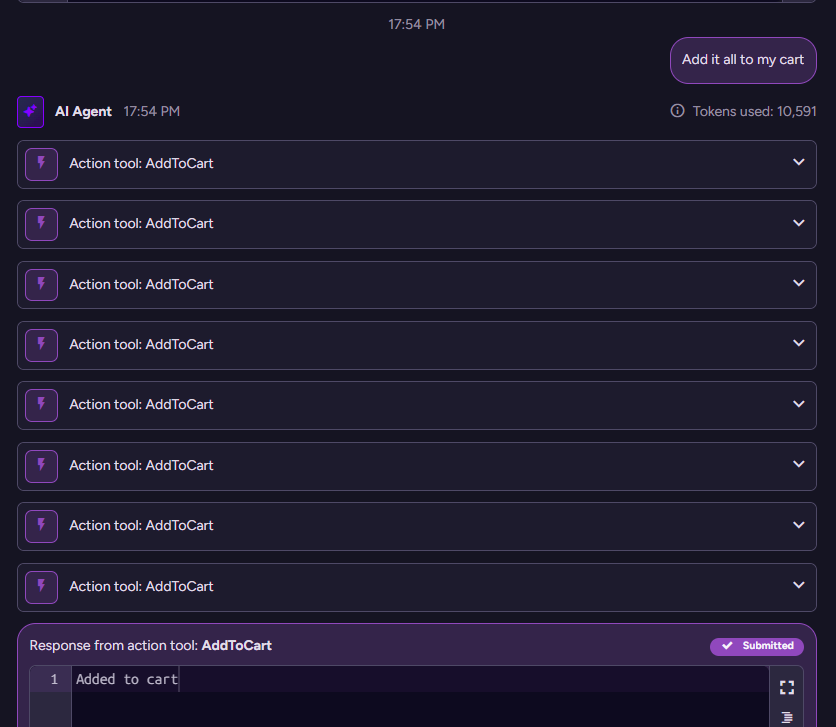

});RavenDB is responsible for calling this code when AddToCart is invoked by the model. Let’s see how this looked in the backend:

The model issues a call per item to add to the cart, and RavenDB invokes the code for each of those, sending the result of the call back to the model. That is pretty much all you need to do to make everything work.
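Stripped of the database, the flow looks roughly like the sketch below: handlers are registered by action name, each call from the model is routed to its handler, and the return values are collected to send back. `ActionDispatcher` and the shape of the `tool_calls` entries are hypothetical and only illustrate the pattern; they are not the RavenDB client API.

```python
class ActionDispatcher:
    """Routes action calls issued by the model to handlers registered by name."""

    def __init__(self):
        self._handlers = {}

    def handle(self, action_name, handler):
        self._handlers[action_name] = handler

    def dispatch(self, tool_calls):
        """Run the handler for each call and collect the results for the model."""
        results = []
        for call in tool_calls:
            handler = self._handlers.get(call["name"])
            if handler is None:
                results.append({"name": call["name"], "result": "No handler registered"})
                continue
            results.append({"name": call["name"], "result": handler(**call["args"])})
        return results


cart = []


def add_to_cart(ProductId, Quantity):
    cart.append((ProductId, Quantity))
    return "Added to cart"


dispatcher = ActionDispatcher()
dispatcher.handle("AddToCart", add_to_cart)

# Two calls arriving in one batch, as in the "one call per item" behavior above.
results = dispatcher.dispatch([
    {"name": "AddToCart", "args": {"ProductId": "products/64-A", "Quantity": 40}},
    {"name": "AddToCart", "args": {"ProductId": "products/25-A", "Quantity": 20}},
])
```

Returning a result for every call, including unknown ones, gives the model something to react to instead of leaving a call unanswered.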

Here is what this looks like from the client perspective:

    ('chats/0000000000000009111-A')> Add it all to my cart
    - Adding to cart: products/64-A, Quantity: 40
    - Adding to cart: products/25-A, Quantity: 20
    - Adding to cart: products/29-A, Quantity: 20
    - Adding to cart: products/31-A, Quantity: 20
    - Adding to cart: products/77-A, Quantity: 20
    - Adding to cart: products/11-A, Quantity: 16
    - Adding to cart: products/63-A, Quantity: 16
    - Adding to cart: products/7-A, Quantity: 16
    {
      "Reply": "I have added all the alternative items to your cart with the respective quantities. If you need any further assistance or want to proceed with the order, please let me know.",
      "ProductIds": [
        "products/64-A",
        "products/25-A",
        "products/29-A",
        "products/31-A",
        "products/77-A",
        "products/11-A",
        "products/63-A",
        "products/7-A"
      ],
      "OrderIds": []
    }

This post is pretty big, but I want you to appreciate what we have actually done here. We defined an AI Agent inside RavenDB, then we added a few queries and an action. The entire code is here, and it is under 50 lines of C# code.

That is sufficient for us to have a really smart agent, including semantic search on the catalog, adding items to the cart, investigating inventory levels and order history, etc.

The key is that when we put the agent inside the database, we can easily expose our data to it in a way that makes it easy & approachable to build intelligent systems. At the same time, we aren’t just opening the floodgates; we are able to designate a scope (via the company parameter of the agent) and only allow the model to see the data for that company. Multiple agent instances can run at the same time, each scoped to its own limited view of the world.

Summary

RavenDB introduces AI Agent integration, allowing developers to build smart agents with minimal code and no hassles. This lets you leverage features like vector search, automatic embedding generation, and Generative AI within the database.

We were able to build an AI Agent that can answer queries about orders, check inventory, suggest alternatives, and perform actions like adding items to a cart, all within a scoped data view for security.

The example showcases a powerful agent built with very little effort. One of the cornerstones of RavenDB’s design philosophy is that the database will take upon itself all the complexities that you’d usually have to deal with, leaving developers free to focus on delivering features and concrete business value.

The AI Agent Creator feature that we just introduced is a great example, in my eyes, of making things that are usually hard, complex, and expensive become simple, easy, and approachable.

Give the new features a test run; I think you’ll fall in love with how easy and fun it is.

Comments

Very interesting. Is this available in the RavenDB cloud on the free tier? If not, on which tier is it available?

Bob,

This is available in the cloud in the dev environment, and on either the higher-end tiers (P30+) or as an add-on.

What are you doing to defend against prompt injections in the data stored in the database? If the agent takes the data from the database as an input, it is now subject to misinterpreting it, and if you have any user controlled data in the database it could be malicious.

Jason,

That is a great point, yes. I wrote about this recently in depth, see: https://ayende.com/blog/203140-A/ai-agents-security-the-on-behalf-of-concept?key=45fe4f251b4a41f9b4df1a8dbb2dcdb5
