Our test process occasionally crashed with an access violation exception. We consider these to be Priority 0 bugs, so we had one of the most experienced developers in the office work on this problem.

Access violation errors are nasty, because they give you very little information about what is going on, and there is typically no real way to recover from them. We have a process to deal with them, though. We know how to set things up so we’ll get a memory dump on error, so the very first thing that we work toward is reproducing the error.

After a fair bit of effort, we managed to get to a point where we could semi-reliably reproduce this error. In practice, that means we do “stuff” and get the error in under 15 minutes. That’s the reason we need the best people on these kinds of investigations. Actually getting to the point where this fails is the most complex part of the process.

The goal here is to get to two really important pieces of information:

- A few memory dumps of the actual crash – these are important to be able to figure out what is going on.

- A way to actually generate the crash – in a reasonable time frame, mostly because we need to verify that we actually fixed the issue.

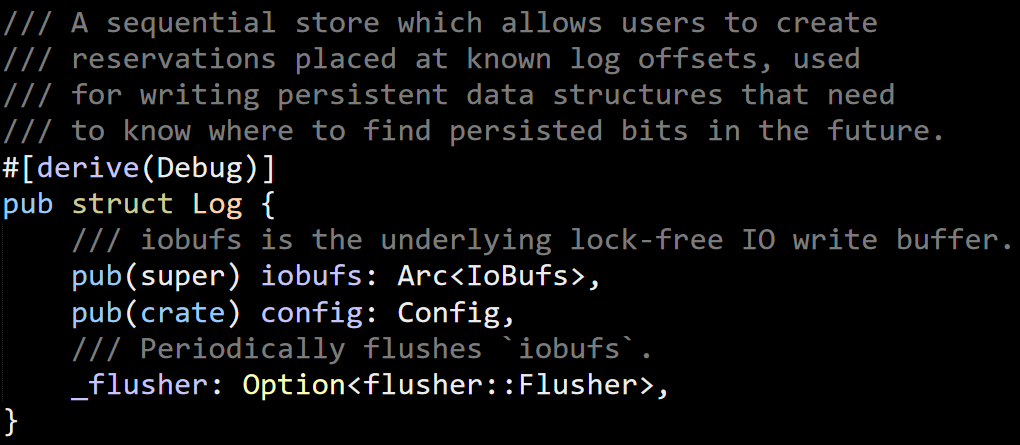

After a bunch of work, we were able to look at the dump file and found that the crash originated from Voron’s code. The developer in charge then faulted, because they tried to increase the priority of an issue that was already Priority 0, and P-2147483648 didn’t quite work out.

We also figured out that this can only occur in 32 bits, which is really interesting. A 32-bit process has a constrained address space, so it is a great way to run into memory bugs.

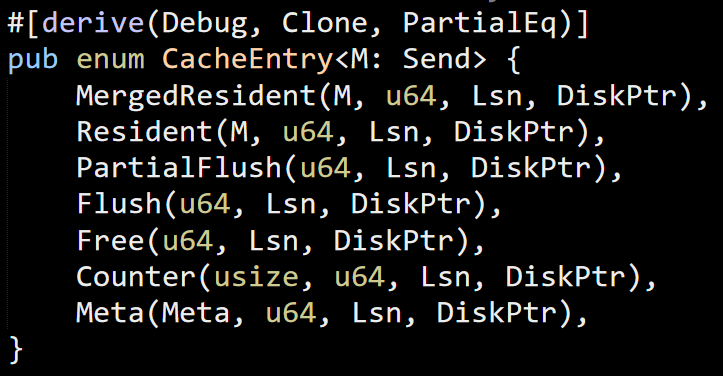

We started to look even more closely at this. The problem happened while running memcpy(), and looking at the addresses that were passed to the function, one of them was Voron-allocated memory, whose state was just fine. The second pointed to a MEM_RESERVE portion of memory, meaning address space that is reserved but not committed, so any access to it faults. That didn’t make sense at all.

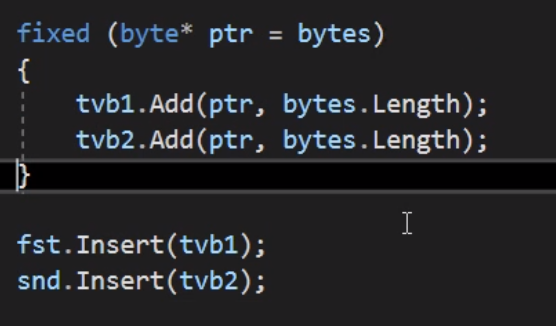

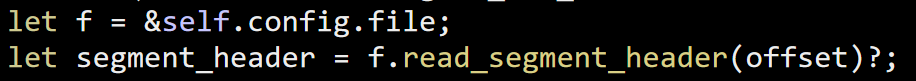

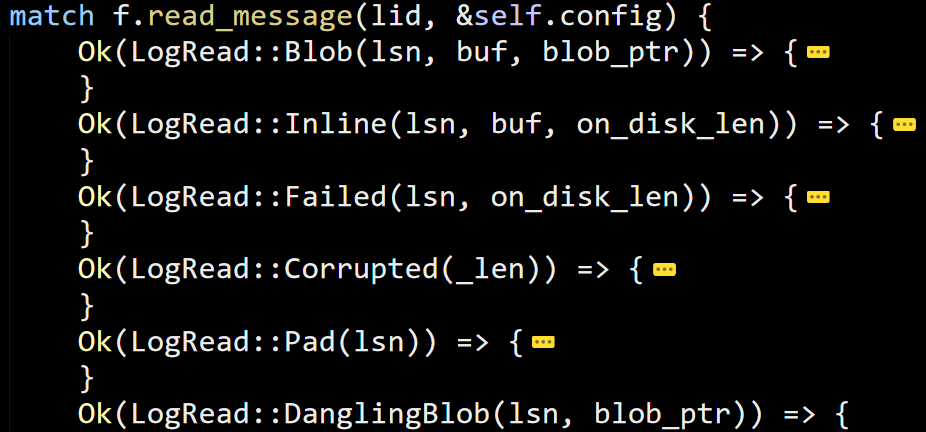

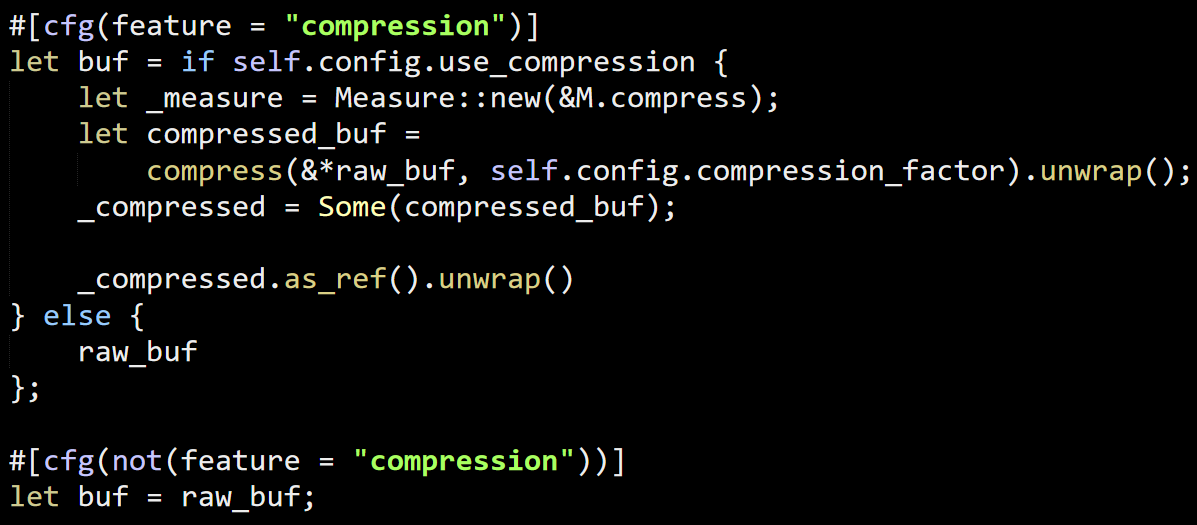

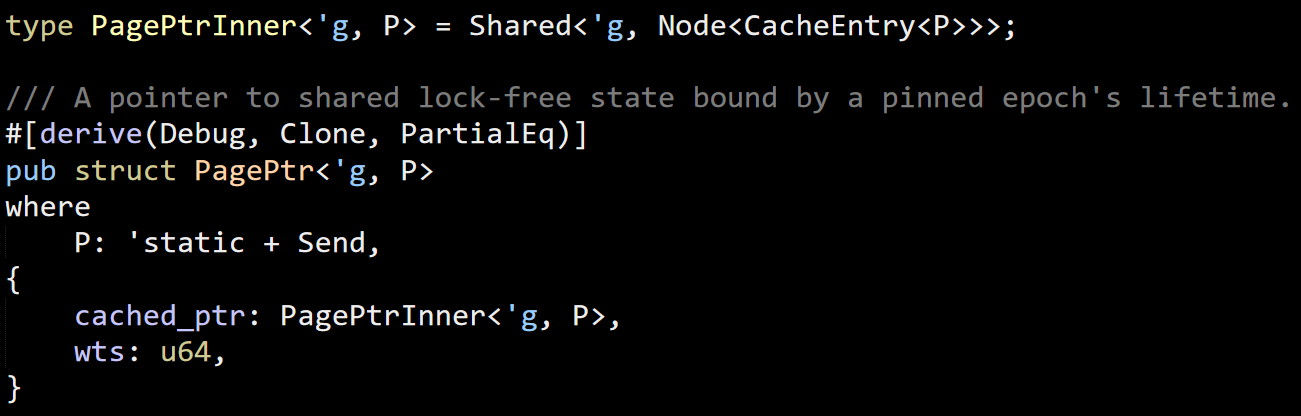

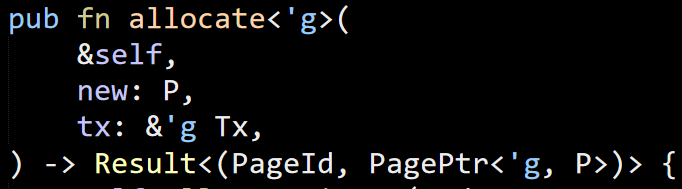

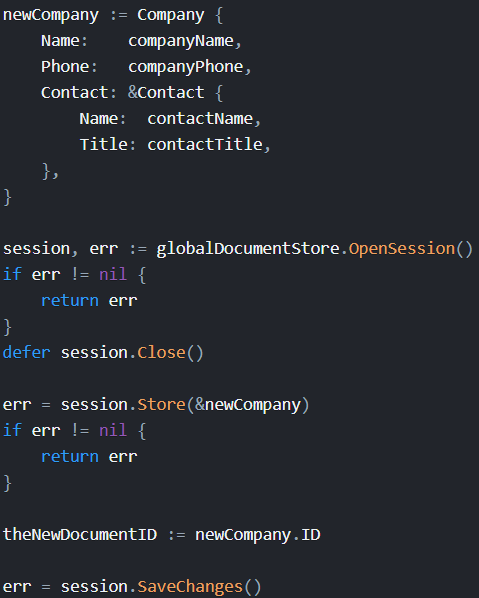

Up the call stack we went, to try to figure out what we were doing. Here is where we ended up (hint: the crash happened deep inside the Insert() call).

This is test code, mind you, exercising some really obscure part of Voron’s storage behavior. And once we actually looked at the code, it was obvious what the problem was.

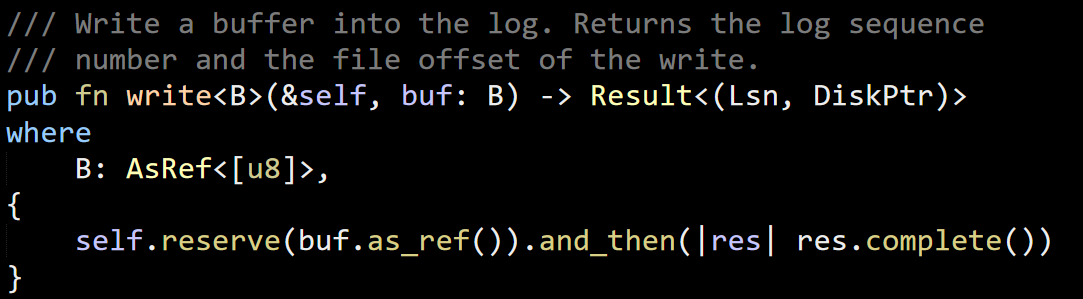

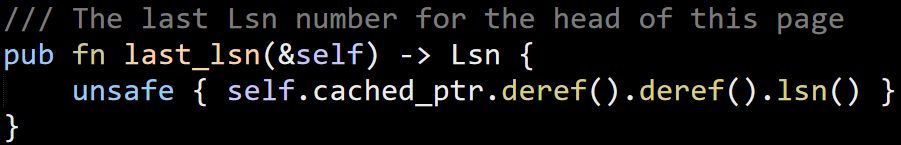

We were capturing the addresses of an array in memory, using the fixed statement.

But then we used them outside the fixed block. Once the block ends, the array is no longer pinned. If there happened to be a GC between these two lines, and if it happened to move the memory and free the segment, we would access memory that is no longer valid. This would result in an access violation, naturally. I think we were only able to reproduce this in 32 bits because of the tiny address space. In 64 bits, there is a lot less pressure to move the memory, so it remains valid.

Luckily, this is an error only in our tests, so we reduced our DEFCON level to a more reasonable value. The fix was trivial (move the Insert calls into the fixed scope), and we were able to test that this fixed the issue.
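To make the shape of the bug concrete, here is a minimal sketch of it. This is not the actual test code (that is in the screenshots above). `FixedScopeSketch`, `Sum`, and both public method names are made up for illustration, with `Sum` standing in for the `Insert()` call. It assumes the project is compiled with unsafe code enabled.

```csharp
using System;

public static unsafe class FixedScopeSketch
{
    // Hypothetical stand-in for Insert(): it reads through a raw pointer.
    private static int Sum(byte* p, int length)
    {
        int total = 0;
        for (int i = 0; i < length; i++)
            total += p[i];
        return total;
    }

    // Buggy shape: the pointer is captured inside the fixed block but used after it.
    // The array is only pinned while the block is executing, so a GC that runs
    // afterwards is free to move it, leaving 'escaped' pointing at stale memory.
    // Shown for illustration only; do not call this.
    public static int SumAfterUnpinning(byte[] buffer)
    {
        byte* escaped;
        fixed (byte* p = buffer)
        {
            escaped = p;
        }
        return Sum(escaped, buffer.Length); // buffer is no longer pinned here
    }

    // Fixed shape: everything that touches the pointer stays inside the fixed scope.
    public static int SumWhilePinned(byte[] buffer)
    {
        fixed (byte* p = buffer)
        {
            return Sum(p, buffer.Length);
        }
    }
}
```

The nasty part is that the buggy version will usually work, since most of the time no GC happens in that window, or the GC doesn't move that particular array. That matches how hard it was for us to reproduce the crash.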