A couple of weeks ago I started to talk about the implementation details of building a persistent data structure in RavenDB. As it turns out, I had some evenings available and I was able to sit down and actually write out the code for it. The current state of things is that a few tests work and the overall structure is in place. I ran into some hurdles along the way, which is why I preferred to wait until I had something at hand before writing about it.

Please note, I’m going to be doing a lot of low level talk here. Mostly about how we allocate space and manage bits and bytes in Voron. If you aren’t interested in such details, you can skip all the gory stuff and get to the design details.

If you want to go straight for the code, you can find it here. Just note that this version has been created as a proof of concept and hasn’t yet been through the same process we usually take our code through.

The first thing to understand is what I’m trying to write. The reason I need this data structure is for my series about writing search engines. That means that I want to use this to store posting lists. A posting list is the list of documents matching a term in a search index. Given my experience with building such systems, I know that there are quite a few different use cases that I need to cover.

Let’s consider a few examples, shall we?

The above represent fields and values for fields in a full text search index. There are a few things to note. We can usually assume that the Email field will be unique or nearly so. In other words, the number of documents where the Email field will match oren@example.com is going to be one (or very nearly so). This is a very frequent scenario when indexing data and it deserves optimal behavior.

The Hobbies field, however, is very different. Quite a few people like Dogs, for example, so we can assume that we’ll have a lot of documents that are matched to this term. That means that we need to optimize for a very large number of matches, the exact opposite of how we need to behave for the Email field.

Sometimes, it is easier to understand when looking at the type signature. If I was writing this in memory, I would use:

Map<FieldName, Map<Term, Set<DocumentId>>> InvertedIndex;
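To make that signature a bit more concrete, here is a minimal in-memory sketch of it. It is written in Python purely for illustration and is not the Voron code. The helper names `index_term` and `posting_list` are hypothetical, and the document ids 3 and 7 are made-up sample data.

```python
from collections import defaultdict

# field name -> term -> set of document ids, all held in memory
inverted_index = defaultdict(lambda: defaultdict(set))

def index_term(field, term, doc_id):
    """Record that the document has `term` as a value of `field`."""
    inverted_index[field][term].add(doc_id)

def posting_list(field, term):
    """Return the sorted document ids matching a term (empty if unknown)."""
    return sorted(inverted_index.get(field, {}).get(term, ()))

# A unique-ish term (Email) next to a very common one (Hobbies)
index_term("Email", "oren@example.com", 1)
index_term("Hobbies", "Dogs", 1)
index_term("Hobbies", "Dogs", 7)
index_term("Hobbies", "Dogs", 3)

dog_lovers = posting_list("Hobbies", "Dogs")
```

The two fields behave very differently even in this toy version: the Email entry holds a single id, while the Dogs entry keeps growing with every matching document.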

That is the conceptual model that we’ll be working with here. After implementing the actual data structure, we have the following API:

Once we have the data stored, we can now query on it. For example, to find all users that like dogs, you’ll write:

Actually building realistic queries on top of this is a tedious, but fairly straightforward matter. It will also likely be the topic of another post. For now, I want to focus on how I actually built the implementation of this feature.

At this point, Voron features are mostly built on top of… Voron features. That is, we don’t need to build complex data structures from scratch, but can usually use a fair bit of the underlying infrastructure that we already have.

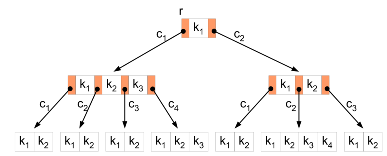

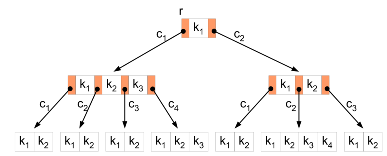

In this case, we need to understand one of the most basic building blocks in Voron: The Tree. This versatile data structure is the core of pretty much everything in Voron. It is a B+Tree that can hold arbitrary keys and values, keeping them in sorted order.

In particular, the Tree uses a byte string as its key, and its value can be either a raw value or a complex type. Going back to the type signature, the Tree would be:

SortedMap<ByteString, (byte[] RawValue, Tree NestedTree, FixedSizeTree NestedFixedSizeTree)> Tree;

Note that the value can be a raw value, a nested tree or a fixed size tree (there are other options, but we’ll focus on those). A raw value is simple: it is just a buffer that is stored and retrieved. The two nested tree options are just using recursion to its fullest potential. The difference between Tree and FixedSizeTree is one of optimization. A Tree can use any byte string as its key, but a fixed size tree can only use an int64 for its key. And as you can expect from the name, its values are also fixed in size. That means that it needs less metadata than its Tree sibling and can be somewhat simpler to implement.

Voron also has the notion of raw data sections. These allow you to allocate / write to the disk directly and are usually paired with another data structure to manage them. You can think about the raw data section as the malloc() of persistent data structures.

I’m going over these details because they are important to how I built the underlying data structure. Here are the rules that I had in mind while building this:

- Optimize for both storage space and computational power

- Zero managed allocations for reading

- Reduce / eliminate managed allocations for writing

- Document ids are int64

- Handle single use terms (Email)

- Handle multiple use terms (Hobbies)

We’ll start from the simple scenario, storing a document id for a particular email address:

emailField.Set("oren@example.com", 1L);

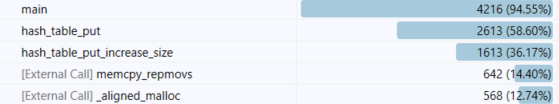

The backing store of the Roaring Set is a Voron Tree, and we’ll use the term as the key, and store the document id (1L, in this case) as the value. That is probably the absolutely simplest way to go about building this feature. Except that we are actually wasting space. 1L (long set to one, basically) takes 8 bytes to store data that can be stored in a single byte. That means that we’ll waste space, quite a lot of it, in fact.

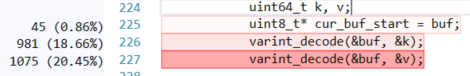

So we aren’t going to store the data as raw int64. Instead, we are going to use varints. In this way, a value such as 1L can be stored in a single byte.
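As a rough illustration of the saving, here is a sketch of a varint encoder in Python. It assumes the common 7-bits-per-byte layout (low bits first, high bit of each byte used as a continuation flag); the exact layout used in Voron may differ, and `encode_varint` is a hypothetical helper name.

```python
def encode_varint(value):
    """Encode a non-negative integer using 7 data bits per byte, low bits first."""
    if value < 0:
        raise ValueError("only non-negative values are supported")
    out = bytearray()
    while value >= 0x80:
        out.append((value & 0x7F) | 0x80)  # high bit set: more bytes follow
        value >>= 7
    out.append(value)                      # last byte has the high bit clear
    return bytes(out)

fixed = (1).to_bytes(8, "little")  # the raw int64 representation of 1L
compact = encode_varint(1)         # the same value as a varint

print(len(fixed), len(compact))
```

Small values fit in one byte, and the encoding only grows as the value itself grows.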

What happens if we have another value for the same field and term?

emailField.Set("oren@example.com", 3L);

At this point, we’ll encode the next value using varint as well, but instead of recording the actual value, we’ll record the difference from the previous value. We’ll continue to do so until the size of the buffer we need to record the data reaches 32 bytes.

The idea is that in most cases, we’ll have a single value or very few of them. We have a compact way of representing this information, which works quite nicely for small sets of values.

Here is how you can read such an encoding:

As you can see, there is nothing really interesting going on here. There are implementation details that I’m not getting into, such as the fact that we are storing the values sorted (which maximizes the benefit of delta encoding, since we keep just the difference from the previous number), but that doesn’t actually matter to the core concept.
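For readers who want to experiment with the idea, here is a self-contained Python sketch of the delta plus varint scheme, covering both writing and reading. It is an illustration under assumptions, not the Voron implementation: the varint layout is the common 7-bits-per-byte one, the first value is stored as a delta from zero, a buffer of exactly 32 bytes is treated as still fitting, and all function names are hypothetical.

```python
MAX_RAW_SIZE = 32  # size limit for the compact form, in bytes

def encode_varint(value):
    """7 data bits per byte, low bits first, high bit marks continuation."""
    out = bytearray()
    while value >= 0x80:
        out.append((value & 0x7F) | 0x80)
        value >>= 7
    out.append(value)
    return bytes(out)

def encode_small_set(doc_ids):
    """Sort the ids, store each one as a varint delta from the previous id.

    Returns None when the result does not fit in MAX_RAW_SIZE bytes,
    which is the point where a different representation is needed.
    """
    out = bytearray()
    previous = 0
    for doc_id in sorted(set(doc_ids)):
        out += encode_varint(doc_id - previous)
        previous = doc_id
    return bytes(out) if len(out) <= MAX_RAW_SIZE else None

def decode_small_set(buffer):
    """Reverse of encode_small_set: rebuild the sorted list of ids."""
    ids = []
    previous = 0
    value = 0
    shift = 0
    for byte in buffer:
        value |= (byte & 0x7F) << shift
        if byte & 0x80:          # continuation bit set, keep accumulating
            shift += 7
        else:                    # delta complete, turn it back into an id
            previous += value
            ids.append(previous)
            value = 0
            shift = 0
    return ids

encoded = encode_small_set([1, 3])
decoded = decode_small_set(encoded)
```

With the ids 1 and 3 from the example, the buffer holds the value 1 followed by the delta 2, one byte each.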

I mentioned before that this is limited to 32 bytes, right? So what happens when we get beyond that level? This is where things become interesting / complicated.

Instead of using a raw value for the values, we will move to a more complex structure. This is suitable when we have enough values to justify the extra effort. The idea here is to make use of Roaring Bitmaps, which is an efficient way to store bit maps. A bit map is simply an array of bits that are either set or cleared. I’m using them to hold a set of values. In other words, consider a Set<int64>, where the implementation is using a bitmap to figure out if a value exists or not.
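Here is a minimal Python sketch of a set backed by a plain bitmap, to make the idea concrete. The class name `BitmapSet` and the capacity of 65,536 are choices made for this illustration only.

```python
class BitmapSet:
    """A set of small non-negative integers, one bit per possible value."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.bits = bytearray((capacity + 7) // 8)  # every bit starts cleared

    def _check(self, value):
        if not 0 <= value < self.capacity:
            raise ValueError("value outside the bitmap range")

    def add(self, value):
        self._check(value)
        self.bits[value >> 3] |= 1 << (value & 7)

    def remove(self, value):
        self._check(value)
        self.bits[value >> 3] &= ~(1 << (value & 7)) & 0xFF

    def __contains__(self, value):
        self._check(value)
        return bool(self.bits[value >> 3] & (1 << (value & 7)))

doc_ids = BitmapSet(65536)
doc_ids.add(42)
storage_bytes = len(doc_ids.bits)  # paid in full, even with a single bit set
```

Membership checks are a single byte read and a mask, but the storage is allocated for every possible value up front.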

Of course, storing such a set using standard bitmaps would be incredibly wasteful in space, but that is what roaring bitmaps are for. I’ll let you go to the actual site for a description of them, but you can think about them as a sparse map. You only need to hold the bits that you care about. That said, the way roaring bitmaps are usually used, they are using 8KB ranges. That is, each such range is capable of holding 65,536 bits. However, when looking into how I’ll be using this in Voron, I ran into an issue.

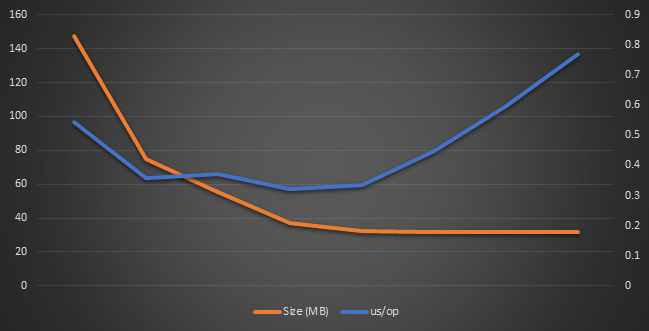

A Voron page is 8KB in size, and since we have to allocate some space for the page header, we can’t easily store an 8KB value there. I thought about using 4KB instead, but that just made things awkward. I’d be losing half a page, after all. After some experimentation, I ended up with each roaring set segment using 256 bytes. This is small, but has several advantages for my needs.

A Voron page has a 64-byte header, which means that I can use 8,128 bytes for real data. Using 256 bytes for the roaring segment size, I also need to account for some metadata per segment, so that turns out to be 260 bytes total. That gives me a total of 30 segments that I can squeeze into a single page. I actually have an additional 10 bytes that I can use per segment, without impacting the total number of values that can be stored in a page.
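The page budget can be worked through with a few lines of arithmetic. This Python sketch only restates the figures given in the text (8KB page, 64-byte header, 260 bytes per segment, 30 segments per page); the constant names are made up for the illustration.

```python
PAGE_SIZE = 8 * 1024        # a Voron page, in bytes
PAGE_HEADER_SIZE = 64       # page header, in bytes
SEGMENT_DATA_SIZE = 256     # roaring segment payload
SEGMENT_TOTAL_SIZE = 260    # payload plus per-segment metadata
SEGMENTS_PER_PAGE = 30      # figure taken from the text

usable = PAGE_SIZE - PAGE_HEADER_SIZE                   # bytes left for data
budget_per_segment = usable // SEGMENTS_PER_PAGE        # room each segment can take
spare_per_segment = budget_per_segment - SEGMENT_TOTAL_SIZE
bits_per_page = SEGMENTS_PER_PAGE * SEGMENT_DATA_SIZE * 8

print(usable, budget_per_segment, spare_per_segment, bits_per_page)
```

With 30 segments sharing 8,128 bytes, each one can grow to 270 bytes before the page holds fewer segments, which is where the spare 10 bytes come from.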

A single segment represents the state of the bits within a range of 2,048 bits. There are other advantages to the small size, though. This is planned as a persistent and mutable data structure. Having a smaller segment size means that I have an easier time modifying just a single segment. Following the roaring bitmap rules, we have three types of segments:

- Small (128 or fewer bits set) – stored as an array of int16 (up to 256 bytes) holding the offsets of set bits in the range.

- Medium (up to 1920 bits set) – stored as a bitmap value (taking 256 bytes).

- Large (more than 1920 bits set) – stored as an array of int16 (up to 256 bytes) holding the offsets of cleared bits in the range.
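The three cases above can be sketched as a single encoding function. This is a Python illustration of the rules as listed, not the actual segment layout in Voron: the little-endian int16 packing, the bit order inside the bitmap, and the names `encode_segment`, `SMALL_MAX_SET_BITS` and `MEDIUM_MAX_SET_BITS` are all assumptions.

```python
import struct

SEGMENT_BITS = 2048         # bits covered by one segment
SEGMENT_BYTES = 256         # 2048 bits as a plain bitmap
SMALL_MAX_SET_BITS = 128    # up to here, list the set bits
MEDIUM_MAX_SET_BITS = 1920  # above here, list the cleared bits

def encode_segment(set_offsets):
    """Pick the segment type by population and return (kind, payload)."""
    offsets = sorted(set(set_offsets))
    if offsets and (offsets[0] < 0 or offsets[-1] >= SEGMENT_BITS):
        raise ValueError("offset outside the segment range")
    count = len(offsets)

    if count <= SMALL_MAX_SET_BITS:
        # Small: an array of int16 offsets of the set bits
        return "small", struct.pack(f"<{count}h", *offsets)

    if count <= MEDIUM_MAX_SET_BITS:
        # Medium: a plain 256-byte bitmap
        bitmap = bytearray(SEGMENT_BYTES)
        for offset in offsets:
            bitmap[offset >> 3] |= 1 << (offset & 7)
        return "medium", bytes(bitmap)

    # Large: an array of int16 offsets of the cleared bits
    present = set(offsets)
    cleared = [o for o in range(SEGMENT_BITS) if o not in present]
    return "large", struct.pack(f"<{len(cleared)}h", *cleared)

kind, payload = encode_segment([5, 17, 2047])
```

Whichever form is chosen, the payload never exceeds 256 bytes: 128 offsets of 2 bytes each at the small limit, a fixed 256-byte bitmap in the middle, and at most 127 cleared offsets in the large case.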

Roaring Bitmaps tend to perform much better than the alternative (even though this is the 8KB version).

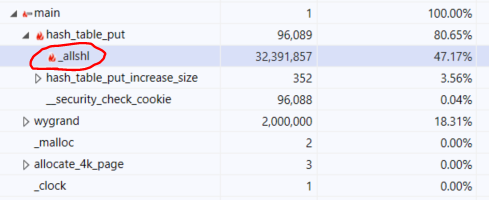

Just having the segments isn’t good enough, though. I need to also have a way to search for a segment. After all, the whole idea is that we’ll have a sparse data structure. This is done using a Fixed Size Tree. Each segment gets a key, made up of the segment range (54 bits) and the number of set bits in the range (10 bits). Together, they make up the key that we can use to look up a particular segment. The value for the Fixed Size Tree is the position of the actual segment in the data file.

You can think about this as:

SortedMap<SegmentKey(Range: 54 bits, NumOfSetBits: 10 bits), FileOffset> Segments;
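One way to picture the key is as a single 64-bit integer. The Python sketch below assumes the range sits in the high 54 bits and the count in the low 10 bits, so that keys sort by range first; that layout is an assumption made for this illustration, as are the helper names. A sorted list stands in for the Fixed Size Tree.

```python
import bisect

COUNT_BITS = 10                       # bits used for the number of set bits
COUNT_MASK = (1 << COUNT_BITS) - 1
RANGE_BITS = 54                       # bits used for the segment range

def make_key(segment_range, set_bits):
    """Pack the range (high bits) and the set-bit count (low bits) into one key."""
    if not 0 <= segment_range < (1 << RANGE_BITS):
        raise ValueError("segment range does not fit in 54 bits")
    if not 0 <= set_bits <= COUNT_MASK:
        raise ValueError("count does not fit in 10 bits")
    return (segment_range << COUNT_BITS) | set_bits

def split_key(key):
    """Return (segment_range, set_bits) for a packed key."""
    return key >> COUNT_BITS, key & COUNT_MASK

def find_segment(sorted_keys, segment_range):
    """Find the key for a range without knowing its count, or None if absent."""
    index = bisect.bisect_left(sorted_keys, segment_range << COUNT_BITS)
    if index < len(sorted_keys) and sorted_keys[index] >> COUNT_BITS == segment_range:
        return sorted_keys[index]
    return None

# Sparse: only ranges 0, 3 and 7 have a segment at all
keys = sorted([make_key(0, 5), make_key(3, 100), make_key(7, 1)])
found = find_segment(keys, 3)
missing = find_segment(keys, 4)
```

Because the range occupies the high bits in this sketch, a lookup can seek to the first key of a range and read the count from whatever key it lands on.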

In other words, the total cost for a segment is actually 270 bytes for the segment itself (also counting currently unused space), as well as 16 bytes for the key/value in the fixed size tree. To hold about 10 million values, we’ll need roughly 2.8 MB or so. On the other hand, if we stored the offsets directly as int64, 10 million values would be around 76MB. The numbers aren’t quite that bad, because for a roaring bitmap we pay per segment, while for a simple array of int64, we’ll pay for each set value.

I think that this post has gone on long enough. You can look at the code, which has all the details (and I would love to get feedback / questions on this), but I now need to face another challenge on this road: tying all of this together so we can create a useful search API. Just having the code I’ve shown at the top of this post is not sufficient. We need to be able to provide more metadata around tying values together. I’ll get to that in another post.