You can watch the full thing here:

In this Webinar, we will discuss one of the upcoming features of RavenDB 3.0. We won't tell you what exactly, but it is a pretty cool one.

Date: Thursday, November 7, 2013

Space is limited.

Reserve your Webinar seat now at:

https://www2.gotomeeting.com/register/507777562

I am doing a big merge, and as you can imagine, some tests are failing. I am currently just trying to get it out the door, and failing tests are really annoying.

I identified the problem, but resolving it would be too hard right now, so I opted for the following solution instead:

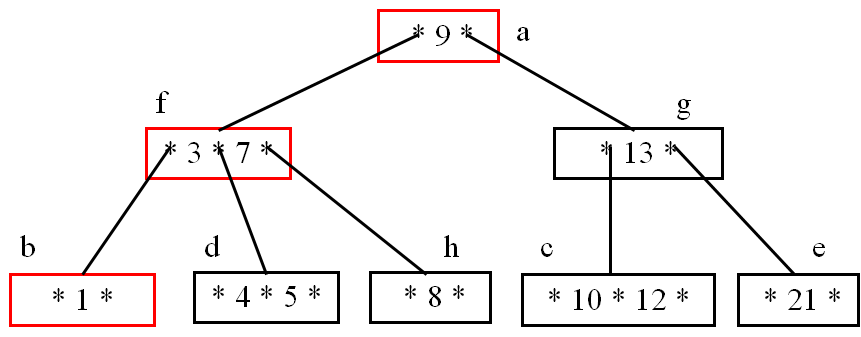

Me & Michael looking at a tricky part of Voron’s rebalancing code:

Michael & Daniel are working on Voron’s free space reuse strategy:

We had an outage that appears to have lasted roughly 12 hours.

The reason it took so long to fix is that it happened after business hours, and while we have production support for our clients, we never hooked up our own websites to our own system. A typical case of the barefoot shoemaker.

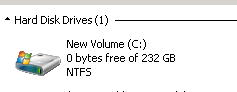

The reason for the outage? Also pretty typical:

The reason for that? We had a remote backup process that put some temp files and didn’t clean them up properly. The growth rate was about 3-6 MB a day, so no one really noticed.

The fix:

All is working now, and I am sorry for the delay in fixing this. We’ll be having some discussion here to see how we can avoid repeating issues like that.

This is another post in the “learning to code” series, mostly because I think that this is an important distinction. When I learn something new, I usually try to construct a mental model of how it works in the simplest terms, then I learn to use it, then I dig into the actual details of how it works.

The key here is that the result of the first part is usually wrong. It is a lie, but it is a good lie, in the sense that it gives me the ability to gloss over details in order to understand how things are really working. It is only when I understand how something is used and under what scenarios that I can really appreciate the actual details.

Good examples of that are things like:

- Memory

- Garbage Collection

- Network

If you ever wrote C, you are familiar with the way we can just allocate memory and use it. The entire system is based on the idea that it looks to you like you have full access to the entire system, and that one piece of memory is pretty much the same as any other. It is a great mental model to go with, even if it is completely wrong. Virtual memory, OS paging and CPU cache lines are all things that really mess with that model when you get down to really understanding how this works. But that is the model that you started with in your head (and probably still use, unless you need to think about high perf code).

The way most people think GC works is probably based on John McCarthy's "Recursive functions of symbolic expressions and their computation by machine" and/or George E. Collins' "A method for overlapping and erasure of lists", both from 1960. While those set the basis for GC in general, there is a lot more going on, and modern GC systems work quite differently. But it is enough to get by.

And networking has this whole 7 layer OSI model:

And very rarely do people need to think beyond the next layer down. We might think that we are viewing a web page, and then break it down to the list of requests made, but we usually stop at that level. It is easy to just consider what happens as magical, but you sometimes need to figure out what is going on in the next layer down, how the packets are actually broken up for transport.

An example of that is when you run into issues with Nagle’s algorithm.
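Nagle’s algorithm holds back small writes on a TCP connection while earlier data is still unacknowledged, which can add latency to chatty request/response protocols. Here is a minimal sketch of opting out of it in Python, using the standard `TCP_NODELAY` socket option. The helper name is made up for illustration, and whether disabling Nagle actually helps depends on your traffic pattern.

```python
import socket

def make_low_latency_socket() -> socket.socket:
    """Create a TCP socket with Nagle's algorithm disabled (hypothetical helper)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # TCP_NODELAY=1 asks the stack to send small writes right away
    # instead of coalescing them while earlier data is unacknowledged.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock

sock = make_low_latency_socket()
nodelay = sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY)
print("TCP_NODELAY enabled:", nodelay != 0)
sock.close()
```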

But trying to learn REST, for example, by focusing on the format of IPv4 vs. IPv6 packets is a non-starter. We need to know just enough to understand what is going on. We are usually good with just thinking about the movement of data as just happening. Of course, later on we would need to revisit that to have a better understanding of the stack. But that is usually not relevant. So we tell ourselves a little lie and move on.

An example of when those lies come back to bite us in the ass is the Fallacies Of Distributed Computing. But for the most part, I am really in favor of dedicating just enough time to figure out a good lie and move on to the real task.

Of course, it has to be a good lie. A really good one was the Ptolemaic model of the universe:

It might be wrong, but it was close enough for the things that you needed doing.

Casey asked me:

Any tips on how to read large codebases - especially for more novice programmers?

As it happens, I think that this is a really great question. I think that part of what makes someone a good developer is the ability to go through a codebase and figure out what is going on. In your career you are going to come into an existing project and be expected to pick up what is going on there. Or, even more nefarious, you may have a project dumped in your lap and be expected to figure it out all on your own.

The worst scenario for that is when you are brought in to replace “those incontinent* bastards” that failed the project, and you are expected to somehow get things working. But other common scenarios include being asked to maintain a codebase written by a person who left the company. And finally, of course, if you are using any Open Source projects, there is a strong likelihood that you’ll be asked “can you extend this to also do this”, or maybe you are just curious.

Especially for novice programmers, I would strongly recommend that you do just that. See the rest of the post for actual details on how I do that, but do go ahead and read code.

I usually approach new codebases with a blind eye toward documentation / external details. I want to start without preconceptions about how things are working. I try to figure out the structure of the project from the on-disk structure. That alone can tell you a lot. I usually try to figure out the architecture from that. Is there a core part of the system? How is it split, etc.
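If you want a quick first pass over the on-disk structure before you start reading, something like the following can help. It is only a sketch of one way to do it, not a tool I am describing above: the function name is hypothetical, and it simply counts file extensions under each top-level directory while skipping hidden entries such as `.git`.

```python
from collections import Counter
from pathlib import Path

def summarize_layout(root: str) -> dict:
    """Map each top-level directory under `root` to a Counter of file extensions."""
    root_path = Path(root)
    summary = {}
    for path in root_path.rglob("*"):
        if not path.is_file():
            continue
        parts = path.relative_to(root_path).parts
        if any(p.startswith(".") for p in parts):  # skip .git and other hidden entries
            continue
        # Files directly under the root are grouped under ".".
        top = parts[0] if len(parts) > 1 else "."
        summary.setdefault(top, Counter())[path.suffix or "(none)"] += 1
    return summary

if __name__ == "__main__":
    for top, counts in sorted(summarize_layout(".").items()):
        print(f"{top:30} {dict(counts.most_common(3))}")
```

A directory with a few hundred source files and another with a handful usually tells you where the core of the system lives.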

Then I find the lowest level part of the code and just start reading it. Usually in blind alphabetical order. Find a file, read it all the way, next file, etc. I try to keep notes (you can see some examples of those in the blog) about how things are hooked together, but mostly, I am trying to get a feel for the code. There is a lot of code that is usually part of the project style, it can be things like precondition checks, logging, error handling, etc. Those things you can learn to recognize early and then can usually just skip them to read the interesting bits.

I usually don’t try to read too deeply at this point, I am trying to get a feeling about the scope of things. This file is responsible for X and does so by calling Y & Z, but it isn’t really important to me to know every little detail at that point. Oh, and I keep notes, a lot of notes. Usually they aren’t really notes but more a list of questions, which I fill / answer as I understand more. After going through the lowest level I can find, I usually try to do a vertical slice. Again, this is mostly so I can figure out how things are laid out and working. That means that the next time that I am going to go through this, I’ll have a better idea about the structure / architecture of this.

Next, I’ll usually head to the interesting bits. The part of the system that makes it interesting to me rather than something that is off the shelf.

That is pretty much it, there isn’t much to it. I am pretty much just going over the code and trying to first find the shape & structure, then I dive into the unique parts and figure out how they are made.

In the meantime, especially if this is hard, I’ll try to go over any documentation that exists. At this point, I should have a much better idea about how things are set up, so I’ll be able to go through the docs a lot more quickly.

* I started writing incompetent, but this is funnier.

On the 5th of November, I am going to be doing a talk at Skills Matter London.

In this talk I am going to talk about LevelDB, LMDB and storage internals. I am going to cover how we built Voron, the next generation storage engine for RavenDB, the design decisions that we made and the things we took from other codebases that we looked at.

Expect a low level talk filled with details about how to implement ACID and transactions, and how to optimize reads and writes. Pitfalls in building storage engines, and a lot of details that come from several years of research into this area.

Yes, it will be recorded, but you probably want to come anyway. It is a talk I’ve been dying to give for the last 3 months, and I got some goodies to share.

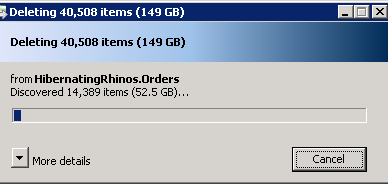

As I mentioned, there is one scenario which produces worse & worse performance for LMDB: random writes. The larger the data set, the higher the cost. This is due to the nature of LMDB as a Copy On Write database. Here is a simple explanation of what the cost is:

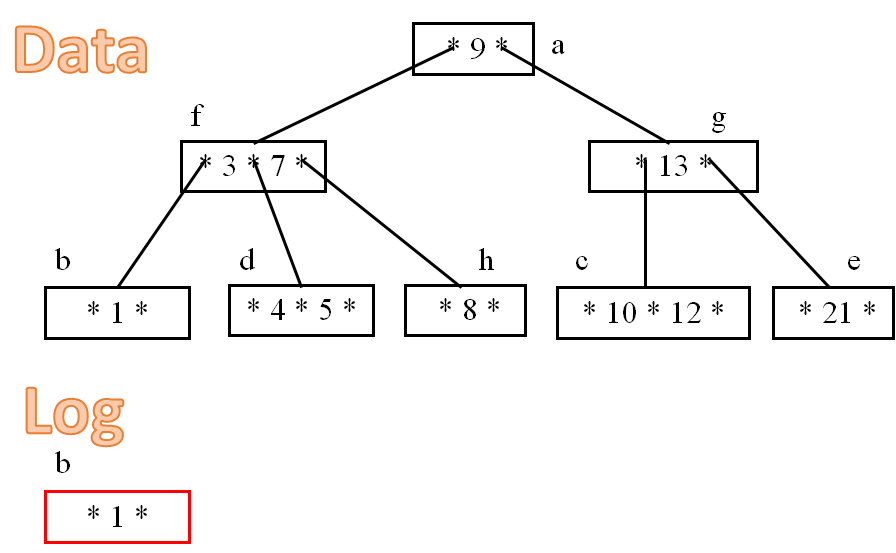

We want to update the value in the red square.

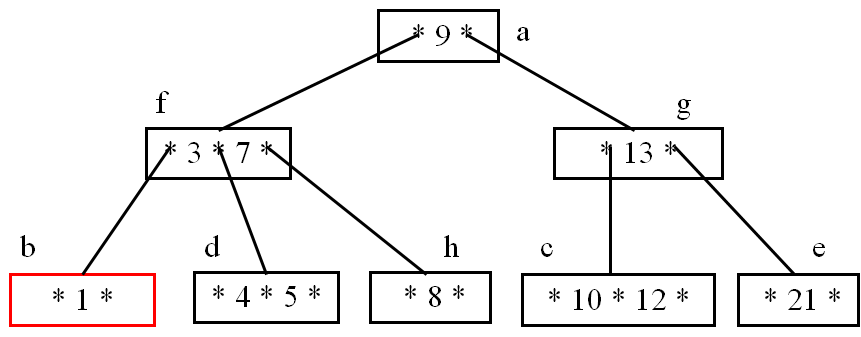

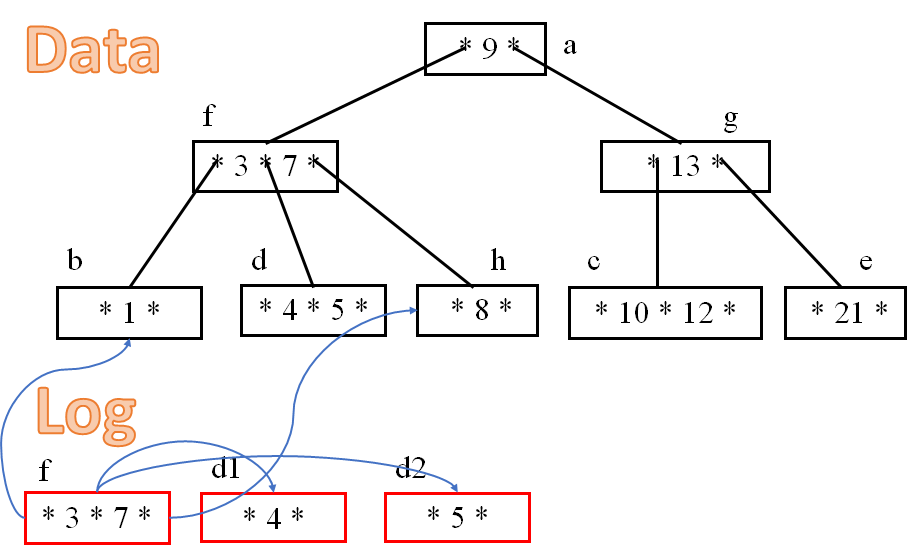

Because the tree is Copy On Write, we need to modify the following pages:

And as the tree goes deeper, it becomes worse. Naturally, one consequence of doing random writes is tree fragmentation. In my tests, on a small 1 million record dataset, we would get a cost of over a hundred pages (roughly 4 MB) to update a hundred 16 + 100 byte values. Just to clarify, those tests were done on the LMDB code, not on Voron. So I am pretty sure that this isn’t my problem. Indeed, I confirmed that this is a general problem with the COW approach.
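To get a feel for why random writes hurt, here is a rough back-of-the-envelope model of the cost. This is not LMDB or Voron code, just a sketch under stated assumptions: a perfectly packed tree, records addressed by their position in sorted order, and made-up values for `leaf_capacity` and `branch_fanout`. Every updated record forces a copy of its leaf and of every branch page up to the root, and pages shared by several updates in the same transaction are copied only once.

```python
import random

def cow_pages_touched(num_records, keys, leaf_capacity, branch_fanout):
    """Count the distinct pages a copy-on-write tree rewrites in one transaction.

    `keys` are record positions in sorted order; record i lives in leaf
    i // leaf_capacity. All sizes are illustrative assumptions.
    """
    touched = set()
    level = 0
    nodes_at_level = -(-num_records // leaf_capacity)  # ceiling division
    indexes = {k // leaf_capacity for k in keys}
    while True:
        touched.update((level, i) for i in indexes)
        if nodes_at_level == 1:  # we just counted the root
            break
        # Move one level up: each parent covers `branch_fanout` children.
        indexes = {i // branch_fanout for i in indexes}
        nodes_at_level = -(-nodes_at_level // branch_fanout)
        level += 1
    return len(touched)

random.seed(0)
random_keys = random.sample(range(1_000_000), 100)
sequential_keys = range(100)
for name, keys in (("random", random_keys), ("sequential", sequential_keys)):
    pages = cow_pages_touched(1_000_000, keys, leaf_capacity=32, branch_fanout=128)
    print(f"{name:10} updates: {pages} pages rewritten")
```

In this model, sequential updates share almost all of their leaves and branches, while random updates share little below the top of the tree, which is where the extra pages come from.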

I accepted that, and the benefits that we get are significant enough to accept that. But… I never really was complete in my acceptance. I really wanted a better way.

And after adding Write Ahead Log to Voron, I started thinking. Is there a reason to continue this? We are already dealing with writing to the log. So why modify the entire tree?

Here is what it looks like:

Now, when you are looking at the tree, and you ask for the B page, you’ll get the one in the log. However, older transactions would get to see the B page in the data file, not the one in the log. So we maintain MVCC.
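The lookup rule can be sketched in a few lines. This is a toy model and not Voron’s actual code: the class, its fields and the use of plain dictionaries for the data file and the log are all assumptions made for illustration. A reader carries the id of the last transaction committed when it started, and sees the newest log copy of a page that is not newer than that; otherwise it falls back to the data file.

```python
from dataclasses import dataclass, field

@dataclass
class PagedStore:
    """Toy model: a data file plus a log holding newer versions of pages."""
    data_file: dict = field(default_factory=dict)  # page number -> contents
    log: dict = field(default_factory=dict)        # page number -> [(txid, contents), ...]
    last_committed: int = 0

    def commit(self, pages: dict) -> int:
        """Append the modified pages to the log; the data file is not touched."""
        self.last_committed += 1
        for page_no, contents in pages.items():
            self.log.setdefault(page_no, []).append((self.last_committed, contents))
        return self.last_committed

    def read(self, page_no: int, snapshot_tx: int) -> bytes:
        """Return the page as seen by a reader that started at `snapshot_tx`."""
        for txid, contents in reversed(self.log.get(page_no, [])):
            if txid <= snapshot_tx:
                return contents            # newest log version visible to this reader
        return self.data_file[page_no]     # nothing visible in the log: use the data file

store = PagedStore(data_file={2: b"B v1"})
old_reader = store.last_committed   # snapshot taken before the update
store.commit({2: b"B v2"})          # only page B is written, and only to the log
new_reader = store.last_committed

print(store.read(2, old_reader))    # b'B v1', from the data file
print(store.read(2, new_reader))    # b'B v2', from the log
```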

This complicates matters somewhat because we need to sync the data file flushing with existing transactions (we can’t flush the file until all transactions have progressed to the point where they look at the new pages in the log), but that is something that we have to do anyway. And that would cut down the amount of writes that we have to do by a factor of ten, according to my early estimation. Hell, this should improve write performance on sequential writes.

For that matter, look what happens when we need to split page D:

Here we need to touch the tree, but only need to touch the immediate root. Which, again, gives us a lot of benefits in the size we need to touch.

We had an artist come to our offices yesterday and do some work on the wall.

You can see the entire process here, but the end result is: