One of the hardest things that we did in RavenDB 4.0 would probably go completely unnoticed by users. We completely re-wrote how RavenDB is processing map/reduce queries. One of my popular blog posts is still a Visual Explanation to Map/Reduce, and it still does a pretty good job of explaining what map/reduce is.

One of the hardest things that we did in RavenDB 4.0 would probably go completely unnoticed by users. We completely re-wrote how RavenDB is processing map/reduce queries. One of my popular blog posts is still a Visual Explanation to Map/Reduce, and it still does a pretty good job of explaining what map/reduce is.

The map/reduce code in RavenDB 3.x is one of the more fragile things that we have, require you to maintain in your head several completely different states that a particular reduction can be in and how they transition between states. Currently, there are probably two guys* who still understand how it works and one guy that is still able to find bugs in the implementation. It is also not as fast as we wished it would be.

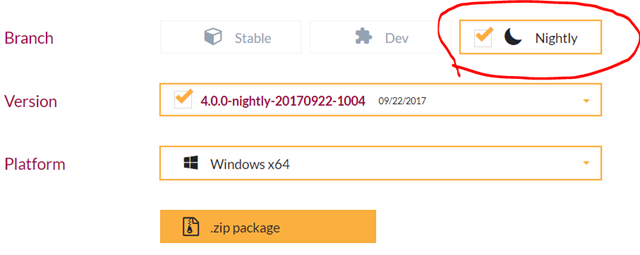

So with RavenDB 4.0 we set out to build it from scratch, based in no small part on the fact that we had also written our storage engine for 4.0 and was able to take full advantage of that. You can read about the early design in this blog post, but I’m going to do a quick recap and explain how it works now.

The first stage in map/reduce is… well, the map. We run over the documents and extract the key portions we’ll need for the next part. We then immediately apply the reduce on each of the results independently. This give us the final map/reduce results for a single document. More to the point, this also tells us what is the reduce key for the results is. The reduce key is the value that the index grouped on.

We store all of the items with the same reduce key together. And here is where its get interesting. Up until a certain point, we just store all of the values for a particular reduce key as an embedded value inside a B+Tree. That means that whenever any of the values changes, we can add that value to the appropriate location and reduce all the matching values in one go. This works quite well until the total size of all the values exceed about 4KB or so.

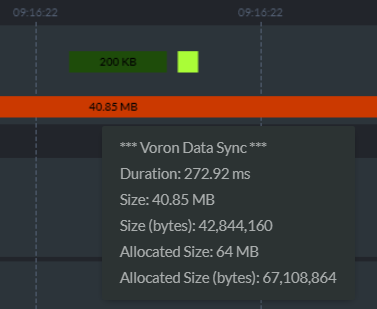

At this point, we can’t store the entire thing as an embedded value and we move all the values for that reduce key to its own dedicated B+Tree. This means that we start with a single 8KB page and fill it up, then split it, and so on. But there is a catch. The results of a map/reduce operation tend to be extremely similar to one another. At a minimum, they share the same properties and the same reduce key. That means that we would end up storing a lot of duplicate information. To resolve that, we also apply recursive compression. Whenever a page nears 8KB in size, we will compress all the results stored in that page as a single unit. This tend to have great compression rate and can allow us to store up to 64KB of uncompressed data in a single page.

When adding items to a map/reduce index, we apply an optimization so it looks like:

results = reduce(results, newResults);

Basically, we can utilize the recursive nature of reduce to optimize things for the append only path.

When you delete or update documents and results change or are removed, things are more complex. We handle that by running a re-reduce on the results. Now, as long as the number of results is small (this depend on the size of your data, but typically up to a thousand or so) we’ll just run the reduce over the entire result set. Because the data is always held in a single location, this means that it is extremely efficient in terms of memory access and the tradeoff between computation and storage leans heavily to the size of just recomputing things from scratch.

When we have too many results (the total uncompressed size exceeds 64KB) we start splitting the B+Tree and adding a level to the three. At this point, the cost of updating a value is now the cost of updating a leaf page and the reduce operation on the root page. When we have more data still, we will get yet another level, and so on.

The (rough) numbers are:

- Up to 64KB (roughly 1000 results) – 1 reduce for the entire dataset

- Up to 16 MB – 2 reduces (1 for up to 1000 results, 1 for up to 254 results)

- Up to 4 GB – 3 reduces (1 for up to 1000 results, 2 for up to 254 results each)

- Up to 1 TB - 4 reduces (1 for up to 1000 results, 3 for up to 254 results each)

- I think you get how it works now, right? The next level up is 1 to 248 TB and will requite 5 reduces.

These numbers is if your reduce data is very small, in the order of a few dozen byes. If you have large data, this means that the tree will expand faster, and you’ll get less reduces at the first level.

Note that at the first level, if there is only an addition (new document, basically), we can process that as a single operation between two values and then proceed upward as the depth of the tree requires.There are also optimizations in place if we have multiple updates to the same reduce key, in that case, we can first apply all the updates, then do the reduce once for all of them in one shot.

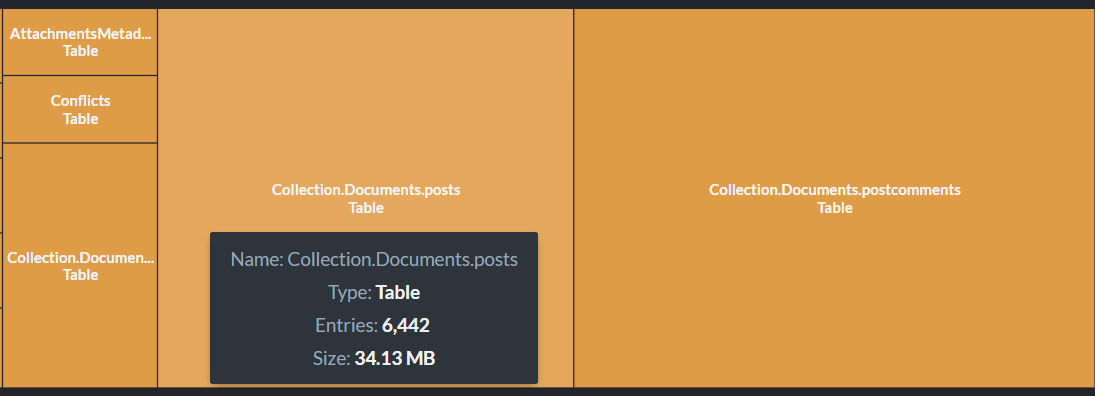

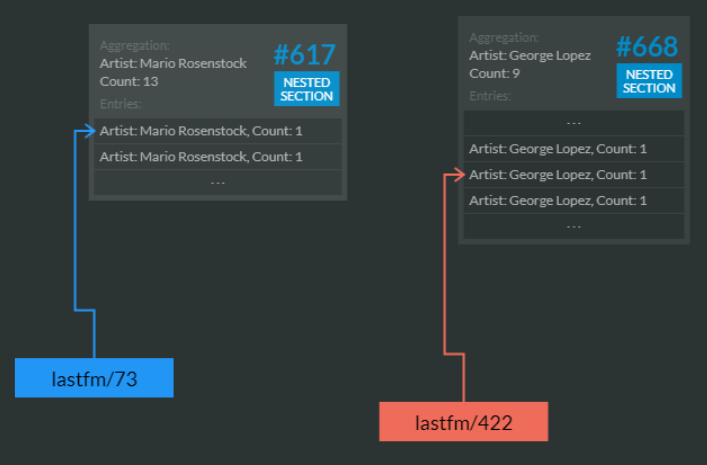

And all of that is completely invisible to the users, unless you want to peek inside, which is possible using the Map/Reduce visualizer:

This can give you insight deep into the guts of how RavenDB is handling map/reduce operations.

The current status is that map/reduce indexing are actually faster than normal indexes, because they are almost all our code, while a large portion of the normal indexing cost is with Lucene.

* That is an exaggeration, there is one guy that know how it works. Okay, okay, I’ll admit that we can dive into the code and figure out what is going on, but it takes quite a bit of time if there is a significant issue there.

I mentioned that RavenDB 4.0 uses x509 client certificate for authentication, right? As it turns out, this can create some issues for us when we need to do more than just blind routing to the right location.

I mentioned that RavenDB 4.0 uses x509 client certificate for authentication, right? As it turns out, this can create some issues for us when we need to do more than just blind routing to the right location.