The design of RavenDB 4.0: You can’t see the map/reduce from all the trees

Map/Reduce is a core part of RavenDB, one of the earliest features that we implemented and something that we have worked to improve many times. You can read my original blog post about it. In the current codebase, Map/Reduce is also one of the scariest pieces of code, and one of the most fragile. The good thing is that we have a really high number of tests around it. The sad thing is that it takes a long time to make any modification there stick without breaking something else.

It is a complex topic from the get-go, and having a performant version of it was not trivial, so put all together, this is one of the more complex pieces of code in RavenDB. I’m not going to go into the details of how this works now; it is that complex.

That complexity really bugged me. This is an area of the code that only a few of us had approached, and usually with various levels of dread. We spent so much time simplifying things in RavenDB 4.0 that I couldn’t really abide the concept of having to bring this complexity over and having to live with it. But we couldn’t find a better way to do it.

Until I realized that I was thinking about this at totally the wrong level. The way to handle that was to take the level of abstraction down, way down. Again, being able to control the storage at a very low level helps a lot.

I have talked before about B+Trees, and this time, we are going to use their structure directly. Here is how it works. Let us assume that we have the following map/reduce index:
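As a rough Python stand-in for such an index (RavenDB indexes are not written this way, and the field names `lines`, `product`, `quantity`, and `price` are assumptions made for illustration), the map and reduce steps could look like this:

```python
from collections import defaultdict

def map_order(order):
    """Map step: emit one entry per line item of an order."""
    for line in order["lines"]:
        yield {"product": line["product"],
               "total": line["quantity"] * line["price"]}

def reduce_entries(entries):
    """Reduce step: group by product (the reduce key) and sum the totals."""
    totals = defaultdict(int)
    for entry in entries:
        totals[entry["product"]] += entry["total"]
    # The output has the same shape as the input, so it can be reduced again.
    return [{"product": p, "total": t} for p, t in sorted(totals.items())]

# Hypothetical sample data
orders = [
    {"id": "orders/1", "lines": [
        {"product": "products/17", "quantity": 2, "price": 10},
        {"product": "products/10", "quantity": 1, "price": 5}]},
    {"id": "orders/2", "lines": [
        {"product": "products/17", "quantity": 1, "price": 10}]},
]

mapped = [entry for order in orders for entry in map_order(order)]
print(reduce_entries(mapped))
# [{'product': 'products/10', 'total': 5}, {'product': 'products/17', 'total': 30}]
```

Because the reduce output has the same shape as its input, partial results can be reduced again, which is the property the page-by-page approach in the rest of this post relies on.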

This operates on orders, and gives us the total purchases per product. Now, for the actual implementation. We first run the map function over a set of orders, giving us the mapped results, which we’ll store in a B+Tree. A B+Tree is a key/value structure, and previously we used composite keys made of the reduce key, the document key, and a whole bunch of other stuff. That led to a whole lot of complications.

Now, we are going to have a separate tree per reduce key. Confusing? Let’s see if I can explain. Each reduce key (a reduce key is the thing that you group by; in this case, that is the product id) is going to have its own dedicated tree. The content of that tree is going to be the document id as the key, and the mapped result for that particular reduce key as the value. So far, that isn’t really impressive. We changed things around: instead of having a single tree for all mapped results, we are going to have a tree per reduce key.
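A minimal sketch of that layout, using plain Python dicts in place of real B+Trees. The function name `store_mapped_results` and the `product`/`total` entry shape are hypothetical, chosen only to show the key/value arrangement:

```python
def store_mapped_results(trees, doc_id, mapped_entries):
    """File a document's mapped entries into one "tree" per reduce key.

    trees: reduce key -> {document id -> mapped result}
    Returns the set of reduce keys whose tree was modified.
    """
    touched = set()
    # Remove whatever an earlier version of this document contributed.
    for reduce_key, tree in trees.items():
        if tree.pop(doc_id, None) is not None:
            touched.add(reduce_key)
    # Store one value per (reduce key, document id), merging duplicate lines.
    for entry in mapped_entries:
        tree = trees.setdefault(entry["product"], {})
        previous = tree.get(doc_id, {"total": 0})["total"]
        tree[doc_id] = {"product": entry["product"],
                        "total": previous + entry["total"]}
        touched.add(entry["product"])
    return touched

trees = {}
store_mapped_results(trees, "orders/1", [
    {"product": "products/17", "total": 20},
    {"product": "products/10", "total": 5}])
store_mapped_results(trees, "orders/2", [
    {"product": "products/17", "total": 10}])
print(sorted(trees["products/17"]))  # ['orders/1', 'orders/2']
```

Updating a document only touches the trees of the reduce keys it used to map to and the ones it maps to now, so the set of reduce keys that need re-reducing falls out of the write itself.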

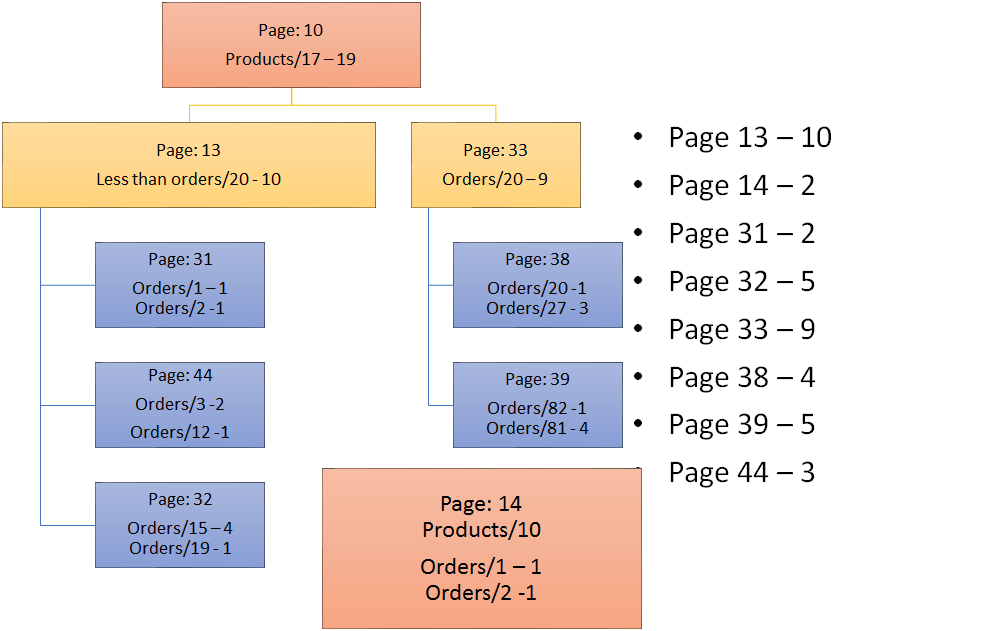

But here is where it gets interesting. B+Trees are… well, trees. That means that they are hierarchical in nature. What we did was ask the tree to let us know about all the changed pages. Consider the image below, which shows the status of the database after indexing a few orders, which have line items for products/17 and products/10.

We are now going to update orders/2. So the next thing that we need to do is to run the map over the updated order, giving us new entries for products/17 and products/10, each of them in a different tree. Because we can ask the tree what pages have changed, we can now do the following:

- For each changed page:
  - Calculate the new value of the page.
  - Mark the parent page as changed.
- Repeat until there are no changed pages.

Let us see this in detail. In Page 14, which is the (small) tree for products/10, we don’t really have much to do. We just need to run the reduce over all the entries in the page, and we have the final result. Things are more interesting with products/17.

Here, we updated Page 31 during the map process. When we get to the reduce, we discover that, and that means that we need to re-reduce Page 31. But that isn’t the end. Now we need to also update things upward. In our case, we need to update Page 13. You might have noticed the list on the side; it holds the computed reduce value for each page. When we are done updating Page 31, we go to its parent, Page 13. We get the computed values for pages 31, 44, and 32 and reduce those, giving us the final value for Page 13. At which point, we go up yet again, and reduce Page 13 and Page 33 together, resulting in the final value.
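The changed-page loop, applied to the products/17 tree, can be sketched as follows. This is a toy model under stated assumptions, not Voron's actual page API: the page numbers 31, 44, 32, 13, and 33 come from the text, while the root's number, the stored values, and every function name are invented for illustration.

```python
ROOT = 0  # hypothetical page number for the root; the text does not name it

# child page -> parent page, following the layout described above
parent = {31: 13, 44: 13, 32: 13, 13: ROOT, 33: ROOT}

# leaf page -> mapped totals stored in that page (invented numbers)
leaf_entries = {31: [10, 20], 44: [5, 5], 32: [40]}

# cached reduce value per page (the "list on the side"), before the update
cached = {31: 30, 44: 10, 32: 40, 13: 80, 33: 120, ROOT: 200}

def reduce_values(values):
    """The reduce function; a plain sum stands in for the real index."""
    return sum(values)

def children_of(page):
    return [child for child, p in parent.items() if p == page]

def depth(page):
    steps = 0
    while page in parent:
        page = parent[page]
        steps += 1
    return steps

def re_reduce(changed_pages):
    """Recompute changed pages bottom-up, marking each parent as changed."""
    pending = set(changed_pages)
    recomputed = []
    while pending:
        page = max(pending, key=depth)  # deepest first: children before parents
        pending.remove(page)
        if page in leaf_entries:
            cached[page] = reduce_values(leaf_entries[page])
        else:
            # Unchanged siblings contribute their cached value as-is.
            cached[page] = reduce_values(cached[c] for c in children_of(page))
        recomputed.append(page)
        if page in parent:
            pending.add(parent[page])
    return recomputed

leaf_entries[31] = [10, 25]      # the map step rewrote an entry in Page 31
print(re_reduce({31}))           # [31, 13, 0]
print(cached[13], cached[ROOT])  # 85 205
```

Pages 44, 32, and 33 are never re-read entry by entry; only their cached values take part, which is what keeps the work proportional to the depth of the tree rather than to its size.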

All in all, this is pretty simple to explain, was very easy to implement, and is going to handle the complexities of map/reduce in a dramatically better fashion.

In the example above, I’m showing it working with very few entries per page. In practice, we are usually talking about roughly 200 entries per page, so once a reduce key has more than 200 entries, we start reducing in multiple steps.

Our go-to example for map/reduce was the US census. 310 million people, more or less, and we want to build a count of how many people per state. That gives us California, with roughly 40 million people in it. Adding a new person to California using this system will result in a B+Tree that has a depth of 5, and re-reducing after an update will take less than a thousand operations to re-compute it. And that is for an update / delete operation.

If we have a new value, we can skip the whole thing, and re-update the map/reduce in about 6 operations. And the entire codebase is easy to read, and we expect it to be much faster as well.
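A back-of-the-envelope check of those two figures. The cost model here is an assumption, not something the post spells out: an update or delete is taken to re-reduce one page per level, and a brand-new value is taken to be folded into one cached value per level plus the new entry itself.

```python
ENTRIES_PER_PAGE = 200  # "roughly 200 entries per page", from the text
TREE_DEPTH = 5          # depth quoted for the California tree

def re_reduce_cost(depth, entries_per_page):
    """Assumed upper bound for update/delete: one full page per level."""
    return depth * entries_per_page

def new_value_cost(depth):
    """Assumed cost for a new value: one fold per level, plus the entry."""
    return depth + 1

print(re_reduce_cost(TREE_DEPTH, ENTRIES_PER_PAGE))  # 1000
print(new_value_cost(TREE_DEPTH))                    # 6
```

Since pages are rarely completely full, the update bound of 5 × 200 = 1000 is consistent with "less than a thousand operations", and 5 + 1 matches "about 6".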

More posts in "The design of RavenDB 4.0" series:

- (26 May 2016) The client side

- (24 May 2016) Replication from server side

- (20 May 2016) Getting RavenDB running on Linux

- (18 May 2016) The cost of Load Document in indexing

- (16 May 2016) You can’t see the map/reduce from all the trees

- (12 May 2016) Separation of indexes and documents

- (10 May 2016) Voron has a one track mind

- (05 May 2016) Physically segregating collections

- (03 May 2016) Making Lucene reliable

- (28 Apr 2016) The implications of the blittable format

- (26 Apr 2016) Voron takes flight

- (22 Apr 2016) Over the wire protocol

- (20 Apr 2016) We already got stuff out there

- (18 Apr 2016) The general idea

Comments

Fantastic news. I dealt with a similar situation recently, in which approaching the problem from a new perspective improved performance by roughly 100x (that's not a typo), and nearly flattened the workload to give us a very consistent load on the servers.

And this comes with a best practice: don't do map/reduce if there are only a few entries per reduce key, because if you do, you will end up with lots of almost empty pages.

Jesus, actually, no. What we'll probably end up doing is putting the tiny map/reduce values together in a single page.
