The design of RavenDB 4.0: Getting RavenDB running on Linux

We have been trying to get RavenDB to run on Linux for over 4 years. A large part of our motivation for building Voron was that it would also allow us to run natively on Linux, and free us from dependencies on specific Windows OS versions.

The attempt was actually made several times, and Voron has been running successfully on Linux for the past 2 years, but Mono was never really good enough for our needs. My hypothesis is that if we had been working with it from day one, it would have been sort of possible. But trying to port a non-trivial codebase (and one quite a bit more complex and demanding than your run-of-the-mill business app) to Mono after the fact was just a no-go. There was too much that we did in ways that Mono just couldn’t handle, from GC corruption to plain “no one has ever called this method” bugs. We hired a full-time developer to handle the port to Linux, and after about six months of effort, all we had to show for it was !@&#! and stuff that would randomly crash in the Mono VM.

The CoreCLR changed things dramatically. It still takes a lot of work, but now it isn’t about fighting tooth and nail to get anything working. My issues with the CoreCLR are primarily in the area of “I wanna have more of the goodies”. We had our share of porting issues. Some of them were expected, such as a very different I/O subsystem and different behaviors. Others were just weird (you can’t convince me that the Out Of Memory Killer or the fsync dance for creating files is the way things are supposed to be), but a lot of it was obvious (case-sensitive paths, / vs \, etc.). Still, pretty much all of this was as it was supposed to be; we would have seen the same stuff if we were working in C.
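
The post doesn’t spell out the fsync dance. Assuming it refers to the usual POSIX rule that a newly created file is only crash-safe once both the file and its parent directory have been fsync’ed, a minimal C sketch could look like this. `durable_create` is a hypothetical helper written for illustration only; RavenDB itself is written in C# and this is not its code.

```c
#define _POSIX_C_SOURCE 200809L
#include <fcntl.h>
#include <libgen.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical helper: durably create `path` containing `data`.
   Returns 0 on success, -1 on failure. */
int durable_create(const char *path, const void *data, size_t len)
{
    int fd = open(path, O_WRONLY | O_CREAT | O_TRUNC, 0644);
    if (fd < 0) return -1;

    /* Write the whole buffer, handling short writes. */
    const char *p = data;
    size_t left = len;
    while (left > 0) {
        ssize_t n = write(fd, p, left);
        if (n <= 0) { close(fd); return -1; }
        p += n;
        left -= (size_t)n;
    }

    /* Step 1: flush the file's own data and metadata to disk. */
    if (fsync(fd) != 0) { close(fd); return -1; }
    if (close(fd) != 0) return -1;

    /* Step 2: flush the parent directory, so the new directory entry
       survives a crash too. dirname() may modify its argument, so copy. */
    char *copy = strdup(path);
    if (!copy) return -1;
    int dfd = open(dirname(copy), O_RDONLY | O_DIRECTORY);
    free(copy);
    if (dfd < 0) return -1;
    int rc = fsync(dfd);
    close(dfd);
    return rc;
}
```

The second fsync is the surprising part: without it, the file’s data may be on disk while the directory entry pointing at it is lost after a crash.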

So right now, we have RavenDB 4.0 running on:

- Windows x64 arch

- Linux x64 arch

We are also working on getting it running on Windows and Linux in 32-bit mode, and we hope to be able to run it on ARM (a lot of that depends on how fast the CoreCLR is ported to ARM, which seems to be moving along quite nicely).

While there is still a lot to be done, let me take you on a tour of what we already have.

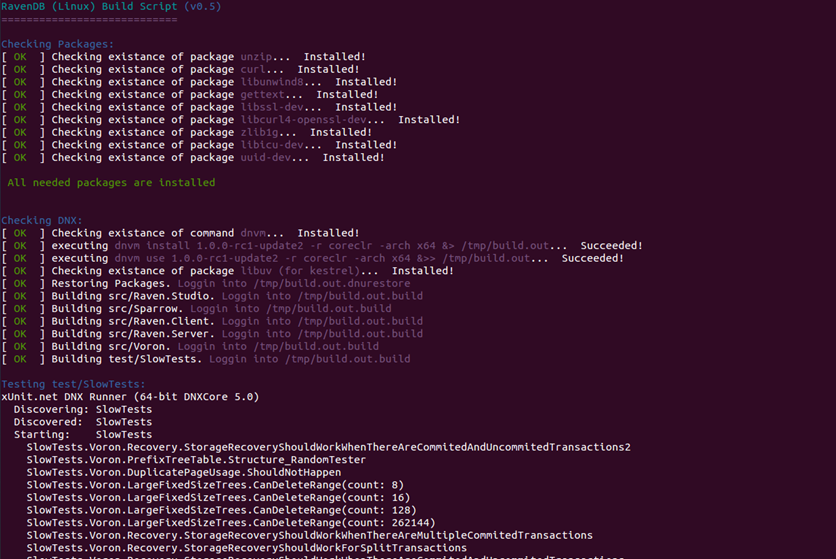

First, the setup instructions:

- git clone https://github.com/ayende/ravendb.git

- git checkout v4.0

- ./build.sh

This should take care of all the dependencies (including installing CoreCLR if needed), and run all the tests.

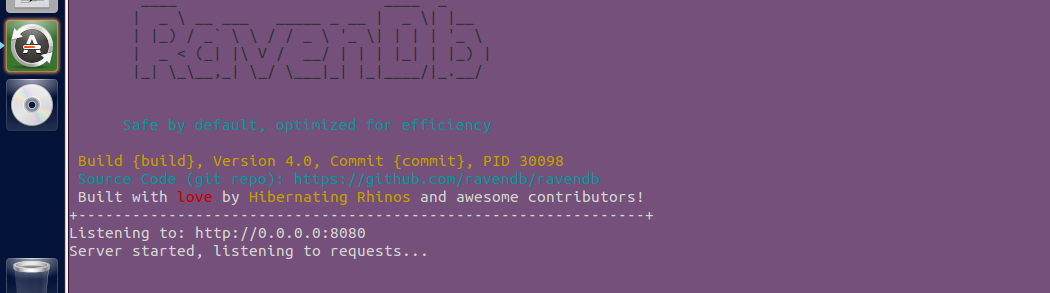

You can now run the dnx command (or the dotnet CLI, as soon as that becomes stable enough for us to use), which will give you RavenDB on Linux.

By and large, RavenDB on Windows and Linux behaves pretty much in the same manner. But there are some differences.

I mentioned the out of memory killer’s nonsensical behavior, right? Instead of relying on swap files and the inherent unreliability of memory allocations on Linux, we create temporary files and map them as our scratch buffers, to avoid the OS suddenly deciding that we are nasty memory hogs and that it needs some bacon. Windows has features that allow the OS to tell applications that it is about to run out of memory, and we can respond to that. On Linux, the OS goes on a killing spree, so we need to monitor memory usage actively and take steps accordingly.
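
As a rough illustration of the scratch-buffer idea (a sketch of our reading of it, not RavenDB’s actual C# code), here is how a buffer backed by a temporary file can be set up in C with `mkstemp`, `ftruncate` and a `MAP_SHARED` mapping. The `scratch_buffer` type and the `scratch_open`/`scratch_close` functions are hypothetical names, and the caller is assumed to supply a writable path template ending in `XXXXXX`.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdlib.h>
#include <sys/mman.h>
#include <unistd.h>

/* Hypothetical type: a scratch buffer backed by a temporary file
   instead of anonymous memory. */
typedef struct {
    void  *base;
    size_t size;
} scratch_buffer;

/* template_path must be writable and end in "XXXXXX" (mkstemp convention).
   Returns 0 on success, -1 on failure. */
int scratch_open(scratch_buffer *sb, char *template_path, size_t size)
{
    int fd = mkstemp(template_path);
    if (fd < 0) return -1;

    /* Unlink right away: the file stays alive only while it is open
       or mapped, so nothing is left behind if the process dies. */
    unlink(template_path);

    /* Set the file size; the file may be sparse until pages are written. */
    if (ftruncate(fd, (off_t)size) != 0) { close(fd); return -1; }

    /* MAP_SHARED: writes go to the page cache for this file. */
    void *p = mmap(NULL, size, PROT_READ | PROT_WRITE, MAP_SHARED, fd, 0);
    close(fd); /* the mapping keeps the file alive */
    if (p == MAP_FAILED) return -1;

    sb->base = p;
    sb->size = size;
    return 0;
}

void scratch_close(scratch_buffer *sb)
{
    munmap(sb->base, sb->size);
    sb->base = NULL;
    sb->size = 0;
}
```

Because the pages are backed by a file rather than by anonymous memory, the kernel can write dirty pages back to that file and evict them under memory pressure, instead of needing swap space for them.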

Even so, administrators are expected to set vm.overcommit_memory and vm.oom-kill to proper values (2 and 0, respectively, are the values we are currently recommending, but that might change).
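
A startup check for the first of these settings could be as simple as reading the value back from `/proc/sys/vm/overcommit_memory`. The C sketch below assumes that printing a warning (rather than refusing to start) is the desired reaction, and it checks only `vm.overcommit_memory`. `read_sysctl_long`, `overcommit_is_strict` and `warn_if_overcommit_not_strict` are hypothetical helpers; the path is a parameter so the check can be exercised against any file.

```c
#include <stdio.h>

/* Hypothetical helper: read an integer sysctl value from a /proc/sys file.
   Returns the value, or -1 if the file cannot be read or parsed. */
long read_sysctl_long(const char *path)
{
    FILE *f = fopen(path, "r");
    if (!f) return -1;
    long value;
    int ok = fscanf(f, "%ld", &value);
    fclose(f);
    return ok == 1 ? value : -1;
}

/* Returns 1 if the file at `path` holds 2 (strict overcommit accounting). */
int overcommit_is_strict(const char *path)
{
    return read_sysctl_long(path) == 2;
}

/* Print a warning to stderr if vm.overcommit_memory is not 2. */
void warn_if_overcommit_not_strict(void)
{
    const char *path = "/proc/sys/vm/overcommit_memory";
    if (!overcommit_is_strict(path))
        fprintf(stderr, "warning: %s is not set to 2\n", path);
}
```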

WebSocket client handling is also currently not available on the CoreCLR for Linux. We have our own trivial implementation based on TcpClient, which currently supports only plain HTTP. We’ll replace it with the real implementation as soon as the functionality becomes available on Linux.
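
The post doesn’t show the TcpClient-based implementation. To give a sense of what a minimal WebSocket client has to produce once the HTTP upgrade handshake is done, here is a C sketch that encodes a single masked text frame following the RFC 6455 framing rules. `ws_encode_text_frame` is a hypothetical name, and the sketch only handles unfragmented payloads of at most 65535 bytes.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical helper: encode one unfragmented client-to-server text frame
   (RFC 6455). Client frames must be masked. Returns the number of bytes
   written to `out`, or 0 if the payload is too large for this sketch or
   `out_cap` is too small. */
size_t ws_encode_text_frame(const uint8_t *payload, size_t len,
                            const uint8_t mask[4],
                            uint8_t *out, size_t out_cap)
{
    if (len > 0xFFFF) return 0;                /* 64-bit lengths not handled */
    size_t header = (len < 126) ? 2 : 4;
    if (out_cap < header + 4 + len) return 0;  /* header + mask + payload */

    size_t i = 0;
    out[i++] = 0x80 | 0x1;                     /* FIN bit set, opcode 0x1 = text */
    if (len < 126) {
        out[i++] = 0x80 | (uint8_t)len;        /* MASK bit + 7-bit length */
    } else {
        out[i++] = 0x80 | 126;                 /* MASK bit + "16-bit length follows" */
        out[i++] = (uint8_t)(len >> 8);        /* length in network byte order */
        out[i++] = (uint8_t)(len & 0xFF);
    }

    memcpy(out + i, mask, 4);                  /* the 4-byte masking key */
    i += 4;

    for (size_t j = 0; j < len; j++)           /* XOR payload with the key */
        out[i++] = payload[j] ^ mask[j % 4];
    return i;
}
```

The mask is a parameter only to keep the example deterministic; RFC 6455 requires clients to mask every frame with a fresh, unpredictable key, so a real client would draw it from a strong random source for each frame.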

Right now we are seeing identical behavior on Linux and Windows, with similar performance profiles, and we are quite excited about this.

More posts in "The design of RavenDB 4.0" series:

- (26 May 2016) The client side

- (24 May 2016) Replication from server side

- (20 May 2016) Getting RavenDB running on Linux

- (18 May 2016) The cost of Load Document in indexing

- (16 May 2016) You can’t see the map/reduce from all the trees

- (12 May 2016) Separation of indexes and documents

- (10 May 2016) Voron has a one track mind

- (05 May 2016) Physically segregating collections

- (03 May 2016) Making Lucene reliable

- (28 Apr 2016) The implications of the blittable format

- (26 Apr 2016) Voron takes flight

- (22 Apr 2016) Over the wire protocol

- (20 Apr 2016) We already got stuff out there

- (18 Apr 2016) The general idea

Comments

So it should be possible to create a docker image for ravendb? That would be awesome!

Amazing news! Any benchmarks comparison?

Awesome!

Awesome! 'nuff said.

Uri, We are roughly the same speed on both. We saturate the network much sooner on Linux, because we still need to figure out the incantation to get the kernel to handle high-bandwidth connections. So right now we can handle more req/sec on Windows. Not a major concern, since that is just something that we can get to when we are done.

Man, the OOM killer is really bad. How can OS people be so misguided? Servers do not have expendable processes.

Tobi, As far as I can tell, the idea is that the admin is responsible for setting this up so it wouldn't happen. But I absolutely agree on the insanity of the issue.

As far as I understand it CoreCLR does not run under 32-bit Linux. See https://github.com/dotnet/coreclr/issues/60

Mike, There is active work on getting this to work on 32 bits. Arm32, for example, has fewer than 50 tests failing right now.

Wow!

That's great news. I assume all the tools that come with RavenDb also work - Smuggler and Backup?

Gleb, Yes, on release it will have all the features of the Windows version.

Switching to RC2 or waiting till RTM?

Ian, We are already running on RC2

Anyone got this branch working on Docker? Ran into a whole world of pain: build.sh is useless for Docker. I finally got it all building in a Docker image, and then I get "Object reference not set to an instance of an object" when I run it...

Patrick, We haven't tested this yet on Docker. There is still some time before it is ready for actual usage.

Oren, any update on whether v4.0 is dockerisable yet?

Dan, It will support it on release, yes

Running on CoreCLR would be great. I have no intention of running on Linux, but would love to run this on Windows Nano Server. That would allow us to create a Docker image with RavenDB from the Nano Server base image, with a much smaller footprint. Does this also include Rachis (and potentially a Docker swarm of a clustered RavenDB using Nano Servers)?

Johan, Yes, that would include cluster support on Docker.
