Several months ago we decided to ramp up the RavenDB on Linux migration effort, and hired a full time developer to do just that.

We started this with great hopes, mostly because we were able to get Voron to run on Linux in a reasonable amount of time. But RavenDB is several orders of magnitude bigger than Voron, and we ran into a lot more complexities along the way.

In particular, and I am not quite sure how to put it nicely, the entire environment is pretty unstable. Our target was Mono 4.3.0, MonoDevelop 5.10 and Ubuntu 14.04, and it takes really no effort at all to break pretty much everything there.

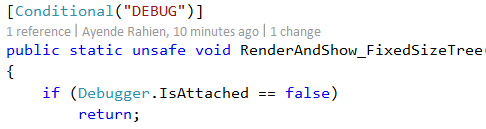

For example, because of SLEEP_DURATION_BEFORE_ABORT, the runtime will sometimes intentionally crash if certain operations take longer than 200ms, which can happen simply because you are running inside a debugger, or because a GC is running.

But in general, it feels like Mono just isn’t nearly stable enough for a production platform. Sometimes it would work fine; other times, you would get horrible crashes in the process that required us to debug the Mono runtime to figure out what was going on. Sometimes it was us doing stupid things, other times it was real bugs in the Mono runtime. Other issues included a missing WebSockets implementation (it appears to have been there and then removed, for some reason), missing chunked encoding support, etc.

As an aside, MonoDevelop in particular is… quite an uncomfortable IDE, and it doesn’t compare well, at pretty much any level, to the experience you get from other IDEs. That just exacerbates the problem, to be frank.

In other words, porting RavenDB to Mono is a lot of hard work. But that was pretty much expected. What I didn’t really expect was how much of the work would be not porting it, but actually filling in missing or incomplete parts of the framework itself.

Now, there are plenty of other problems in there even without Mono. These are the typical Windows to Linux porting issues: anything from file paths to case sensitivity to finding different ways to do various things (from finding out how busy the CPU is, to getting low memory notifications, to… well, you get the drift). Those are the kind of problems that we expected, and frankly, the kind that we wanted to be solving.

But the instability of the Mono runtime environment makes it really expensive to port any non-trivial software without spending most of the time debugging Mono. Even if the error is in our code, the only way to verify that is to actually debug Mono itself, and that puts a much higher cost on the actual porting effort. More to the point, it has frightening implications for supporting this in production. I don’t relish the thought of having to go to a customer and tell them that a particular issue is caused by a GC bug and that fixing it will require a new custom runtime. And that is leaving aside the actual cost of such a support call.

So we stopped, and looked at the CoreCLR. I have a much higher expectation of quality from Microsoft, given the track record of the .NET framework. The problem is that the CoreCLR, while it is supposed to have an RC out in November, is still quite problematic on Linux. For example, you can’t use Unix paths in Uris, which broke us pretty early in the process. That was annoying, but expected for the time being. This issue, and the other stuff we ran into (I’m only pointing this out as a simple example, nitpickers, don’t get hung up on it), are surmountable. The major issue is that moving to the CoreCLR requires a lot more than just a few #ifdefs; it requires quite a lot of work, probably including restructuring the project (some dependencies are now NuGet packages, there is a different structure for projects and a different build system, etc).

Therefore, what we intend to do now is wait a bit until the next CoreCLR release, probably in September, and then start seeing what it takes to run RavenDB (both server & client) under the CoreCLR on Windows. The idea is that we have much better tooling for working with .NET code on Windows, so hopefully we can do the bulk of the work of running on the CoreCLR there, and then deal only with what we need in order to run on Linux, without also having to worry about debugging the runtime.

Now, to deal with the nitpickers:

Mono is Open Source, you can fix any issues you find and submit pull requests.

That is correct, but let me talk about a few of the issues we have run into.

The class library is partial, and in places just bad. I think we would have done much better if we had been building on Mono from the get-go, because we would have known which pieces to avoid. But when a call to ZipFile.Open crashes with SIGSEGV and no indication of why it is happening (it ended up being a stack overflow in the Mono BCL implementation), the fix may be a single line, but finding the root cause took a long while.

In the runtime itself, a GC issue caused a segmentation fault that took about a month to figure out.

Now, we aren’t experts in Mono. So when we got in trouble we reached out to members of the Mono team, and were recommended a company that had the required expertise. I’m very happy with the service they provided, but the fact of the matter is, it took a month to narrow down a big problem to something that was fixed by a couple of lines of code. Not because they weren’t good, but because the problem was pretty tough to figure out.

So we contributed some stuff back, and we would be happy to continue doing so if the process wasn’t so hard (and quite expensive). Even relatively simple issues had a very high barrier.

The RavenDB codebase is close to a million lines of code. It is doing some pretty advanced stuff, and changing the foundation underneath it means that we invalidate a lot of silent assumptions. If the trivial stuff breaks so badly, I don’t want to think about the cost of the really complex stuff. The GC issue was bad enough.

If we were working from scratch, we would know what to avoid, but with such a big codebase, that isn’t feasible. And again, even if we did manage to get it working properly, we would still have the issue of how to support it. We provide production support to our customers, and the ability to accurately and quickly pinpoint problems and troubleshoot them is key. Taking on the support burden for Mono is very risky.

Note that the worry I have isn’t based on vague fears. The GC issue we had would cause random crashes, typically long after the relevant code had run, and only under very specific scenarios. In 99% of the cases, it would run just fine (well, not fine, but it wouldn’t be crashing). A production system with this type of behavior would be a nightmare. Even when escalating to the Mono core team, figuring out where and how this is happening is nasty, and it won’t happen within the time frames that we want to provide to customers who have production support contracts with us.

What about the CoreCLR? It is Open Source too, why not contribute to it?

The current plan, as I said, is to first see what it takes to move to the CoreCLR on Windows. That is a much smaller step, but it will let us get familiar both with the way the CoreCLR is structured and with the new dependencies. We are actively looking at the project, and to say that I’m excited is not nearly enough. This requires a lot of work on our side before we get to the point of actually figuring out whether there is stuff that needs fixing there too.

Once we have that, we are going to go back to Linux and get to working on running on a different OS, with all the implications of that. I’m actually looking forward to this. That is the kind of problem that I want to tackle.

If you want to look at the current state of our work (RavenDB on Linux using Mono), you can check it out here. We still have a full time developer on this; we are just going to divert him to other work until the next beta of the CoreCLR is out, and then we are going to restart the process as I described above.

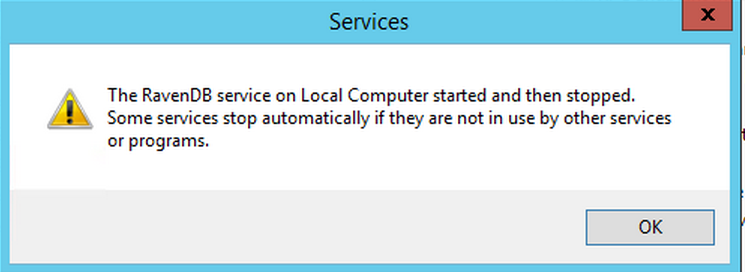

In this case, I was trying to use a service for its intended purpose, and I was bogged down with a lot of details that had to be completed before I could even test out the service.