The code for CommunityCourses can be found here: http://github.com/adam7/CommunityCourses

This is a RavenDB application whose existence I learned about roughly an hour ago. The following is a typical code review about the application, not limited to just RavenDB.

Tests

When I first opened the solution, I was very happy to see that there are tests for the application, but I was disappointed when I actually opened it.

The only tests that exists seems to be the default ones that comes with ASP.Net MVC.

I would rather have no test project than a test project like that.

Account Controller, yet again

Annoyed with the test project, I headed for my favorite target for frustration, the MVC AccountController and its horrid attempt at creating DI or abstractions.

Imagine my surprise when I found this lovely creature instead:

[HandleError]

public partial class AccountController : Controller

{

public virtual ActionResult LogOn()

{

return View();

}

[AcceptVerbs(HttpVerbs.Post)]

public virtual ActionResult LogOn(string userName, string password)

{

if (FormsAuthentication.Authenticate(userName, password))

{

FormsAuthentication.SetAuthCookie(userName, false);

return RedirectToAction("Index", "Home");

}

else

{

ModelState.AddModelError("logon", "Invalid username or password");

return View();

}

}

public virtual ActionResult LogOff()

{

FormsAuthentication.SignOut();

return RedirectToAction("Index", "Home");

}

}

Yes, simple, work, simple, uncomplicated! You don’t have to think when reading this code, I like it. That is how the Account Controller should have been, by default.

The model

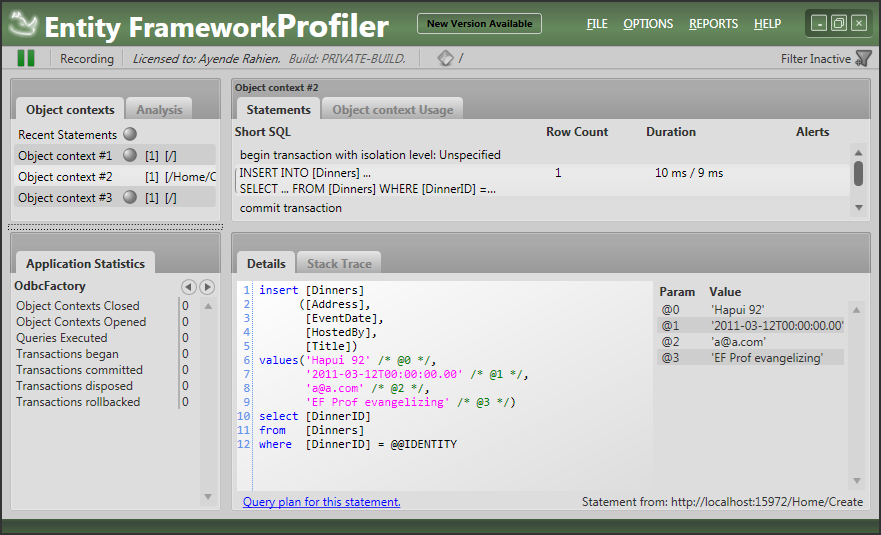

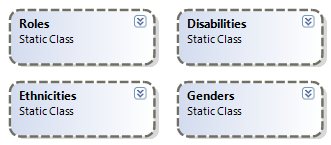

I then turned into the model. Community Courses is a RavenDB application, so I am very interested in seeing how this is handled. The first thing that I noticed was this:

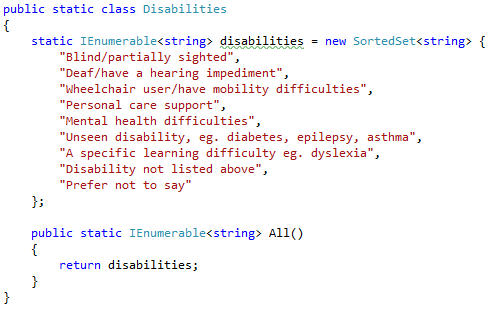

That was interesting, I am not used to seeing static classes in the model. But then I looked into those classes, and it all became clear:

This is essentially a lookup class.

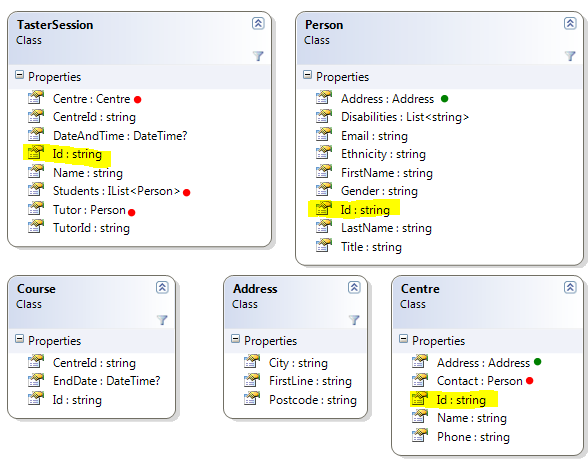

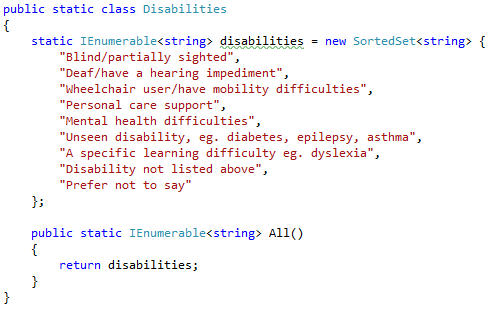

Then I switched to the rest of the model, the following image shows a partial view of the model, annotated a bit:

The classes with the Id property (highlighted) are presumed to be Root Entities (in other words, they would each reside in their own document). I am not absolutely sure that this is the case yet, but I am sure enough to point out a potential problem.

Did you notice that we have references to things like Address, Person and Centre in the model? They are marked with red and green points.

A green point is when we reference a class that doesn’t have an id, and is therefore considered to be a value type which is embedded in the parent document. A red point, however, indicate what I believe will be a common problem for people coming to RavenDB from an OR/M background.

RavenDB doesn’t support references (this is by design), and the result of referencing a Root Entity in another Root Entity is that the referenced entity is embedded inside the referencing document. This is precisely what you want for value types like Address, and precisely what you don’t want for references. You can see in TasterSession that there are actually two references to Tutor, one for the Tutor data and one for the TutorId. I think that this is an indication for hitting that problem.

For myself, I would prefer to not denormalize the entire referenced entity, but only key properties that are needed for processing the referencing entity. That make is easier to understand the distinction between the Person instance that is mapped to people/4955 and the Person instance that is help in Centre.Contact.

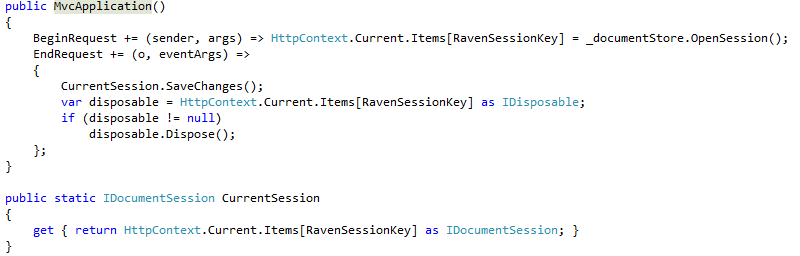

Session management

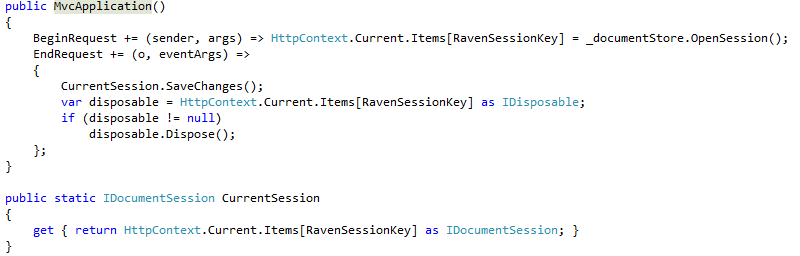

Next on the list, because it is so easy to get it wrong (I saw so many flawed NHibernate session management):

This is great, we have a session per request, which is what I would expect in a we application.

I might quibble with the call to SaveChanges, though. I like to make that one an explicit one, rather than implicit, but that is just that, a quibble.

Initializing RavenDB

This is worth speaking about (it happens in Application_Start):

RavenDB is pretty conventional, and Community Courses override one of those conventions to make it easier to work with MVC. By default, RavenDB will use ids like: “people/391”, “centres/912”, by changing the identity parts separator, it will ids like: “people-952”, “centres-1923”. The later are easier to work with in MVC because they don’t contain a routing character.

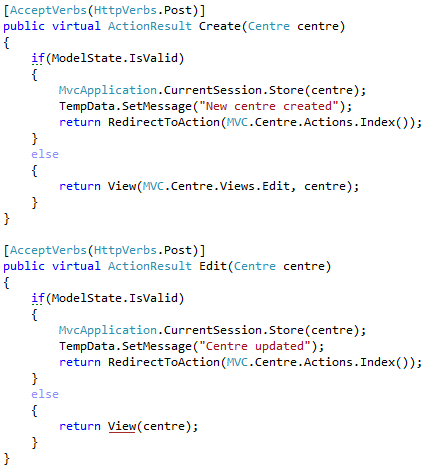

Centre Controller

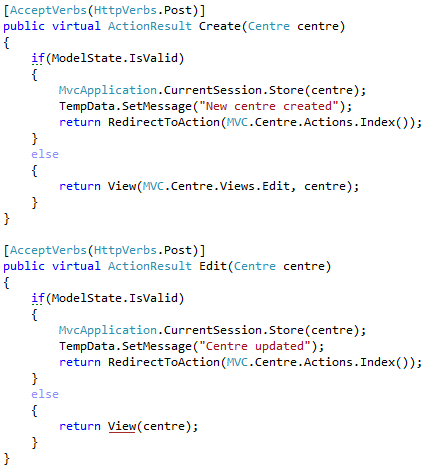

This is a simple CRUD controller, but it is worth examining nonetheless:

Those are all pretty simple. The only thing of real interest is the Index action, which uses a query on an index to get the results.

Currently the application doesn’t support paging, but it probably should, which wouldn’t complicate like all that much (adding Skip & Take, that is about it).

Next, the Create/Edit actions:

They are short & to the point, nothing much to do here. I like this style of coding very much. You could fall asleep while writing it. The only comment beyond that is that those methods are so similar that I would consider merging them into a single action.

Course Controller

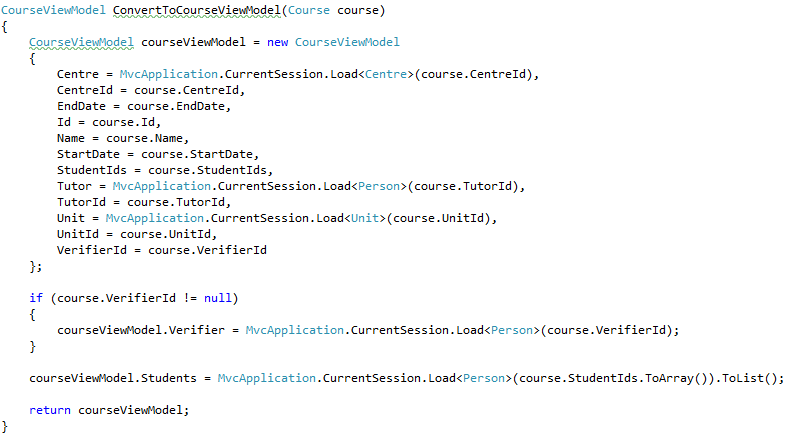

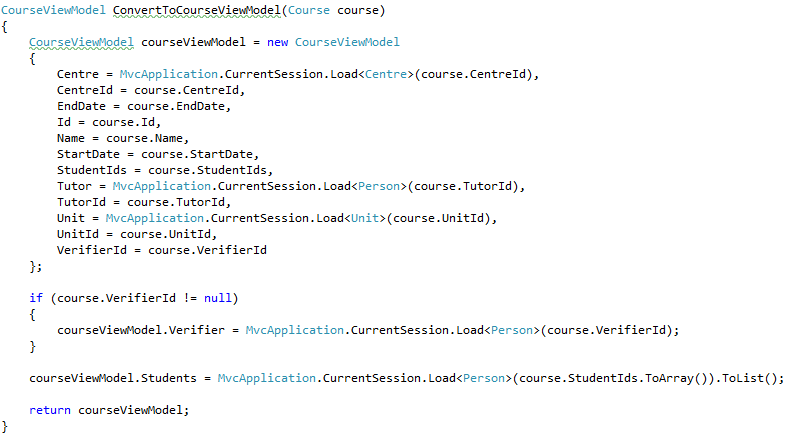

Things are starting to get much more interesting here, when we see this method:

Overall, it is pretty good, but am very sensitive to making remote calls, so I would change the code to make only a single remote call:

CourseViewModel ConvertToCourseViewModel(Course course)

{

var ids = new List<string> { course.CentreId, course.TutorId, course.UnitId };

if(course.VerifierId != null)

ids.Add(course.VerifierId);

ids.AddRange(course.StudentIds);

var results = MvcApplication.CurrentSession.Load<object>(ids.ToArray());

var courseViewModel = new CourseViewModel

{

Centre = (Centre)results[0],

CentreId = course.CentreId,

EndDate = course.EndDate,

Id = course.Id,

Name = course.Name,

StartDate = course.StartDate,

StudentIds = course.StudentIds,

Tutor = (Person)results[1],

TutorId = course.TutorId,

Unit = (Unit)results[2],

UnitId = course.UnitId,

VerifierId = course.VerifierId

};

int toSkip = 3;

if (course.VerifierId != null)

{

toSkip += 1;

courseViewModel.Verifier = (Person)results[3];

}

courseViewModel.Students = results.Skip(toSkip).Cast<Person>().ToList();

return courseViewModel;

}

This is slightly more complex, but I think that the benefits outweigh the additional complexity.

5N+1 requests ain’t healthy

The following code is going to cause a problem:

It is going to cause a problem because it makes a single remote call (the Query) and for every result from this query it is going to perform 5 remote calls inside ConvertToCourseViewModel.

In other words, if we have twenty courses to display, this code will execute a hundred remote calls. That is going to be a problem. Let us look at how the document courses-1 looks like:

{

"CentreId": "centres-1",

"Status": "Upcoming",

"Name": "NHibernate",

"StartDate": "/Date(1280361600000)/",

"EndDate": "/Date(1280534400000)/",

"UnitId": "units-1",

"TutorId": "people-1",

"VerifierId": null,

"StudentIds": [

"people-1"

]

}

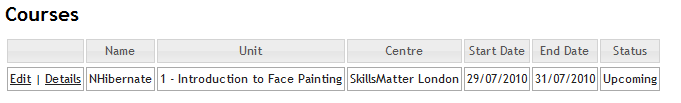

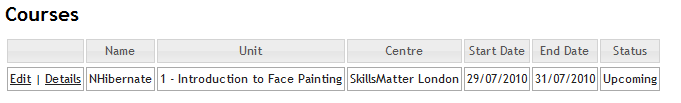

And here is how the UI looks like:

I think you can figure out what I am going to suggest, right? Instead of pulling all of this data at read time (very expensive), we are going to denormalize the data at write time, leading to a document that looks like this:

{

"CentreId": { "Id": "centres-1", "Name": "SkillsMatter London" },

"Status": "Upcoming",

"Name": "NHibernate",

"StartDate": "/Date(1280361600000)/",

"EndDate": "/Date(1280534400000)/",

"UnitId": { "Id": "units-1", "Name": "1 - Introduction to Face Painting"},

"TutorId": { "Id": "people-1", "Name": "Mr Oren Eini" },

"VerifierId": null,

"StudentIds": [

{ "Id": "people-1", "Name": "Ayende Rahien" }

]

}

Using this approach, we can handle the Index action shown above with a single remote call. And that is much better.

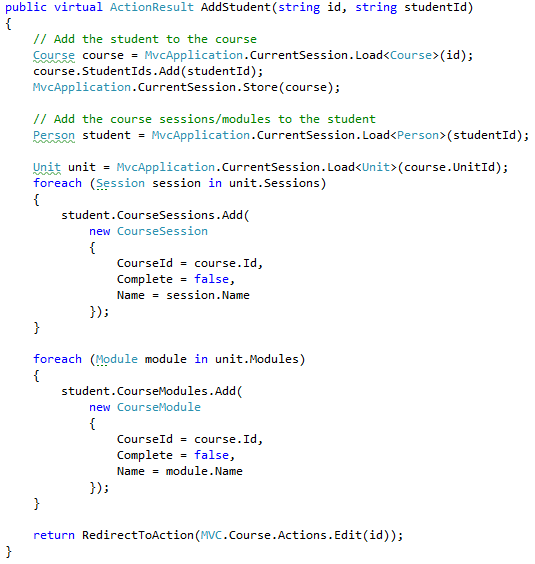

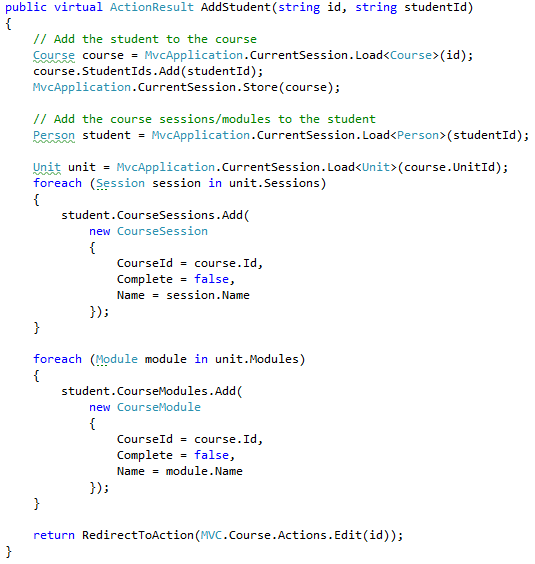

I am going to ignore actions whose structure we already covered (Edit, Details, etc), and focus on the interesting ones, the next of which is:

This is an excellent example of how things should be. (Well, almost, I would remove the unnecessary Store call and move the StudentIds.Add just before the foreach, so all the data access happens first, it makes it easier to scan). Using an OR/M, this code would generate 8 remote calls, but because Raven’s documents are loaded as a single unit, we have only 3 here (and if we really wanted, we can drop it to one).

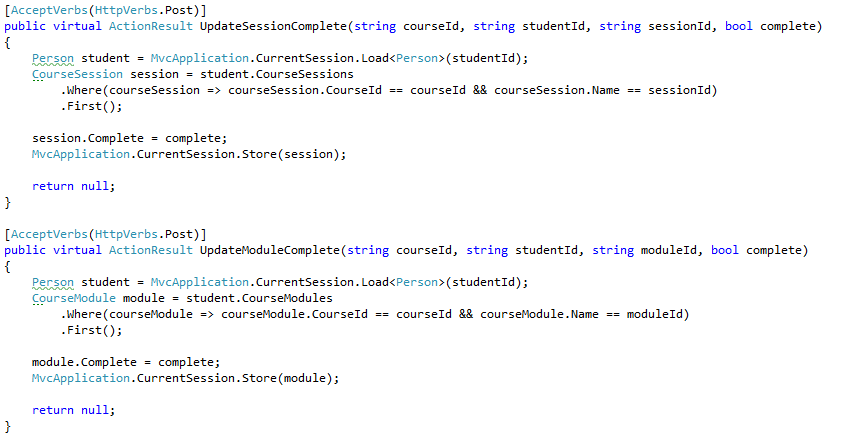

Next, we update a particular session / module in a student.

We can drop the unnecessary calls to Store, but beside that, it is pretty code. I don’t like that moduleId / sessionId are compared to the Name property, That seems confusing to me.

Charting with RavenDB

I am showing only the parts that are using RavenDB here:

There is one problem with this code, it doesn’t work. Well, to be bit more accurate, it doesn’t work if you have enough data. This code ignores what happen if you have enough people to start paging, and it does a lot of work all the time. It can be significantly improved by introducing a map/ reduce index to do all the hard work for us:

This will perform the calculation once, updating it whenever a document changes. It will also result in much less traffic going on the network, since the data that we will get back will look like this:

Person Controller doesn’t contain anything that we haven’t seen before. So we will skip that and move directly to…

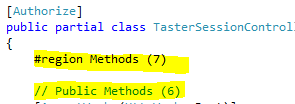

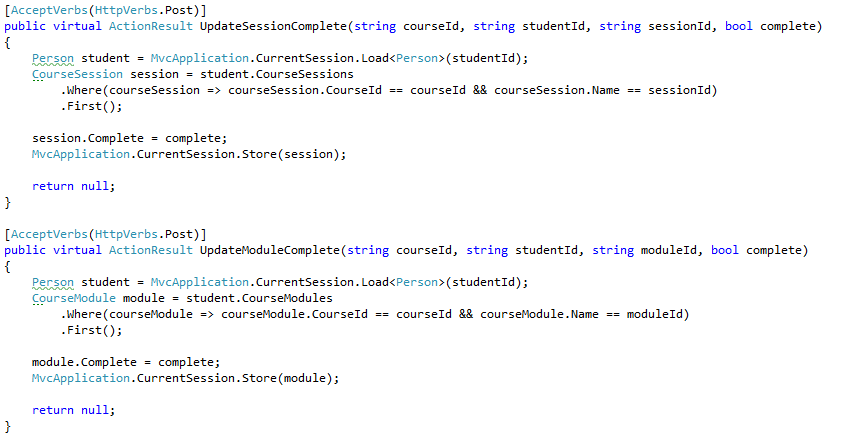

Taster Session Controller

I sincerely hope that those comments were generated by a tool.

The following three methods all shared the same problem:

They all copy Centre & Person to the TasterSession entity. The problem with that is that it generate the following JSON:

References in RavenDB are always embedded in the referencing entity. This should be a denomralized reference instead (just Id & Name, most probably).

Other aspects

I focused exclusively on the controller / Raven code, and paid absolutely no attention to any UI / JS / View code. I can tell you that the UI looks & behaves really nice, but that is about it.

Summary

All in all, this was a codebase that was a pleasure to read. There are some problems, but they are going to be easy to fix.