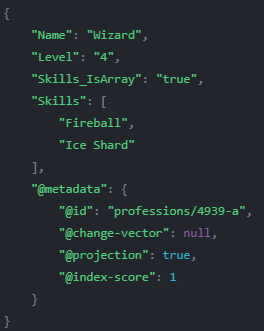

I posted about the @refresh feature in RavenDB, explaining why it is useful and how it can work. Now, I want to discuss a possible extension to this feature. It might be easier to show than to explain, so let’s take a look at the following document:

The idea is that in addition to the data inside the document, we also specify behaviors that will run at specified times. In this case, if the user is three days late in paying the rent, they’ll have a late fee tacked on. If enough time has passed, we’ll mark this payment as past due.

In other words, instead of having just a @refresh timer, you can also attach actions to it, and you may want to apply a set of actions at different times. I think that lease payment processing is a great example of the kind of use case we envision for this feature. Note that when a payment is made, the code will need to clear the @refresh array, so that the scripts don’t run on a completed payment.
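To make the shape of such a document concrete, here is a minimal sketch in Python. It is only an illustration: the post names the @refresh array and a Script property, while the `At` field, the `Amount`, `Status` and `PaidAt` fields, the script bodies and the `record_payment` helper are all assumptions made up for this example.

```python
from datetime import datetime, timezone

# Hypothetical lease payment document. Only "@refresh" and "Script" are named
# in the post; "At", "Amount", "Status" and the script bodies are assumptions.
payment = {
    "Amount": 1500,
    "Status": "Pending",
    "@metadata": {
        "@collection": "Payments",
        "@refresh": [
            # Three days after the due date: add a late fee.
            {"At": "2024-03-04T00:00:00Z", "Script": "this.LateFee = 50;"},
            # Much later: mark the payment as past due.
            {"At": "2024-04-01T00:00:00Z", "Script": "this.Status = 'PastDue';"},
        ],
    },
}


def record_payment(doc: dict, paid_at: datetime) -> dict:
    """Mark the payment as completed and drop any pending @refresh actions."""
    doc["Status"] = "Paid"
    doc["PaidAt"] = paid_at.isoformat()
    # Without this, the late fee script would still fire on a paid lease.
    doc["@metadata"].pop("@refresh", None)
    return doc


record_payment(payment, datetime(2024, 3, 2, tzinfo=timezone.utc))
```

The important line is the one that drops @refresh when the payment is recorded. Forgetting it would leave the late fee scheduled against a lease that has already been paid.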

You can apply operations to the documents at a future time, automatically. This is a way to enhance your documents with behaviors and policies with ease. You don’t need to set up your own code to execute this; you can simply let RavenDB handle it for you.

Some technical details:

- RavenDB will take the time from the first item in the @refresh array. At the specified time, it will execute the script, passing it the document to be modified. The @refresh item we are executing will be removed from the array, and if there are additional items, the next one will be scheduled for execution.

- RavenDB looks only at the first element in the @refresh array. So if the items aren’t sorted by date, the first one will be executed and the document persisted again. The next one (which was due earlier than the first one) is by then already ready for execution, so it will be run on the next tick.

- Once all the items in the @refresh array have been processed, RavenDB will remove the @refresh metadata property.

- Modifications to the document because of the execution of @refresh scripts are going to be handled as normal writes. It is just that they are executed by RavenDB directly. In other words, features such as optimistic concurrency, revisions and conflicts are all going to apply normally.

- If any of the scripts cause an error to be raised, the following will happen:

    - RavenDB will not process any future scripts for this document.

    - The full error information will be saved into the document with the @error property on the failing script.

    - An alert will be raised for the operations team to investigate.

- The scripts can do anything that a patch script can do. In other words, you can put(), load(), del() documents in here.

- We’ll also provide a debugger experience for this in the Studio, naturally.

- Amusingly enough, the script is able to modify the document, which obviously includes the @refresh metadata property. I’m sure you can imagine some interesting possibilities for this.
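The processing rules above can be sketched as a small simulation. This is not RavenDB code, just a Python model of the described behavior under a few assumptions: scripts are plain Python callables standing in for patch scripts, each item carries a hypothetical `At` timestamp, and `refresh_tick` and the `alerts` list are names invented for this example. What happens when a script rewrites @refresh itself is not specified in the post; the sketch simply removes the executed item only if it is still at the head of the array.

```python
from datetime import datetime, timezone


def refresh_tick(doc, now, alerts):
    """Process at most one @refresh item; return True if the document changed."""
    items = doc["@metadata"].get("@refresh")
    if not items:
        return False
    head = items[0]                  # only the first element is ever examined
    if "@error" in head:
        return False                 # a failed script blocks all later ones
    if head["At"] > now:
        return False                 # not due yet, even if a later item is
    try:
        head["Script"](doc)          # stand-in for running the patch script
    except Exception as exc:
        head["@error"] = repr(exc)   # keep the error on the failing item
        alerts.append(f"@refresh script failed: {exc!r}")
        return True
    # The script may have rewritten @refresh itself, so re-read it.
    meta = doc["@metadata"]
    items = meta.get("@refresh") or []
    if items and items[0] is head:
        items.pop(0)                 # drop the item we just executed
    if not items:
        meta.pop("@refresh", None)   # nothing left to schedule
    return True


def add_late_fee(doc):
    doc["LateFee"] = 50


def mark_past_due(doc):
    doc["Status"] = "PastDue"


def utc(day):
    return datetime(2024, 3, day, tzinfo=timezone.utc)


doc = {
    "Status": "Pending",
    "@metadata": {"@refresh": [
        {"At": utc(20), "Script": mark_past_due},  # deliberately out of order
        {"At": utc(4), "Script": add_late_fee},
    ]},
}
alerts = []
early = refresh_tick(doc, utc(5), alerts)    # head is not due, so nothing runs
first = refresh_tick(doc, utc(21), alerts)   # runs mark_past_due
second = refresh_tick(doc, utc(21), alerts)  # next tick runs add_late_fee
```

Note how the unsorted array behaves: on the 5th the late fee is already due, but it sits behind an item that is not, so nothing runs until the head item's time arrives. After that, the overdue item is picked up on the very next tick.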

We also considered another option (look at the Script property):

The idea is that instead of specifying the script to run inline, we can reference a property on a document. The advantage is that we can apply changes globally much more easily. We can fix a bug in the script once. The disadvantage here is that you may be modifying a script for new values, but not accounting for the old documents that may be referencing it. I’m still in two minds about whether we should allow a script reference like this.
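A rough sketch of how such a reference might be resolved, again in Python. The reference format is purely hypothetical: the post only says that the Script property would point at a property on another document, so the `Document` and `Property` keys, the `config/lease-scripts` id, the `resolve_script` helper and the in-memory `store` are all assumptions for illustration.

```python
def resolve_script(item, load):
    """Return the script text for a @refresh item, following a reference if needed."""
    script = item["Script"]
    if isinstance(script, str):
        return script                      # inline script, used as-is
    shared = load(script["Document"])      # hypothetical reference format
    if shared is None:
        raise LookupError(f"script document {script['Document']!r} not found")
    return shared[script["Property"]]


# In-memory stand-in for the database, keyed by document id.
store = {
    "config/lease-scripts": {"LateFee": "this.LateFee = 50;"},
}
item = {
    "At": "2024-03-04T00:00:00Z",
    "Script": {"Document": "config/lease-scripts", "Property": "LateFee"},
}

before = resolve_script(item, store.get)
# Editing the shared script once changes it for every document that
# references it, including old documents written with the previous behavior in mind.
store["config/lease-scripts"]["LateFee"] = "this.LateFee = 75;"
after = resolve_script(item, store.get)
```

Both sides of the trade-off show up here. The fix is applied in one place, but the existing item silently resolves to the new script without anyone having touched the document that references it.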

This is still an idea, but I would like to solicit your feedback on it, because I think that this can add quite a bit of power to RavenDB.