re: Writing a very fast cache service with millions of entries

I ran into this article that talks about building a cache service in Go to handle millions of entries. Go ahead and read the article; there is also an associated project on GitHub.

I don’t get it. Rather, I don’t get the need here.

The authors seem to want a way to store a lot of data (for a given value of lots) that is accessible over REST. They need to be able to serve 5,000 – 10,000 requests per second against it, and also be able to expire things.

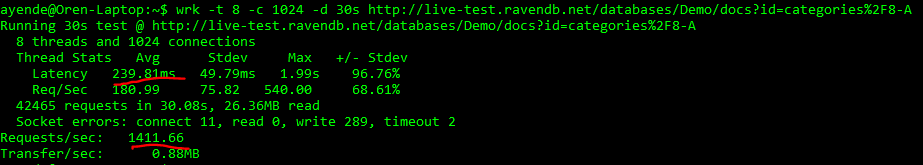
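To pin down what "store a lot of data and be able to expire things" means, here is a minimal sketch of the semantics being asked for: a key/value map where every entry carries its own deadline. This is only an illustration of the requirement, not the original project's design and not how RavenDB does it; the class name `TTLCache`, the injectable `clock`, and the sample key are all hypothetical.

```python
import time


class TTLCache:
    """Key/value store where every entry carries its own expiry deadline."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock      # injectable so expiry can be tested without sleeping
        self._entries = {}       # key -> (value, expires_at)

    def set(self, key, value, ttl_seconds):
        self._entries[key] = (value, self._clock() + ttl_seconds)

    def get(self, key, default=None):
        entry = self._entries.get(key)
        if entry is None:
            return default
        value, expires_at = entry
        if self._clock() >= expires_at:
            # Lazy expiry: the entry is dropped the first time it is read past its deadline.
            del self._entries[key]
            return default
        return value


# Usage with a fake clock (hypothetical key and value).
now = [0.0]
cache = TTLCache(clock=lambda: now[0])
cache.set("user:42", "cached profile", ttl_seconds=10)
print(cache.get("user:42"))  # cached profile
now[0] = 11.0
print(cache.get("user:42"))  # None
```

Note how much this leaves out: it is not thread-safe, has no REST layer, and never reclaims entries that are not read again, so a real service would also need locking and a background sweep.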

I decided to take a look at what it would take to run this in RavenDB. It is pretty late here, so I was lazy. I ran the following command against our live-test instance:

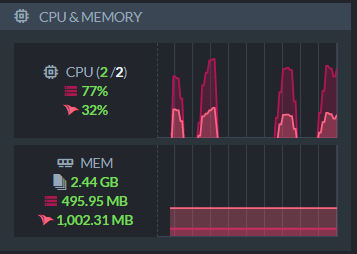

This says to create 1,024 connections and request the same document over and over. On the right you can see the live-test machine stats while this was running; it peaked at about 80% CPU. I should note that the live-test instance is pretty much the cheapest one that we could get away with, and it is physically far from me.
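The kind of load wrk generates can be sketched as a closed loop: each connection keeps exactly one request in flight and sends the next one only after the previous response arrives. This is a toy model, not wrk's implementation (wrk is far more efficient); `closed_loop_load` and `fake_fetch` are hypothetical names, and `fake_fetch` just sleeps where a real run would issue an HTTP GET for the document.

```python
import asyncio
import time


async def closed_loop_load(fetch, connections, duration_s):
    """Keep `connections` workers busy; each issues its next request only after
    the previous one completes. Returns (requests_per_second, max_latency_s)."""
    latencies = []
    start = time.monotonic()
    deadline = start + duration_s

    async def worker():
        while time.monotonic() < deadline:
            t0 = time.monotonic()
            await fetch()                      # one request in flight per worker
            latencies.append(time.monotonic() - t0)

    await asyncio.gather(*(worker() for _ in range(connections)))
    elapsed = time.monotonic() - start
    return len(latencies) / elapsed, max(latencies, default=0.0)


async def fake_fetch():
    # Stand-in for "GET the same document"; a real run would issue an HTTP request.
    await asyncio.sleep(0.01)


rps, worst = asyncio.run(closed_loop_load(fake_fetch, connections=10, duration_s=0.2))
print(f"{rps:.0f} req/s, max latency {worst * 1000:.1f} ms")
```

Because each worker waits for its response, the request rate is bounded by how many workers there are and how long each round trip takes, which is why the connection count matters so much in the remote run.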

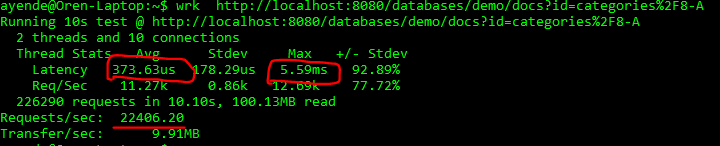

Ping time from my laptop to the live-test instance is around 230 – 250 ms, which is right around the latency numbers that wrk is reporting. I’m using 1,024 connections here to compensate for the distance. What happens when I run this locally, without the huge distance?
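The reason more connections compensate for distance is Little's law: in a closed loop with one outstanding request per connection (which, as I understand it, is how wrk behaves by default), throughput is at most connections divided by round-trip time. The sketch below plugs in the figures from the text and ignores server processing time, an assumption that makes these numbers ceilings rather than predictions; the function names are hypothetical.

```python
def max_throughput(connections, round_trip_s):
    """Little's law ceiling for a closed loop: each connection completes at most
    one request per round trip, so throughput <= connections / round_trip."""
    return connections / round_trip_s


def connections_needed(target_rps, round_trip_s):
    """Concurrency required to sustain target_rps when each request takes round_trip_s."""
    return target_rps * round_trip_s


print(round(max_throughput(1024, 0.25)))   # 4096
print(round(max_throughput(1024, 0.23)))   # 4452
print(connections_needed(10_000, 0.25))    # 2500.0
```

With 1,024 connections and a 230 – 250 ms round trip, the ceiling is roughly 4,100 – 4,450 requests per second no matter how fast the server is, so a remote run mostly measures the link. Sustaining 10,000 requests per second over a 250 ms link would take about 2,500 concurrent connections.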

So I can do more than 22,000 requests per second (on a 2016-era laptop, mind you) with a max latency of 5.5 ms, which is what the original article called for as the average time. Granted, I’m simplifying things here, because I’m reading a single document and not including any writes. But 5,000 – 10,000 requests per second are small numbers for RavenDB, and very easily achievable.

RavenDB even has the @expires feature, which allows you to specify a time at which a document will be automatically removed.
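For illustration, here is roughly what a cache entry stored as a RavenDB document could look like: the expiry lives in the document's `@metadata` under `@expires` as a UTC ISO 8601 timestamp. The helper name `cache_document` and the `Value` field are hypothetical, the seven-digit fraction mirrors the format RavenDB typically displays, and, as far as I know, the expiration feature also has to be enabled on the database before expired documents are actually deleted. Treat the exact format as an assumption to check against the RavenDB documentation.

```python
import json
from datetime import datetime, timedelta, timezone


def cache_document(value, ttl, now=None):
    """Build a document body whose metadata asks RavenDB to delete it after `ttl`."""
    if now is None:
        now = datetime.now(timezone.utc)
    expires_at = (now + ttl).astimezone(timezone.utc)
    # UTC ISO 8601 with a seven-digit fraction, e.g. 2016-03-16T12:05:00.0000000Z
    stamp = expires_at.strftime("%Y-%m-%dT%H:%M:%S.%f") + "0Z"
    return {"Value": value, "@metadata": {"@expires": stamp}}


doc = cache_document("cached profile", timedelta(minutes=5),
                     now=datetime(2016, 3, 16, 12, 0, tzinfo=timezone.utc))
print(json.dumps(doc))
# {"Value": "cached profile", "@metadata": {"@expires": "2016-03-16T12:05:00.0000000Z"}}
```

The point is that expiry becomes a property of the stored data rather than code in a custom service: once the document is saved, cleaning it up is the database's job.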

The nice thing about using RavenDB for this sort of feature is that millions of objects and gigabytes of data are not of particular concern for us. Raise that by an order of magnitude, and that is our standard benchmark. You’ll need to raise it by a few more orders of magnitude before we start taking things seriously.

Comments

Not to be nitpicking...

Although the general message of YAGNI and don't reinvent the wheel still holds, the original post is dated March 2016... I just hope they have learned something since.

And to be fair, you should be comparing with whatever version RavenDB was on at the time (3.0/3.5 I guess).

The hardware part is already fair, I guess. 😜

Kurbein,

You are correct, I didn't notice the date. But the technical / performance landscape didn't change very much in that time frame.

I still think that their load is pretty minimal.
