When you want to store, index and search MBs of text inside of RavenDB

A user brought up a scenario that was quite interesting to explore.

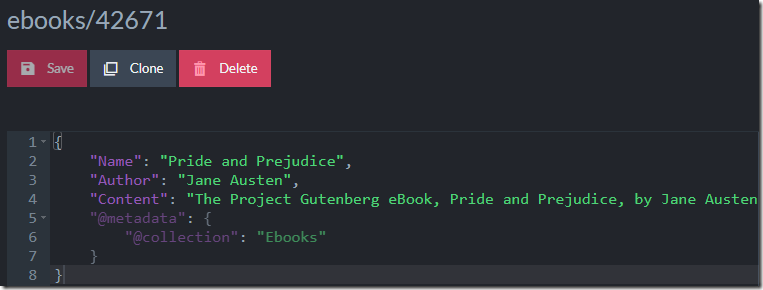

Let’s assume that we want to put Project Gutenberg inside RavenDB. An initial attempt at doing that would look like this:

I’m skipping a lot of the details, but the most important field here is the Content field, which contains the actual text of the book.

At last count, the book was around 708 KB in size. When we store that as a single field, RavenDB notices that it is a long field and compresses it. Here is what this looks like:

The 738.55 KB is the size of the JSON itself, the 674.11 KB is the size after a quick compression pass, and the 312 KB is the actual size on disk. RavenDB is actively trying to help us here.
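As a rough illustration of why a long text field compresses so well, here is a minimal Python sketch. It is not RavenDB code and does not use RavenDB's actual compression scheme; it assumes a hypothetical `Book` document shape (`Title`, `Author`, `Content`) and uses `zlib` only as a stand-in compressor.

```python
import json
import zlib

def make_book(content: str) -> dict:
    # Hypothetical document shape: a few small fields plus one very large text field.
    return {"Title": "Pride and Prejudice", "Author": "Jane Austen", "Content": content}

def sizes(doc: dict) -> tuple[int, int]:
    """Return (raw JSON size, zlib-compressed size) in bytes."""
    raw = json.dumps(doc).encode("utf-8")
    return len(raw), len(zlib.compress(raw, 6))

if __name__ == "__main__":
    # Stand-in for the book's text (zlib is only a stand-in for RavenDB's own compression).
    text = ("It is a truth universally acknowledged, that a single man in "
            "possession of a good fortune, must be in want of a wife. ") * 5000
    raw, packed = sizes(make_book(text))
    print(f"JSON: {raw / 1024:.2f} KB, compressed: {packed / 1024:.2f} KB ({packed / raw:.1%})")
```

Because the stand-in text is one sentence repeated thousands of times, it compresses far better than a real book would; the on-disk numbers above are the realistic ones.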

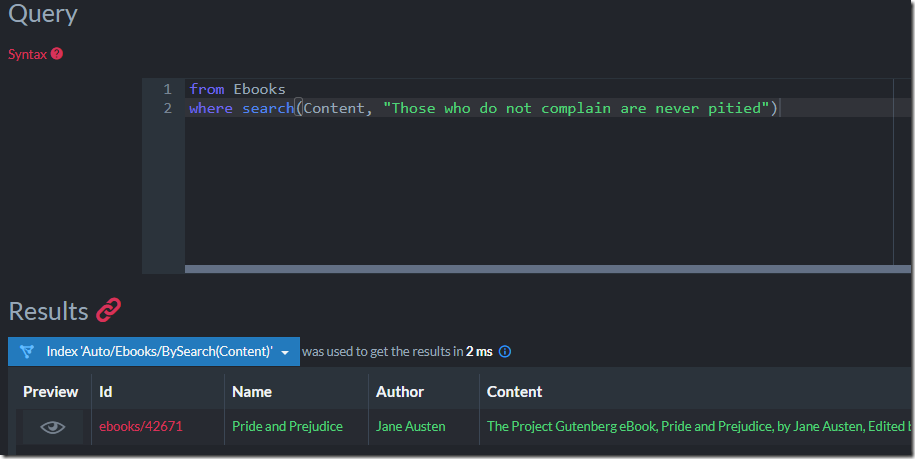

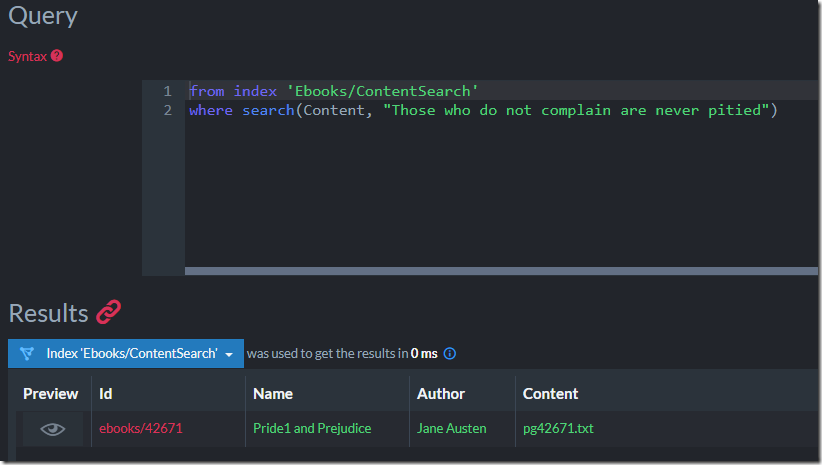

But let’s take the next step: we want to query the content of the book using full-text search. Here is what this will look like:

Everything works, which is great. But what is going on behind the scenes?

Even a single large text field (hundreds of KB or many MB) puts a unique strain on RavenDB. We need to manage it as a single unit, and it significantly bloats the parent document, making it more expensive to work with.

This is interesting because we usually don’t work all that much with the field in question. In the case of Pride and Prejudice, the content is immutable and not really relevant to the day-to-day work with the document. We are better off moving it elsewhere.

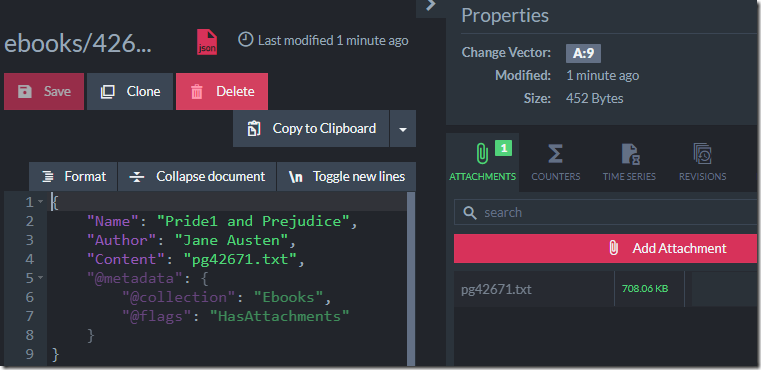

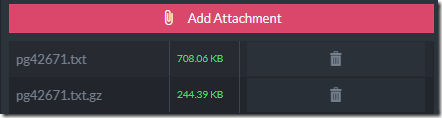

An attachment is a natural way to handle this: we can move the content of the book to an attachment. That way, the text is retained and we can still work with it and process it, but it sits on the side instead of making our life harder on every interaction with the document.

Here is what this looks like. Note that the document itself is now tiny, and operations on it will be much faster than on a multi-MB document:

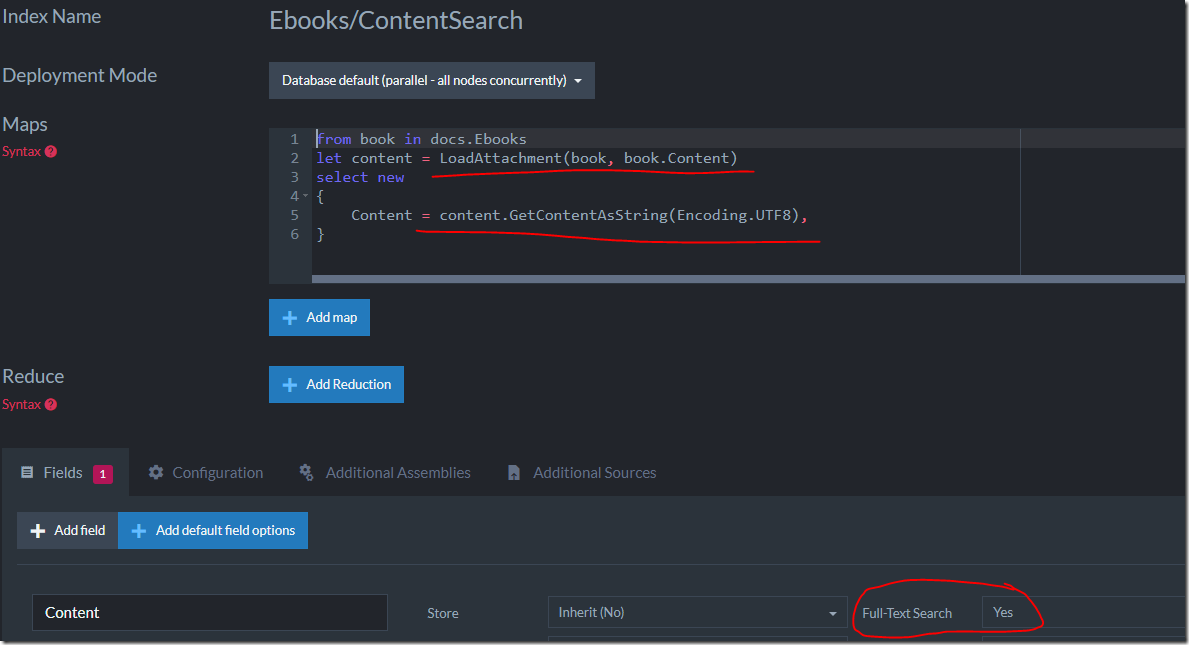

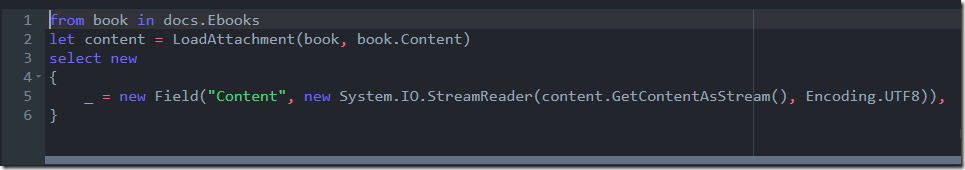
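The client code for this step isn't shown here, so the following is a model of the idea in Python rather than the RavenDB client API. `InMemoryStore` and `store_with_attachment` are hypothetical names: the large field is popped out of the document and kept on the side as raw UTF-8 bytes, leaving a small document behind.

```python
import json
from dataclasses import dataclass, field

@dataclass
class InMemoryStore:
    """Hypothetical toy store: JSON documents plus named binary attachments (not RavenDB)."""
    documents: dict[str, dict] = field(default_factory=dict)
    attachments: dict[tuple[str, str], bytes] = field(default_factory=dict)

    def store_with_attachment(self, doc_id: str, doc: dict, attachment_field: str) -> None:
        # Work on a copy so the caller's dict is left untouched.
        doc = dict(doc)
        # Pull the large field out of the document...
        content = doc.pop(attachment_field)
        # ...keep the small remainder as the document itself...
        self.documents[doc_id] = doc
        # ...and store the text on the side as raw UTF-8 bytes, keyed by (id, name).
        self.attachments[(doc_id, attachment_field)] = content.encode("utf-8")

    def document_size(self, doc_id: str) -> int:
        """Size in bytes of the document's JSON, excluding its attachments."""
        return len(json.dumps(self.documents[doc_id]).encode("utf-8"))

if __name__ == "__main__":
    store = InMemoryStore()
    book = {"Title": "Pride and Prejudice",
            "Content": "It is a truth universally acknowledged. " * 20000}
    store.store_with_attachment("books/1342", book, "Content")  # hypothetical document id
    print(store.document_size("books/1342"), len(store.attachments[("books/1342", "Content")]))
```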

Of course, there is a disadvantage here: how can we index the book’s contents now? We still want that. RavenDB supports this scenario explicitly, so let’s define an index to do just that:

You can see that I’m loading the content attachment and then accessing its content as a string, using UTF-8 as the encoding. I tell RavenDB to use full-text search on this field, and I’m off to the races.
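To make the "read the attachment as one UTF-8 string" step concrete, here is a toy Python model of such an index. It is not how RavenDB indexes work internally, and RavenDB's real analyzers do much more than this; it assumes attachments are held as UTF-8 bytes keyed by document id, decodes each one into a single string, and builds a small inverted index with a regex tokenizer standing in for the full-text analyzer.

```python
import re
from collections import defaultdict

WORD = re.compile(r"\w+")

def index_materialized(attachments: dict[str, bytes]) -> dict[str, set[str]]:
    """Build a toy inverted index (term -> document ids) from attachment bytes."""
    index: dict[str, set[str]] = defaultdict(set)
    for doc_id, blob in attachments.items():
        # The whole attachment is decoded into ONE string, so memory use
        # grows with the size of the text.
        text = blob.decode("utf-8")
        for term in WORD.findall(text):
            index[term.lower()].add(doc_id)
    return dict(index)

def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the ids of documents that contain every term in the query."""
    terms = [t.lower() for t in WORD.findall(query)]
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result

if __name__ == "__main__":
    # Hypothetical document ids and (truncated) attachment contents.
    attachments = {
        "books/1342": "It is a truth universally acknowledged".encode("utf-8"),
        "books/84": "You will rejoice to hear that no disaster".encode("utf-8"),
    }
    print(search(index_materialized(attachments), "truth universally"))
```

The query side never changes: it only sees terms, regardless of where the text was stored.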

Of course, we could stop here, but why? We can do even better. When working with large text fields, an index such as the one above forces us to materialize the entire field as a single value. For very large values, that can put a lot of memory pressure on the system.

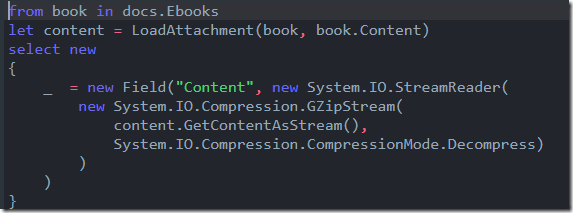

But RavenDB supports more than that. Instead of processing a very large string in one shot, we can process it incrementally, avoiding materializing the large value and the memory pressure that comes with it. Here is what you can write:

That tells RavenDB that we should process the field in a streaming fashion.
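The streaming idea itself can be sketched in Python. This illustrates incremental processing in general, not RavenDB's implementation: the hypothetical `stream_terms` helper decodes the UTF-8 attachment a chunk at a time and yields terms as it goes, holding back a partial word at each chunk boundary so that no term is split. Memory use is bounded by the chunk size (plus the longest word) rather than by the size of the text.

```python
import io
import re
from typing import BinaryIO, Iterator

WORD = re.compile(r"\w+")
# A run of word characters that ends exactly at the end of the buffer.
TRAILING_WORD = re.compile(r"\w+\Z")

def stream_terms(blob: BinaryIO, chunk_chars: int = 4096) -> Iterator[str]:
    """Yield lower-cased terms from UTF-8 bytes, decoding at most chunk_chars characters at a time."""
    # TextIOWrapper decodes incrementally, so a multi-byte UTF-8 sequence that
    # straddles two reads is reassembled correctly.
    reader = io.TextIOWrapper(blob, encoding="utf-8")
    carry = ""
    while chunk := reader.read(chunk_chars):
        text = carry + chunk
        # The last word in this chunk may continue in the next one, so hold it back.
        m = TRAILING_WORD.search(text)
        if m:
            text, carry = text[: m.start()], text[m.start():]
        else:
            carry = ""
        for term in WORD.findall(text):
            yield term.lower()
    # Flush the word held back from the final chunk.
    for term in WORD.findall(carry):
        yield term.lower()

if __name__ == "__main__":
    data = "It is a truth universally acknowledged, that a single man".encode("utf-8")
    print(list(stream_terms(io.BytesIO(data), chunk_chars=8)))
```

Passing a very small `chunk_chars` (even 1) produces exactly the same terms as tokenizing the whole string at once, which is a handy way to check the boundary handling.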

Here is why it matters:

(Figure: memory usage with Value Materialized vs. Streaming Value)

For a document with an attachment under 1 MB, it probably doesn’t matter all that much (although using 25% of the memory is nice), but it matters a lot more when you are working with larger texts.

We can take this one step further still! Instead of storing the attachment text as is, we can compress it, like so:
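The compression format isn't specified here; purely as an assumption, this Python sketch uses gzip. The hypothetical `compress_text` helper encodes the book as UTF-8 and compresses it, and the resulting bytes are what you would store as the attachment instead of the plain text.

```python
import gzip

def compress_text(text: str, level: int = 9) -> bytes:
    """Encode text as UTF-8 and gzip it; the result is what gets stored as the attachment."""
    return gzip.compress(text.encode("utf-8"), compresslevel=level)

if __name__ == "__main__":
    # Stand-in text; a real book compresses less dramatically than a repeated sentence.
    book = ("It is a truth universally acknowledged, that a single man in possession "
            "of a good fortune, must be in want of a wife.\n") * 2000
    packed = compress_text(book)
    print(f"{len(book.encode('utf-8'))} bytes -> {len(packed)} bytes")
```

Since the book's content is immutable and written once, spending extra CPU on a high compression level at write time seems a reasonable trade.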

And then in the index, we’ll decompress on the fly:
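Continuing the gzip assumption, here is a Python sketch of decompressing on the fly while indexing. `stream_terms_from_gzip` is a hypothetical helper, not a RavenDB API: it layers a lazy UTF-8 decoder over a lazy gzip reader, so neither the decompressed bytes nor the full string ever exist in memory at once. For brevity it reads line by line, which assumes the text has reasonably short lines; a chunked reader would be needed for text without line breaks.

```python
import gzip
import io
import re
from typing import BinaryIO, Iterator

WORD = re.compile(r"\w+")

def stream_terms_from_gzip(source: BinaryIO) -> Iterator[str]:
    """Yield lower-cased terms from a gzip-compressed UTF-8 stream, one line at a time."""
    # GzipFile decompresses lazily as it is read and TextIOWrapper decodes lazily,
    # so only internal buffers plus the current line are held in memory.
    with io.TextIOWrapper(gzip.GzipFile(fileobj=source, mode="rb"), encoding="utf-8") as reader:
        for line in reader:
            for term in WORD.findall(line):
                yield term.lower()

if __name__ == "__main__":
    compressed = gzip.compress("It is a truth universally acknowledged,\nthat a single man\n".encode("utf-8"))
    # BytesIO stands in for the attachment's content stream here.
    print(list(stream_terms_from_gzip(io.BytesIO(compressed))))
```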

Note that throughout all of this, the queries you send stay exactly the same; we are just using 20% of the disk space and 25% of the memory that we used to.

Comments

What's the difference between using `{Content=...}` vs `{_=new Field("Content", ...)}`? Are these basically the same?

David,

Basically, they are the same. We typically use `Field` in the context of dynamic fields, where you have to specify the name, and I didn't want to have it there twice.

I think it would be great to see something like:

`select new { _ = new StreamedField("Content") }`

instead of writing it all.

This is great. Perhaps in the future, attachments could have built-in compression as well?

Btw, I wonder whether it would be worth it (or even possible, given BlittableJson) to send documents without decompressing them, using zstd HTTP content encoding between the Raven client and server? (Assuming https://datatracker.ietf.org/doc/html/rfc8878#section-7.2 is supported by Kestrel and HttpClient, of course.)

How about a scenario where we can flag a field to be handled as an attachment, automagically, as you described it 🙂 Then all of this would happen behind the scenes. When issuing a query, one would explicitly flag this field to be returned; by default, RavenDB would not return it.

// Ryan

Ryan,

That is something that I would really rather not have. This is fairly easy to do client side, FWIW (you can do that by hooking into `BeforeStore`, for example). However, that leads to "magic" and makes things a lot harder to figure out.

Bruno,

That is a fairly rare scenario, when you have to index that much text all at once. It usually isn't worth adding a dedicated API for that.

Milosz,

As for automatic compression, that is not something we want. See details here: https://ayende.com/blog/195073-C/negative-feature-response-automatic-attachment-compression-in-ravendb?key=041af353afed487a8cb48ed49be2f6e8

As for whether it would be worth integrating that into the pipeline directly: no.

Most of the time, we aren't sending the raw document bytes from the store to the client; we need to add things to them: other documents, included values, etc. As such, it is cheaper to handle HTTP compression as a single stream rather than send separately compressed values, which is what we would need to do in this case.
