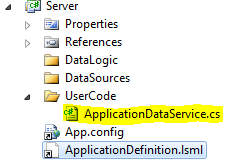

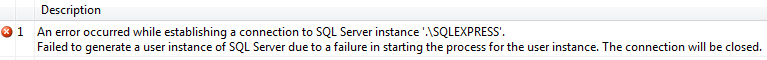

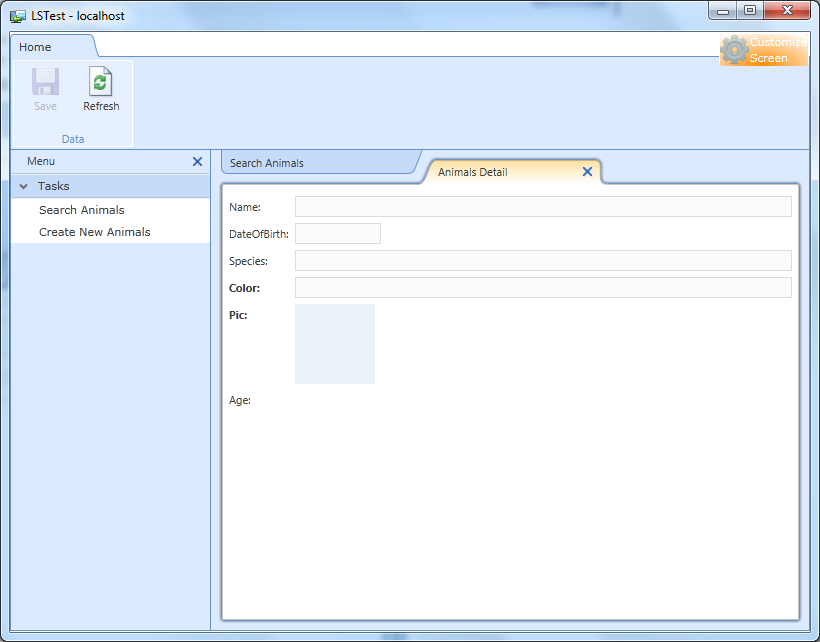

I thought it would be a good idea to see what sort of data access behavior LightSwitch applications have, so I hooked one up to the EntityFramework Profiler and took it for a spin.

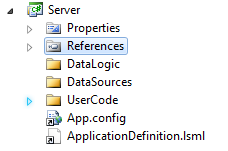

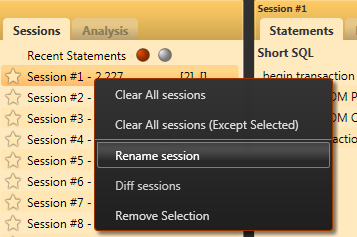

Interestingly, it seems that every operation runs in the context of a distributed transaction:

There is a time and place for DTC (the Distributed Transaction Coordinator), but in general you should avoid distributed transactions until you really need them. I assume that this is triggered by WCF behavior rather than being intentional.

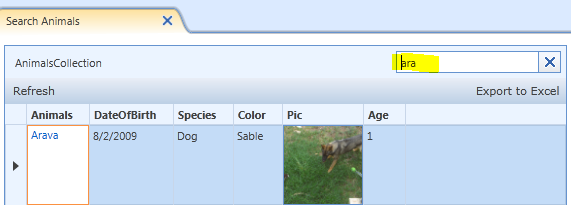

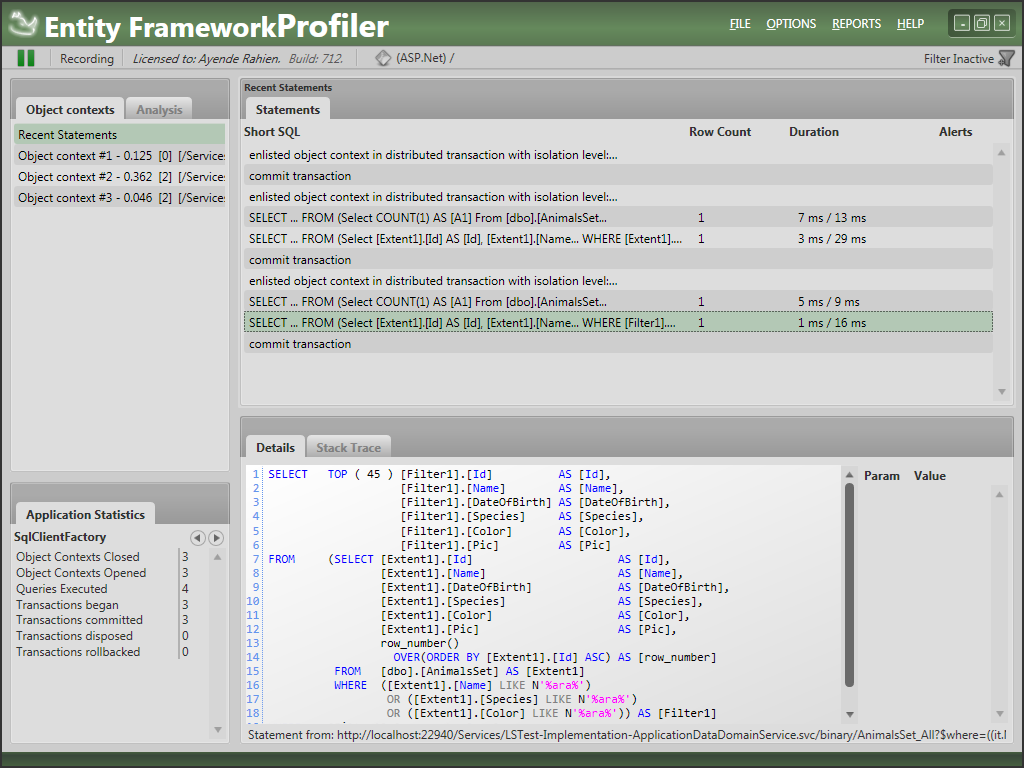

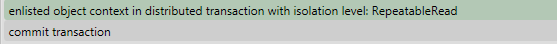

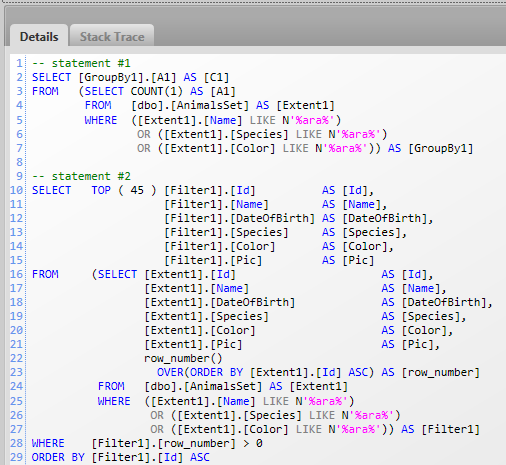

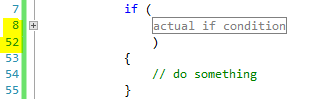

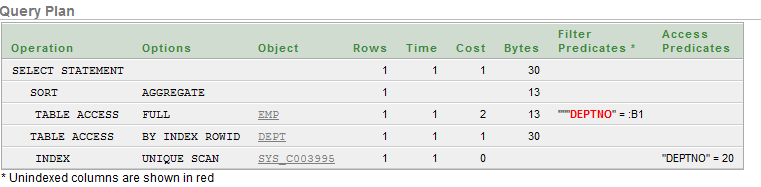

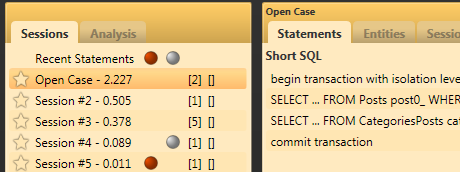

Now, let us look at what a simple search looks like:

This search results in:

That sound? Yes, the one you just heard. That is the sound of a DBA somewhere expiring. The LightSwitch presentation touted how you can search every field, and you certainly can. You can also swim across the English Channel, but I find that taking the train is an easier way to get across.

This sort of search:

- Is very expensive once you have any reasonable amount of data.

- Prevents the use of indexes to optimize performance.

In other words, this is an extremely brute-force approach, and it is going to perform badly.
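To make the index point concrete, here is a minimal sketch using Python's built-in `sqlite3` module rather than SQL Server. It assumes the search is a substring match (`LIKE '%term%'`) OR-ed across every column; that is an assumption, since the generated query is only visible in the screenshot. The `Animals` table and the `ix_animals_name` index are hypothetical. The sketch asks SQLite's `EXPLAIN QUERY PLAN` how each query would run.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table loosely modeled on AnimalsSet, with one ordinary B-tree index.
conn.executescript("""
    CREATE TABLE Animals (
        Id      INTEGER PRIMARY KEY,
        Name    TEXT,
        Species TEXT,
        Color   TEXT
    );
    CREATE INDEX ix_animals_name ON Animals (Name);
""")


def query_plan(sql, params):
    """Return the detail text of SQLite's EXPLAIN QUERY PLAN for a statement."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[3] for row in rows)


# "Search every field": a substring match on each column, OR-ed together.
pattern = "%re%"
every_field_plan = query_plan(
    "SELECT * FROM Animals WHERE Name LIKE ? OR Species LIKE ? OR Color LIKE ?",
    (pattern, pattern, pattern),
)

# Targeted search: a prefix match on one indexed column, written as a range
# so that the ordered index can be used ("Po" <= Name < "Pp").
prefix = "Po"
upper_bound = prefix[:-1] + chr(ord(prefix[-1]) + 1)
targeted_plan = query_plan(
    "SELECT * FROM Animals WHERE Name >= ? AND Name < ?",
    (prefix, upper_bound),
)

print(every_field_plan)
print(targeted_plan)
```

On a typical SQLite build the first plan is a `SCAN` of the whole table and the second is a `SEARCH` through `ix_animals_name`. The exact wording varies between SQLite versions, and SQL Server's optimizer is a different engine, but a leading wildcard defeats a B-tree index in both for the same reason: the index is ordered by the start of the value.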

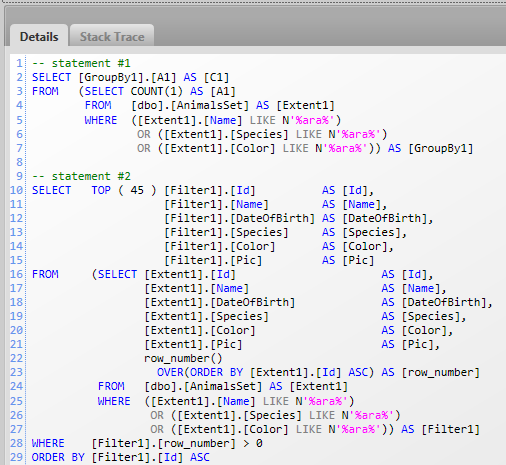

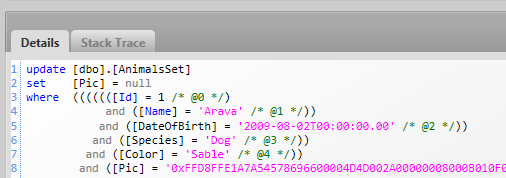

Interestingly, it seems that LS (LightSwitch) uses optimistic concurrency by default.

I wonder why they chose the slowest way to do it, comparing the original value of every column, instead of using version numbers.
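The difference between the two approaches fits in a few lines. This is a sketch in Python's `sqlite3` with a hypothetical `Animals` table and a hypothetical `Version` column; it is not what LightSwitch generates, only the two general patterns: putting every original column value in the `WHERE` clause, versus checking a single version number that is incremented on each write (on SQL Server this is commonly a `rowversion` column).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical table; the Version column exists only for the second strategy.
conn.execute(
    "CREATE TABLE Animals (Id INTEGER PRIMARY KEY, Name TEXT, Species TEXT, "
    "Color TEXT, Version INTEGER NOT NULL DEFAULT 1)"
)
conn.execute(
    "INSERT INTO Animals (Id, Name, Species, Color) VALUES (1, 'Rex', 'Dog', 'Brown')"
)


def update_by_original_values(original, new_color):
    """Every column value read earlier must still match, or nothing is updated."""
    cur = conn.execute(
        "UPDATE Animals SET Color = ? "
        "WHERE Id = ? AND Name = ? AND Species = ? AND Color = ?",
        (new_color, original["Id"], original["Name"],
         original["Species"], original["Color"]),
    )
    return cur.rowcount == 1  # 0 rows updated means a concurrent change


def update_by_version(row_id, expected_version, new_color):
    """Only the version read earlier must match; each write bumps it."""
    cur = conn.execute(
        "UPDATE Animals SET Color = ?, Version = Version + 1 "
        "WHERE Id = ? AND Version = ?",
        (new_color, row_id, expected_version),
    )
    return cur.rowcount == 1


# Two users load the same row (Version 1), then both try to save.
snapshot = {"Id": 1, "Name": "Rex", "Species": "Dog", "Color": "Brown"}
first_save = update_by_version(1, 1, "Black")                     # succeeds
stale_version_save = update_by_version(1, 1, "White")             # rejected: Version is now 2
stale_values_save = update_by_original_values(snapshot, "White")  # rejected: Color changed
print(first_save, stale_version_save, stale_values_save)
```

Both detect the conflict, but the version check compares one integer instead of every column. It also sidesteps a `NULL` pitfall: `Color = ?` is never true when the stored value is `NULL`, so an all-columns check like the one above needs `IS NULL` handling for nullable columns.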

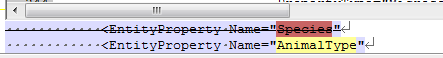

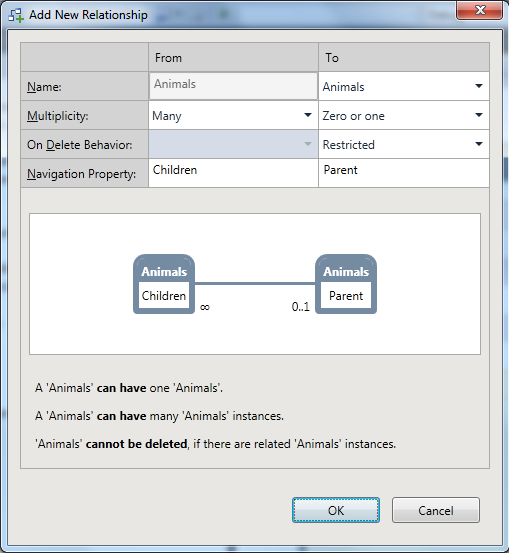

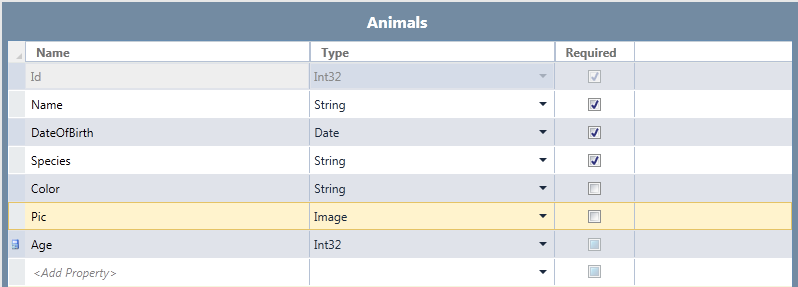

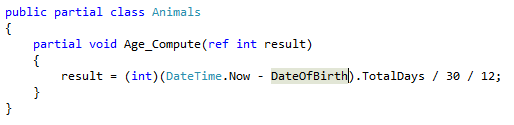

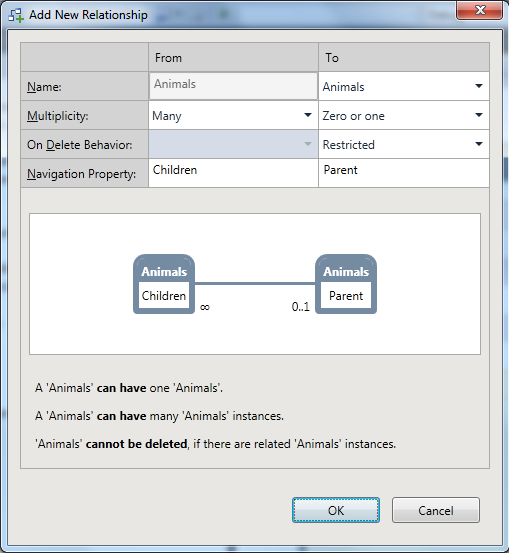

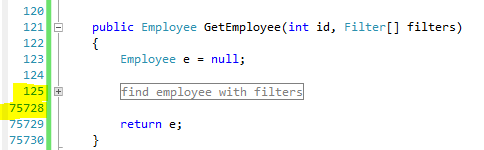

Now, let's see how it handles references. I think I ran into a problem here; consider:

Which generates:

This makes sense only if you think in terms of the underlying data model. It certainly seems backward to me.

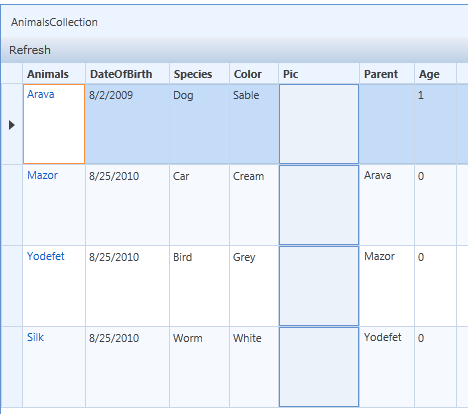

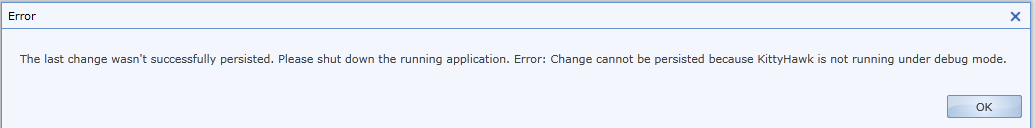

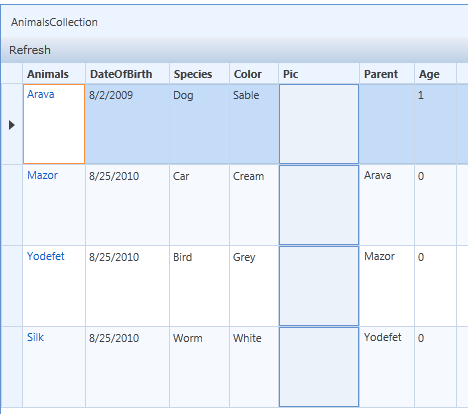

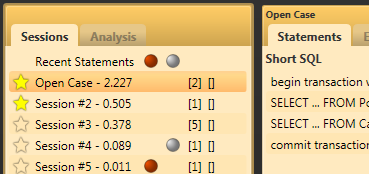

I fixed that and created four animals, each one the parent of another:

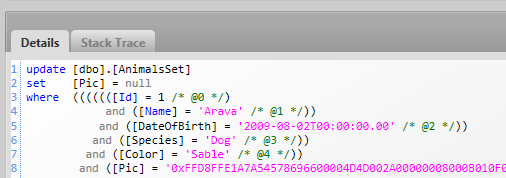

Which is nice, except that here is the SQL required to generate this screen:

```sql
-- statement #1
SELECT [GroupBy1].[A1] AS [C1]
FROM   (SELECT COUNT(1) AS [A1]
        FROM   [dbo].[AnimalsSet] AS [Extent1]) AS [GroupBy1]

-- statement #2
SELECT TOP ( 45 ) [Extent1].[Id]              AS [Id],
                  [Extent1].[Name]            AS [Name],
                  [Extent1].[DateOfBirth]     AS [DateOfBirth],
                  [Extent1].[Species]         AS [Species],
                  [Extent1].[Color]           AS [Color],
                  [Extent1].[Pic]             AS [Pic],
                  [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM   (SELECT [Extent1].[Id]              AS [Id],
               [Extent1].[Name]            AS [Name],
               [Extent1].[DateOfBirth]     AS [DateOfBirth],
               [Extent1].[Species]         AS [Species],
               [Extent1].[Color]           AS [Color],
               [Extent1].[Pic]             AS [Pic],
               [Extent1].[Animals_Animals] AS [Animals_Animals],
               row_number()
                 OVER(ORDER BY [Extent1].[Id] ASC) AS [row_number]
        FROM   [dbo].[AnimalsSet] AS [Extent1]) AS [Extent1]
WHERE  [Extent1].[row_number] > 0
ORDER BY [Extent1].[Id] ASC

-- statement #3
SELECT [Extent1].[Id]              AS [Id],
       [Extent1].[Name]            AS [Name],
       [Extent1].[DateOfBirth]     AS [DateOfBirth],
       [Extent1].[Species]         AS [Species],
       [Extent1].[Color]           AS [Color],
       [Extent1].[Pic]             AS [Pic],
       [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM   [dbo].[AnimalsSet] AS [Extent1]
WHERE  1 = [Extent1].[Id]

-- statement #4
SELECT [Extent1].[Id]              AS [Id],
       [Extent1].[Name]            AS [Name],
       [Extent1].[DateOfBirth]     AS [DateOfBirth],
       [Extent1].[Species]         AS [Species],
       [Extent1].[Color]           AS [Color],
       [Extent1].[Pic]             AS [Pic],
       [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM   [dbo].[AnimalsSet] AS [Extent1]
WHERE  2 = [Extent1].[Id]

-- statement #5
SELECT [Extent1].[Id]              AS [Id],
       [Extent1].[Name]            AS [Name],
       [Extent1].[DateOfBirth]     AS [DateOfBirth],
       [Extent1].[Species]         AS [Species],
       [Extent1].[Color]           AS [Color],
       [Extent1].[Pic]             AS [Pic],
       [Extent1].[Animals_Animals] AS [Animals_Animals]
FROM   [dbo].[AnimalsSet] AS [Extent1]
WHERE  3 = [Extent1].[Id]
```

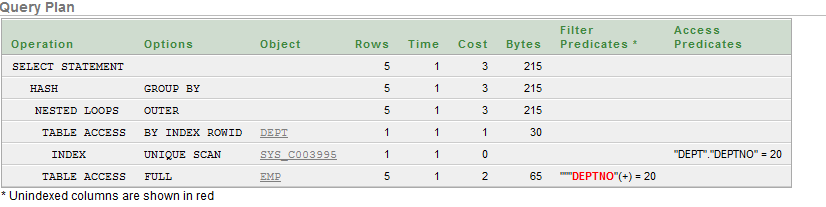

I told you there was a Select N+1 built into the product, now didn’t I?

Now, to make things just that much worse, it isn’t a Select N+1 that you’ll easily recognize, because it doesn’t happen within a single request. Instead, we have a multi-tier Select N+1.

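What actually happens is that we make one request to get the data, then an additional web request per returned row to get the data about its parent. For the four animals above, that is five SQL statements: a count, the page query, and one lookup for each of the parent Ids 1, 2 and 3.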

And I think you’ll have to admit that a Parent->>Children association is nothing out of the ordinary. In a typical system, where you may have many associations, this “feature” alone is going to slow the system to a crawl.
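The shape of the problem, and the usual fix, can be sketched without any web tier. In this Python `sqlite3` sketch the hypothetical `query` helper counts round trips, standing in for either a SQL statement or, in LightSwitch's case, a web request. The table and data are hypothetical: a chain of four animals, so there are three distinct parents, matching the three per-Id lookups in the profiler output. The first loader fetches each parent separately; the second collects the parent ids and loads them with a single `IN` query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical self-referencing table: ParentId plays the role of Animals_Animals.
conn.execute("CREATE TABLE Animals (Id INTEGER PRIMARY KEY, Name TEXT, ParentId INTEGER)")
# Hypothetical data: a chain where animal N is the parent of animal N + 1.
conn.executemany(
    "INSERT INTO Animals (Id, Name, ParentId) VALUES (?, ?, ?)",
    [(1, "A", None), (2, "B", 1), (3, "C", 2), (4, "D", 3)],
)

round_trips = 0


def query(sql, params=()):
    """Hypothetical helper: run one statement and count it as one round trip."""
    global round_trips
    round_trips += 1
    return conn.execute(sql, params).fetchall()


def load_with_n_plus_1():
    rows = query("SELECT Id, Name, ParentId FROM Animals ORDER BY Id")
    parents = {}
    for _, _, parent_id in rows:
        if parent_id is not None and parent_id not in parents:
            # One extra round trip per distinct parent.
            parents[parent_id] = query(
                "SELECT Id, Name FROM Animals WHERE Id = ?", (parent_id,)
            )[0]
    return rows, parents


def load_batched():
    rows = query("SELECT Id, Name, ParentId FROM Animals ORDER BY Id")
    parent_ids = sorted({p for _, _, p in rows if p is not None})
    if not parent_ids:
        return rows, {}
    placeholders = ", ".join("?" for _ in parent_ids)
    # One extra round trip in total, however many parents there are.
    parent_rows = query(
        f"SELECT Id, Name FROM Animals WHERE Id IN ({placeholders})", parent_ids
    )
    return rows, {row[0]: row for row in parent_rows}


_, parents_n_plus_1 = load_with_n_plus_1()
n_plus_1_trips = round_trips

round_trips = 0
_, parents_batched = load_batched()
batched_trips = round_trips

print(n_plus_1_trips, batched_trips)  # prints: 4 2
```

For four rows this is 4 round trips versus 2. With the 45-row page size seen in statement #2, the N+1 version can need up to 46, while the batched version stays at 2; a join that returns each row together with its parent would bring it down to one.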

![image_thumb[1]](http://ayende.com/Blog/images/ayende_com/Blog/WindowsLiveWriter/LightSwitchonthewire_8EF2/image_thumb%5B1%5D_f8593434-3dd0-471a-99bf-9fb9149ff5d8.png)