In my previous post, I wrote about the case of a medical provider that has a cloud database to store its data, as well as a whole bunch of doctors making house calls. The doctors need to have (some of) that information on their machines, and they need to push the updates they make locally back to the cloud.

However, given that their machines are in the field, and that we may encounter a malicious doctor, we aren’t going to fully trust these systems. We still want the system to function, though. The question is how will we do it?

Let’s try to state the problem in more technical terms:

- The doctor needs to pull data from the cloud (list of patients to visit, patient records, pharmacies and drugs available, etc).

- The doctor needs to be able to create patient records (exams made, checkup results, prescriptions, recommendations, etc).

- The doctor’s records need to be pushed to the cloud.

- The doctor should not be able to see any record that is not explicitly made available to them.

- The same applies for documents, attachments, time series, counters, revisions, etc.

Enforcing Distributed Data Integrity

The requirements are quite clear, but they do bring up a bit of a bother. How are we going to enforce them?

One way to do that would be to add some metadata rule for the document, deciding if a doctor should or should not see that document. Something like that:

In this model, a doctor will be able to get this document if they have any of the tags associated with the document.
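To make the rule concrete, here is a minimal sketch of the tag check in Python. The `Document` class, the `access_tags` field and the `clinics/33` tag are all made up for illustration; this is not how RavenDB stores metadata, just the "any shared tag grants access" idea.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    doc_id: str
    body: dict
    # Hypothetical metadata: the tags that are allowed to see this document.
    access_tags: set = field(default_factory=set)


def can_replicate(doc: Document, machine_tags: set) -> bool:
    """A machine may receive the document if it holds ANY of the document's tags."""
    return not doc.access_tags.isdisjoint(machine_tags)


# "clinics/33" is an invented tag, used only for this example.
patient = Document("patients/oren", {"name": "Oren"}, {"doctors/abc", "clinics/33"})

abc_allowed = can_replicate(patient, {"doctors/abc"})
xyz_allowed = can_replicate(patient, {"doctors/xyz"})
```

Here `abc_allowed` is true and `xyz_allowed` is false, since only the first machine shares a tag with the document.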

This can work, but it has a bunch of nontrivial problems and one huge problem that may not be obvious. Let’s start with the nontrivial issues:

- How do you handle non document data? Based on the owner document, probably. But that means that we have to have a parent document. That isn’t always the case.

- It isn’t always the case if the document was deleted, or is in a conflicted state.

- What do you do with revisions, if the access tags have changed? Which set of tags do you follow?

There are other issues, but as you can imagine, they are all around managing the fact that this model allows you to change the tags for the document and expect to handle this properly.

The huge problem, however, is what should happen when a tag is removed? Let’s assume that we have the following sequence of events:

- patients/oren is created, with access tag of “doctors/abc”

- That access tag is then removed

- Doctor ABC’s machine then connects to the cloud and sets up replication.

- We need to remove patients/oren from the machine, so we send a tombstone.

So far, so good. However, what about Doctor XYZ’s machine? At this time, we don’t know what the old tags were, and that machine may or may not have that document. It shouldn’t have it now, so do we send a tombstone there as well? That leads to an information leak, because it reveals document ids that the machine isn’t authorized to see.
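The leak is easier to see in code. The sketch below is a toy model of the cloud side, under the assumption that it only knows the document's current tags; `plan_replication` is a hypothetical helper, not a RavenDB API.

```python
def plan_replication(doc_id: str, current_tags: set, machine_tags: set) -> tuple:
    """Decide what to send to a machine, knowing only the CURRENT tags."""
    if current_tags & machine_tags:
        return ("document", doc_id)
    # The old tags are gone, so we cannot tell whether this machine ever
    # received the document. The only way to be sure it is cleaned up is
    # to send a tombstone, which carries the document id.
    return ("tombstone", doc_id)


current_tags = set()  # the "doctors/abc" tag was removed

abc = plan_replication("patients/oren", current_tags, {"doctors/abc"})
xyz = plan_replication("patients/oren", current_tags, {"doctors/xyz"})
```

Both machines get the same tombstone for `patients/oren`. For Doctor ABC that is the desired cleanup. For Doctor XYZ, who never had access, it discloses that the document exists.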

We need a better option.

Using the Document ID as the Basis for Data Replication

We can define that once created, the access tags are immutable, and that would help considerably. But that is still fairly complex to manage and opens up issues regarding conflicts, deletion and re-creation of a document, etc.

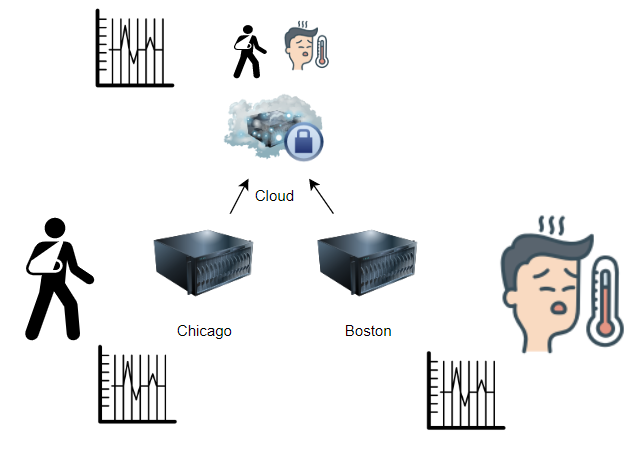

Instead, we are going to use the document’s id as the source for the decision to replicate the document or not. In other words, when we register the doctor’s machine, we set it up so it will allow:

| Incoming paths | Outgoing paths |
| --- | --- |
| | |

In this case, incoming and outgoing are defined from the point of view of the cloud cluster. So this setup allows the doctor’s machine to push updates to any document with an id that starts with “doctors/abc/visits/” or “tasks/doctors/abc/*”. In the other direction, the cloud will send all pharmacies and laboratories data, all the patients for the doctor’s clinic, the tasks for this doctor and, finally, the doctor’s record itself. Everything else will be filtered.
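Here is a rough sketch of what filtering by document id could look like. The two incoming paths are the ones quoted above; the outgoing paths (including the `clinics/33` id) are placeholders I made up to match the description, and the matching rules (a trailing `*` or `/` means prefix, anything else is an exact id) are an assumption for this example, not RavenDB’s actual behavior.

```python
def matches(doc_id: str, path: str) -> bool:
    """Assumed rules: trailing '*' or '/' is a prefix match, otherwise exact id."""
    if path.endswith("*"):
        return doc_id.startswith(path[:-1])
    if path.endswith("/"):
        return doc_id.startswith(path)
    return doc_id == path


def allowed(doc_id: str, paths: list) -> bool:
    return any(matches(doc_id, p) for p in paths)


# From the cloud's point of view: what the doctor's machine may push.
INCOMING = ["doctors/abc/visits/", "tasks/doctors/abc/*"]

# What the cloud will send. These path names are hypothetical.
OUTGOING = [
    "pharmacies/*",
    "laboratories/*",
    "clinics/33/patients/*",
    "tasks/doctors/abc/*",
    "doctors/abc",
]

can_push_visit = allowed("doctors/abc/visits/2020-07-01", INCOMING)
can_push_patient = allowed("clinics/33/patients/oren", INCOMING)
can_pull_patient = allowed("clinics/33/patients/oren", OUTGOING)
can_pull_other_doctor = allowed("doctors/xyz", OUTGOING)
```

With this configuration, the visit can be pushed and the patient can be pulled, but the patient cannot be pushed and another doctor’s record never leaves the cloud. Since the decision depends only on the id, there are no old tags to track and nothing to leak when access changes.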

This Model is Simple

This model is simple: it provides a list of outgoing and incoming paths for the data that will be replicated. It is also surprisingly powerful. Consider the implications of the configuration above.

The doctor’s machine will have a list of laboratories and pharmacies (public information) locally. It will have the doctor’s own document as well as records of the patients in the clinic. The doctor is able to create and push patient visit records. Most interestingly, the tasks for the doctor are defined to allow both push and pull. The doctor will receive updates from the office about new tasks (home visits) to make, and can mark them complete and have that change show up in the cloud.

The doctor’s machine (and the doctor as well) is not trusted. So we limit the data that they can see to a need-to-know basis, and we also limit what they can push back to the cloud. Even with these limitations, there is a lot of freedom in the system, because once you have this defined, you can write your application on the cloud side and on the laptop and just let RavenDB handle the synchronization between them. The doctor doesn’t need access to a network to be able to work, since they have a RavenDB instance running locally and the cloud instance will sync up once there is any connectivity.

We are left with one issue, though. Note that the doctor can get the patients’ files, but is unable to push updates to them. How is that going to work?

The reason that the doctor is unable to write to the patients’ files is that they are not trusted. Instead, they will send a visit record, which contains their findings and recommendations. On the cloud, we’ll validate the data, merge it with the patient’s actual record, apply any business rules and then update the record. Once that is done, the updated record will show up on the doctor’s machine magically. In other words, this setup is meant for untrusted input.
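As an illustration of that cloud-side step, here is a small sketch. The field names (`doctor`, `patient`, `findings`, `recommendations`, `history`) and the validation rules are invented for this example; real business rules would be far more involved.

```python
def apply_visit(patient: dict, visit: dict, doctor_id: str) -> dict:
    """Validate an untrusted visit record and merge it into a COPY of the patient record."""
    if visit.get("doctor") != doctor_id:
        raise ValueError("visit claims to come from a different doctor")
    if visit.get("patient") != patient["id"]:
        raise ValueError("visit refers to a different patient")
    findings = visit.get("findings")
    if not isinstance(findings, str) or not findings.strip():
        raise ValueError("visit has no findings")

    entry = {
        "doctor": doctor_id,
        "findings": findings.strip(),
        "recommendations": list(visit.get("recommendations", [])),
    }
    updated = dict(patient)
    updated["history"] = list(patient.get("history", [])) + [entry]
    return updated


patient = {"id": "patients/oren", "history": []}
visit = {
    "doctor": "doctors/abc",
    "patient": "patients/oren",
    "findings": "Mild fever",
    "recommendations": ["rest"],
}
updated = apply_visit(patient, visit, "doctors/abc")
```

The doctor’s machine only ever writes the visit record. The patient document is changed by code running in the cloud, and the result then flows back to the doctor through the outgoing paths.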

There are more details that we can get into, but I hope that this outlines the concepts clearly. This is not a RavenDB 5.0 feature, but it will be part of the next RavenDB release, due around September.
