This is an interesting and somewhat confusing topic, so I decided to write down my understanding of how certificate signatures work with cross signing.

A certificate is basically an envelope over a public key and some metadata (name, host, issuer, validity dates, etc.). There is a format called ASN.1 that specifies how the data is structured, and there are multiple encoding options (DER / BER). None of that really matters for this purpose. A lot of the complexity of certificates can be put down to issues in the surrounding technology. It would probably be a lot more approachable if the format were JSON or even XML. However, ASN.1 dates to 1984. Considering that this was the age of MS-DOS 3.0, and the very first Linux was 7 years in the future, it is understandable why this wasn’t done.

I’m talking specifically about the understandability issue here, by the way. The fact that certificates are basically opaque blocks that require special tooling to operate on and understand has made the whole thing a lot more complex than it should be. A good example of that can be seen here; this will parse the certificate using ASN.1 and let you see its contents.

Here is the same data (more or less) as a JSON document:

The only difference between the two options is that one is easy to parse, and the other… not so much, to be honest.

Now that we have looked at the format, let’s go over the important fields in the certificate itself. A certificate is basically just the public key, the name (or subject alternative names) and the validity period.

In order to establish trust in a certificate, we need to trace it back up to a valid root certificate on our system (I’m not going to touch that topic in this post). The question now is, how do we create this chain?

Well, the certificate itself will tell us. The certificate contains the name of the issuer, as well as the digital signature that allows us to verify that the claimed issuer is the actual issuer. Let’s talk about that for a bit.

How do digital signatures work? We have a key pair (public / secret) that we associate with a particular name. Given a set of bytes, we can use the secret part of the key pair to generate a cryptographic signature. Another party can then take the digital signature, the original bytes we signed, and the public key that was used, and validate that they match. The details of how this works are covered elsewhere, so I’ll just assume that you take the math on faith. The most important aspect is that we don’t need the secret part of the key to do the verification. That allows us to trust that a provided value matches the value that was signed by the secret key, knowing only the public portion of it.
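To make the "verify with only the public key" point concrete, here is a minimal sketch using textbook RSA over two well-known Mersenne primes. Everything in it (the key size, the lack of padding, the `sign` and `verify` helper names) is an assumption chosen for illustration; it is a toy and not how a real certificate library does it.

```python
import hashlib

# Toy textbook RSA, for illustration only: no padding, tiny key, NOT secure.
P = 2**127 - 1                       # a Mersenne prime
Q = 2**89 - 1                        # another Mersenne prime
N = P * Q                            # public modulus
E = 65537                            # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # secret exponent (needs Python 3.8+)


def digest(data: bytes, modulus: int) -> int:
    """Hash the bytes and reduce the result into the range of the modulus."""
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % modulus


def sign(data: bytes, secret_exponent: int, modulus: int) -> int:
    """Only the holder of the secret exponent can produce this value."""
    return pow(digest(data, modulus), secret_exponent, modulus)


def verify(data: bytes, signature: int, public_exponent: int, modulus: int) -> bool:
    """Needs only the public half of the key pair."""
    return pow(signature, public_exponent, modulus) == digest(data, modulus)


message = b"CN=www.example.com"
signature = sign(message, D, N)

print(verify(message, signature, E, N))                  # True
print(verify(b"CN=www.evil.example", signature, E, N))   # False
```

Note that `verify` never sees `D`. That asymmetry is the property the rest of this post relies on.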

As an aside, doing digital signatures for JSON is a PITA. You need to establish what the canonical form of the JSON document is. For example: with or without whitespace? With the fields sorted, or in arbitrary order? If sorted, using what collation? What about duplicated keys? And so on. The nice thing about ASN.1 is that at least no one argues about how it should look. In fact, no one cares.
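A quick sketch of why this matters: the same logical document can serialize to different bytes, and a signature is computed over bytes. The field names and values below are made up for the example, and the `canonical` helper is just one ad hoc convention (sorted keys, no whitespace, UTF-8), not a standard.

```python
import hashlib
import json

# Hypothetical, highly simplified certificate fields.
cert_fields = {"subject": "www.example.com", "issuer": "Z1", "not_after": "2022-01-01"}

# Two equally valid serializations of the same logical document.
pretty = json.dumps(cert_fields, indent=2)
compact = json.dumps(cert_fields, separators=(",", ":"))


def sha256_hex(text: str) -> str:
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# Different bytes, so different hashes, so a signature over one fails on the other.
print(sha256_hex(pretty) == sha256_hex(compact))  # False


def canonical(doc: dict) -> bytes:
    """One possible convention: sorted keys, no whitespace, UTF-8."""
    text = json.dumps(doc, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return text.encode("utf-8")


# Same fields in a different order produce the same canonical bytes.
reordered = {"not_after": "2022-01-01", "issuer": "Z1", "subject": "www.example.com"}
print(canonical(cert_fields) == canonical(reordered))  # True
```

Both the signer and the verifier have to agree on that convention ahead of time, which is exactly the argument that DER sidesteps.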

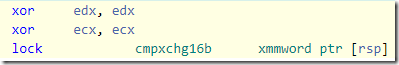

In order to validate a certificate, we need to do the following:
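Here is a minimal sketch of a `validate()` function in Python. The `Cert` record, the toy RSA helpers, and the split between `intermediates` and `trusted_roots` are assumptions made for illustration; real validation checks many more things (extensions, revocation, key usage and so on).

```python
import hashlib
from dataclasses import dataclass
from datetime import datetime, timezone

E = 65537  # shared public exponent for the toy keys below


def toy_keypair(p, q):
    """Textbook RSA from two primes: (public modulus, secret exponent). NOT secure."""
    return p * q, pow(E, -1, (p - 1) * (q - 1))


@dataclass
class Cert:
    name: str
    issuer: str            # only the issuer's *name* is stored in the certificate
    public_key: int        # toy RSA modulus
    not_before: datetime
    not_after: datetime
    signature: int = 0

    def signed_bytes(self) -> bytes:
        """Everything except the signature itself."""
        parts = [self.name, self.issuer, str(self.public_key),
                 self.not_before.isoformat(), self.not_after.isoformat()]
        return "|".join(parts).encode()


def digest(data, modulus):
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % modulus


def issue(cert, issuer_modulus, issuer_secret):
    """Sign the certificate body with the issuer's secret key."""
    cert.signature = pow(digest(cert.signed_bytes(), issuer_modulus), issuer_secret, issuer_modulus)
    return cert


def signature_ok(cert, issuer_public_key):
    return pow(cert.signature, E, issuer_public_key) == digest(cert.signed_bytes(), issuer_public_key)


def validate(cert, intermediates, trusted_roots, now, max_depth=8):
    for _ in range(max_depth):
        if not (cert.not_before <= now <= cert.not_after):
            return False                              # expired or not yet valid
        # Lookup is by name only; how it is done is up to the client.
        issuer = trusted_roots.get(cert.issuer) or intermediates.get(cert.issuer)
        if issuer is None or not signature_ok(cert, issuer.public_key):
            return False                              # end of chain or bad signature
        if issuer.name in trusted_roots:
            return issuer.not_before <= now <= issuer.not_after
        cert = issuer                                 # keep walking up
    return False


start = datetime(2021, 1, 1, tzinfo=timezone.utc)
end = datetime(2031, 1, 1, tzinfo=timezone.utc)
root_n, root_d = toy_keypair(2**521 - 1, 2**607 - 1)
z1_n, z1_d = toy_keypair(2**107 - 1, 2**127 - 1)
leaf_n, _ = toy_keypair(2**61 - 1, 2**89 - 1)

root = issue(Cert("Root Sep21", "Root Sep21", root_n, start, end), root_n, root_d)
z1 = issue(Cert("Z1", "Root Sep21", z1_n, start, end), root_n, root_d)
leaf = issue(Cert("www.example.com", "Z1", leaf_n, start, end), z1_n, z1_d)

now = datetime(2021, 9, 1, tzinfo=timezone.utc)
print(validate(leaf, {"Z1": z1}, {"Root Sep21": root}, now))  # True
print(validate(leaf, {"Z1": z1}, {}, now))                    # False: no trusted root
```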

There are a few things to note in this code. We do the lookup of the issuer certificate by name. The name isn’t anything special, mind you. And the process for actually doing the lookup by name is completely in the hands of the client; it is an implementation detail as far as the protocol is concerned.

You can see that we validate the time, then check whether the issuer certificate that we found can verify the digital signature of the certificate we are validating. We continue to do so until we find a trusted root authority or reach the end of the chain. There are a lot of other details, but these are the important ones for our needs.

Here is a really important tidbit of information: we find the issuer certificate using a name, and we establish trust by verifying the signature of the certificate with the issuer’s public key. The actual certificate only carries the signature; it knows nothing about the issuer except for its name. That is where cross signing can be applied.

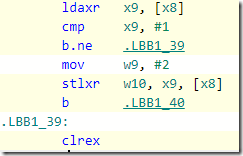

Consider the following set of (highly simplified) certificates:

The www.example.com certificate is signed by the Z1 certificate, which is signed by the Root Sep21 certificate, which is signed by itself. A root certificate is always signed by itself. Now, let’s add cross signing to this mix. I’m not going to touch any of the certificates we have so far. Instead, I’m going to have:

This is where things get interesting. You’ll notice that the name of the intermediate certificate is the same in both cases, as well as the public key. Everything else is different (including validity periods, thumbprint, parent issuer, etc). And this works. But how does this work?

Look at the validate() code. What we actually need in order to verify the certificate is an issuer certificate with the same name (so we can look it up) and the same public key (so the signature checks out). We aren’t using any of the other data in the issuer certificate, so if we reuse the same name and public key in another certificate, we can establish another chain entirely from the same source certificate.
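The following sketch shows that property in isolation, again with toy textbook RSA. The `Other Root` name, the `not_after` values, and the helper functions are hypothetical; the only point is that two intermediate certificates sharing a name and a public key both verify the same, untouched leaf certificate.

```python
import hashlib
from dataclasses import dataclass

E = 65537


def toy_keypair(p, q):
    """Textbook RSA: returns (public modulus, secret exponent). NOT secure."""
    return p * q, pow(E, -1, (p - 1) * (q - 1))


@dataclass(frozen=True)
class Cert:
    name: str
    issuer: str
    public_key: int
    not_after: str
    signature: int


def signed_bytes(name, issuer, public_key, not_after):
    return f"{name}|{issuer}|{public_key}|{not_after}".encode()


def digest(data, modulus):
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % modulus


def issue(name, public_key, not_after, issuer_name, issuer_modulus, issuer_secret):
    """Inputs are the subject's *public* key and the issuer's secret key."""
    body = signed_bytes(name, issuer_name, public_key, not_after)
    sig = pow(digest(body, issuer_modulus), issuer_secret, issuer_modulus)
    return Cert(name, issuer_name, public_key, not_after, sig)


def signed_by(cert, issuer):
    """Match the issuer by name, then check the signature with its public key."""
    body = signed_bytes(cert.name, cert.issuer, cert.public_key, cert.not_after)
    expected = digest(body, issuer.public_key)
    return cert.issuer == issuer.name and pow(cert.signature, E, issuer.public_key) == expected


root_n, root_d = toy_keypair(2**521 - 1, 2**607 - 1)
other_n, other_d = toy_keypair(2**1279 - 1, 2**2203 - 1)
z1_n, z1_d = toy_keypair(2**107 - 1, 2**127 - 1)
leaf_n, _ = toy_keypair(2**61 - 1, 2**89 - 1)

root = issue("Root Sep21", root_n, "2031", "Root Sep21", root_n, root_d)
other = issue("Other Root", other_n, "2025", "Other Root", other_n, other_d)
z1 = issue("Z1", z1_n, "2026", "Root Sep21", root_n, root_d)
# Same name and public key as z1, different issuer and validity.
# Z1's secret key (z1_d) is not needed to create it.
z1_cross = issue("Z1", z1_n, "2024", "Other Root", other_n, other_d)
leaf = issue("www.example.com", leaf_n, "2022", "Z1", z1_n, z1_d)

print(signed_by(leaf, z1), signed_by(z1, root))                # True True
print(signed_by(leaf, z1_cross), signed_by(z1_cross, other))   # True True
print(signed_by(z1_cross, root))                               # False
```

The leaf certificate is byte-for-byte the same in both chains; only the intermediate that the client happens to pick differs.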

The question that we have to answer now is simple: how do we select which chain to use? In the validate() code above, we simply select the first chain that has an element that matches. In the real world, we may actually have multiple certificates with the same public key in our system. Let’s take a look:

Take a look at 8d02536c887482bc34ff54e41d2ba659bf85b341a0a20afadb5813dcfbcf286d: these are all certificates that have the same name and public key, issued at different times and by different issuers.

We don’t generally think about the separate components that make up a certificate, but this separation turns out to be a very useful property.

Note that cross signed here is a misnomer. Take a look at this image, which shows the status of the Let’s Encrypt certificates as of August 2021.

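You can see that the R3 certificate is “signed” by two root certificates: DST Root CA X3 and ISRG Root X1. That isn’t actually the case: there isn’t a set of digital signatures on the R3 certificate, just a single one. What we have instead are two separate R3 certificates with the same name and public key, each carrying one signature from a different issuer.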

That means that we can produce a different chain of trust from an existing certificate, without modifying it at all. For example, I can create a new R3 certificate, issued by Yours Truly CA, which will validate just fine, as long as you trust the Yours Truly CA. For fun, I don’t even need to have the secret key for the R3 certificate; all the information I need to generate the new R3 certificate is public, after all. I wouldn’t be able to generate valid leaf certificates, but I can add parents at will.

The question now becomes, how will a client figure out which chain to use? That is where things get really interesting. During TLS negotiation, when we get the server certificate, the server isn’t going to send us just a single certificate; instead, it is going to send us (typically) at least the server certificate it will use to authenticate the connection as well as an issuer’s certificate.

The client will then (usually, but it doesn’t have to) use the second certificate that was sent from the server to walk up the chain. Most certificate chains are fairly limited in size. For example, using the R3 certificate that I keep referring back to, you can see that the certificates it issues cannot properly sign child certificates of their own (that is because its Path Length Constraint is zero, so there is no more “room” below it for another certificate that signs valid certs).
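As a rough sketch of what that constraint means, the check below walks a chain ordered leaf first and rejects it if any CA has more CA certificates beneath it than its path length allows. The `Cert` tuple, the `Rogue Sub CA` name, and the function are assumptions for illustration, and the check ignores details such as self-issued certificates.

```python
from typing import List, NamedTuple, Optional


class Cert(NamedTuple):
    name: str
    is_ca: bool
    path_len: Optional[int]  # None means "no limit stated"


def path_length_ok(chain: List[Cert]) -> bool:
    """chain is ordered leaf first, root last."""
    for index, cert in enumerate(chain[1:], start=1):
        if not cert.is_ca:
            return False                 # only a CA may sign other certificates
        ca_certs_below = index - 1       # CA certificates between this one and the leaf
        if cert.path_len is not None and ca_certs_below > cert.path_len:
            return False
    return True


leaf = Cert("www.example.com", is_ca=False, path_len=None)
r3 = Cert("R3", is_ca=True, path_len=0)
root = Cert("ISRG Root X1", is_ca=True, path_len=None)
rogue = Cert("Rogue Sub CA", is_ca=True, path_len=None)  # hypothetical CA issued by R3

print(path_length_ok([leaf, r3, root]))         # True
print(path_length_ok([leaf, rogue, r3, root]))  # False: R3 allows zero CAs below it
```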

What will usually happen is that the client will use the second certificate to look up the actual root certificate in the local certificate authority store. That must be local, otherwise it wouldn’t be trusted. So by sending both the certificate itself and its issuer from the server, we can ensure that the client will be able to do the whole certificate resolution without having to make an external call*.

* Not actually true: the client may decide to ignore the server “recommendation”, it will likely issue an OCSP call or CRL call to validate the certificate, etc.

I hope that this will help you make sense of how we are using cross signed certificates to add additional trust chains to an existing certificate.