This post is the text version of a presentation I gave a few weeks ago. It is a reference to this classic post by Joel.

In 2015, I decided that we needed to reboot RavenDB. I did that with the full understanding that this is going to be a huge task, including knowing that it will be bigger than what I can project, even if I take this line of thinking into account.

RavenDB 1.0 was written a decade ago. It was written because it didn’t leave me alone and I wanted to get it out of my head. At the time, I was focused more on getting it out the door (and my head) and was taking shortcuts in the implementation. That allowed me to cut down dramatically on the amount of work that is involved in it. At the same time, this put some constraints on the implementation and architecture. The most obvious one was the reliance on Esent, which tied us to Windows. C# as the implementation language, to a lesser extent, also had the same issue until .NET Core. (Yes, I’m aware of Mono, I have no idea how people managed to run anything beyond hello world on it. We tried porting RavenDB to Mono multiple times, and I still bear the scars.)

I went back and looked at our release notes. In literally every major release, we spent a significant amount of time and effort on “performance optimizations”. In January of 2015 we had a few sprints that were dedicated to just this issue. We went down to assembly code in some cases, analyzed our hotspots and optimized things in a very serious manner. We got some amazing performance improvements, in some cases reducing the runtime by orders of magnitude. But it still felt like we were hitting a limit. What is more, experience from customers in production showed us that there were a number of cases where we ran into problematic behavior. This mostly happened on large / complex projects. And nearly all those issues were related in one way or another to memory and the GC.

Our indexing, for example, would be reading data from disk into memory. That was meant to save disk I/O during indexing, and included pretty smart prefetching and monitoring behavior. It also had the side effect of loading documents (which can be large) into managed memory and holding on to them long enough to push them into Gen1 and Gen2. Then they would be indexed and need to go away. But given that they were pushed to a higher generation… that meant a more expensive collection cycle.

RavenDB was created before the pervasive use of fast disks, and it turns out that in some cases, reading the data from disk was actually faster than parsing it using JSON.Net. In other words, our “I/O bound” process of reading documents was actually dominated by the time it took to parse the JSON text. That does not include the costs of actually cleaning up this memory. Complex JSON documents can have a lot of objects, and the cost of GC rises with the number of objects that are being tracked. These were pretty fundamental problems, which I didn’t think we could fix in a piecemeal fashion.

That time also coincided with a peak in the number of support incidents that we got. Unlike many other open source projects, we treat support as a cost center, not a revenue center. In other words, we don’t want to have more support, that isn’t how we want to make money. Being a database, we were frequently at the heart of things and our customers and users are very sensitive to any issue that might arise. I’m painting somewhat of a bleak picture, I’m aware. It wasn’t nearly that bad from the point of view of any particular customer. But on aggregate, from our point of view, it felt like a nasty game of whack a mole. As soon as we provided a solution to one customer’s issue, another would pop up, somewhat related but just different enough to not be fixed by the previous change. These weren’t regressions, mind. These were just a lot of places where the changing times violated some of our core assumptions.

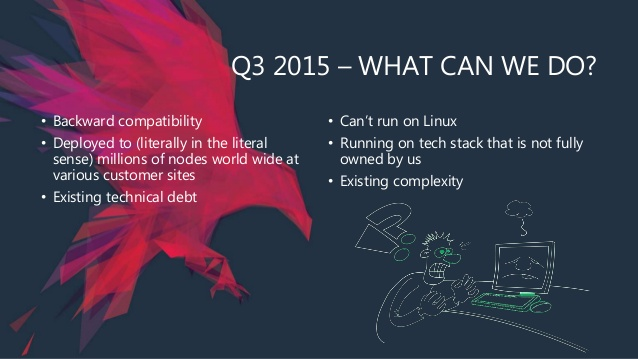

Toward the end of 2015, I sat down and really thought about what we needed and were missing. This was the situation as I saw it.

There was also the issue that we have learned a lot over the years. We built Voron (our storage engine) from the ground up, we had a lot of experience running in production and we knew what kind of tasks our customers were using us for. I kept thinking that I wished I had a time machine and could do things over properly. Given that my time machine is still in the shop, I decided that we had two options:

- Minor fixes along the way – slowly improving our behavior as we stride toward the desired architecture and usage.

- Break it all – essentially start from scratch, with a new architecture and write it the way we want it to be written.

The obvious choice was to do this slowly. The problem was that I really couldn’t think of a good way to actually achieve that. The kind of changes we wanted to make started from replacing the most fundamental structure we had, how we represent JSON in our document database, and got more complex from there. We wanted to change how we store data on disk, how we index data, how we … literally every single feature that we had was going to be transformed in some way.

We also had additional issues. The Windows only limitation was really hurting us and we really wanted to get a good Linux story going. The support burden was also at the very top of my mind as we considered what to do. In the end, we came up with the following decisions:

- We don’t require backward compatibility. Either on the server side or client side.

  - That was the hardest decision, but it meant that we could actually tackle some of the biggest issues freely and without constraint.

  - That meant that we wanted to keep the same feeling, but be able to make changes to corners of the API that atrophied.

- Support cost and simplified operations as a primary concern.

  - This meant that, at the design level, we took into account debugging considerations.

- Order of magnitude performance improvement across the board.

  - Otherwise, it isn’t worth the effort.

- Cross platform from the get go.

That was in Sep 2015. I sat down and wrote a design document that outlined the new architectural approach, spiked a few things and then we were off to the races. I blogged all about the process extensively, so I’m not going to repeat that.

We decided to use DNX (which became .NET Core) at a very early stage. Initially, I don’t believe that we even had a debugger, and most of our builds had to be triggered from the command line. I guess that if you are going to make a risky decision, you might as well make a few others…

I’ll say that I made a lot of preparations up front for the possibility of failure. Part of the reason we went with DNX was that we knew that, worst case scenario, we could spend a few days and get it working on the full .NET framework if we had to. I took this step with a lot of backward glances to make sure that we wouldn’t get lost.

Alongside our experience in supporting RavenDB, we also ran a UX study and combed through all the incident reports we generate from support calls. The idea was to take as much time as necessary to get things as right as we could. The studio change between 3.5 and 4.0 is massive, and was driven by getting a talented professional to design each part of the UI, guided by a real world UX study and analysis. We kept asking “where does it hurt?” and whenever we found a cause of pain we worked to alleviate it.

Some of our guiding principles during that phase of the project were:

- Cross platform from the get go.

  - We couldn’t afford to port it midway through. Too complex and prone to failure.

- OWN the stack.

  - We don’t want to use any components that we don’t have good visibility into and the ability to work with.

  - In particular, anything that is a core competence should be owned and built by us. For our scenario, that means primarily the storage engine.

- Build for performance.

  - I wasn’t kidding about requiring x10 performance improvement. We had one or two devs at all times running benchmarks and fixing the performance of every completed feature.

- Build for operations.

  - Each and every design decision should be considered in light of its operational behavior.

  - In particular, we excised any feature that relied on hard to figure out technology or integration (I’m looking at you, Windows Auth).

  - This included changing the design of the software so a core dump would make it easier to figure out what is going on. We also explicitly opened up a lot of the internal behavior as debug endpoints and plugged them into the studio so operators would have greater visibility. As an aside, that was very helpful in figuring out our performance bottlenecks and we worked to improve that part of the project as we strived for ever faster performance.

- Reducing the support burden as a major goal.

  - A lot of the previous points tie into this. But this is also where we combed over any issue that had a “user misconfigured / misused” cause and built alerts directly into RavenDB to give the user early warnings about common issues.

  - We defined a set of common scenarios (reading / writing documents, for example) and then we spent months designing the whole system so it would make these fast, seamless and easy.

A good example of that is how we store documents in RavenDB now. We have our own binary format that allows us to avoid parsing the document when reading from disk, plays nicely with memory mapped files (which is how Voron, our storage engine, works) and can effectively allow us to hand a pointer to a memory mapped buffer and start working with that as a JSON document without:

- Allocating any managed memory

- Parsing JSON

- Requiring caching / pre-fetching, etc.
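To make the idea concrete, here is a minimal sketch in Python. It is not RavenDB’s actual format: the layout (a field count, then a table of offsets, then the raw bytes) and the names `encode_document`, `read_field` and `demo` are all made up for illustration. The point is only that a reader can locate one field through an offset table inside a memory mapped buffer, without parsing the rest of the document.

```python
import mmap
import os
import struct
import tempfile

# Hypothetical toy layout (NOT RavenDB's real format):
#   [u32 field_count]
#   field_count * [u32 name_off, u32 name_len, u32 val_off, u32 val_len]
#   raw UTF-8 bytes for names and values
HEADER = struct.Struct("<I")
ENTRY = struct.Struct("<IIII")


def encode_document(fields):
    """Serialize a flat dict of str -> str into the toy binary layout."""
    table_size = HEADER.size + ENTRY.size * len(fields)
    entries = []
    payload = bytearray()
    for name, value in fields.items():
        name_bytes = name.encode("utf-8")
        value_bytes = value.encode("utf-8")
        name_off = table_size + len(payload)
        payload += name_bytes
        val_off = table_size + len(payload)
        payload += value_bytes
        entries.append(
            ENTRY.pack(name_off, len(name_bytes), val_off, len(value_bytes))
        )
    return HEADER.pack(len(fields)) + b"".join(entries) + bytes(payload)


def read_field(buffer, wanted):
    """Return a zero-copy memoryview over the value of `wanted`, or None.

    Only the offset table is scanned; other values are never decoded.
    """
    view = memoryview(buffer)
    wanted_bytes = wanted.encode("utf-8")
    (count,) = HEADER.unpack_from(view, 0)
    for i in range(count):
        name_off, name_len, val_off, val_len = ENTRY.unpack_from(
            view, HEADER.size + i * ENTRY.size
        )
        if view[name_off:name_off + name_len] == wanted_bytes:
            return view[val_off:val_off + val_len]
    return None


def demo():
    """Write a document to a temp file, map it, and read a single field."""
    data = encode_document({"id": "users/1", "name": "example"})
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(data)
        with open(path, "rb") as f:
            mapped = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)
            try:
                value = read_field(mapped, "name")
                result = bytes(value).decode("utf-8")
                value.release()  # drop the view before closing the map
            finally:
                mapped.close()
    finally:
        os.remove(path)
    return result
```

Python still allocates small objects along the way, so this does not show the “no managed allocations” part. It only shows the access pattern: the operating system pages the file in on demand, and the reader jumps straight to the bytes it needs.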

We spent a lot of time thinking about what we want to do, and then we looked into how the operating system expects us to behave. The idea is that if we play to the operating system’s expectations, we can reap a lot of benefits from the OS’ own behavior. This is how RavenDB handles loading data to memory. We let the operating system handle it and just make sure that our own behavior is both predictable and applicable to the OS’ optimizations.

I mentioned that GC was the bane of our existence, right? We moved a lot of the memory management in RavenDB to unmanaged code and handle that explicitly. That gave us the advantage that we know a lot more about how we should expect to use the memory and can spend the time to make this highly optimized.

On the debugging side of things, we made some changes to the design of RavenDB with the intent to make it easier to debug and analyze core dumps. For example, most of the long running threads are named, so it is easy to figure out who they belong to (and not just what they are currently doing). For that matter, long running tasks are using synchronous mode, specifically because it means that we can drop in the debugger / core dump and look at their state. This is much harder to do with async methods. You might have noticed that I mentioned core dumps a few times, right? These are essential to figuring out what is going on with your software on production systems. We learned a lot about production debugging over the years and with RavenDB 4.0 we took steps to make things easier. For example, many data structures in RavenDB have an extra field called tag that is there specifically to provide debugging information about the value if we are looking at the value in the debugger.
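As a rough illustration of these two habits (named long running threads and a debug-only tag on data structures), here is a small Python sketch. Everything in it is hypothetical: `PendingBatch`, `indexing_loop`, `start_named_worker`, the thread name and the tag text are invented for the example and are not taken from the RavenDB code base.

```python
import queue
import threading
from dataclasses import dataclass


@dataclass
class PendingBatch:
    """A work item; `tag` exists only to help whoever inspects it later."""
    items: list
    tag: str = ""  # hypothetical debug-only field: who created this and why


def indexing_loop(work, processed):
    """Plain synchronous loop: its whole state sits on one thread's stack."""
    while True:
        batch = work.get()
        if batch is None:  # sentinel value asks the loop to stop
            return
        processed.append(
            (threading.current_thread().name, batch.tag, len(batch.items))
        )


def start_named_worker(database_name, work, processed):
    """Start a dedicated thread whose name says which database owns it."""
    worker = threading.Thread(
        target=indexing_loop,
        args=(work, processed),
        name=f"Indexing of {database_name}",
        daemon=True,
    )
    worker.start()
    return worker


def run_demo():
    """Run one batch through a named worker and report what was observed."""
    work = queue.Queue()
    processed = []
    worker = start_named_worker("Northwind", work, processed)
    running = [t.name for t in threading.enumerate()]
    work.put(PendingBatch(items=[1, 2, 3], tag="created by bulk insert"))
    work.put(None)
    worker.join()
    return running, processed
```

When looking at a thread list in a debugger or a dump, a name like `Indexing of Northwind` says who owns the thread, and the `tag` on the batch says where the value came from, without having to reconstruct either from the call stack.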

An obvious question for this project was whether we should stay on .NET or move to an unmanaged language. I considered this seriously, with Rust, C/C++ and Go being the top contenders as the implementation language. I decided to stay with .NET for several reasons. Productivity was right there at the top. We already had a team that was well versed in .NET, and while that isn’t a blocker, it was a consideration. The tooling around .NET is leagues ahead of anything else that I have seen. That includes both write time (where Rider / ReSharper rules) and debug time (I found nothing remotely close to Visual Studio for debugging non trivial code easily). The cross platform angle, which was the most serious issue for us, was resolved with .NET Core.

Rust wasn’t mature enough at the time (2015) and even today I think that a language that prides itself on being hard to learn isn’t a good choice. C++ was a strong contender, but the slow compilation times were an issue. The tooling is similar, but inferior in many respects. Cross platform C++ is possible, and modern C++ is very different from what I remember. However, it comes with a very high degree of complexity and would take a lot of time to master again properly. C (distinct from C++) is a much simpler language. It still has the compilation speed issues, but the language is much simpler. I think that if it had a defer mechanism built in it would be a much nicer language. Go was ruled out because if I’m going to be writing everything from scratch, I might as well go all the way down to C’s level and not stop with something that still has GC pauses.

The choice of C# and CoreCLR has been vindicated. The project team and the community at large put a large emphasis on performance and we keep getting more and better ways to handle low level details while still being able to use higher level concepts when needed. And the tooling… dear God. I routinely work with other platforms, testing things out, but there is nothing that comes close to the toolsets that are available for C#.

An interesting wrinkle with the 4.0 release was that we started it before the 3.5 release was even out. For a while, we had a small team working on the foundations of 4.0 while the rest of us were busy hammering in the last details of RavenDB 3.5.

As soon as we had the bare minimum to go (basically, it compiled and could save a single document and even regurgitate it back up again) we started heavy parallelization of the work. We had a team working on indexes while another was dealing with (even at this early stage) performance and another working on the user interface. In that time frame, we hired a few more people and could really see the benefits of all of the separate teams working in concert. One of the priorities of this method was to get to a demoable state. In fact, at some point we had over 30% of the people working on either the UI directly or UI related infrastructure.

One of the things we kept hearing back is that the UI and the insight it provided into what is going on inside the database were crucial for our users. It also helped us a lot as we developed RavenDB to get to play with it directly and see things in an easy manner. The UI has been at once one of the most trivial of changes and the most profound. On the one hand, we didn’t really make any significant architectural changes in the UI. On the other hand, we re-wrote most of it with the aid of a UX study and a real professional at the helm. That gave us a lot of visible polish that underscores the amount of work that happened in the engine.

How did all of that turn out?

- I initially thought it would take a year to 15 months, with an expected due date of Dec 2016. That was with a team size of about 25 people. Work started in Sep 2015.

- As it turns out, RavenDB accumulated a lot of features in the years it spent in production. We had to evaluate each of them, see how it would fit into our architecture and get it ported. That took a lot of time, especially because in many cases we took the time to change the approach we had for the feature completely.

- By mid 2016 I had already changed the schedule to Jun 2017.

- Close to the end of 2016 we released RavenDB 3.5. This freed up some people to work on the 4.0 release, but also meant we had higher than usual support calls while customers integrated the new release.

- The actual 4.0 release happened in Feb 2018. So just about 30 months from the start, or about double the time I expected it to take.

- We had to cut some features out to make the 4.0 release. All of them are back in the 4.1 release, scheduled for next month.

- This means that to get back to the same place took us 3 years. But we now have a lot of extra features.

- Most of the missing features were pretty minor, though, and rarely used.

What did all of that gain us?

- Performance: Single node. Over 100,000 writes / sec and over 1,000,000 reads / sec in our benchmarks.

- Real world users report a performance boost of x20 to x52.

- Support call duration dropped from days / weeks to about 2 – 4 hours.

- Cross platform on Windows, Linux, ARM and MacOSX.

- We are now deployed to production on Raspberry Pis, because we are the fastest real database on that kind of hardware.

We were over a year overdue, and even with the deadline being extended several times we had to cut some features to actually make the cut for release. The general acceptance of the new release by the community has been a roaring success. We exceeded our own goals for the project, even if we took a lot longer than expected to get there.

Now, for some additional thoughts. We didn’t really re-write the whole thing from scratch. Instead, we had a lot of code that we could at least partially reuse. The storage engine was ported, not re-written, for example. However, we changed architectures in a pretty significant way. For example, the format and manner of working with JSON changed entirely between these two releases. We are a JSON document database. As you can imagine, we pretty much had to modify everything as a result of that.

We didn’t design the whole thing from the start. We had a rough outline and we let things roll from there. As a result of the new architecture and expectations, by the time we hit a particular feature we were able to utilize what we had already learned about how to work with the new architecture to improve things. We also weren’t afraid of changing things multiple times. Authentication had several major design changes midway through, and it ended up so much simpler than what we had before. Even pretty late in the game, we still made significant changes. The RQL support, having a SQL like querying language, came about in the last 20% of the project.

That was a huge change, and I got a lot of “here comes the crazy train again” feedback. This is probably one of the reasons we were delayed by another few months. But it was worth it by far. Basically, because we were able to give up on backward compatibility, we were able to move quickly and change stuff as we wished. We knew that we wouldn’t have another change like that for another decade, so we tried to get the big changes done.

In retrospect, I think it worked quite well. I’m really proud of how RavenDB 4.0 turned out.