I really wanted to leave this series of posts alone. Getting 135 times faster should be fast enough for everyone, just like 640KB was.

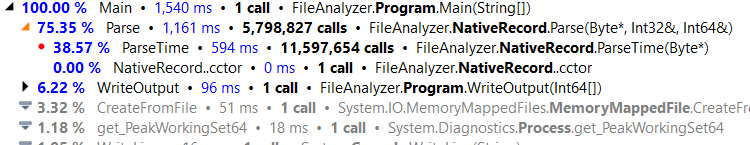

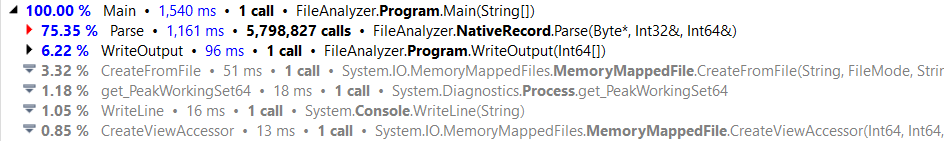

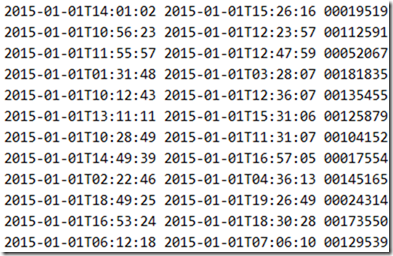

Unfortunately, performance optimization is addictive. Last time, we stopped at 283 ms per run, but we still left some performance on the table. I mean, we had inefficient code like this:

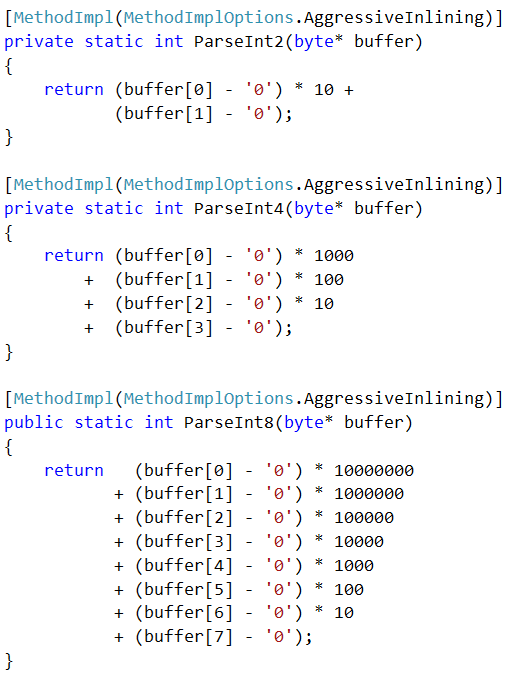

Just look at it. Analysis showed that it is only ever called with 2, 4, or 8, so we naturally simplified things:

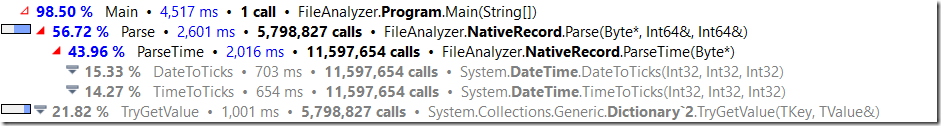
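To illustrate the idea outside the screenshots, here is a minimal sketch in Python. It assumes the input is fixed-width ASCII digits in a byte buffer. The names `parse_digits`, `parse2`, `parse4`, and `parse8` are hypothetical and are not the post's actual methods. The generic version loops over an arbitrary `size`. The specialized versions hard-code the only sizes that occur, so the loop and its bounds check disappear.

```python
def parse_digits(buf: bytes, pos: int, size: int) -> int:
    """Generic version: loop over `size` ASCII digits starting at `pos`."""
    value = 0
    for i in range(pos, pos + size):
        value = value * 10 + (buf[i] - 48)  # 48 == ord("0")
    return value


def parse2(buf: bytes, pos: int) -> int:
    """Specialized: exactly two ASCII digits, no loop."""
    return (buf[pos] - 48) * 10 + (buf[pos + 1] - 48)


def parse4(buf: bytes, pos: int) -> int:
    """Specialized: four digits, built from two 2-digit halves."""
    return parse2(buf, pos) * 100 + parse2(buf, pos + 2)


def parse8(buf: bytes, pos: int) -> int:
    """Specialized: eight digits, built from two 4-digit halves."""
    return parse4(buf, pos) * 10_000 + parse4(buf, pos + 4)
```

For example, `parse4(b"2015-01-01", 0)` gives `2015` and `parse2(b"2015-01-01", 5)` gives `1`. In a compiled language, small fixed-size helpers like these are also good candidates for forced inlining.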

Forcing those methods to be inlined also helped, and brought us down to about 240 ms.

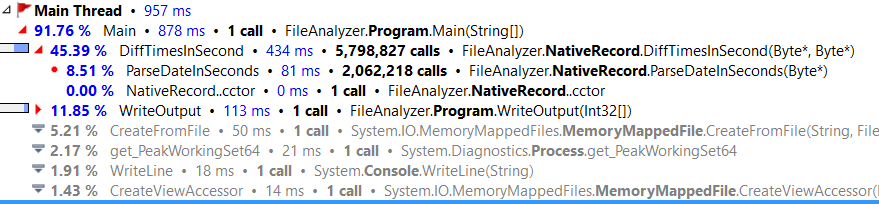

Another cost was the date diff calculation. We had already optimized for the case where both timestamps fall on the same day, but our dataset has about 2 million records that cross midnight. So we also optimized for the scenario where the year and month are the same and only the day differs. That brought us down to about 220 ms.

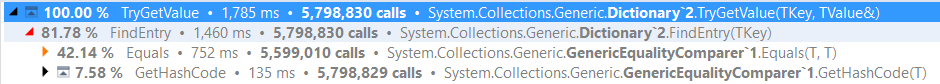

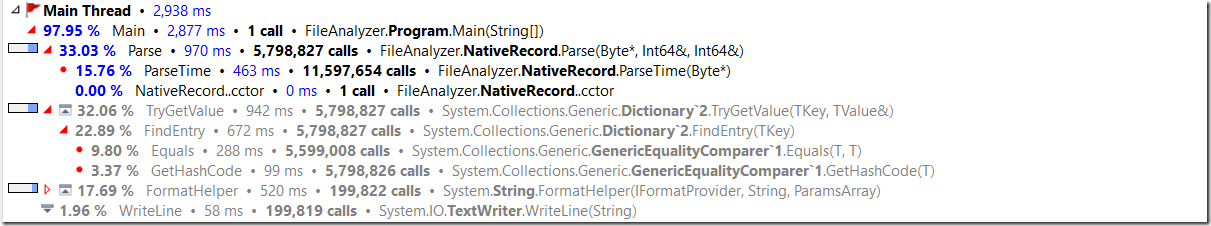

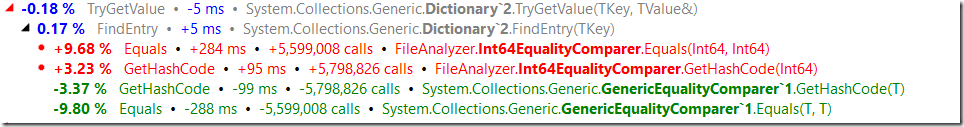
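The tiered fast paths can be sketched like this. This is a Python illustration, not the post's code. It assumes each timestamp has already been parsed into a hypothetical `(year, month, day, hour, minute, second)` tuple and that times are naive, with no time zones or DST. The cheapest checks come first. Only records that cross a month or year boundary fall through to full calendar arithmetic.

```python
from datetime import datetime

# Hypothetical parsed timestamp: (year, month, day, hour, minute, second).
Stamp = tuple[int, int, int, int, int, int]

SECONDS_PER_DAY = 86_400


def seconds_of_day(h: int, m: int, s: int) -> int:
    return h * 3600 + m * 60 + s


def seconds_between(start: Stamp, end: Stamp) -> int:
    """Seconds from start to end, taking cheap paths when possible (naive times)."""
    sy, smo, sd, sh, smi, ss = start
    ey, emo, ed, eh, emi, es = end
    delta_tod = seconds_of_day(eh, emi, es) - seconds_of_day(sh, smi, ss)
    if sy == ey and smo == emo:
        if sd == ed:
            # Fast path 1: same day, only the time of day differs.
            return delta_tod
        # Fast path 2: same year and month, the record crosses midnight.
        return (ed - sd) * SECONDS_PER_DAY + delta_tod
    # Slow path: month or year boundary, so let datetime apply calendar rules.
    return int((datetime(*end) - datetime(*start)).total_seconds())
```

Within a single month, every day has the same length when DST is ignored. That is why the second fast path can multiply the day difference by 86,400 without consulting a calendar.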

At this point, the profiler was basically laughing at us and we had no real avenues left, so I made the code use 4 threads, each processing a different part of the file.
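Here is one way to split the work, as a sketch. It assumes a line-oriented file, and for brevity the input is an in-memory `bytes` object. The per-chunk work is a made-up "sum the value per key" task, and all the function names are hypothetical. Each cut point is pushed forward to just past the next newline, so no record is split between two workers. Each worker produces a partial result, and the partials are merged at the end. One caveat: in CPython the GIL prevents threads from speeding up pure-Python parsing like this. The partitioning and merging carry over unchanged to `ProcessPoolExecutor` or to a runtime with truly parallel threads.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def chunk_ranges(data: bytes, parts: int) -> list[tuple[int, int]]:
    """Split data into `parts` contiguous [start, end) ranges that begin on line starts."""
    size = len(data)
    cuts = [0]
    for i in range(1, parts):
        guess = size * i // parts
        # Move the cut to just past the next newline so no line is split.
        nl = data.find(b"\n", guess)
        cuts.append(size if nl == -1 else nl + 1)
    cuts.append(size)
    return list(zip(cuts, cuts[1:]))


def process_range(data: bytes, start: int, end: int) -> Counter:
    """Hypothetical per-chunk work: sum the value column per key ("key value" lines)."""
    totals: Counter = Counter()
    for line in data[start:end].splitlines():
        if line:
            key, value = line.split()
            totals[key] += int(value)
    return totals


def process_parallel(data: bytes, parts: int = 4) -> Counter:
    """Run one worker per range, then merge the partial results."""
    merged: Counter = Counter()
    with ThreadPoolExecutor(max_workers=parts) as pool:
        futures = [pool.submit(process_range, data, s, e)
                   for s, e in chunk_ranges(data, parts)]
        for f in futures:
            merged.update(f.result())
    return merged
```

Because each worker only touches its own range and its own `Counter`, the workers need no locking. The only shared step is the final merge.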

That gave me 73 ms per run, 5,640 KB allocated, and a peak working set of 300,580 KB:

- 527 times faster than the original version.
- Allocates 1,350 times less memory.
- One third of the original working set.
- Able to process 3.7 GB/sec.

Note that at this point we are relying on the file being in the file system cache. If I were reading it from disk, I wouldn't be able to get more than 100 to 200 MB/sec.
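Here is a quick back-of-envelope check using only the figures quoted in this post. The input size isn't stated here, so it is inferred from the throughput, and the 135× figure is assumed to refer to the earlier 283 ms result:

$$
\begin{aligned}
\text{data per run} &\approx 3.7\ \text{GB/s} \times 0.073\ \text{s} \approx 0.27\ \text{GB} \approx 270\ \text{MB} \\
\text{original run time} &\approx 73\ \text{ms} \times 527 \approx 38.5\ \text{s} \quad (\text{cf. } 283\ \text{ms} \times 135 \approx 38.2\ \text{s}) \\
\text{original allocations} &\approx 5{,}640\ \text{KB} \times 1{,}350 \approx 7.6 \times 10^{6}\ \text{KB} \\
\text{read time from disk} &\approx \frac{270\ \text{MB}}{100 \text{ to } 200\ \text{MB/s}} \approx 1.35 \text{ to } 2.7\ \text{s}
\end{aligned}
$$

A cold read would therefore take roughly 18 to 37 times longer than the 73 ms measured here. This is why the numbers only hold with a warm file system cache.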

Here is the full code. Write code like this at your own peril.