Making code fasterSpecialization make it faster still

Okay, at this point we are really pushing it, but I do wonder if we can get it faster still?

So we spend a lot of time in the ParseTime call, parsing two dates and then subtracting them. I wonder if we really need to do that?

I wrote two optimizations, once to compare only the time part if they are the same, and the second to do the date compare in seconds, instead of ticks. Here is what this looks like:

Note that we compare the first 12 bytes using just 2 instructions (by comparing long & int values), since we don’t care what they are, only that they are equal. The result:

283 ms and allocated 1,296 kb with peak working set of 295,200 kb

So we are now 135 times faster than the original version.

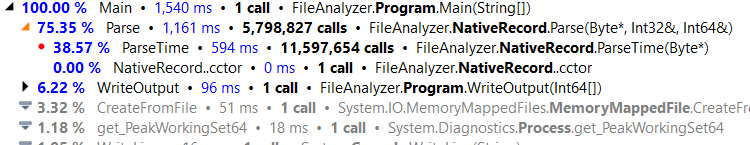

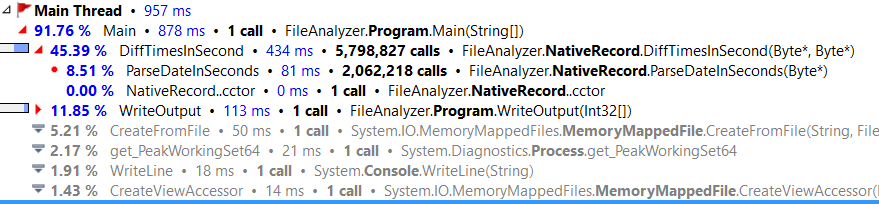

Here is the profiler output:

And at this point, I think that we are pretty much completely done. We can parse a line in under 75 nanoseconds, and we can process about 1 GB a second on this machine ( my year old plus laptop ).

We can see that the skipping the date compare for time compare if we can pay off in about 65% of the cases, so that is probably a nice boost right there. But I really can’t think of anything else that we can do here that can improve matters in any meaningful way.

For comparison purposes.

- 38,478 ms

- 7,612,741 kb allocated

- 874,660 kb peak working set

- 50 lines of code

- Extremely readable

- Easy to change

- 283 ms

- 1,296 kb allocated

- 295,200 kb peak working set

- 180 lines of code

- Highly specific and require specialize knowledge

- Hard to change

So yes, that is 135 times faster, but the first version took about 10 minutes to write, then another half an hour to fiddle with it to make it non obviously inefficient. The final version took several days of careful though, analysis of the data and careful optimizations.

More posts in "Making code faster" series:

- (24 Nov 2016) Micro optimizations and parallel work

- (23 Nov 2016) Specialization make it faster still

- (22 Nov 2016) That pesky dictionary

- (21 Nov 2016) Streamlining the output

- (18 Nov 2016) Pulling out the profiler

- (17 Nov 2016) I like my performance unsafely

- (16 Nov 2016) Going down the I/O chute

- (15 Nov 2016) Starting from scratch

- (14 Nov 2016) The obvious costs

- (11 Nov 2016) The interview question

Comments

Several days to save one minute. It reminds me of the Terence Parr motto:

See https://news.ycombinator.com/item?id=8293390 http://parrt.cs.usfca.edu/

I look forward to the automated optimizer :-)

Carsten, a) This didn't actually take several days to write, you understand. b) And for hot paths, this is more than worth it.

Since at this point, making the code faster by few instructions could make a difference, have you considered using

&instead of&&in the condition?It would save one conditional jump, which, depending on the distribution of the data, could be hard for the CPU to predict.

I am glad you mentioned the cost of maintainability and readability. For me personally those two factors are incredibly important. Even more so when you work in a company with a lot of developers. Personally, I felt the solution that was done in around 3 seconds was the happy midpoint. It was readable and maintainable both to a very high degree but saw a massive reduction in time taken to execute the code.

Probably more importantly, why are you comparing the first 12 bytes? As far as I can tell, that includes the first digit of the time, so if the date is the same, but the first digit of time isn't, you're unnecessarily using the slow path. This means that casting to

short*instead ofint*could help by increasing the fraction of cases that use the fast path.Is this correct that most speed up was obtained with MemoryMappedFile.CreateFromFile thing? Can anyone explain what is this and how it works? I've read a little bit but still don't get why is it that fast, after all it has to read 276 Mb from disk and it does it under a second? How?

Another question: what would happen if file would be larger than ram size?

Memory-mapping a file takes advantage of the OS's virtual memory capabilities, so the OS handles the business of reading pages into memory and flushing/evicting those which are no longer needed. The size of physical RAM is largely irrelevant since only a part of the file is mapped at a time, but if RAM is too tight then the OS will have to page more often which will put more load on the IO subsystem.

The IO speed in this case is down to the use of a solid-state disk. 276MB would take rather longer to read from a conventional spinning disk.

To clarify, the main benefit of memory-mapping in this case (I think) is that it drastically reduces the number of syscalls and memory copies required to access the data.

Reading a typical stream:

And that's assuming that the application is using a buffered stream. Otherwise, pretty much every tiny read could result in a syscall.

Reading a memmapped file effectively bypasses the second step, mapping the page read from disk directly into the application's memory space. By using unsafe code and dealing with the bytes in-place, we can skip the third step too.

Ok, thanks, that make more sense now. Also @Ayende just a check: I've found here that super fast speed might be because of windows cache: http://stackoverflow.com/questions/26456195/why-is-reading-from-a-memory-mapped-file-so-fast#comment41553531_26456195 Is this taken into account?

Even for ssd this looks too good to be true:

http://unix.stackexchange.com/questions/88796/are-these-throughput-numbers-typical-for-an-ssd

Oops, ignore the last comment, it seems that 600Mb\s for ssd on windows is possible...

Svick, & is supposed to be more expensive. It forces us to compare both ends, while && will short circuit.

However, the cost of doing the comparison vs. the conditional jump is probably worth testing.

Svick, You are correct, I should have used a short here, not an int

ren, Yes, we don't really take I/O into account here, since we are mostly reading from the system cache.

It looks like your code is fast but incorrect.

Original article said:

But line 22 of the Final Version code seems to swap start and end):

I see that you're using the Trace mode of dotTrace to do your performance profiling. This adds overhead to each called method to track the number of calls and for "free" methods, like property getters and such, it adds a not-so-insignificant overhead. I wonder what your performance profile results would look like if you switched to sampling, would you see the same results?

Lasse, It is possible, yes, but I found that this is much clearer for me to track down costs and optimize.

Comment preview