Bleve is a Go search engine library, which means that it hits a few good points for me. It is interesting, it is familiar ground, and it is written in a language that I’m not too familiar with, so it is a great chance to learn some more.

I reviewed revision: 298302a511a184dbab2c401e2005c1ce9589a001

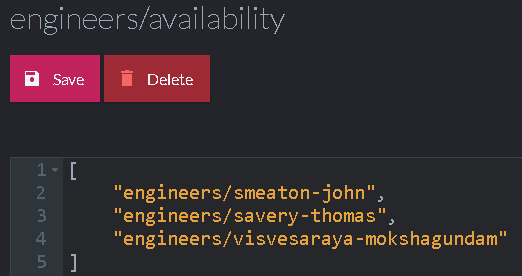

I like to start with reading from the bottom up, and in this case, the very first thing that I looked at was the storage level. Bleve uses a pluggable storage engine and currently has support for:

- BoltDB

- LevelDB

- Moss

- In memory tree

This is interesting, if only because I put BoltDB and Moss on my queue of projects to read.

The actual persistent format for Bleve is very well documented here, which makes it much easier to understand what is going on. The way Bleve uses the storage, it has a flat key/value store view of the world and needs prefix range queries. Nothing else is required. Navigating the code is a bit hard for me as someone who isn’t too familiar with Go, but the interesting things start here, in scorch.go (no idea why this is called scorch, though).
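To make that storage requirement concrete, here is a minimal sketch of a flat, ordered key/value store that supports prefix range scans. This is not Bleve's code: the `kvStore` type, its methods, and the key layout are all made up for illustration.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// kvStore is a hypothetical stand-in for a flat, ordered key/value store.
type kvStore struct {
	keys []string          // kept sorted, so a prefix scan is one contiguous range
	vals map[string]string // key -> value
}

func newKVStore() *kvStore {
	return &kvStore{vals: make(map[string]string)}
}

// Set inserts or overwrites a key while keeping the key list sorted.
func (s *kvStore) Set(key, val string) {
	if _, ok := s.vals[key]; !ok {
		i := sort.SearchStrings(s.keys, key)
		s.keys = append(s.keys, "")
		copy(s.keys[i+1:], s.keys[i:])
		s.keys[i] = key
	}
	s.vals[key] = val
}

// PrefixScan returns every key that starts with prefix, in key order.
func (s *kvStore) PrefixScan(prefix string) []string {
	var out []string
	// Seek to the first key >= prefix, then walk until the prefix stops matching.
	for i := sort.SearchStrings(s.keys, prefix); i < len(s.keys); i++ {
		if !strings.HasPrefix(s.keys[i], prefix) {
			break
		}
		out = append(out, s.keys[i])
	}
	return out
}

func main() {
	s := newKVStore()
	// The "t/<field>/<term>" layout is invented for this example.
	s.Set("t/title/go", "doc1,doc3")
	s.Set("t/title/search", "doc2")
	s.Set("t/body/go", "doc1")
	for _, k := range s.PrefixScan("t/title/") {
		fmt.Println(k, "=>", s.vals[k])
	}
}
```

The point is that get, set and "seek to a prefix and iterate" are enough, which is why so many different engines can be plugged in underneath.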

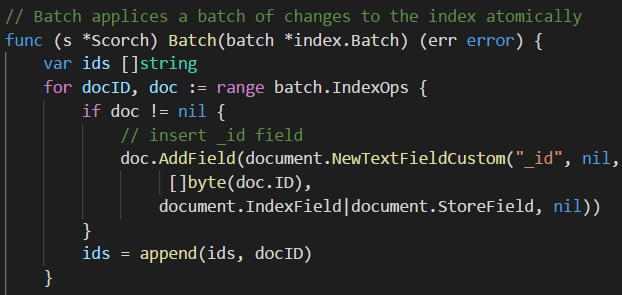

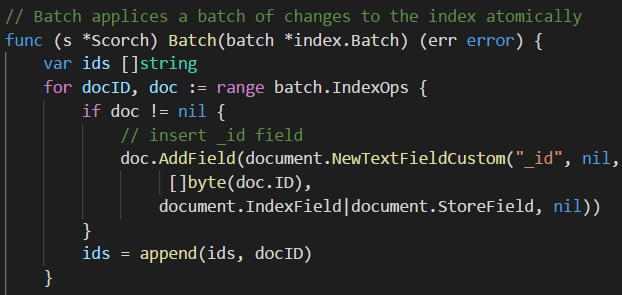

We get a batch of changes, and run over them, adding an _id field to the document. So far, pretty simple to figure out. The next part is interesting:

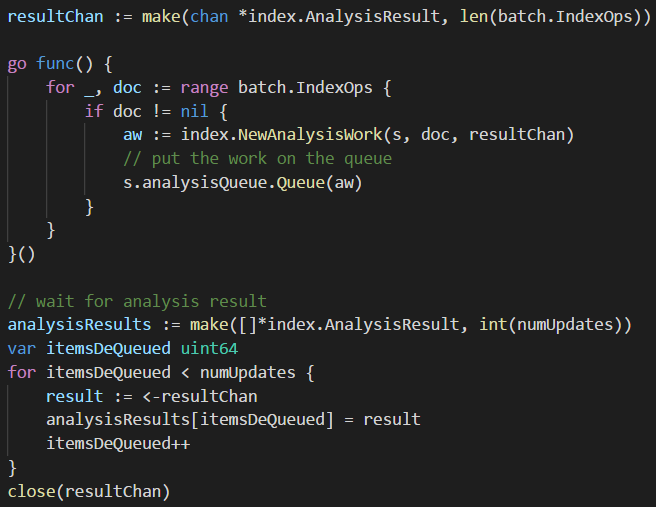

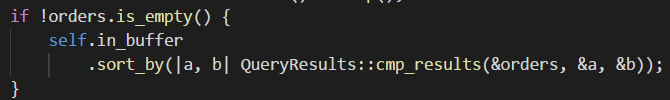

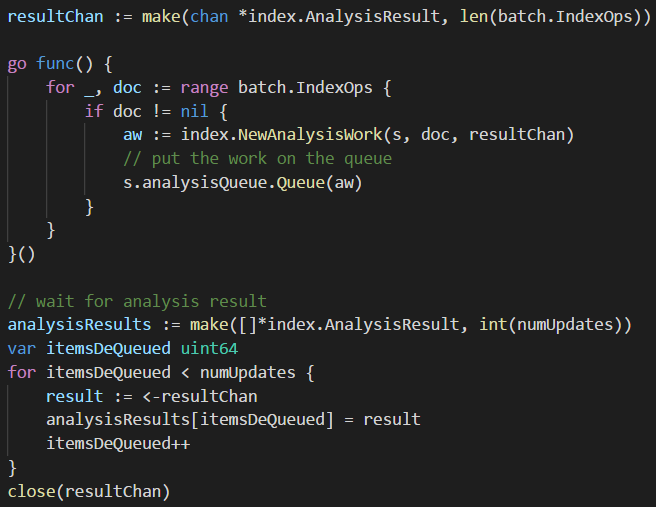

You can see that we are running in parallel here, starting the analysis work and queuing it all up. Bleve then waits for the analysis to run. I’ll dig a bit deeper into how that works in a bit; first I want to understand how the whole batch concept works.
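The general pattern of fanning work out and then waiting for all of it can be sketched roughly like this. It is a toy, not Bleve's implementation: `analysisResult`, `analyzeBatch` and the "lowercase and split" analysis are assumptions I made up for the example.

```go
package main

import (
	"fmt"
	"strings"
)

// analysisResult is a hypothetical result type: the tokens for one document.
type analysisResult struct {
	docID  string
	tokens []string
}

// analyzeBatch starts one goroutine per document, then blocks until
// one result per document has come back on the results channel.
func analyzeBatch(docs map[string]string) map[string][]string {
	// Buffered so that no worker blocks on send.
	results := make(chan analysisResult, len(docs))
	for id, body := range docs {
		go func(id, body string) {
			// Toy "analysis": lowercase and split on whitespace.
			tokens := strings.Fields(strings.ToLower(body))
			results <- analysisResult{docID: id, tokens: tokens}
		}(id, body)
	}

	out := make(map[string][]string, len(docs))
	for i := 0; i < len(docs); i++ {
		r := <-results // wait for the next finished analysis
		out[r.docID] = r.tokens
	}
	return out
}

func main() {
	out := analyzeBatch(map[string]string{
		"doc1": "Bleve is a Go search library",
		"doc2": "Lucene is written in Java",
	})
	fmt.Println(len(out["doc1"]), len(out["doc2"]))
}
```

A real implementation would likely bound the number of workers instead of spawning one goroutine per document.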

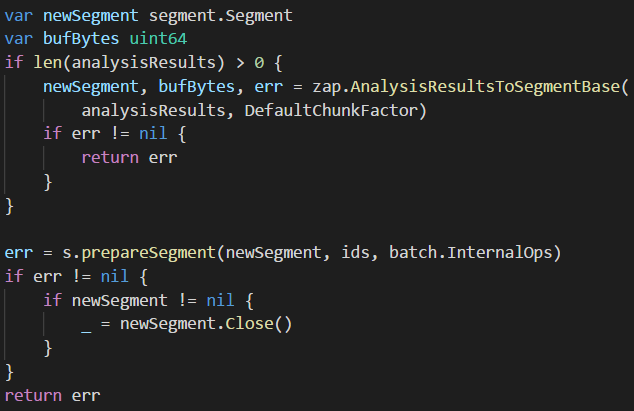

So that tells us some interesting things. First, even though there is the concept of a store, there is also this idea of a segment. I’m familiar with this from Lucene, but there it is tied very closely to the on disk format. Before looking at the analysis, let’s look at this concept of segments.

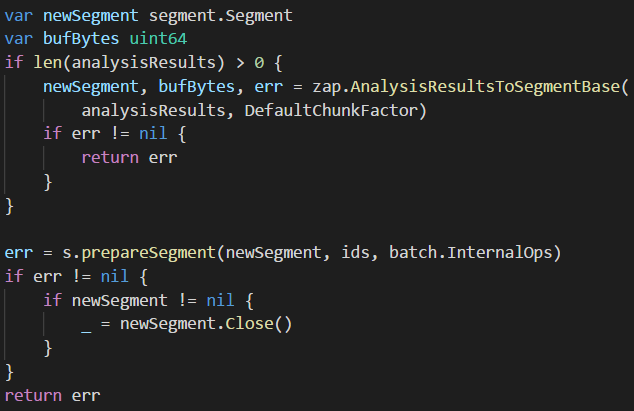

The “zap” package, in this context, seems to refer to the encoding that is used to store the analysis results. It looks like it runs over all the results of the batch and writes them into a single binary value. This is very similar to the way Lucene works so far, although I’m still confused about the key/value store. What is happening is that after the segment is created, it is sent to prepareSegment. This eventually sends it to a Go channel that is used in the Scorch.mainLoop function (which is being run as a separate goroutine).

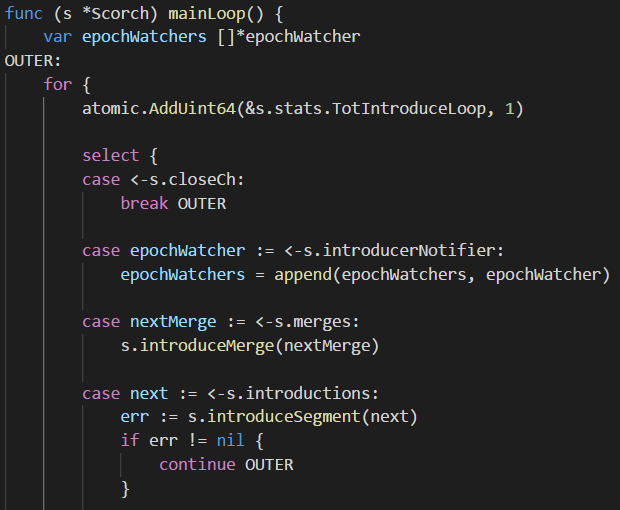

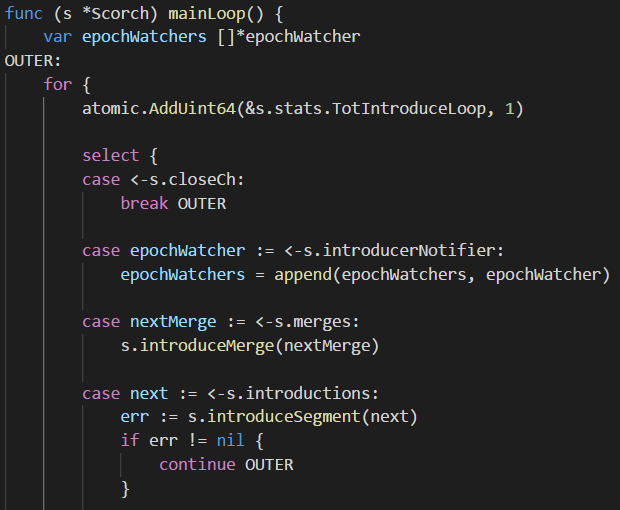

Here is the relevant code:

The last bit is the one that is handling the segment introduction, whatever that is. Note that this seems to be strongly related to the store, so hopefully we’ll see why this is showing up here. What seems to be going on here is that there is a lot of concurrency in the process; the code spawns multiple goroutines to do work. The mainLoop is just one of them. The persisterLoop is another, and so is the mergerLoop. All of which sounds very much like how Lucene works.
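As I understand the shape of it, a loop like this owns some state and receives work over channels. Here is a minimal sketch of that pattern under my own assumptions; the `index` and `segmentIntroduction` types and their fields are hypothetical and far simpler than whatever Bleve actually does.

```go
package main

import "fmt"

// segmentIntroduction is a hypothetical message announcing a new segment.
type segmentIntroduction struct {
	id      int
	applied chan struct{} // closed once the loop has processed the message
}

// index is a hypothetical owner of the segment list.
type index struct {
	introductions chan *segmentIntroduction
	closeCh       chan struct{}
	done          chan struct{}
	segments      []int // only touched by mainLoop while it is running
}

func newIndex() *index {
	idx := &index{
		introductions: make(chan *segmentIntroduction),
		closeCh:       make(chan struct{}),
		done:          make(chan struct{}),
	}
	go idx.mainLoop()
	return idx
}

// mainLoop serializes all changes to the segment list in one goroutine.
func (idx *index) mainLoop() {
	defer close(idx.done)
	for {
		select {
		case <-idx.closeCh:
			return
		case intro := <-idx.introductions:
			idx.segments = append(idx.segments, intro.id)
			close(intro.applied) // tell the sender the segment is visible
		}
	}
}

// introduce hands a segment to the loop and waits until it is applied.
func (idx *index) introduce(id int) {
	intro := &segmentIntroduction{id: id, applied: make(chan struct{})}
	idx.introductions <- intro
	<-intro.applied
}

// shutdown stops the loop and returns the final segment list.
func (idx *index) shutdown() []int {
	close(idx.closeCh)
	<-idx.done
	return idx.segments
}

func main() {
	idx := newIndex()
	idx.introduce(1)
	idx.introduce(2)
	fmt.Println(idx.shutdown())
}
```

Because only the loop goroutine mutates the list, no lock is needed around it, at the cost of every writer going through the channel.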

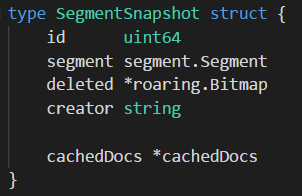

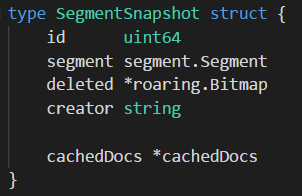

I’m still not sure how this is all tied together. So I’m going to follow just this path for now and see what is going on with these segments. A lot of the work seems to be around managing this structure:

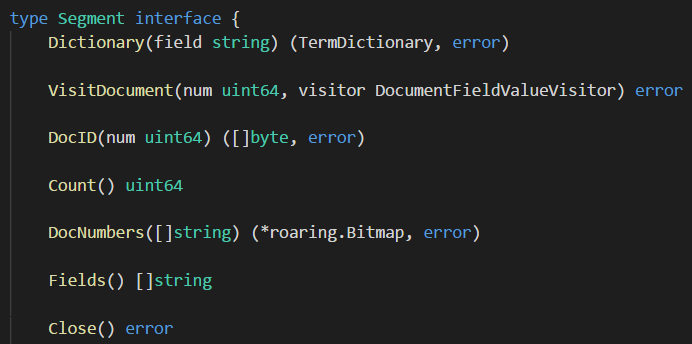

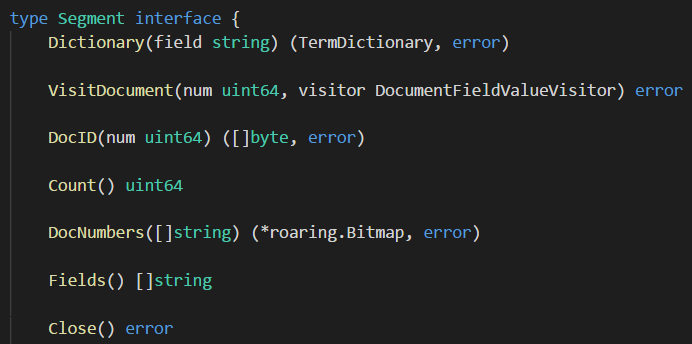

The Segment itself is an interface with the following definition:

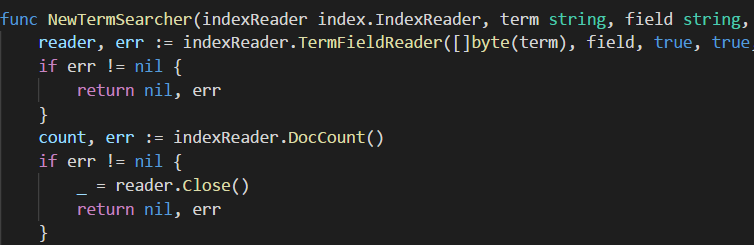

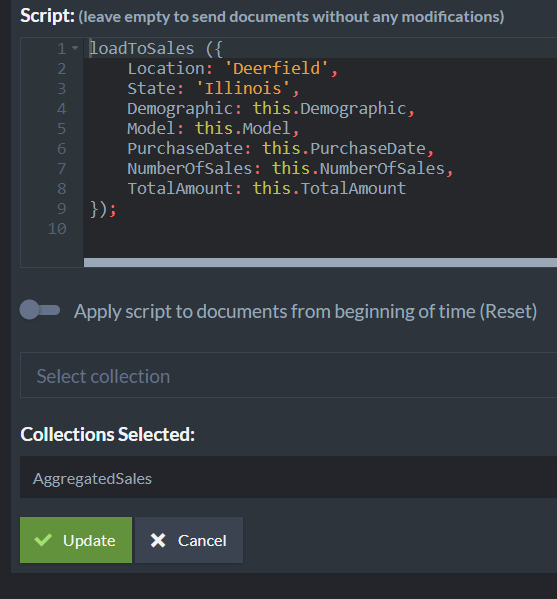

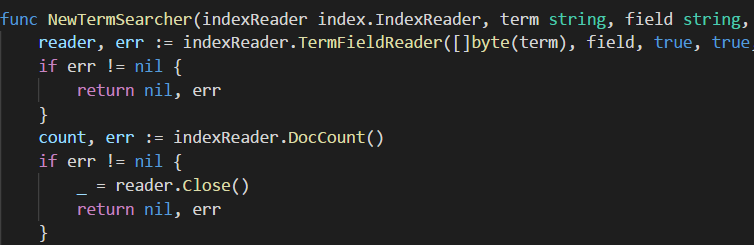

There are in-memory and mmap versions of this interface, it seems. So far, I’m not following the relation between the storage interface and this segments idea. I think that I’m lost here, so I’m going to go a slightly different route. Instead of seeing how Bleve writes stuff, let’s focus on how it reads. I’ll try to follow the path of a query. This path of inquiry leads me to this guy:

Again, very similar to Lucene. And the TermFieldReader is where we are probably going to get the matches for this particular term (field, value). Let’s dig into that. And indeed, following the code for this method leads to the inverted index, called upside_down in this code. I managed to find how the terms are being read, and it makes perfect sense. Exactly as expected, it does a range query and parses both keys and values for the relevant data. Still not seeing why there is the need for segments.

And here is where things start to come together. Bleve uses the key/value interface to store some data that it searches on, but document values are stored in segments, and are loaded directly from there on demand. At a glance, it looks like the zap encoding is used to store values in chunks. It looks like I didn’t pay attention before, but the zap format is actually documented and it is very helpful. Basically, all the per document (vs. per term / field) data is located there, as well as a few other things.

I think that this is where I’ll stop. The codebase is interesting, but I now know enough to have a feeling for how things work. Some closing thoughts:

- Really good docs.

- I didn’t use my usual “read the project in lexical file order” to figure out things, and I had a hard time navigating the codebase because of that. Probably my lack of Go chops.

- There seems to be a lot more concurrency than I’m used to, for stuff that I would usually assume to be single threaded. I’m aware that Go has builtin concurrency primitives and it is more common to use them there, but it seems strange to see. As a consumer of search libraries, I’m not sure that I’m happy about this. I like to control my threading behaviors.

- It seems that a lot of the data is held either in memory (mmap), in a format that requires work to handle, or in the key/value store, again in a format that requires work.

The problem with work is that you have to do it each and every time. I’m used to Lucene (read it once from disk and keep a cached version in memory that is very fast) or Voron, in which the data is held in memory and can be accessed with zero work.

I didn’t get to any of the core parts of the library (analysis, full text search). This is because they aren’t likely to be that different and they are full of the storage interaction details that I just went over.

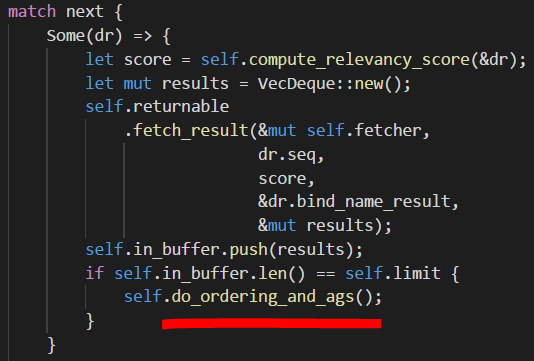

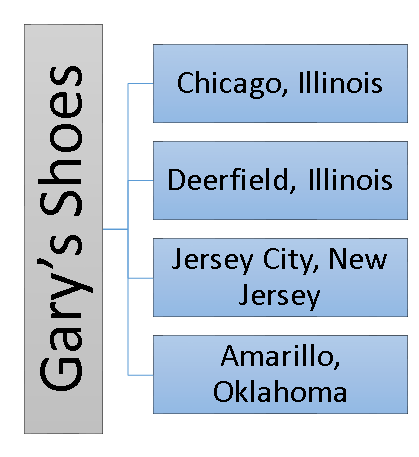

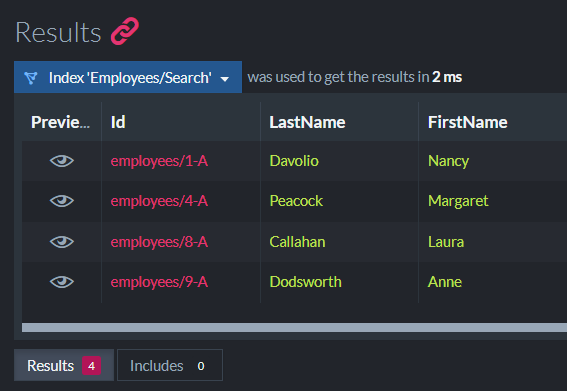

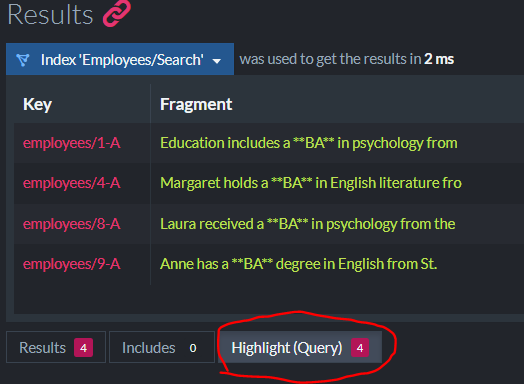

For my needs, I’m mostly interested in being able to understand this type of query:
