Daisy chaining data flow in RavenDB

I have talked before about RavenDB’s MapReduce indexes and their ability to output results to a collection, as well as RavenDB’s ETL processes and how we can use them to push data to another database (either a RavenDB database or a relational one).

Bringing these two features together can be surprisingly useful when you start talking about globally distributed processing. A concrete example might make this easier to understand.

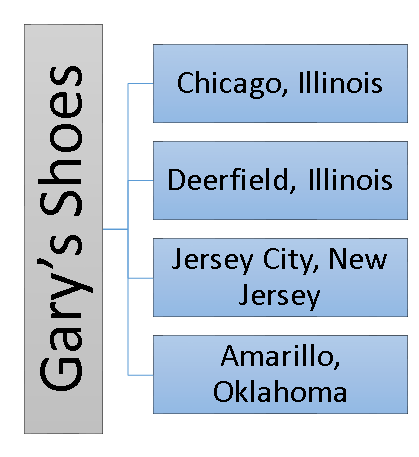

Imagine a shoe store (we’ll go with Gary’s Shoes) that needs to track sales across a large number of locations. Because sales must be processed regardless of the connection status, each store hosts a RavenDB server to record its sales. Here is the geographic distribution of the stores:

To properly manage this chain of stores, we need to be able to look at data across all stores. One way of doing this is to set up external replication from each store location to a central server. This way, all the data is aggregated into a single location. In most cases, this would be the natural thing to do. In fact, you would probably want two-way replication of most of the data so you could figure out if a given store has a specific shoe in stock by just looking at the local copy of its inventory. But for the purpose of this discussion, we’ll assume that there are enough shoe sales that we don’t actually want to have all the sales replicated.

We just want some aggregated data. But we want this data aggregated across all stores, not just at one individual store. Here’s how we can handle this: we’ll define an index that aggregates the sales across the dimensions we care about (model, date, demographic, etc.). This index can answer the kind of queries we want, but it is defined on each store’s database, so it can only provide information about local sales, not about what happens across all the stores. Let’s fix that. We’ll change the index to have an output collection. This will cause it to write all its output as documents to a dedicated collection.

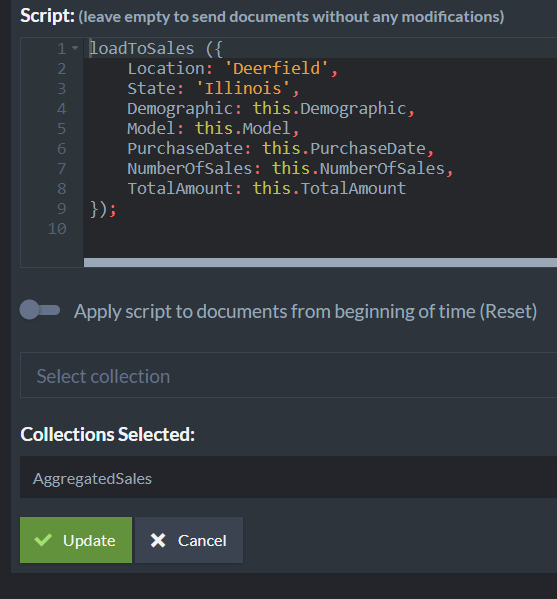
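To make the idea of an index writing its results out as documents more concrete, here is a minimal Python simulation of what happens at a single store. It is not RavenDB code: the field names (`model`, `date`, `quantity`, `total`), the `AggregatedSales/...` document ids, and all function names are assumptions made up for this sketch.

```python
from collections import defaultdict

# Hypothetical sale records for one store; field names are illustrative only.
sales = [
    {"model": "runner-x", "date": "2018-03-01", "quantity": 2, "total": 180.0},
    {"model": "runner-x", "date": "2018-03-01", "quantity": 1, "total": 90.0},
    {"model": "loafer-z", "date": "2018-03-01", "quantity": 1, "total": 120.0},
]

def map_sale(sale):
    """Map step: emit one (key, value) pair per sale."""
    key = (sale["model"], sale["date"])
    return key, {"quantity": sale["quantity"], "total": sale["total"]}

def reduce_sales(mapped):
    """Reduce step: sum quantity and total for each (model, date) key."""
    groups = defaultdict(lambda: {"quantity": 0, "total": 0.0})
    for key, value in mapped:
        groups[key]["quantity"] += value["quantity"]
        groups[key]["total"] += value["total"]
    return groups

def to_output_collection(groups):
    """Turn each reduce result into a document with a made-up, deterministic id."""
    return {
        f"AggregatedSales/{model}/{date}": {"model": model, "date": date, **totals}
        for (model, date), totals in groups.items()
    }

aggregated_sales = to_output_collection(reduce_sales(map(map_sale, sales)))
```

The point of the last step is that the reduce results stop being index-only data and become ordinary documents, which is what lets other features act on them.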

Why does this matter? These documents will be written solely by the index, but given that they are documents, they obey all the usual rules and can be acted upon like any other document. In particular, this means that we can apply an ETL process to them. Here is what this ETL script would look like.

The script sends the aggregated sales (the collection generated by the MapReduce index) to a central server. Note that we also added some static fields that will be helpful on the remote server, so we can tell which store each aggregated sale came from. At the central server, you can work with these aggregated sales documents to look at each store’s details, or you can aggregate them again to see the state across the entire chain.
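The two halves of that flow, tagging each aggregated document with its store and then aggregating again at the central server, can be sketched in Python as follows. This is a simulation under stated assumptions rather than an actual RavenDB ETL script: `etl_transform`, `central_reduce`, the `store_id` field, and the store names are all hypothetical.

```python
from collections import defaultdict

def etl_transform(doc, store_id):
    """Copy the aggregated document and tag it with a static store field."""
    return {**doc, "store_id": store_id}

def central_reduce(docs):
    """Re-aggregate per-store documents into chain-wide totals per (model, date)."""
    totals = defaultdict(lambda: {"quantity": 0, "total": 0.0, "stores": set()})
    for doc in docs:
        entry = totals[(doc["model"], doc["date"])]
        entry["quantity"] += doc["quantity"]
        entry["total"] += doc["total"]
        entry["stores"].add(doc["store_id"])
    return dict(totals)

# Hypothetical output-collection documents from two stores.
store_outputs = {
    "stores/seattle": [
        {"model": "runner-x", "date": "2018-03-01", "quantity": 3, "total": 270.0},
    ],
    "stores/austin": [
        {"model": "runner-x", "date": "2018-03-01", "quantity": 5, "total": 450.0},
        {"model": "loafer-z", "date": "2018-03-01", "quantity": 1, "total": 120.0},
    ],
}

# What the central server would hold after every store has pushed its documents.
central_docs = [
    etl_transform(doc, store_id)
    for store_id, docs in store_outputs.items()
    for doc in docs
]
chain_totals = central_reduce(central_docs)
```

Because sums can be re-aggregated safely, the central server only ever needs the small per-store summaries, never the raw sales.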

The nice thing about this approach is how the features combine and what they produce together. At the local level, you have independent servers that can work seamlessly with an unreliable network. They also give store managers a good overview of their local state and what is going on inside their own stores.

At the same time, across the entire chain, we have ETL processes that will update the central server with details about sales statuses on an ongoing basis. If there is a network failure, there will be no interruption in service (except that the sales details for a particular store will obviously not be up to date). When the network issue is resolved, the central server will accept all the missing data and update its reports.
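The catch-up behavior after an outage can be illustrated with a small simulation. This is not how RavenDB implements ETL internally; it only shows one way the described behavior could work, assuming the sender remembers how far it got and the receiver overwrites documents by id. The `CentralServer` and `StoreEtl` classes and their members are hypothetical.

```python
class CentralServer:
    def __init__(self):
        self.docs = {}
        self.online = True

    def receive(self, store_id, doc_id, doc):
        if not self.online:
            raise ConnectionError("central server unreachable")
        # Upsert: a resent document overwrites the old copy instead of duplicating it.
        self.docs[(store_id, doc_id)] = doc


class StoreEtl:
    def __init__(self, store_id):
        self.store_id = store_id
        self.changes = []   # ordered (doc_id, doc) updates produced by the local index
        self.last_sent = 0  # how many changes the central server has accepted

    def record(self, doc_id, doc):
        self.changes.append((doc_id, doc))

    def push(self, central):
        """Send every pending change; stop at the first failure and retry later."""
        try:
            for doc_id, doc in self.changes[self.last_sent:]:
                central.receive(self.store_id, doc_id, doc)
                self.last_sent += 1
        except ConnectionError:
            return False
        return True


central = CentralServer()
etl = StoreEtl("stores/seattle")

etl.record("AggregatedSales/runner-x", {"quantity": 3})
pushed_first = etl.push(central)

central.online = False  # network failure: the store keeps recording locally
etl.record("AggregatedSales/runner-x", {"quantity": 4})
etl.record("AggregatedSales/loafer-z", {"quantity": 1})
pushed_offline = etl.push(central)

central.online = True   # network restored: pending changes are delivered
pushed_online = etl.push(central)
```

While the link is down the store loses nothing; the central copy is simply stale until the next successful push.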

The whole process relies entirely on features that already exist in RavenDB and are easily accessible. The end result is a distributed, highly reliable and fault-tolerant MapReduce process that gives you an aggregated view of sales across the entire chain at very little cost.

Comments

Might not be the best to ask it here, but today I just happened to be playing around with this feature. What I noticed was when I created some documents, created a map-reduce index which outputs the reduce results into a collection and then modified the index, then upon index deployment, I got an exception because the output collection was not empty.

Of course, this is a blog post, not a Q&A thread, and I don't know whether this is by design or a bug, but it has made me wonder how such scenarios are going to be handled. I don't know if, as of 4.0.3, there is a way to instruct the index to clear the output collection if the index definition has changed. But in such a distributed setup, how would a change to the index definition (and possibly the results) be handled? Will there be a way to do a "full rebuild" of such artificial documents rather than an incremental update? Or will we have to delete the output collection if the index definition changes and the results need to be rebuilt?

Balázs, updating the same index should be fine. But creating a new index (or a delete / create cycle) will cause an error. Deletion of the artificial collection is done manually.
