Tobi had a few questions about memory mapped files. And it is quite an interesting topic.

A memory mapped file is a feature of every modern operating system. It requires coordination between the memory manager and the I/O subsystem. Basically, you can tell the OS that some file is the backing store for a certain portion of the process memory. In order to understand that, we have to understand virtual memory.

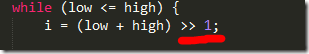

Here is your physical memory, using 32 bits for ease of use:

Now, you have two processes that are running. Each of them gets its own 4 GB address space (actually, on 32-bit Windows only 2 GB is available to the process by default, but that is good enough). What happens when both of those processes obtain a pointer to 0x0232194?

Well, what actually happens is that the pointer that looks like a pointer to physical memory is actually a virtual pointer, which the CPU and the OS work together to map to physical memory. It is obvious from the image that there is a problem here: what happens if two processes use 4 GB each? There isn’t enough physical memory to handle that. This is the point where the page file gets involved. So far, this is pretty basic OS 101 stuff.

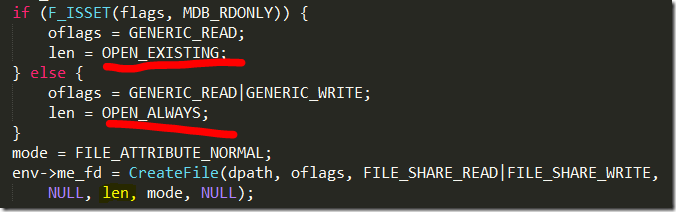

The next stuff is where it gets interesting. So the OS already knows how to evict pages from memory and store them on the file system, because it needs to do that for the page file. The next step is to make use of that for more than just the page file. So you can map any file into your memory space. Once that is done, you can access the part of the memory you have mapped and the OS will load the relevant parts of the file to memory. Again, pretty basic stuff so far.

You can read about this more in this article.

The reason you want to do this sort of thing is that it gives you the ability to work with files as if they were memory. And you don’t have to worry about all that pesky file I/O stuff. The OS will take care of that for you.
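To make that concrete, here is a minimal sketch in Python, whose `mmap` module wraps the OS mapping facilities. The helper name `read_slice_via_mapping` and the scratch file are my own inventions for illustration. The point is only that a range of the file is read by indexing the mapping, with no explicit seek or read call.

```python
import mmap
import os
import tempfile

def read_slice_via_mapping(path, start, length):
    """Return bytes [start, start + length) of the file by indexing a mapping."""
    with open(path, "rb") as f:
        # Length 0 maps the whole file; ACCESS_READ makes the view read-only.
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            # Slicing touches the mapped pages; the OS loads them on demand.
            return view[start:start + length]

# Build a small scratch file to map (hypothetical demo data).
fd, demo_path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    f.write(b"hello, memory mapped world")

demo_slice = read_slice_via_mapping(demo_path, 7, 6)
os.remove(demo_path)
```

Note that mapping an empty file fails, so a real caller would have to check the file size first.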

And now, to Tobi’s questions:

- What are the most important advantages and disadvantages?

Probably the most important advantage is that you don’t have to do manual file I/O. That can drastically reduce the amount of work you have to do, and it can also give you a pretty significant perf boost. Because the I/O is actually being managed by the operating system, you benefit from a lot of accumulated experience in optimizing things. And the OS will do things like give you a page buffer, caching, preloading, etc. It also makes it drastically easier to do parallel I/O safely, since you can read/write from “memory” concurrently without having to deal with a complex API.

As for the disadvantages, you need to be aware that things like the base address may change whenever you re-open the file, and that data structures that are good in memory might not result in good performance if they are stored on disk.
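One common way to cope with the changing base address is to store offsets relative to the start of the file instead of raw pointers. The following is a small Python sketch of that idea, not code from any particular product. The record layout (`NODE`) and the function names are hypothetical.

```python
import mmap
import os
import struct
import tempfile

# Each node is (value, offset of the next node); -1 marks the end of the list.
NODE = struct.Struct("<qq")

def write_list(path, values):
    """Lay out a linked list in a file, linking nodes by file offset."""
    with open(path, "wb") as f:
        for i, value in enumerate(values):
            is_last = i == len(values) - 1
            next_offset = -1 if is_last else (i + 1) * NODE.size
            f.write(NODE.pack(value, next_offset))

def read_list(path):
    """Walk the list through a fresh mapping; only offsets are followed."""
    out = []
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            offset = 0
            while offset != -1:
                value, offset = NODE.unpack_from(view, offset)
                out.append(value)
    return out

fd, list_path = tempfile.mkstemp()
os.close(fd)
write_list(list_path, [10, 20, 30])
walked = read_list(list_path)   # valid no matter where the OS places the mapping
os.remove(list_path)
```

Because nothing in the file depends on where the mapping lands in the address space, the structure stays valid across process restarts.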

Another problem relates to how the changes actually are saved to the file. It is hard to make sure that the writes you do are coherently stored in the file system. For example, let us say that you made changes to two different memory locations, which reside on two different pages. The OS can decide, at any time, to take one of those pages away from you because it needs that memory for other things. When that happens, it will write that page to disk for you. So when you ask for it the next time, it can load it up again and the application won’t notice.

However, what would happen during a crash? You might have partially written data in that scenario. Usually, when writing to files using file I/O routines, you have a very predictable write pattern. When you use memory mapped files for writes, you don’t know for sure in what order the writes are going to happen. The OS is free to choose that. There are ways you can control that. For example, you might use FILE_MAP_COPY to avoid the OS writing stuff for you, but you would have to handle writes yourself now, and that is decidedly not trivial.
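A rough illustration of the copy-on-write idea, using Python's `mmap.ACCESS_COPY`. As far as I know, this corresponds to FILE_MAP_COPY on Windows and to a private mapping elsewhere, but treat that correspondence as my assumption. The function name `edit_private_copy` is made up for this sketch.

```python
import mmap
import os
import tempfile

def edit_private_copy(path, payload):
    """Modify a copy-on-write view and report what memory and disk each hold."""
    with open(path, "r+b") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_COPY) as view:
            view[:len(payload)] = payload          # changes only the private pages
            seen_in_memory = view[:len(payload)]
    with open(path, "rb") as f:
        on_disk = f.read(len(payload))             # the file itself is untouched
    return seen_in_memory, on_disk

fd, cow_path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    f.write(b"abcdef")

in_memory, on_disk = edit_private_copy(cow_path, b"XYZ")
os.remove(cow_path)
```

The OS never writes those pages back, which is exactly why persisting the changes becomes your job.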

You can use something like FlushViewOfFile() to make sure that a specific range is flushed, but that still doesn’t mean that the pages will be written in any order that you might expect. As a db writer, I care, quite deeply, about the exact way the data is written to file, because otherwise it is really hard to reason about how to read it back. Especially when you have to consider failures and corruptions.

Personally, I wouldn’t be writing critical data using memory mapped files.

- Why are well known products like SQL Server using custom caches instead of memory mappings?

SQL Server is actually its own operating system. It manages memory, locks, threads, I/O and a lot more. That comes from the fact that for a long time, it had to do those sorts of things. But note that SQL Server probably uses memory mapped files quite a lot. But they are also using their own custom caches because it makes sense for them. For example, the query plan cache. Even when you do have memory mapped files, you usually have multiple layers of caching.

In RavenDB, for example, we have the native page cache (managed by Esent), the documents cache (managed by RavenDB) and the client cache. The reason that we have multiple layers is that we cache different things. The client cache avoids having to call the server. The documents cache avoids having to parse documents, and the native page cache avoids having to go to disk.

- Why are other products like LevelDB using mmap instead of custom caches?

Because they are drastically simpler products. They really want to just give you the data as quickly as possible, and they don’t need to do additional processing of the data. They can lean on the OS page cache to a much larger extent. Note that when they are used in real products, there are often higher level caches layered on top anyway, for storing processed / parsed information.

- Are they well suited for managed code?

Memory mapped files are usually harder to use from managed code, because we don’t do our own memory management. It does mean that you lose the benefit of just treating this as part of your memory space, because there is a very clear line between managed memory and native memory, and memory mapped files are on the other side of the hatch. That said, you can easily use them via UnmanagedMemoryStream, or read from them directly via a memory accessor such as MemoryMappedViewAccessor. The managed implementation of LevelDB makes heavy use of memory mapped files.

- How does performance compare with other techniques?

It is generally better, mostly because the OS is really good at managing paging, and because you rely directly on the low level I/O routines. Another thing that you have to remember is that if you use file I/O, you need to create a buffer, copy to/from that buffer, etc. Using memory mapped files saves all of that.

- Is it possible to use them with mutable data structures and retain crash recoverability?

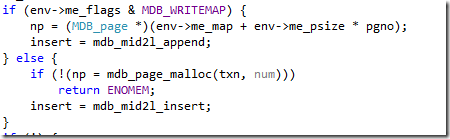

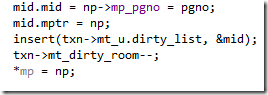

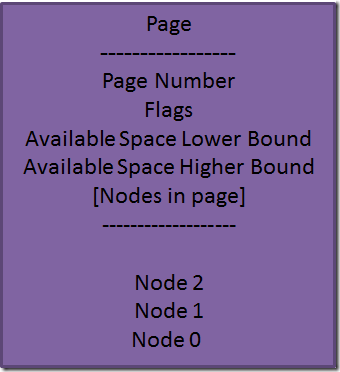

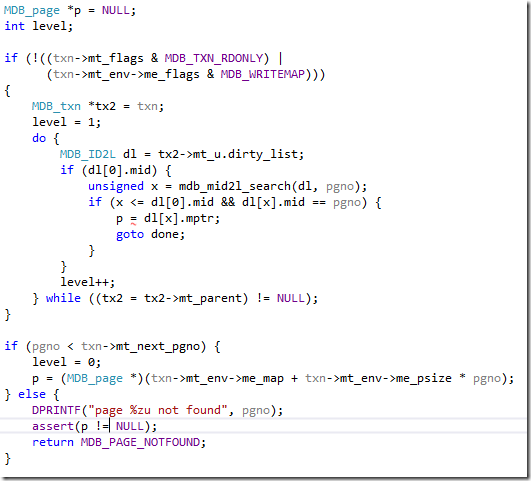

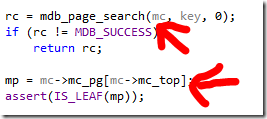

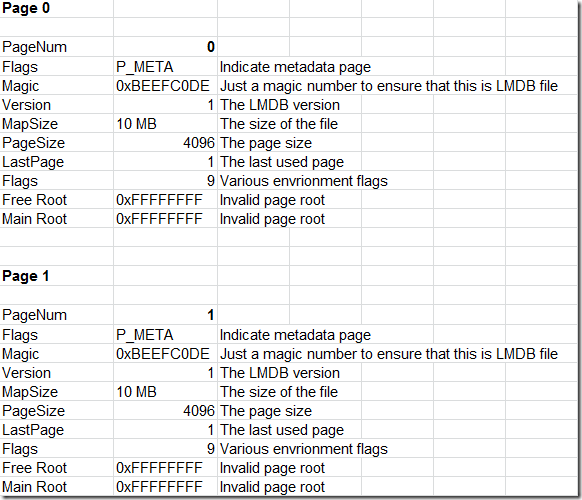

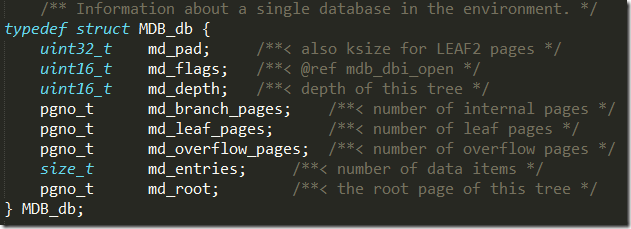

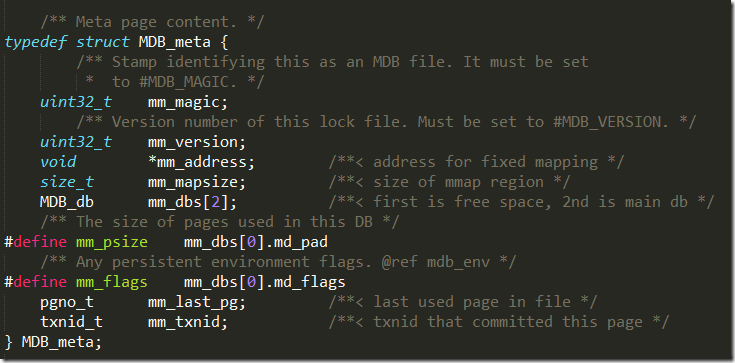

Yes, but… is probably the best answer. Yes, you can use them for mutable data, but you really want to be careful about how you do it. In particular, you need to make sure that you write in such a way that you can survive partial writes. A good way of doing that is to make writes to specific pages, and you “commit” by recording that those pages are now available on a metadata page, or something like that. This requires a lot of really careful work, to be honest. Probably more work than you would like it to be. LMDB does it this way, and even if the code wasn’t an eye tearing pain, what it is doing is quite hard.
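The "write to pages, then commit via a metadata page" idea can be sketched roughly like this in Python. This is a toy under stated assumptions, not how LMDB actually does it. The 4096 byte page size, the three page layout, the metadata format, and all the function names are mine. It also assumes that the small metadata write is not torn, which a real system would guard with checksums or duplicate metadata pages.

```python
import mmap
import os
import struct
import tempfile

PAGE_SIZE = 4096              # assumed page size for this sketch
META = struct.Struct("<qq")   # page 0 holds (live page number, payload length)

def create_store(path):
    """Create a 3-page file: page 0 is metadata, pages 1 and 2 hold data."""
    with open(path, "wb") as f:
        f.write(b"\0" * (3 * PAGE_SIZE))   # live page 0 means "nothing committed"

def _sync(view, f):
    view.flush()              # push dirty pages of the view to the file
    os.fsync(f.fileno())      # then ask the OS to push the file to disk

def commit(path, payload):
    """Write payload to the page that is not live, then publish it."""
    if len(payload) > PAGE_SIZE:
        raise ValueError("payload does not fit in one page")
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as view:
        live, _ = META.unpack_from(view, 0)
        target = 2 if live == 1 else 1     # never overwrite the live page
        start = target * PAGE_SIZE
        view[start:start + len(payload)] = payload
        _sync(view, f)                     # step 1: the new data is on disk
        META.pack_into(view, 0, target, len(payload))
        _sync(view, f)                     # step 2: the metadata points at it
    return target

def read_committed(path):
    """Return the last committed payload, or None if nothing was committed."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as view:
            live, length = META.unpack_from(view, 0)
            if live == 0:
                return None
            start = live * PAGE_SIZE
            return view[start:start + length]

fd, store_path = tempfile.mkstemp()
os.close(fd)
create_store(store_path)
first_page = commit(store_path, b"v1")
second_page = commit(store_path, b"version two")
committed = read_committed(store_path)
os.remove(store_path)
```

If the process dies between step 1 and step 2, the metadata page still points at the old page, so a reader sees the previous committed value rather than a half-written one.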

Note that in order to actually be sure that you are flushing to disk, you have to call both FlushViewOfFile and FlushFileBuffers on Windows.
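In Python terms, that pairing looks roughly like the sketch below. As far as I know, CPython's `mmap.flush()` wraps FlushViewOfFile on Windows (and msync elsewhere), and `os.fsync()` ends up in FlushFileBuffers (fsync elsewhere), but treat that mapping as my assumption rather than a documented guarantee. The helper name `write_and_flush` is hypothetical.

```python
import mmap
import os
import tempfile

def write_and_flush(path, offset, payload):
    """Write through a mapping, then flush the view and the file, in that order."""
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), 0) as view:
        view[offset:offset + len(payload)] = payload
        view.flush()             # dirty pages of the view go to the file
        os.fsync(f.fileno())     # the file's buffered data goes to the device

fd, flush_path = tempfile.mkstemp()
with os.fdopen(fd, "wb") as f:
    f.write(b"\0" * 4096)

write_and_flush(flush_path, 100, b"durable")
with open(flush_path, "rb") as f:
    f.seek(100)
    read_back = f.read(7)
os.remove(flush_path)
```

Skipping either call leaves a window in which the data exists only in some cache.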

- What guarantees does the OS make regarding ordering and durability?

Pretty much none regarding ordering, as noted. Windows will guarantee that if both FlushViewOfFile and FlushFileBuffers have been called and successfully completed, you are golden. But there aren’t any promises in the API about what will happen for partway failures, or in what order this happens.

To summarize:

Memory mapped files are a great tool, and for reads, they are excellent. For writes, they are awesome, but since there is no way to ensure in what order dirty pages get written to disk, it is hard to build a reliable system using them.

I would rather use standard file I/O for writes, since that is far more predictable.
