One of the distinguishing features of RavenDB is its ability to process large aggregations very quickly. You can ask questions about very large data sets and get the results in milliseconds. This is interesting because RavenDB isn’t an OLAP database, and the kinds of questions that we ask can be quite complex.

For example, we have the Products/Recommendations index, which allows us to ask:

For any particular product, find how many times it was sold, what other products were sold with it, and how often.

The index to manage this is here:

The way it works, we map the orders and emit a projection for each product, adding the other products that were sold with the current one. In the reduce, we group by product and aggregate the related products together.
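To make the map and reduce steps concrete, here is a minimal Python sketch of the same idea. It is not the actual index definition (RavenDB indexes are written differently), and the field names `Lines`, `Product`, `Count` and `Related`, as well as the sample orders, are assumptions made for illustration.

```python
from collections import Counter

def map_order(order):
    """Map: emit one entry per distinct product in the order (assumed shape)."""
    products = sorted({line["Product"] for line in order["Lines"]})
    for product in products:
        yield {
            "Product": product,
            "Count": 1,
            # every other product in the same order was sold with this one
            "Related": {other: 1 for other in products if other != product},
        }

def reduce_entries(entries):
    """Reduce: group by product, sum the counts and merge the related products."""
    totals = {}
    for entry in entries:
        agg = totals.setdefault(entry["Product"], {"Count": 0, "Related": Counter()})
        agg["Count"] += entry["Count"]
        agg["Related"].update(entry["Related"])  # Counter.update adds counts
    return [
        {"Product": p, "Count": a["Count"], "Related": dict(a["Related"])}
        for p, a in sorted(totals.items())
    ]

# Hypothetical sample data, not taken from the real database.
orders = [
    {"Lines": [{"Product": "products/11-A"}, {"Product": "products/69-A"}]},
    {"Lines": [{"Product": "products/11-A"}, {"Product": "products/16-A"},
               {"Product": "products/69-A"}]},
]
mapped = [entry for order in orders for entry in map_order(order)]
results = reduce_entries(mapped)
```

Note that the reduce output has the same shape as the map output, so reduced results can be fed back into the reduce again. That property is what allows partial results to be combined in stages.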

But I’m not here to talk about the recommendation engine. I want to explain how RavenDB processes such indexes. All the information that I’m talking about can be seen in the Map/Reduce visualizer in the RavenDB Studio.

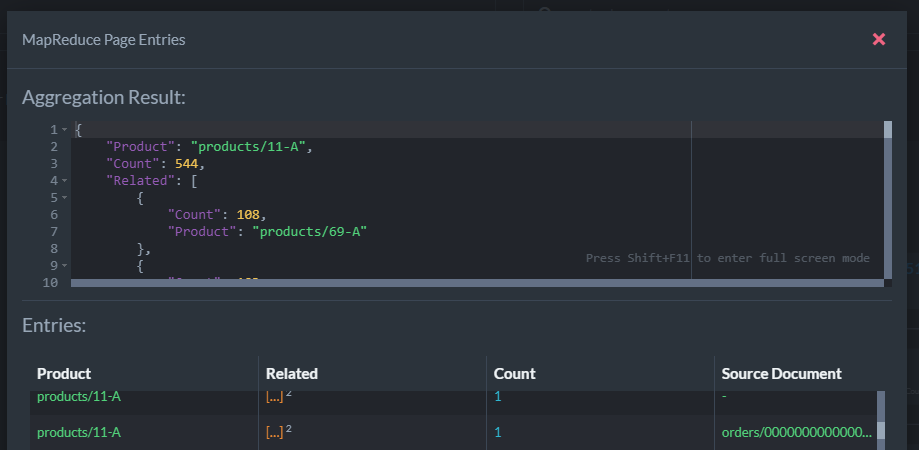

Here is a single entry for this index. You can see that products/11-A was sold 544 times, and that 108 of those sales were together with products/69-A.

Because of the way RavenDB processes Map/Reduce indexes, a query runs over the already precomputed results, so there is very little computation cost at query time.

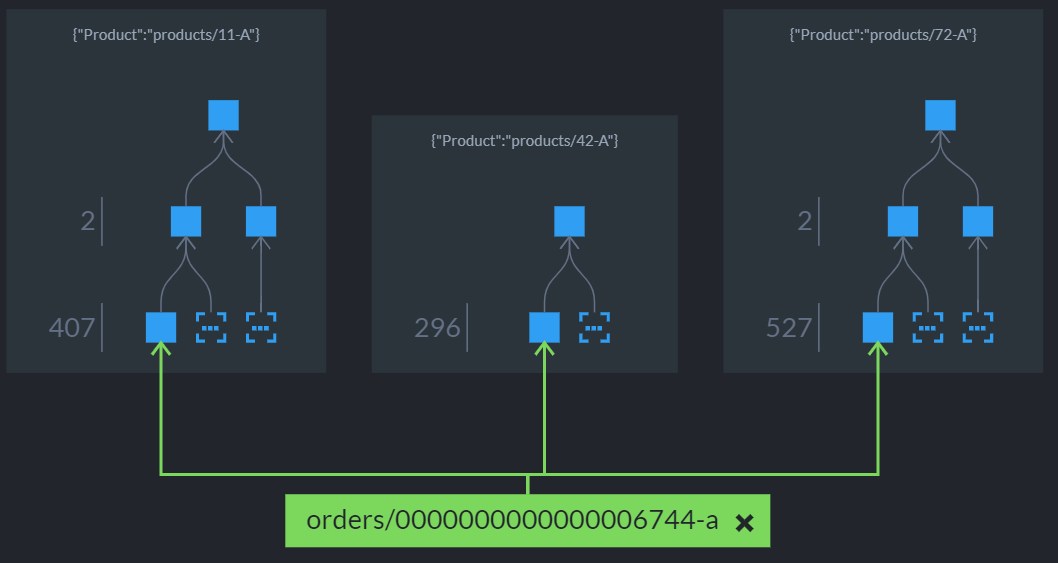

Let’s see how RavenDB builds the index. Here is a single order, where three products were sold. You can see that each of them has a very interesting tree structure.

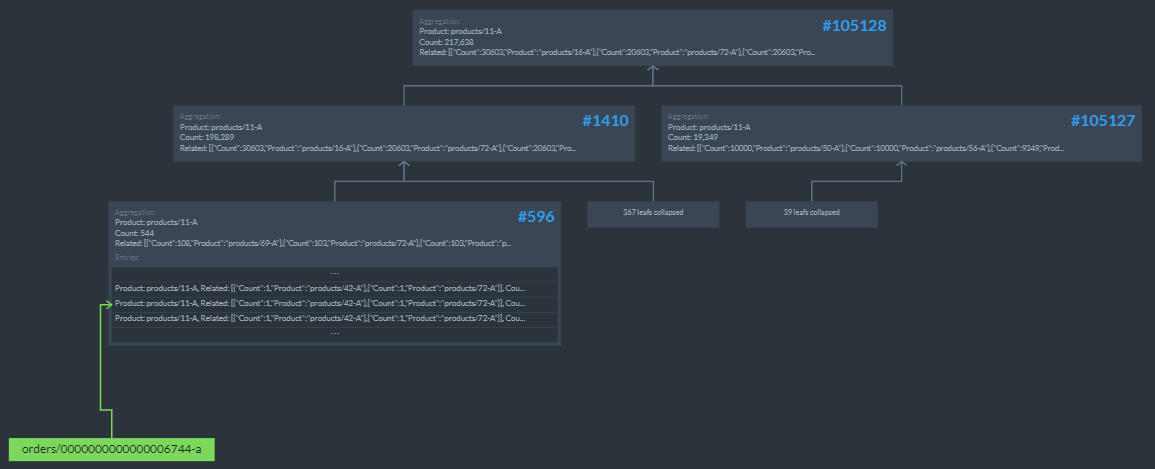

Here is what it looks like when we zoom into a particular product. You can see how RavenDB aggregates the data. It starts in the bottom-most page on the right (#596). We aggregate that with the other 367 pages and get intermediate results at page #1410. We then aggregate that again with the intermediate results in page #105127 to get the final tally. In this case, you can see that products/11-A was sold 217,638 times and mostly with products/16-A (30,603 times) and products/72-A (20,603 times).

When we have a new order, all we need to do is update a bottom-most page and then recurse upward in the tree. In the case we have here, there is a pretty big reduce value and we are dealing with tens of millions of orders. We have three levels to the tree, which means that we’ll need to do three update operations to account for new or updated data. That is cheap, because it means that we have to do very little work to maintain the index.
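The upward recursion can be sketched with a toy tree in Python. This is only an illustration of the idea, not RavenDB's storage format: the `Page` class, `build_tree`, `add_entry`, and the fan-out of 2 are all hypothetical. Each page caches the reduced result of what sits below it, so a new entry only forces a recompute of the pages on the path from its leaf to the root.

```python
from collections import Counter

def combine(values):
    """Reduce step: sum the sale counts and merge the related-product counters."""
    total, related = 0, Counter()
    for count, rel in values:
        total += count
        related.update(rel)  # adds counts rather than replacing them
    return total, related

class Page:
    """Hypothetical tree page: leaves hold map entries, branches hold children."""
    def __init__(self, parent=None):
        self.parent = parent
        self.children = []
        self.entries = []
        self.result = (0, Counter())  # cached reduce result for this page

    def recompute(self):
        source = [c.result for c in self.children] if self.children else self.entries
        self.result = combine(source)

def build_tree(fanout, depth):
    """Build a complete tree and return (root, leaves)."""
    root = Page()
    level = [root]
    for _ in range(depth - 1):
        next_level = []
        for page in level:
            for _ in range(fanout):
                child = Page(parent=page)
                page.children.append(child)
                next_level.append(child)
        level = next_level
    return root, level

def add_entry(leaf, entry):
    """Add a map entry to a leaf and re-reduce only the path up to the root."""
    leaf.entries.append(entry)
    page, updates = leaf, 0
    while page is not None:
        page.recompute()
        updates += 1
        page = page.parent
    return updates  # equals the depth of the tree

root, leaves = build_tree(fanout=2, depth=3)
first = add_entry(leaves[0], (1, {"products/69-A": 1}))
second = add_entry(leaves[3], (1, {"products/69-A": 1, "products/16-A": 1}))
```

With three levels, each call to `add_entry` touches exactly three pages, no matter how many entries the other leaves hold, and `root.result` always contains the final tally.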

At query time, of course, we don’t really have to do much, because all the hard work was already done.

I like this example because it showcases a non-trivial scenario and how RavenDB handles it with ease. This kind of non-trivial work tends to be very hard to get working properly, and with RavenDB it is part of my default “let’s do this on the fly” demo.

We got a feature request that we don’t intend to implement, but I thought the reasoning is interesting enough for a blog post. The feature request:
