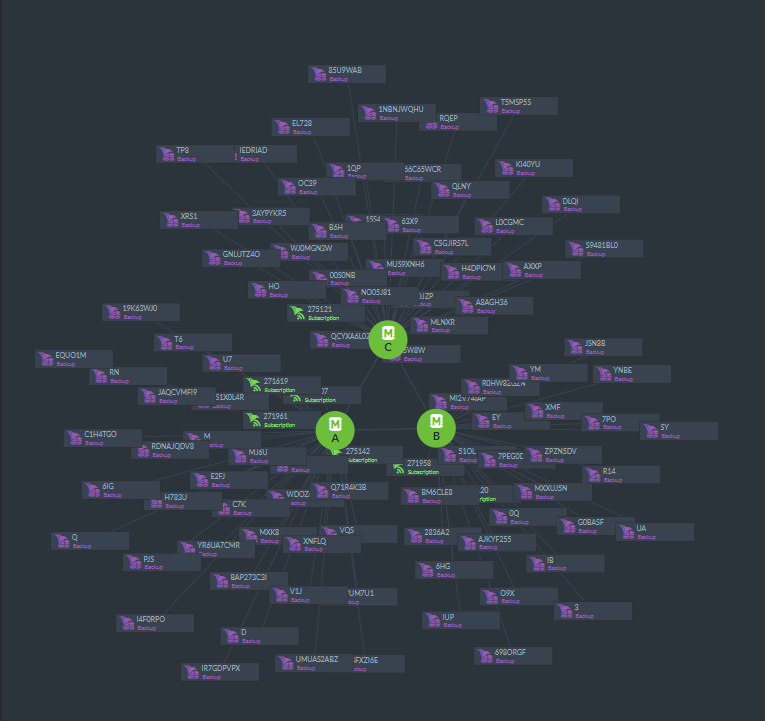

The task in question was to read a CSV file (which is large, but can fit in memory) and allow quick searches on the data by email to find the relevant users. Most candidates handle that with a dictionary, but since our candidate decided to flip things around, he also included an implementation of doing this with sorted arrays. That is actually quite nice, in theory. In practice, it ended up something like this:

In order to truly understand the code, I have to walk you through a bunch of it.

It starts with the following fields:

    List<Structure> emails = new List<Structure>();
    Structure[] emailsArray = new Structure[0];

Structure, by the way, is a helper class that just has a key and a list of ids. The duplication (a list and an array) is strange, but whatever, let's move on.

The class constructor reads the file one line at a time and adds a Structure instance with the email and the line id to the emails list.

    public void ToArray()
    {
        emailsArray = this.emails.ToArray();
    }

This code just copies emails to the array. We are not sure why yet, but then we have:

    public void Sort()
    {
        Array.Sort(emailsArray, delegate(Structure x, Structure y) { return x.STR.CompareTo(y.STR); });
    }

So far, this is right in line with the "use a sorted array" method that the candidate talked about. There is a small problem here, because emails are allowed to be duplicated, but no fear, our candidate can solve that…

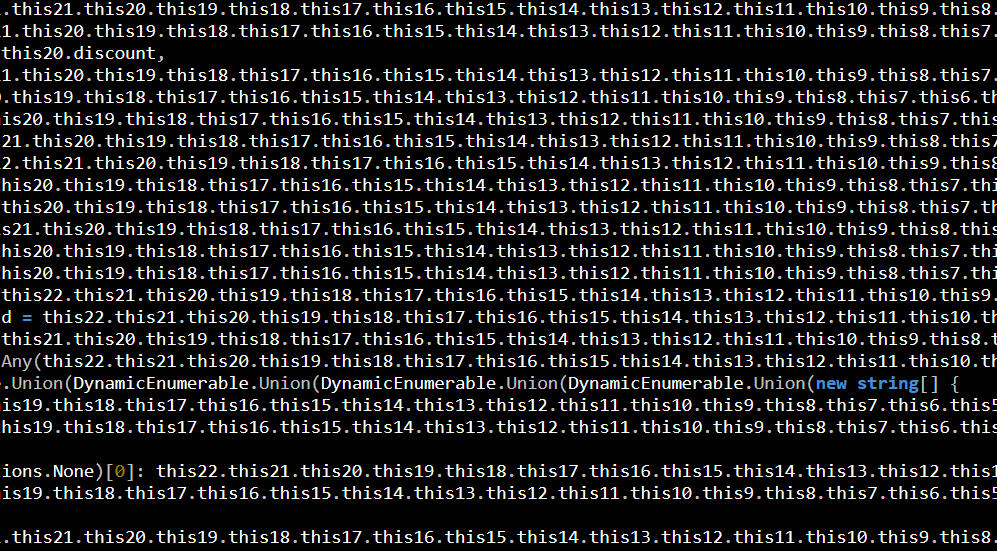

    public void UnifyIdLists()
    {
        for (int i = 0; i < emailsArray.Length; i++)
        {
            if (i + 1 == emailsArray.Length)
                break;

            if (emailsArray[i].STR.Equals(emailsArray[i + 1].STR))
            {
                emailsArray[i].ID.AddRange(emailsArray[i + 1].ID);
                emailsArray[i + 1] = null;
                List<Structure> temp = emailsArray.ToList<Structure>();
                temp.RemoveAll(item => item == null);
                emailsArray = temp.ToArray();
            }
        }
    }

The intent of this code is to merge all identical email values into a single entry in the list.

Now, to put things in perspective, we are talking about a file that is going to be around 500MB in size, and there are going to be about 3.5 million lines in it.

That means that the emailsArray alone is going to take about 25MB.

Another aspect to consider is that we are using dummy data in our test file. Do you want to guess how many duplicates there are going to be? Each of them generates two 25MB allocations and multiple passes over an array of 3.5 million items.

Oh, and for the hell of it, the code above doesn't even work. Consider the case when we have three duplicates…
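To make the three-duplicate case concrete: given three adjacent entries with the same email, the loop merges the second into the first at `i = 0` and compacts the array down to two entries. It then advances to `i = 1`, where the `i + 1 == emailsArray.Length` check breaks out, so the third entry is never merged. For comparison, here is a rough sketch of how the sorted-array idea could be done in a single pass. It is not the candidate's code: `SortedEmailIndex`, `Build` and `Find` are hypothetical names, the `Structure` class is a guess at the helper's shape (only `STR` and `ID` appear in the original), and ordinal string comparison is an assumption.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the candidate's helper class. Only the member
// names STR and ID come from the original code; the int id type is assumed.
public class Structure
{
    public string STR;
    public List<int> ID = new List<int>();
}

public static class SortedEmailIndex
{
    // Sorts the entries by email, then merges each run of equal emails
    // into the first entry of that run. One sort, one pass, one result list.
    public static Structure[] Build(IEnumerable<Structure> entries)
    {
        Structure[] sorted = new List<Structure>(entries).ToArray();
        Array.Sort(sorted, (x, y) => string.CompareOrdinal(x.STR, y.STR));

        var merged = new List<Structure>(sorted.Length);
        foreach (Structure entry in sorted)
        {
            Structure last = merged.Count > 0 ? merged[merged.Count - 1] : null;
            if (last != null && string.CompareOrdinal(last.STR, entry.STR) == 0)
                last.ID.AddRange(entry.ID); // same email: fold the ids into the existing entry
            else
                merged.Add(entry);          // new email: start a new run
        }
        return merged.ToArray();
    }

    // Binary search over the array returned by Build, using the same
    // ordinal ordering. Returns an empty list when the email is absent.
    public static List<int> Find(Structure[] index, string email)
    {
        int lo = 0, hi = index.Length - 1;
        while (lo <= hi)
        {
            int mid = lo + (hi - lo) / 2;
            int cmp = string.CompareOrdinal(index[mid].STR, email);
            if (cmp == 0) return index[mid].ID;
            if (cmp < 0) lo = mid + 1;
            else hi = mid - 1;
        }
        return new List<int>();
    }
}
```

Because each entry is compared only against the last merged one, a run of any length collapses into a single entry, and nothing is reallocated per duplicate.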

![]() And I just love this image.
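For reference, the dictionary approach that most candidates take can be sketched in a few lines. This is only an illustration under assumptions: `EmailIndex` and `Build` are hypothetical names, the email column is assumed to have already been extracted from each CSV line, and the line id is taken to be the zero-based position in that sequence.

```csharp
using System;
using System.Collections.Generic;

public static class EmailIndex
{
    // Maps each email to the ids of the lines on which it appears.
    // Duplicated emails simply accumulate ids in the same list.
    public static Dictionary<string, List<int>> Build(IEnumerable<string> emails)
    {
        var index = new Dictionary<string, List<int>>();
        int lineId = 0;
        foreach (string email in emails)
        {
            if (!index.TryGetValue(email, out List<int> ids))
            {
                ids = new List<int>();
                index[email] = ids;
            }
            ids.Add(lineId);
            lineId++;
        }
        return index;
    }
}
```

A lookup is then a single `TryGetValue` call on the returned dictionary, with no sorting or merging step.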