Write optimizations in Voron

As I mentioned, there is one scenario which produce worse & worse performance for LMDB, random writes. The longer the data set, the more expensive the cost. This is due to the nature of LMDB as a Copy On Write database. Here is a simple explanation of what is the cost:

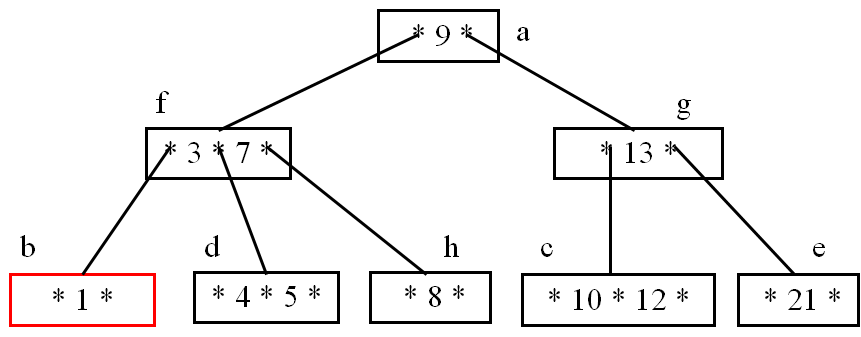

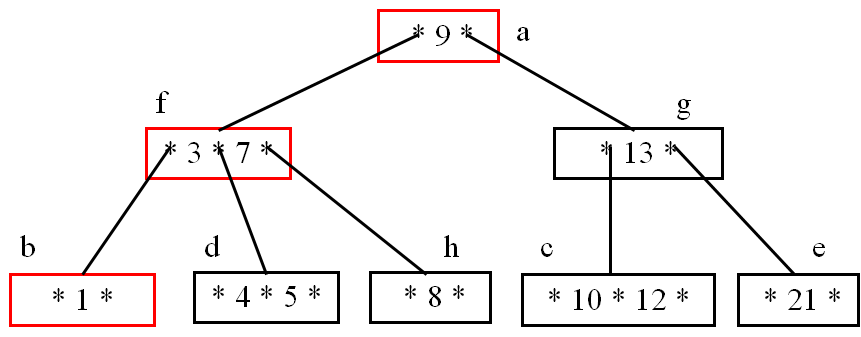

We want to update the value in the red square.

Because the tree is Copy On Write, we need to modify the following pages:

An as the tree goes deeper, it becomes worse. Naturally, one consequence of doing random writes is tree fragmentation. In my tests, on a small 1 million records dataset we would get a cost of over hundred pages (roughly 4 MB) to update a hundred 16 + 100 bytes values. Just to clarify, those tests were done on the LMDB code, not on Voron. So I am pretty sure that this isn’t my problem. Indeed, I confirmed that this is a general problem with COW approach.

I accepted that, and the benefits that we get are significant enough to accept that. But… I never really was complete in my acceptance. I really wanted a better way.

And after adding Write Ahead Log to Voron, I started thinking. Is there a reason to continue this? We are already dealing with writing to the log. So why modify the entire tree?

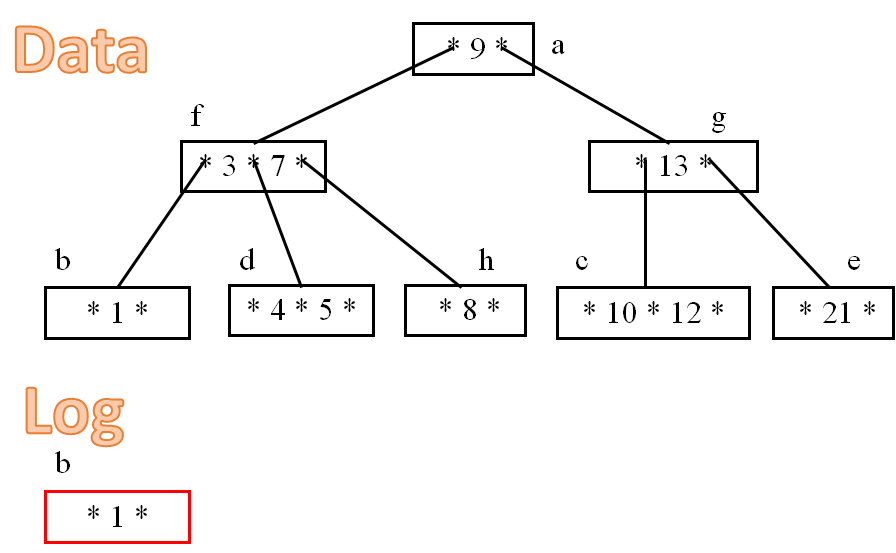

Here is how it looks like:

Now, when you are looking at the tree, and you ask for the B page, you’ll get the one in the log. However, older transactions would get to see the B page in the data file, not the one in the log. So we maintain MVCC.

This complicates matters somewhat because we need to sync the data file flushing with existing transactions (we can’t flush the file until all transactions have progressed to the point where they look at the new pages in the log), but that is something that we have to do anyway. And that would cut down the amount of writes that we have to do by a factor of ten, according to my early estimation. Hell, this should improve write performance on sequential writes.

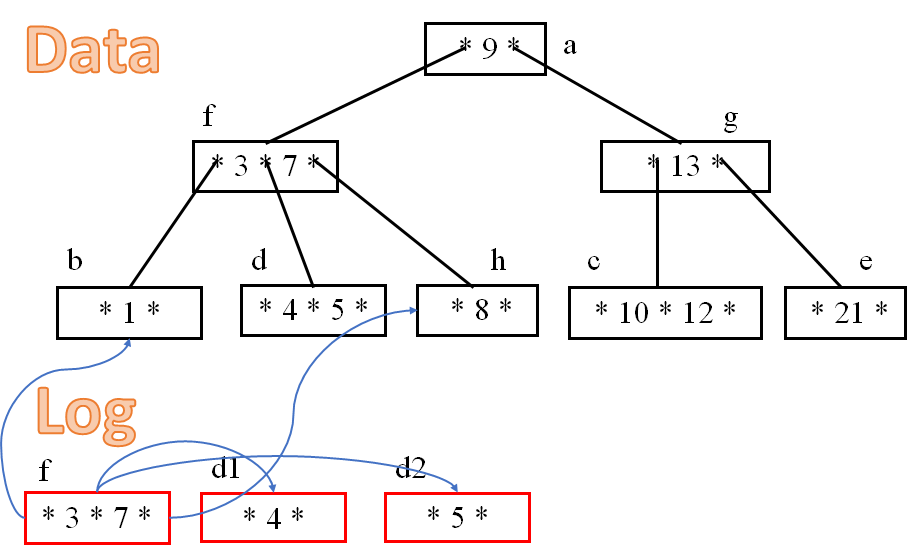

For that matter, look what happens when we need to split page D:

Here we need to touch the tree, but only need to touch the the immediate root. Which again, gives us a lot of benefits in the size we need to touch.

Comments

Nice idea. But how do you handle a situation when there are multiple versions of the same page in the log (i mean if you modify a page that's already in the log)? This will happen all the time because every modification must be propagated up to the tree root.

To avoid deep trees you may use configurable Btree*M where M is number of slots.

Rafal, The idea here is that you don't have to propogate all the way to the root. And you can do that by maintaining translation table in memory.

Lex, Those are B+Tree, I can usually put 100+ items per page.

Sorry if my curiosity is premature, but this looks quite interesting. You maintain a non-persistent page map that points to the last version of every page in the write ahead log. But current transactions may refer to previous versions of the same page, so your page map has to be versioned as well because it might be different for every transaction. Am i getting it right? If so, what do you do in case of concurrent modification of two versions of the same page? Abort one transaction? R

Rafal, The page table is stored as immutable structure. That means that we get cheap copies & modifications, and the current transactions can use the old version. We don't allow concurrent write transactions (we allow concurrent writes, but they are merged to one tx).

@Oren: regarding immutable structures, what do yoy think about the immutable collections package coming from the BCL guys? see here http://blogs.msdn.com/b/dotnet/archive/2013/09/25/immutable-collections-ready-for-prime-time.aspx

Since the earlier discussion on allocating "shadow copy" pages from a memmapped journal (as opposed to temporary blocks of unmanaged memory for the duration of a transaction), I had also realized that the journal can be regarded as a "multi-version" shadow copy of the data file (as of the last data sync), and that no more shadow copying is required for the data file itself. A scheme like this, with periodic data syncing from journal to data file appears to work quite well, but in my experience so far, does require some careful tuning, especially if journal and data file are located on the same (spindle) disk volume. Even more so if the disk volume is shared with other processes (virus checker, OS, ...).

Key to good journal write performance seems to be to use rather small journal chunks (16 MB) and to recycle the chunk files after they have been synced with the data file (and optionally copied to a backup location).

Key also seems to be to use adaptive data sync scheduling, to keep the amount of pages per sync within a certain range. If the amount of pages to sync is large, increase the sync frequency, if it is small decrease the frequency. A larger average amount of pages/sync increases overall throughput (up to a limit), but leads to some pretty big latency spikes. A smaller amount improves this, but goes at the cost of average throughput.Not so trivial to tune well.

njy, Yes, we are already making use of them

Alex, Yes, that is pretty much what we are doing. I am not sure how we are going to tune things, and reusing the journal isn't easy if you want to use incremental backup, but it is something that we are working on.

Comment preview