So far I have posted quite a few posts about building a document database. To be frank, the reason I did this is that the idea has been bouncing around in my head a lot recently, and sitting down and actually thinking about it has been great, especially since I now have the design dancing in my head, shiny & beautiful. Here is the full list, in case you missed anything:

- Schema-less databases

- Designing a document database

- Designing a document database: Storage

- Designing a document database: Scale

- Designing a document database: Authorization

- Designing a document database: Concurrency

- Designing a document database: Attachments

- Designing a document database: Replication

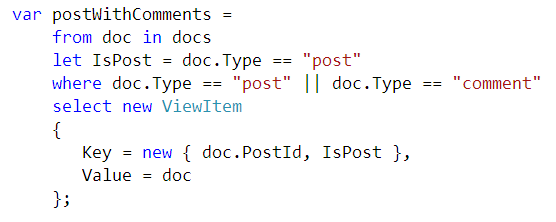

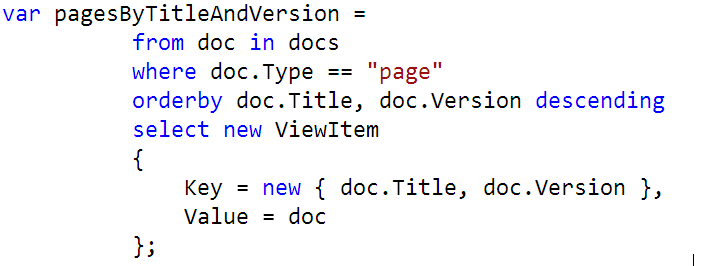

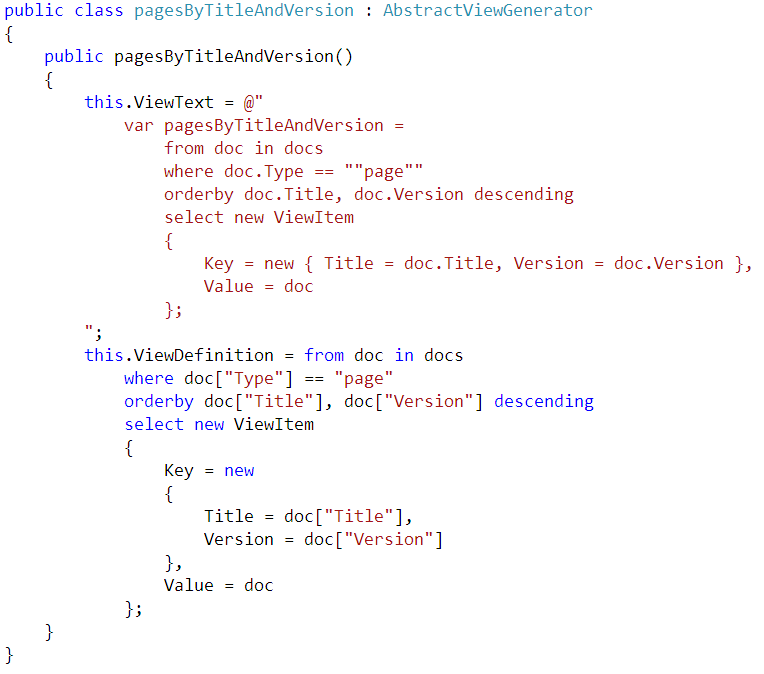

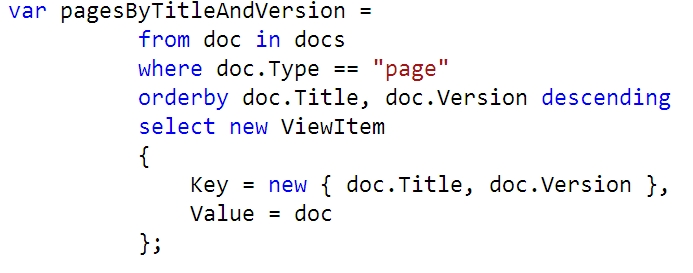

- Designing a document database: Views

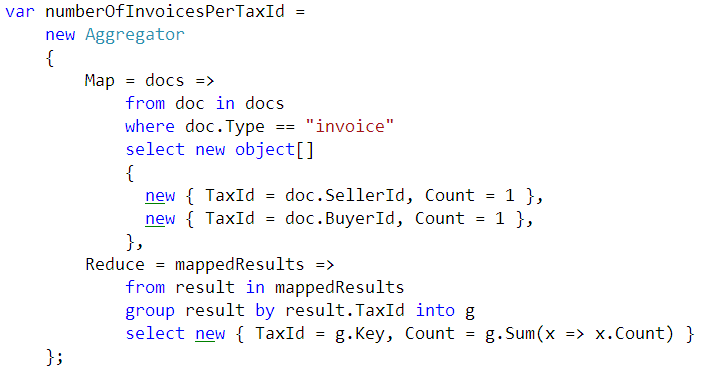

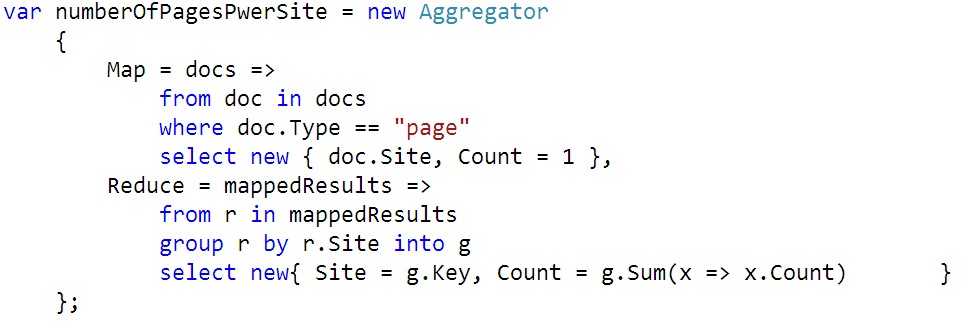

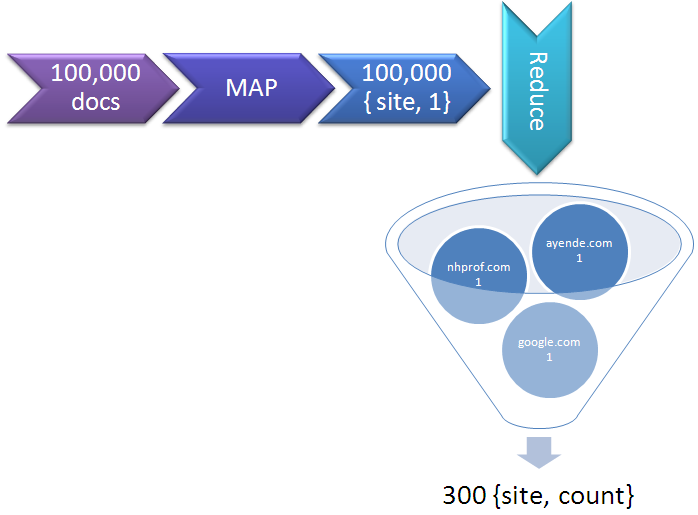

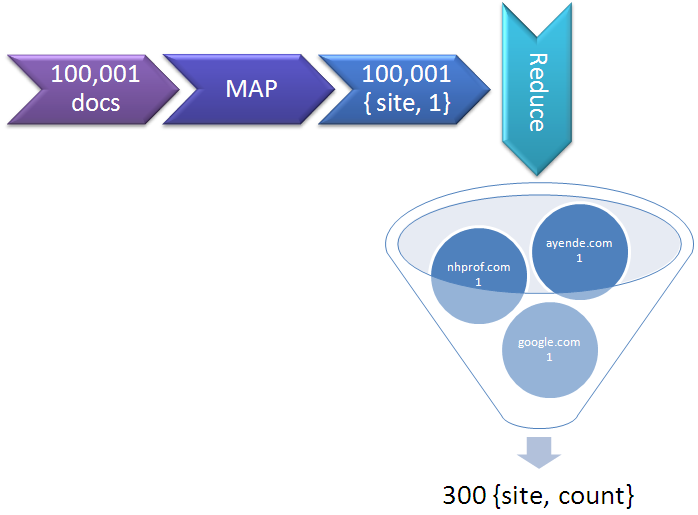

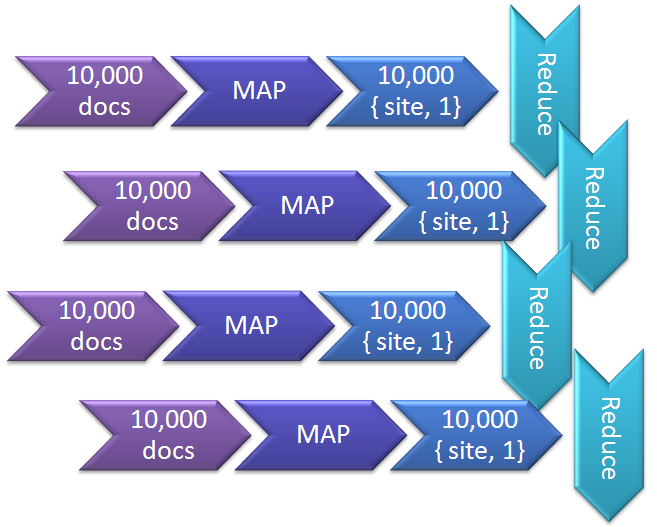

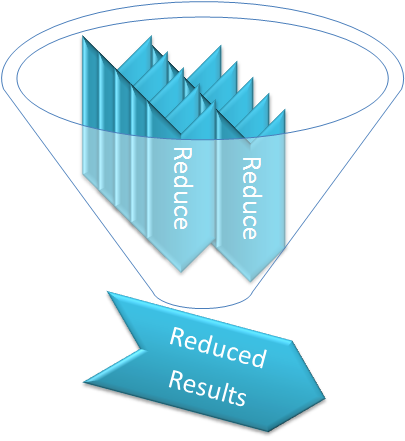

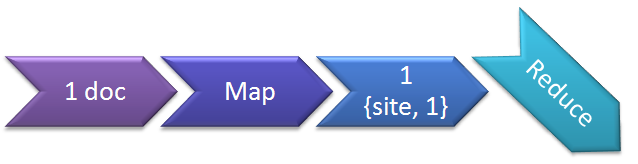

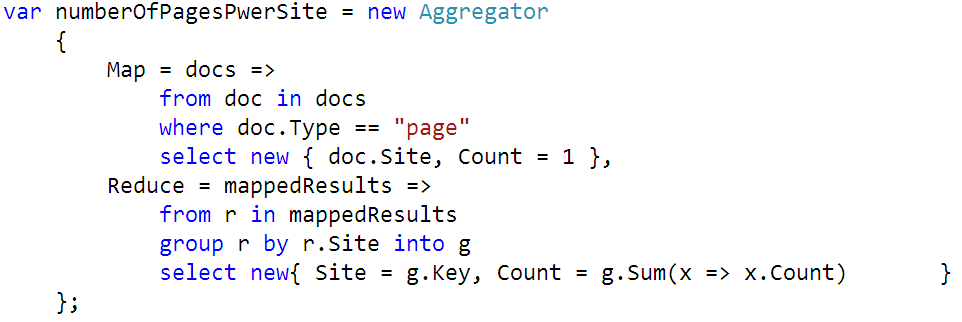

- Designing a document database: Aggregation

- Challenge: C# Rewriting

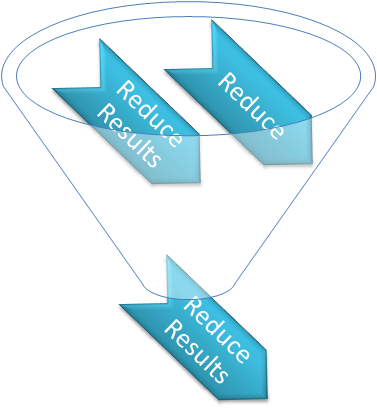

- Designing a document database: Aggregation Recalculating

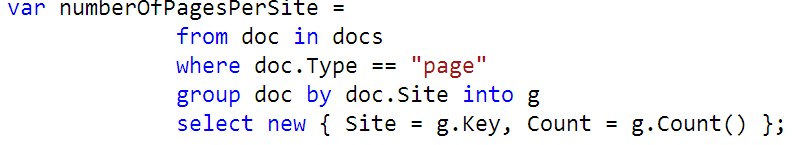

- Designing a document database: View syntax

- Designing a document database: Remote API & Public API

A few days ago I asked on Twitter what people think: do I have this written up yet or not? Opinions seem to be divided on this score. Let me try to set the record straight. I have a lot of scattered code around this, yes. But it is not a project, it is a lot of tiny experiments to prove that one approach or another would work. This series of posts has required a lot of research. But I don’t have anything that is even remotely close to a working system.

I estimate that it would take a month or two to take this from the drawing board to something that I would be willing to use in production*. That is full-time work, by the way. It is likely that I can get something usable faster than that, depending on your definition of usable :-). Most of the challenge is going to be in implementing the views, as I see it now. Everything else seems to be pretty straightforward.

That is somewhat of a problem. I don’t really want to spend several months (plus the associated support costs afterward) building an open source project. The main issue is that while it is fun, there is simply no money in it, and I hear that eating is mandatory. On the other hand, I don’t really see something like this selling as a commercial package. This is infrastructure, and infrastructure has been commoditized. The ideal solution, from my point of view, is what we tried to do with Linq to NHibernate: getting a company, or several companies, to sponsor its development as an OSS project.

The motivation would be the same as usual: this is something that the aforementioned companies need and are willing to pay for. It didn’t end up the way I expected with Linq to NHibernate, but it ended up very well after all, so I am happy about that.

Oh, and as an aside, if you want more posts in this series, do suggest a few topics that you would like to hear about.

* Just to give you an idea of the complexity involved, I estimated Linq to NHibernate at about 3 months.