Designing a document databaseAggregation Recalculating

One of the more interesting problems with document databases is the views, and in particular, how are we going to implement views that contain aggregation. In my previous post, I discussed the way we will probably expose this to the users. But it turn out that there are significant challenges in actually implementing the feature itself, not just in the user visible parts.

For projection views, the actual problem is very simple, when a document is updated/removed, all we have to do is to delete the old view item, and create a new item, if applicable.

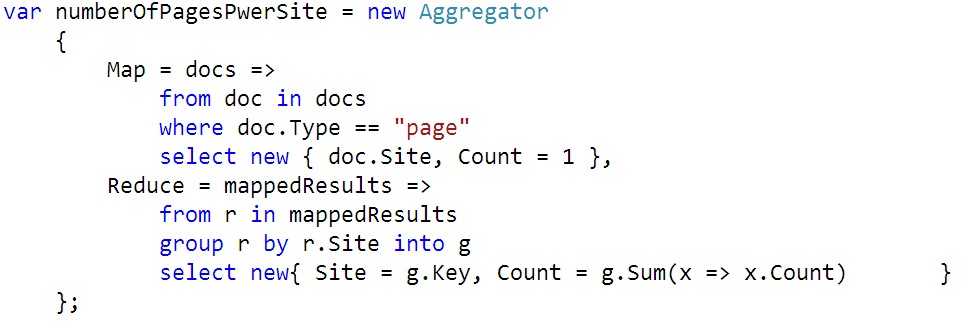

For aggregation views, the problem is much harder, mostly because it is not clear what the result of adding, updating or removing a document may be. As a reminder, here is how we plan on exposing aggregation views to the user:

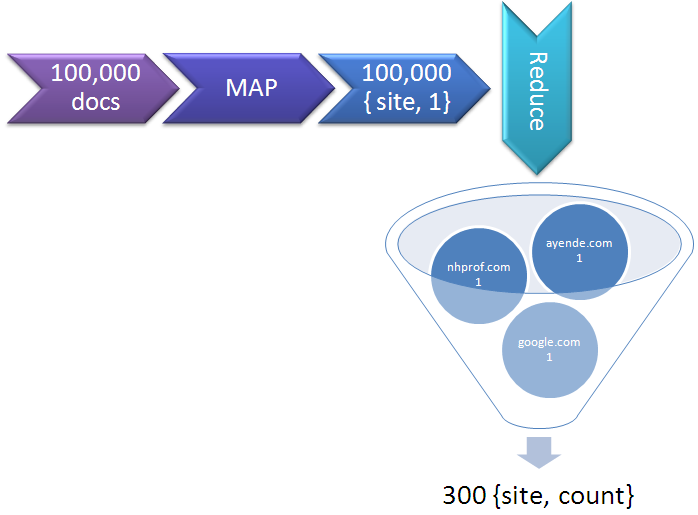

Let us inspect this from the point of view of the document database. Let us say that we have 100,000 documents already, and we introduce this view. A background process is going to kick off, to transform the documents using the view definition.

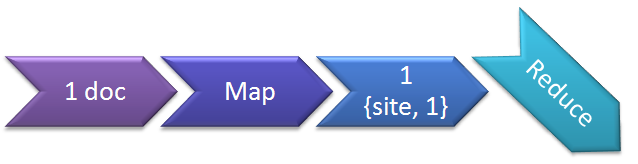

The process goes like this:

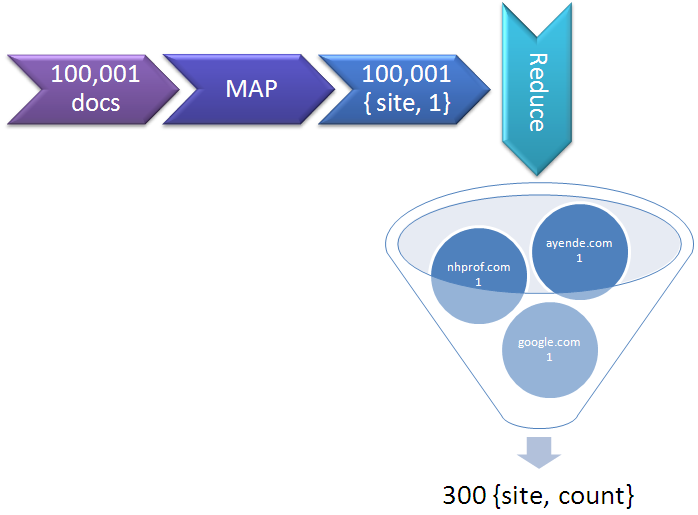

Note that the process depict above is a serial process. This isn’t really useful in the real world. Let us see why. I want to add a new document to the system, how am I going to update the view? Well… an easy option would be this:

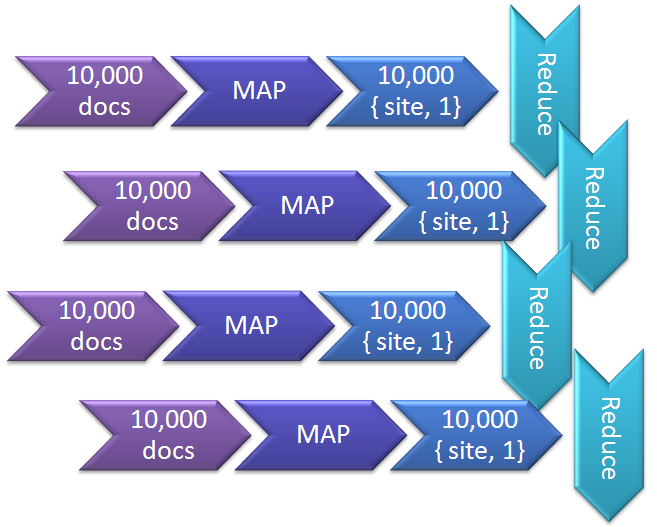

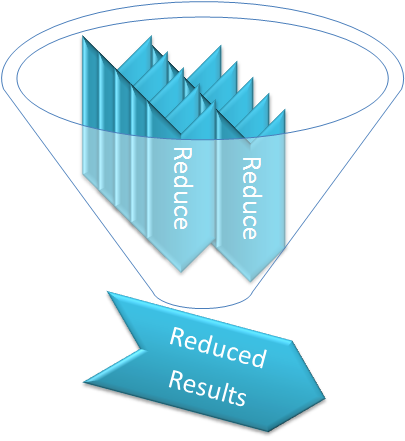

I think you can agree with me that this is not a really good thing to do from performance perspective. Luckily for us, there are other alternative. A more accurate representation of the process would be:

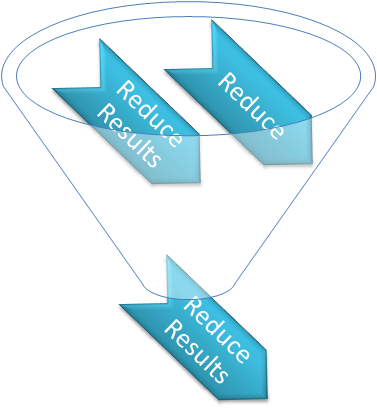

We run the map/reduce process in parallel, producing a lot of separate reduced data points. Now we can do the following:

We take the independent reduced results and run a re-reduce process on them again. That is why we have the limitation that map & reduce must return objects in the same shape, so we can use reduce for data that came from map or from reduce, without caring where it came from.

This also means that adding a document is a much easier task, all we need to do is:

We get the single reduced result from the whole process, and now we can generate the final result very easily:

All we have to do is run the reduce on the final result and the new result. The answer from that would be identical to the answer running the full process on all the documents. Things get more interesting, however, when we talk about document update or document removal. Since update is just a special case of atomic document removal and addition, I am going to talk about document removal only, in this case.

Removing a document invalidate the final aggregation results, but it doesn’t necessarily necessitate recalculating the whole thing from scratch. Do you remember the partial reduce results that we mentioned earlier? Those are not only useful for parallelizing the work, they are also very useful in this scenario. Instead of discarding them when we are done with them, we are going to save them as well. They wouldn’t be exposed to the user at any way, but they are persisted. They are going to be useful when we need to recalculate. The fun thing about them is that we don’t really need to recalculate everything. All we have to do is recalculate the batch that the removed document resided on, without that document. When we have the new batch, we can now reduce the whole thing to a final result again.

I am guessing that this is going to be… a challenging task to build, but from design perspective, it looks pretty straightforward.

More posts in "Designing a document database" series:

- (17 Mar 2009) What next?

- (16 Mar 2009) Remote API & Public API

- (16 Mar 2009) Looking at views

- (15 Mar 2009) View syntax

- (14 Mar 2009) Aggregation Recalculating

- (13 Mar 2009) Aggregation

- (12 Mar 2009) Views

- (11 Mar 2009) Replication

- (11 Mar 2009) Attachments

- (10 Mar 2009) Authorization

- (10 Mar 2009) Concurrency

- (10 Mar 2009) Scale

- (10 Mar 2009) Storage

Comments

Okay, map-reduce is very spectacular and appealing, but can you please describe some real-world problem solved using map-reduce on documents? In typical business applications you usually perform operations on single entities and don't aggregate them. Aggregation is usually done when reporting and involves separate report database or OLAP system. I think map-reduce can be used for indexing document data - is it the main reason why you are writing about it?

Rafal,

Reporting scenarios is a major consideration, certainly.

But it is not just that, there are numerous reasons to want to be able to do aggregation in most systems.

Look at the right side of the blog, you see the category list, and the monthly list? Those are aggregations.

In many scenarios, it is important to be able to do so as efficiently as possible.

Leaving that aside, a good reporting story is pretty important, don't you think?

I have a possible scenario of having to handle lots of small databases, mostly with reports on them.

You're right, RDBMS-based systems usually have problems with data aggregation - that's why we're using separate report databases for larger applications. Aggregations done in a transactional system are too heavy for the database server, also they usually don't cache query results or partial results and perform aggregation each time data is requested. So map-reduce with automatic caching of partial results would help in such cases. Example: task management system where each user and group of users has its own 'inbox' for keeping todo list and each user has its own dashboard with statistics. If you want to calculate statistics for each logged in user based on raw transactional data, you'll probably kill the database server.

"...a challenging task to build, but from design perspective, it looks pretty straightforward." I think implementation is the main problem here. Failure at reducing node during calculation and so on. But the idea looks good, thank you for the post :) We have interesting discussion about it at Friday :)

Rafal,

That is why I specified that the aggregation is done as part of a background process.

That way, you can still serve requests while still maintaining the perf of the server.

Evgeny,

Yes, the implementation would be challenging, but not complex, just hard.

Is this the map-reduce algorithm used by Google?

What data would you return to the user while aggregation is being done?

For those like me who aren't as familiar with MapReduce:

http://labs.google.com/papers/mapreduce.html

http://en.wikipedia.org/wiki/MapReduce

I only read the google page because it made sense to me after that, but the wiki page looks like it covers a little more detail.

configurator,

That is a great question, I don't really know.

You also have duplication. If you want to be able to read duplicated data for added efficiency rather than just keeping it as backups, you might decide not to record which copy of the data is considered real -- maybe all copies are the real copy.

But when you want to do this kind of map/reduce thing, you need to know whether to include this entry in the results, and duplicates should be excluded.

This means, though, that when a node goes down, you have to discover that fact, and select another node that contains a copy of its non-duplicate data to replace it.

The alternative is to write your queries in such a way that duplicates can be resolved by the client, but that really isn't the client's concern, and it's inefficient.

@Ayende

"Yes, the implementation would be challenging, but not complex, just hard. "

I agree with complex but not hard. I often tell my clients exactly that.

Programming should never be measured in simple or hard. The variable of time is much more useful and as time holds all solutions, programmers simply have time to solve an issue or not.

Length of time determines cost and whether the solution can afford to be found.

Chris,

Right now, I am not considering yet how to actually get the entire document / view space distributed, it looks much easier to simply replicate things with sharding algorithm.

Comment preview