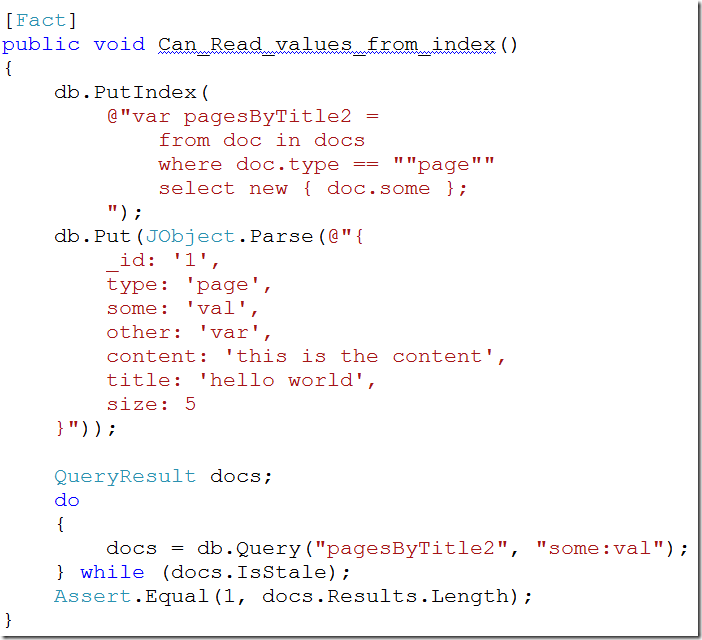

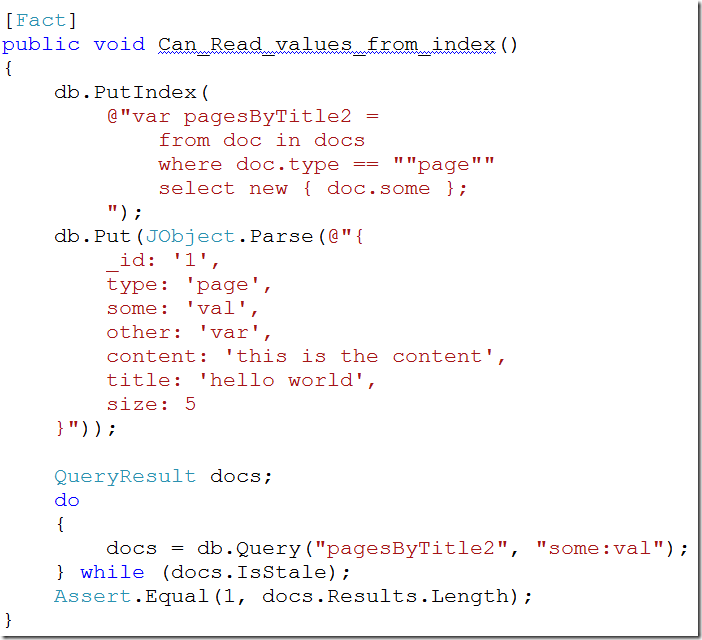

Here is a unit test for Rhino DivanDB:

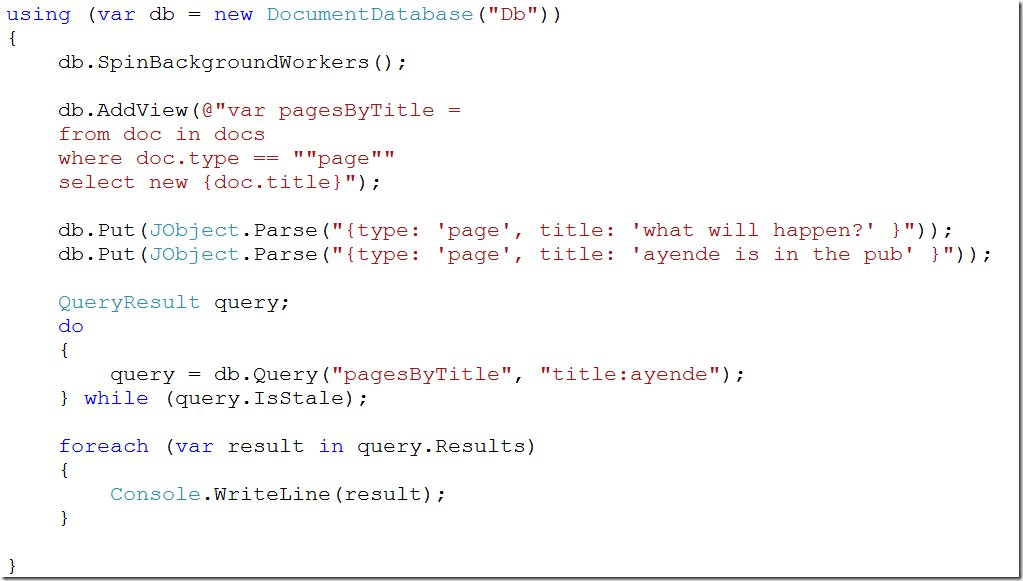

Here is a test that checks the same thing using a scenario-based approach:

What are those strange files? Well, let us take a peek at the first one:

`0_PutDoc.request`:

    PUT /docs HTTP/1.1
    Content-Length: 283

    {
        "_id": "ayende",
        "email": "ayende@ayende.com",
        "projects": [
            "rhino mocks",
            "nhibernate",
            "rhino service bus",
            "rhino divan db",
            "rhino persistent hash table",
            "rhino distributed hash table",
            "rhino etl",
            "rhino security",
            "rampaging rhinos"
        ]
    }

`0_PutDoc.response`:

    HTTP/1.1 201 Created
    Connection: close
    Content-Length: 15
    Content-Type: application/json; charset=utf-8
    Date: Sat, 27 Feb 2010 08:12:08 GMT
    Server: Kayak

    {"id":"ayende"}

These are just plain text files: one holds the request to send, the other the expected response.
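
The post does not show how the runner discovers and reads these files. As a hypothetical sketch in Python (the names `HttpMessage`, `parse_message` and `pair_scenario_files` are made up for illustration), assuming each step is a `<number>_<Name>.request` / `<number>_<Name>.response` pair and the numeric prefix gives the execution order:

```python
import re
from dataclasses import dataclass, field

# Hypothetical file-name convention inferred from "0_PutDoc.request".
STEP_FILE = re.compile(r"^(\d+)_(.+)\.(request|response)$")

@dataclass
class HttpMessage:
    start_line: str                      # "PUT /docs HTTP/1.1" or "HTTP/1.1 201 Created"
    headers: dict = field(default_factory=dict)
    body: str = ""

def parse_message(text: str) -> HttpMessage:
    """Split a raw HTTP message into start line, headers and body."""
    text = text.replace("\r\n", "\n")
    head, _, body = text.partition("\n\n")   # a blank line separates headers from body
    lines = head.split("\n")
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip()] = value.strip()
    return HttpMessage(lines[0].strip(), headers, body)

def pair_scenario_files(names):
    """Group request/response files into (request, response) pairs, ordered by step number."""
    steps = {}
    for name in names:
        m = STEP_FILE.match(name)
        if m:                                # ignore files that don't follow the convention
            key = (int(m.group(1)), m.group(2))
            steps.setdefault(key, {})[m.group(3)] = name
    return [(s["request"], s["response"])
            for _, s in sorted(steps.items())
            if "request" in s and "response" in s]
```

Sorting on the integer prefix rather than the raw string keeps `10_...` after `2_...`.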

RDB turns those into tests by issuing each request in turn and asserting on the actual output. This is slightly more complicated than it seems, because some requests contain things like dates or generated GUIDs. The scenario runner is aware of those and resolves them automatically. Another issue is dealing with potentially stale query results, especially because we are issuing requests on the same data immediately. Again, this is something that the scenario runner handles internally, and we don’t have to worry about it.
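
To make those two problems concrete, here is a minimal Python sketch, not the actual RDB runner: it assumes responses are `(status_line, headers, body)` tuples, treats the `Date` header and anything shaped like a GUID as volatile, and takes a caller-supplied `is_stale` predicate because the post does not say how staleness is detected. All names here are hypothetical.

```python
import re
import time

# Hypothetical volatility rules; the real runner's rules are not shown in the post.
GUID_RE = re.compile(
    r"[0-9a-fA-F]{8}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{4}-[0-9a-fA-F]{12}")
VOLATILE_HEADERS = {"date"}              # values that change on every run

def normalize(headers: dict, body: str):
    """Drop volatile headers and replace generated GUIDs with a fixed token."""
    kept = {k.lower(): v for k, v in headers.items() if k.lower() not in VOLATILE_HEADERS}
    return kept, GUID_RE.sub("<guid>", body)

def responses_match(expected, actual) -> bool:
    """Compare status lines exactly, then headers and bodies after normalization."""
    exp_status, exp_headers, exp_body = expected
    act_status, act_headers, act_body = actual
    return (exp_status == act_status
            and normalize(exp_headers, exp_body) == normalize(act_headers, act_body))

def run_step(send, expected, is_stale, timeout=5.0, interval=0.05) -> bool:
    """Send a request, re-sending it while the (assumed) is_stale check says the
    result is stale, then compare the last response against the expected one."""
    deadline = time.monotonic() + timeout
    while True:
        actual = send()
        if not is_stale(actual) or time.monotonic() >= deadline:
            return responses_match(expected, actual)
        time.sleep(interval)
```

Retrying with a deadline, rather than sleeping a fixed amount before every request, keeps fast scenarios fast while still tolerating background indexing.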

There are some things here that may not be immediately apparent. We are doing pure state-based testing; in fact, this is black-box testing. The scenarios define the external API of the system, which is a nice bonus.

We don’t care about the actual implementation. Look at the unit test: we need to set up a DB instance, start the background threads, etc. If I modify the DocumentDatabase constructor or the initialization process, I need to touch every test that uses it. I can try to encapsulate that, but in many cases you really can’t do it up front. SpinBackgroundWorkers, for example, is required in only some of the unit tests, and it is a late addition, so I would have to go and add it to each of the tests that require it.

Because the scenarios don’t have any intrinsic knowledge about the server, any required change is something you would make in a single location, nothing more.

Users can send me failure scenarios. I am using this extensively with NH Prof (InitializeOfflineLogging), and it is amazing. When a user runs into a problem, I can ask them to send me a Fiddler trace of the issue, and I can turn that into a repeatable test in a matter of moments.

I actually thought about using Fiddler’s saz files as the format for my scenarios, but I would have to actually understand them first. :-) It doesn’t look hard, but flat files seemed easier still.

Actually, I went ahead and made the change, and now I have even less friction: just record a Fiddler session, drop it in a folder, and I have a test. It turned out that the Fiddler format is very easy to work with.
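
For reference, a sketch of reading such a session archive in Python, assuming the commonly seen SAZ layout: a ZIP archive whose `raw/` folder holds `<n>_c.txt` (the raw client request) and `<n>_s.txt` (the raw server response) for each session, plus metadata files that are ignored here. `read_saz` is a hypothetical helper, not Fiddler's API, and the layout is an assumption rather than something the post spells out.

```python
import re
import zipfile

# Assumed SAZ layout: raw/<n>_c.txt = request, raw/<n>_s.txt = response.
SESSION_FILE = re.compile(r"^raw/(\d+)_([cs])\.txt$")

def read_saz(path_or_file):
    """Return (request_text, response_text) pairs from a .saz archive, in session order."""
    sessions = {}
    with zipfile.ZipFile(path_or_file) as archive:
        for name in archive.namelist():
            m = SESSION_FILE.match(name)
            if m:                        # skip metadata (*_m.xml) and other entries
                text = archive.read(name).decode("utf-8", errors="replace")
                sessions.setdefault(int(m.group(1)), {})[m.group(2)] = text
    # Keep only complete sessions, ordered numerically by session number.
    return [(s["c"], s["s"]) for _, s in sorted(sessions.items())
            if "c" in s and "s" in s]
```

Each pair maps directly onto one `.request` / `.response` step like the flat files above, so both formats can share the same runner.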