Production Test Run: Rowhammer in Voron

Rowhammer is a type of attack that exploits the way DRAM is built. A specific access pattern, repeatedly hitting the same rows of memory, can cause charge to “leak” into nearby memory cells, causing bit flips. The issue we were facing in production ended up being very similar.

The initial concern was that a database’s on-disk size was very large, enough that we started a serious investigation into what exactly was taking all this space. Our internal reporting said: nothing. And that was very strange. Looking at the actual file usage, we had a lot of transaction journals taking a lot of space there. By a lot, I mean that the data file in question was 32 MB and the journals were taking over 15 GB in total. To be fair, some of them were kept around to be reused, but that was still bad.

It took a while to figure out what was going on. This particular Voron instance was used for a map/reduce index on a database that had high write throughput. Because of that, the indexing was always active. So far, so good: we have several other such instances, and they don’t exhibit this behavior. What was different about this index is that, due to the shape of the index and the nature of the data, we always ended up modifying the same (small) set of data.

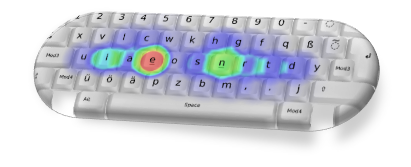

This index sums up a number of events and aggregates them based on when they happened. This particular system handles about a hundred updates a second on average, and can peak at about five to seven times that. The index gives us answers to questions such as “how many events of each type happened today”. This means that there is a lot of locality in the updates. And that was the key.

Even though this index (and the Voron storage that backed it) was experiencing a lot of writes, these writes almost always happened to the same set of data (basically updating the counters). That means that there was actually just a very small set of pages in the data that were modified. And that set off a chain of behaviors that resulted in a lot of disk space being used.

- A lot of data is modified, meaning that we need to write a lot to the journal on each transaction.

- Because the same data is constantly modified, the total amount of modified bytes stays below a certain threshold.

- Writes are constant.

- Flushing the data to disk is expensive, so we try to avoid it.

- We can only delete journals after we flushed the data.

Because we try to avoid flushing to disk if we can, we only do that when there is enough idle time or when enough data has been modified. In this case, there was no idle time, and the amount of data that was modified was too small to hit the limit.
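The flush policy described above can be sketched roughly as follows. This is a minimal illustration and not Voron's actual code: the names `FlushState`, `should_flush`, `IDLE_SECONDS` and `DIRTY_BYTES_LIMIT`, as well as the threshold values, are assumptions made up for the example.

```python
from dataclasses import dataclass

# Hypothetical thresholds, chosen only for illustration.
IDLE_SECONDS = 5.0                    # how long without writes counts as "idle"
DIRTY_BYTES_LIMIT = 64 * 1024 * 1024  # flush once this much data has been modified


@dataclass
class FlushState:
    dirty_bytes: int                 # size of the pages modified since the last flush
    seconds_since_last_write: float  # time elapsed since the most recent write


def should_flush(state: FlushState) -> bool:
    """Flush only when the store is idle or enough data has been modified."""
    if state.seconds_since_last_write >= IDLE_SECONDS:
        return True
    return state.dirty_bytes >= DIRTY_BYTES_LIMIT


# The workload from this post: constant writes that keep hitting the same few pages.
hot_counters = FlushState(dirty_bytes=256 * 1024, seconds_since_last_write=0.01)
print(should_flush(hot_counters))  # False
```

With constant writes to a small set of pages, neither condition is met, so the flush keeps being postponed while the journals keep growing.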

The system would actually balance itself out eventually (which is why it stopped at around 15 GB of journals). At some point we would either hit an idle spot or the buffer would hit the limit and we would flush to disk, which allowed us to free the journals, but that happened only after we had accumulated quite a few. The fix was to add a limit to how long we’ll delay flushing to disk in such a case, and it was quite trivial once we figured out what exactly all the different parts were doing.
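To get a feel for the effect of such a limit, here is a small simulation. It is a sketch under stated assumptions, not a model of Voron's internals: the function `peak_journal_bytes`, its parameters, the 8 KB of journal per transaction and the 30 second cap are all hypothetical. Only the rate of about a hundred updates a second comes from the system described here. The simulation also leaves out the occasional idle spot that eventually let the real system balance itself out.

```python
def peak_journal_bytes(seconds, tx_per_second, journal_bytes_per_tx,
                       dirty_limit, hot_set_bytes, max_flush_delay=None):
    """Return the largest amount of unflushed journal data seen during the run.

    Simulates one-second ticks of constant writes to the same hot set of pages.
    """
    journal = 0
    peak = 0
    since_flush = 0
    for _ in range(seconds):
        journal += tx_per_second * journal_bytes_per_tx
        since_flush += 1
        peak = max(peak, journal)
        # The dirty data never exceeds the hot set: the same pages are rewritten.
        dirty = hot_set_bytes
        overdue = max_flush_delay is not None and since_flush >= max_flush_delay
        if dirty >= dirty_limit or overdue:
            journal = 0      # flushing lets us delete the journals
            since_flush = 0
    return peak


# Hypothetical workload: one hour of writes at 100 transactions per second.
workload = dict(seconds=3600, tx_per_second=100, journal_bytes_per_tx=8192,
                dirty_limit=64 * 1024 * 1024, hot_set_bytes=256 * 1024)
without_cap = peak_journal_bytes(**workload)
with_cap = peak_journal_bytes(**workload, max_flush_delay=30)
print(without_cap, with_cap)
```

Without the cap, the journals grow for the whole simulated hour, because neither flush condition is ever met. With the cap, they never hold more than 30 seconds' worth of writes.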

More posts in "Production Test Run" series:

- (26 Jan 2018) Overburdened and under provisioned

- (24 Jan 2018) The self flagellating server

- (22 Jan 2018) Rowhammer in Voron

- (18 Jan 2018) When your software is configured by a monkey

- (17 Jan 2018) Too much of a good thing isn’t so good for you

- (16 Jan 2018) The worst is yet to come
