Interview questionStackoverflow THAT

We are doing perf testing right now, and we are looking into real world datasets to play around with. Luckily for us, Stackoverflow have regular data dump of size significant enough to be useful for our experiments.

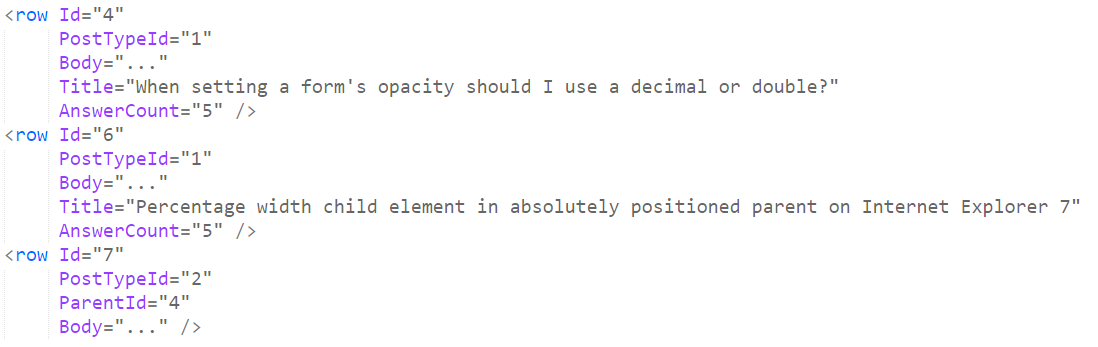

The file that I’m currently looking it is the Posts.xml file, which is about 45GB in size, and looks roughly like this (lot of stuff removed to make the point).

Since Stackoverflow is using relational database, their output is also relational. You can see that each element is a single row, and you have the ParentId in row #7 that points back to row #4.

Basically, row #4 is the question, and row #7 is one of the answers.

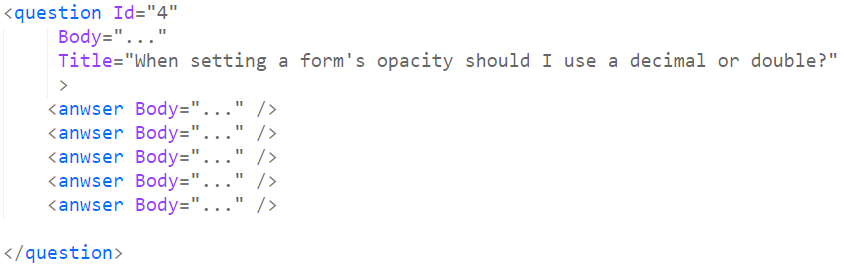

What I want it to take all of this data and move it into a more document format. In other words, what I want to have all the answers for a question contained within the question, something like this:

The fun part here is that this is a pretty big file, and we are writing the output into a GzipStream, so we don’t really have the option of saving / modifying midway through. Once we have written something out to the GzipStream, it cannot be changed.

So we need to find a way in which we can group all the answers under their questions, but at the same time, the file size is big, much bigger than the memory I have available, so we can’t just keep it all in memory and write it out in the end.

How would you solve this issue? My attempt is currently sitting at roughly 10GB of RAM used after processing about 30GB of XML, but I have to admit that I have thrown it together rather quickly, since I just needed the data and a quick & dirty solution is just fine here.

More posts in "Interview question" series:

- (29 Sep 2016) Stackoverflow THAT

- (30 Mar 2015) fix the index

- (27 Aug 2014) That annoying 3rd party service

Comments

I have no idea about the data characteristics in the Stackoverflow dump, and whether more optimizations can be applied, however, with a generic solution, I would:

Great! I didn't know that you could make online SQL-queries against the databases: https://data.stackexchange.com/stackoverflow/queries http://meta.stackexchange.com/questions/2677/database-schema-documentation-for-the-public-data-dump-and-sede

Since it's relational data and this is basically a sorting problem, my approach would be to load it into an SQL Server instance or similar and then write it out in a more appropriate order: ORDER BY COALESCE(ParentId, Id), PostTypeId. That should put each question with its answers and the set can then be processed with minimal RAM.

If the intent is to write a custom tool, however:

This assumes that our XML source is a random-access stream rather than a forward-only stream, and that the XML parser exposes stream offset information for tags. Alternatively we could generate tabular temporary files and index those instead.

If our parser lets us identify start and end offsets of attribute values, things get even faster because we can pull out the body value directly in step 3, and since it's already XML-encoded it can be written directly to the output stream.

Since the data is relational I would use relational db to store it. I would use https://github.com/ren85/linqdb since it's super easy for C# developer. So I would have two tables Questions and Answers and once the data is there iterate the Questions table and for each question query for its answers. (Also I would store text as byte arrays in linqdb to avoid full text index).

Parse it twice with a XML SAX Parser?

In the first parse, create something to store just the count for each parent Id in a dictionary so you know how many answers there are for each question. In the second parse, start to build your ouput in memory and check if all answers have been read (hopefully they would be close to the questions). If a complete question has been read and all the answers attached then write the question with answers out to the stream, clearing the memory.

You can't do that in a single pass. You basically need questions and answers sorted by question id, questions by row id and answers by parentId, then merge answers and questions using the basic merge algorithm. You can store questions in RavenDB using document id in the form question/<row id> and answers in the form answer/<parentId>/<row id>, read them ordered by document id using the streaming API and merge them. You can also store all rows into a SQL Server table using row id as primary key, create an index on parentId and read them using one single FOR XML select statement, SQL Server would likely perform a MERGE JOIN.

Since you have the answercount plus presumably spacial locality in the data, then the problem is not bigger than keeping the title entry in memory until all answers have been read.

Here is the algorithm :

I implemented a more imaginative solution based on the file system. I made some assumptions: input data set is ordered by row id and answers row id are greater than the corresponding question row id. Here is the code https://gist.github.com/jesuslpm/07fb8121b1747bd69fb43581c4a576d9

That's a pretty big assumption, which I don't think is true. It's likelier than the data is in the natural database order, which depends on when the post was created.

So, every post has an answer count, shouldn't it be possible to create a dictionary of posts and answers, and flush a post to the output stream only if all answers have been seen?

this code doesn't work if the hierarchy is deeper than 2 levels, but could be adjusted for that.

Pop Catalin, That assumes that all the answers to a question would fit into your batch size. It is pretty common to have questions that have answers years afterward, so they are very spread out.

Alex, Yes, throwing it through something that would allow sorted access makes things much easier. However, the intent is to not use an existing db.

And the major problem with your suggestion is that XmlReader does buffering, so you can't tell the exact position. Writing to a dedicated file the data and writing the positions to an index, then sorting the index would work, though

ren, Interesting, you would have to create a composite key there, and at the scale we are talking about, you'll be waiting for this data to get into linqdb for a LONG while (it creates an index per value, which is _expensive_.

Ian, You already have the answer count in the data itself. The problem is that it is likely that there is a big gap between the question being asked and all its answers.

Ian, Also, an answer may appear before the question

Dennis, There ISN'T spatial locality.

Rémi, That would create millions of files, and will likely bork the file system

Jesús, Answer id may be smaller than question id.

Remco, That was my first attempt, but an answer may exist to a question that was posted years ago, so there is a huge gap and a lot of stuff you need to remember yet.

@Ayende, that's the reason for the document merge as a final step, merge documents with the same question ID from multiple temporary files. The document will contain the question an maybe some answers, the documents with same question ID from other temporary files will only contain answers .

I would create an index where the post Id, parent id and the xml node start file position is stored. This list should be pretty small and be able to sort in memory. Then I can group by post id and parents and sort ascending. Once I have that I can scan through the xml file mostly sequentially and stream out the resulting xml file as needed while taking advantage of the OS file system cache which will do a little read ahead and cache already read section of the file so that although I am seeking around a lot it will be pretty fast.

A creative way to solve the XmlReader buffering problem that obscures the position is to write the rows out into another XML file. That way you can record the positions in the new file. It's a costly but rather quick hack. I'd be concerned about the random IO that this causes while reading back, though.

My solution: If perf is not a concern I'd put this into an indexed database. This optimizes for dev time. If this is supposed to be fast I'd find (or write) and external sorting algorithm, sort the posts and merge them together in a streaming fashion. Should be hard to do better than this since fundamentally we need to shuffle all the data around anyway. I'd probably use Protobuf as the intermediate storage format. Various variants of this are possible.

It's probably best to move the text bodies along with the metadata. Not moving them around causes lots of random IO again.

XmlTextReader exposes line and character info. It's probably possible to provide it a TextReader implementation which tracks the stream offsets of the last X linebreaks and enables translation of line/character numbers to stream offsets within a limited range of history. Alternatively, if the XML format is simple enough it might be an option to write a quick'n'dirty stream-scraper which parses a limited yet sufficient subset of XML...

But unless we really want to squeeze it, intermediate temporary files would indeed be my preferred approach!

Have you tried asking Stackoverflow? :)

How can answer id be smaller than question id if id is auto increment? That would mean that you can answer a question that is not posted yet

The code I posted previously what it really needs to work is that answers come later than corresponding questions. But it is not difficult to make it to work when an answer comes before the corresponding question, I added a modified version program2.cs to https://gist.github.com/jesuslpm/07fb8121b1747bd69fb43581c4a576d9 that works even when that happens, unfortunately it needs to read posts.xml twice.

@Jesús López, it can happen when using Merge Replicated Servers, that each have their own identity ranges for example. Question is inserted on one server and answer on another.

I'd approach this problem as a trade-off between memory and disk. If you have 135GB (or so) of free disk space, I would...

I'd probably do the sorts using unix or powershell - but if you programmed it yourself, I'd expect it to have memory usage well under 100MB as the file reading is forward only and can be streamed. SSD probably preferred - but since all access is sequential, even HDD would perform reasonably well.

Jesus, I assume that this related to edits, you don't update the row, you create a new one? Not really sure.

Jesus, You'll be writing a LOT of small files, that typically has a bad result for the FS in question

@Pop Catalin. There are about 32 million posts on SO and only about 960 answers have Id < ParentId. It doesn't seem to be caused by replication.

@Ayende you asked for a quick and dirty solution. Not for an efficient solution. My solution is not efficient but do the work in a few lines of code.

Jesus, That might actually fail, hard, on large number of files See: http://stackoverflow.com/a/291292

I'm actualy curious wha's the disk space usage when using files to store questions considering min disk allocation per file is 4KB without considering the file index. A quick calculation would show a minimum of 135 GB for 35M questions/answers.

@Ayende, it will work. I'm not storing all files in a single folder, I'm storing up to 1000 files and up to 1000 subdirectories in an single directory. http://stackoverflow.com/a/26205776/4540020

@Pop Cătălin there are 32.5 million posts on SO, 12.5 million of them are questions. I write files only for questions, so without considering index files the minimum space would be 50 Gb

GOTO 2016 • Exploring StackOverflow Data • Evelina Gabasova https://www.youtube.com/watch?v=qlKZKN7il7c

Interesting analysis in F#

I would read X chars from the file at a time, If the input contains valid XML then process the input and split the XML elemements as described below, if there are no valid xml elements then read another X chars from the stream etc.. if the input contained a fragment of additinal xml then reset the seek position to the end of the last valid xml element

Since the number of answers to a question are known and the questions to answers have some locality , it is probably safe to start populating a in memory dictionary with the question,list<answers>. as you encounter question , add them to the dictiionary.

As you ecounter answers , find the question in the dictionary and add them - when a question reaches its full answer capacity , pop it from the dictionary - format and write to the gzip stream

Comment preview