Voron InternalsReducing the journal

We spend a lot of time trying to reduce our sync I/O cost with Voron, namely, the actual journal write to the disk. This is very expensive, because we have to hit the actual disk, forgoing any buffering.

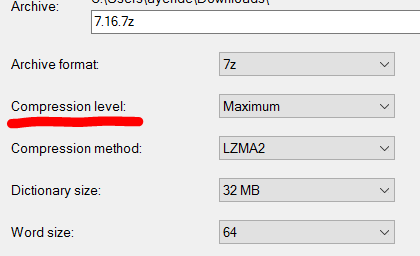

So anything that can reduce that cost is a really good idea. We spent some time looking at dynamic compression ratios heuristics, to see if it is worth it. Basically, we tried to figure out which option to use:

The idea is that based on the speed of the hard disk in use, we can decided whatever it is worth it or not to spend more time compressing the journal entry before saving it. We tested a system where the I/O duration would be balanced against compression speed and size, and adjust automatically.

It failed, horribly. Basically, even on the fastest drives we could find, it was almost always better to compress at the highest level, because the cost of going to disk is so high.

There is another aspect of this, however. The cost of going to disk isn’t linear to the size you are writing. I used the example of putting your groceries in the trunk. The fuel cost of the trip is not really going to be dominated by the weight of the groceries. After writing this statement, I fact checked myself. According to Auto Blog, each 100 pounds (50 KG) of added weight will increase the fuel utilization by about 1%. What is going to dominate the cost, however, is how much do you have to drive.

There is another aspect of this, however. The cost of going to disk isn’t linear to the size you are writing. I used the example of putting your groceries in the trunk. The fuel cost of the trip is not really going to be dominated by the weight of the groceries. After writing this statement, I fact checked myself. According to Auto Blog, each 100 pounds (50 KG) of added weight will increase the fuel utilization by about 1%. What is going to dominate the cost, however, is how much do you have to drive.

In the same manner, writing to the disk is impacted by the amount you write, but writing 4KB or 20KB has roughly the same cost anyway. Writing 2 MB is much longer, but not as much as you would expect. Note that all of those numbers assume no buffering all the way to disk, and using DMA.

We then tried to see what happen if we would just avoid compressing small writes. Anything smaller than 64KB is going to be compressed to less than 64KB, but the actual cost of writing to disk isn’t going to change, so we can save the compression costs. That actually improved performance a little bit for fast drives, but it hurt us on slow ones.

I had an interesting discussion with Alex on the usage of diff compression in the journal. This can take advantage on the fact that in many cases, we don’t modify full pages, so we can write just the changes out to disk. He was kind enough to include a few implementations of that for us to look at, those are RLE0 (Zero Run Length Encoding) implementations, and I’ll use RLE to refer to it from now on.

Reducing I/O is always good, and this promised to give a substantial boost, but the actual design details that cropped us are really interesting. Diff compression can be simple, like the RLE0 in this link, effectively, outputting something like:

... [x bytes unchanged][y bytes changed][byte 1 .. y][z bytes unchanged] ...

Or they can be much more complex, like bsdiff or xdelta. RLE handles the scenario where some bytes changes nicely, but fails badly if there is a single added byte (since it simply check for equality, we’ll see all the bytes are different). Algorithms like bsdiff or xdelta can handle much more complex differences, but they are drastically more expensive. For my purposes, bsdiff has runtime complexity of O( 2N * logN ) and memory utilization of 17N. It other words, to get the diff of 4 pages, we’ll need 272KB and about 230K operations.

Algorithms like that are usually meant for distributions. In other words, they are meant for cases where you can spend as much time as you want generating the diff, and you benefit from reduced download times. A modern usage of those is the Courgette project, for reducing the size of Chrome updates. It doesn’t matter if generating the update takes 3 hours, since it will be downloaded millions of times, and a 600KB saved in this manner will pay themselves many time over.

But those kind of costs are not something that we can pay. Analysis of our memory usage patterns also showed that in many cases, we are using mostly fixed addressing. In other words, we’ll typically change only small parts of the page, and we don’t tend to have moving writes. When we do (typically on defrag), we do them on a page boundary, so RLE implementation should generate good savings.

We have an implementation that we are currently testing, but while you can read the code, what is more interesting is the assumptions that we are making.

We scan the original and modified buffers using longs. We can safely assume that the buffers we scan are always sized in pages, so we don’t need to worry about buffers whose size isn’t divisible in sizeof(long), this make the code much simpler. We also don’t bother to encode identical parts, instead, we record the (start, count, raw bytes) differences from the original. There is a small optimization there for long runs of zeros (to make it cheaper to delete data), but beyond that, we do very little. I’ll have a separate post to dive into the actual implementation details and considerations that drove it, but that is for later.

An important reason why we don’t keep track of the unmodified data is that we don’t need it, and that we can’t actually trust the original data. Consider the case where we actually need to use the journal to recover. We do that by running through all of the transactions, and applying the diffs to the data. The problem is that we may fail midway through the recovery process, so the state of the data is not known. When applying a diff, if we use the original data, we might actually see data from a later transaction (which was applied, but we don’t know about it since we crashed before we can make a note of that). Because of this, we only use the modified data, which is safe to apply multiple times. Note that this assumes that modifying a page can not corrupt the page. In other words, if I have a 4 KB page, and I write a value to the 3rd byte, it isn’t going to cause any change to any other byte. Aside from that, we don’t require that the bytes that we modified will be there on restart, because we’ll overwrite them until we are sure that we properly synced them.

Another aspect of the diff operation that we aren’t actually all that worried about the data size (which is interesting, since we really want to reduce it), the reason for that is that we are going to throw all the diffed data into the compressor anyway. The idea is that even after the diff, we are still likely to find data to compress among the modifications on the transaction.

Currently, the steps to write a transaction to disk are:

- Get all the modified pages.

- For each of those, compute the difference between it and the previous version of that page.

- Compress all the diffs of all the pages.

- Write the compressed data to disk in a safe manner.

More posts in "Voron Internals" series:

- (13 Sep 2016) The diff is the way

- (07 Sep 2016) Reducing the journal

- (31 Aug 2016) I/O costs analysis

- (30 Aug 2016) The transaction journal & recovery

- (29 Aug 2016) Cleaning up scratch buffers

- (26 Aug 2016) MVCC - All the moving parts

Comments

https://www.infoq.com/news/2016/09/facebook-zstandard-compression?utm_campaign=infoq_content&utm_source=infoq&utm_medium=feed&utm_term=global

Even though you want to make it small, the tradeoff involved is faster CPU vs. smaller IO. But between smaller IO and a bit bigger IO there is equal costs. So in the general sense between 2.8 and 2.1 there is probably a very small gain than to avoid writting altogether (if possible). In general though the information written to the journal is becoming more and more compressed over time in its default layout (which makes general purpose algorithms less effective over time); eventually we will look into ZStandard but it is definitely not something that promise us to win much today in the current state.

Cool, you've taken up this suggestion.

Since I did not see any follow up after my last posted comment, I thought you were not going to do anything with it.

Now both my comments on journal compression schemes seem to be finding their way into Raven DB https://ayende.com/blog/164738/fruits-of-the-poisonous-tree-voron-performance-refactoring?key=9af8a35bda6346ebbb5490108ebc3f5f#comment7.

I am curious if (and how much) this dif compression will improve Voron journal write performance.

@Alex at the moment we were thinking of a different approach (to keep a few optimizations at the moment of recovery) and therefore a bit more balanced in the tradeoff. We just prototyped the full CPU vs IO version of the tradeoff for testing purposes.

Journal compression was needed because we are investigating a very different internal layout for the BTREE nodes that allow us to go with nodes with far bigger ( aka insane +2500 keys per node ) fanout, cache aware O(w) search per node instead of cache unfriendly O(log n) --- essentially free ---, and bigger node size (128kb per node) which improves write amplification. With our current simulations we can pack 1.5M keys on a 2 levels tree, we are far from decide to use it in the 4.0 timeframe, but is a bet we decided we wanted to make just in case it ends up being a viable direction.

@Federico, do you mean some type of compressed trie as the node index?

@alex it is a cache concious trie yes. The upside is that it is very locally aware, so inserts/upserts/deletes ensure that changes are very localized. Because of the cache concious structure lookups and creation are very fast too. While it can take between 20ns to 50ns to compare a single 60 bytes key in like 18-24ns on a cold node (and we have log(n) of those to do on edge nodes), the same structure written in C can do a whole lookup in 50ns on a tree of 600K variable size keys. For comparison an in-memory btree written in C++ takes 1080ns to do the same lookup.

Needless to say that we will be in a great position if we only achieve 0.5x the throughput of the original structure (which is totally doable). However, our most problematic issue today is write-amplification (when not in memory of course) so even if the structure is very fast, there is no guarantee that we can achieve better speeds. That's the reason we are treating this a highly experimental stuff and not in the roadmap for the time being. As you might guess, we will never ever add the intrisec complexity such a structure bring with itself, if it is not offsetted by a very measurable gain.

@alex btw we invested in prototyping other types of TRIEs before but there is always a hidden cost somewhere. We investigated (we actually built a prototype of it) of a very interesting TRIE with (and I am not joking) O(1) high probability expected cost for lookups. The caveats, we had to write the key at the data level (which is very cache unfriendly). The key setup cost was awful and the write amplification worst, so inserts where 3x slower than the naive BTREE approach, and queries not that much faster.

Did we learn from it? Yes, a lot!!. Was worth the effort to prototype? Absolutely; this work spawned the discussion on tables which are pervasive now through Voron. Was it complex? Insane. Was it useful for Voron? Not at all. But you have to prototype and understand how it behave to know that first hand :)

Hi Federico, I think this is amazing, why not to use this as configurable option? there are some databases that is 99% read and only 1% write, in this case, I'm willing to pay the 3x slower writes to gain faster reads. why not to consider this?

Uri, The problem is that this isn't really something that you can configure. It is a very deep factor in the db. This is the kind of thing that we make assumption on how it works to be effective. For example, if the cost of access is O(logN) vs. O(N) vs. O(1) - that result in very different behaviors, and that isn't just something that you can / want to just configure.

@Uri we are moving slowly into bringing new data structures tuned for their specific uses, but as Oren noted they are very deep, so we approach the problem measuring. For the typical (and sometimes not so typical case) where are our limits, we measure to know which ones are the heaviest and move to unblock them; measuring again you just found a new blocker that was on the noise level after the one you just unblocked. Hash structures comes to my mind, we have several places where structures optimized for hash storage would be a sure win, but we are so far away from them to become a measurable blocker that there is no reason to mess with the underlying assumptions.

Just to exemplify the issue. In the 3.x branch the biggest performance blocker is storing the data using JSON. Unblocking that in 4.x opened up so many (smaller) blockers that were just hidden, that we could optimize many different subsystems and start thinking into deep changes that wouldnt have brought anything to the table before. Who knows, maybe at 5.x or even 6.x these kind of control could actually bring something to the table, today measurements show that it is just not big enough.

Thanks for the clarification, Awesome work!

Comment preview