We spend a lot of time trying to reduce our sync I/O cost with Voron, namely, the actual journal write to the disk. This is very expensive, because we have to hit the actual disk, forgoing any buffering.

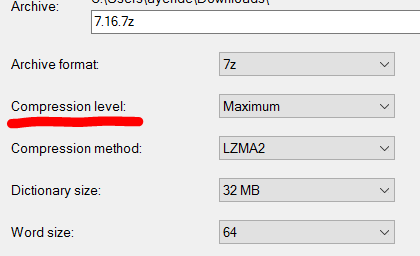

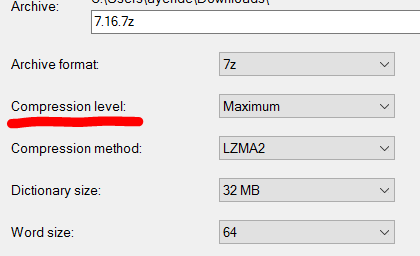

So anything that can reduce that cost is a really good idea. We spent some time looking at dynamic compression ratio heuristics, to see if they are worth it. Basically, we tried to figure out which option to use:

The idea is that, based on the speed of the hard disk in use, we can decide whether or not it is worth spending more time compressing the journal entry before saving it. We tested a system where the I/O duration would be balanced against compression speed and size, and would adjust automatically.
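To make the trade-off concrete, here is a minimal sketch of that kind of balancing, not the system we actually tested. The `Candidate` type, the `pick_level` function, and the disk model (a fixed latency plus size divided by throughput) are all assumptions made for illustration, and the numbers in the usage note are invented, not measurements.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    """One compression option, with costs measured ahead of time (hypothetical)."""
    level: int
    compress_seconds: float
    compressed_bytes: int


def estimated_write_seconds(size_bytes: int, fixed_latency_s: float,
                            bytes_per_second: float) -> float:
    # Assumed disk model: a fixed cost per sync write plus a size-dependent part.
    return fixed_latency_s + size_bytes / bytes_per_second


def pick_level(candidates: List[Candidate], fixed_latency_s: float,
               bytes_per_second: float) -> int:
    """Return the level whose compression time plus estimated write time is lowest."""
    best = min(
        candidates,
        key=lambda c: c.compress_seconds
        + estimated_write_seconds(c.compressed_bytes, fixed_latency_s, bytes_per_second),
    )
    return best.level


# Invented numbers for a 1 MB journal entry, for illustration only.
options = [
    Candidate(level=0, compress_seconds=0.0, compressed_bytes=1_000_000),
    Candidate(level=1, compress_seconds=0.002, compressed_bytes=400_000),
    Candidate(level=9, compress_seconds=0.010, compressed_bytes=300_000),
]
slow_disk_choice = pick_level(options, fixed_latency_s=0.005, bytes_per_second=10e6)
fast_disk_choice = pick_level(options, fixed_latency_s=0.005, bytes_per_second=1e9)
```

With these made-up inputs the model picks the highest level on the slow disk and no compression on the fast one. Such a model is only as good as its cost estimates.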

It failed, horribly. Basically, even on the fastest drives we could find, it was almost always better to compress at the highest level, because the cost of going to disk is so high.

There is another aspect of this, however. The cost of going to disk isn’t linear in the size you are writing. I used the example of putting your groceries in the trunk. The fuel cost of the trip is not really going to be dominated by the weight of the groceries. After writing this statement, I fact checked myself. According to Auto Blog, each 100 pounds (about 45 kg) of added weight will increase the fuel utilization by about 1%. What is going to dominate the cost, however, is how far you have to drive.

In the same manner, writing to the disk is impacted by the amount you write, but writing 4KB or 20KB has roughly the same cost anyway. Writing 2 MB takes much longer, but not as much longer as you would expect. Note that all of those numbers assume no buffering all the way to disk, and using DMA.

We then tried to see what happens if we just avoid compressing small writes. Anything smaller than 64KB is going to be compressed to less than 64KB, but the actual cost of writing to disk isn’t going to change, so we can save the compression costs. That actually improved performance a little bit for fast drives, but it hurt us on slow ones.
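The experiment amounts to a size check in front of the compressor. The following is a minimal sketch of that check, assuming a hypothetical `prepare_journal_entry` helper and using `zlib` from the Python standard library purely as a stand-in compressor.

```python
import zlib
from typing import Tuple

SMALL_WRITE_THRESHOLD = 64 * 1024  # the 64KB cutoff from the experiment above


def prepare_journal_entry(payload: bytes) -> Tuple[bool, bytes]:
    """Return (is_compressed, bytes_to_write) for a journal entry.

    Hypothetical helper: small payloads are written as-is, on the theory that
    shrinking them does not change the cost of the disk write.
    """
    if len(payload) < SMALL_WRITE_THRESHOLD:
        return False, payload
    # zlib is only a stand-in for whatever compressor is really in use.
    return True, zlib.compress(payload, 9)


small_flag, small_out = prepare_journal_entry(b"a" * 1000)
big_flag, big_out = prepare_journal_entry(bytes(128 * 1024))
```

The flag has to be stored alongside the entry so that the reader knows whether to decompress it.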

I had an interesting discussion with Alex on the usage of diff compression in the journal. This can take advantage of the fact that in many cases, we don’t modify full pages, so we can write just the changes out to disk. He was kind enough to include a few implementations of that for us to look at. Those are RLE0 (Zero Run Length Encoding) implementations, and I’ll use RLE to refer to them from now on.

Reducing I/O is always good, and this promised to give a substantial boost, but the actual design details that cropped up are really interesting. Diff compression can be simple, like the RLE0 in this link, effectively outputting something like:

... [x bytes unchanged][y bytes changed][byte 1 .. y][z bytes unchanged] ...

Or it can be much more complex, like bsdiff or xdelta. RLE handles the scenario where some bytes change nicely, but fails badly if there is a single added byte (since it simply checks for equality, we’ll see all the bytes after it as different). Algorithms like bsdiff or xdelta can handle much more complex differences, but they are drastically more expensive. For my purposes, bsdiff has runtime complexity of O(2N log N) and memory utilization of 17N. In other words, to get the diff of 4 pages, we’ll need 272KB and about 230K operations.
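The single added byte problem is easy to see with a small experiment. This sketch assumes a 4KB page filled with a repeating 0..255 pattern (so that neighboring bytes always differ) and a hypothetical `differing_positions` helper that does the same position-by-position equality check a simple RLE diff relies on.

```python
def differing_positions(original: bytes, modified: bytes) -> int:
    """Count positions where the two equally sized buffers hold different bytes."""
    return sum(1 for a, b in zip(original, modified) if a != b)


# A 4096 byte page in which every byte differs from its neighbor.
page = bytes(range(256)) * 16

# Case 1: overwrite four bytes in place.
overwritten = bytearray(page)
overwritten[100:104] = b"\xff" * 4

# Case 2: insert one byte at offset 100 and drop the last byte to keep the page size.
shifted = page[:100] + b"\xff" + page[100:-1]

in_place_changes = differing_positions(page, bytes(overwritten))
shifted_changes = differing_positions(page, shifted)
```

The in-place overwrite differs in 4 positions, while the one byte insertion differs in 3,996 positions, which is everything from the insertion point to the end of the page.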

Algorithms like that are usually meant for distribution. In other words, they are meant for cases where you can spend as much time as you want generating the diff, and you benefit from reduced download times. A modern usage of those is the Courgette project, for reducing the size of Chrome updates. It doesn’t matter if generating the update takes 3 hours, since it will be downloaded millions of times, and the 600KB saved in this manner will pay for themselves many times over.

But those kinds of costs are not something that we can pay. Analysis of our memory usage patterns also showed that in many cases, we are using mostly fixed addressing. In other words, we’ll typically change only small parts of the page, and we don’t tend to have moving writes. When we do (typically on defrag), we do them on a page boundary, so an RLE implementation should generate good savings.

We have an implementation that we are currently testing, but while you can read the code, what is more interesting are the assumptions that we are making.

We scan the original and modified buffers using longs. We can safely assume that the buffers we scan are always sized in pages, so we don’t need to worry about buffers whose size isn’t divisible by sizeof(long), which makes the code much simpler. We also don’t bother to encode identical parts. Instead, we record the (start, count, raw bytes) differences from the original. There is a small optimization there for long runs of zeros (to make it cheaper to delete data), but beyond that, we do very little. I’ll have a separate post to dive into the actual implementation details and considerations that drove it, but that is for later.
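As a rough illustration of those assumptions, here is a minimal sketch of such a scan in Python. It is not the implementation under test: the `diff_page` name and the record layout are made up for this example, 8 bytes is assumed for sizeof(long), and the zero run optimization is left out.

```python
from typing import List, Tuple

WORD = 8  # assumed sizeof(long)


def diff_page(original: bytes, modified: bytes) -> List[Tuple[int, int, bytes]]:
    """Return (start, count, raw bytes) records for the runs that differ.

    Buffers are compared one 8 byte word at a time, so every run starts and
    ends on a word boundary. Identical regions are not recorded at all.
    """
    if len(original) != len(modified) or len(modified) % WORD:
        raise ValueError("buffers must be the same size and a multiple of 8 bytes")
    old_words = memoryview(original).cast("Q")
    new_words = memoryview(modified).cast("Q")
    records = []
    run_start = None  # byte offset where the current run of changes began
    for i in range(len(new_words)):
        if old_words[i] != new_words[i]:
            if run_start is None:
                run_start = i * WORD
        elif run_start is not None:
            end = i * WORD
            records.append((run_start, end - run_start, bytes(modified[run_start:end])))
            run_start = None
    if run_start is not None:  # the last run reaches the end of the buffer
        records.append((run_start, len(modified) - run_start, bytes(modified[run_start:])))
    return records


original = bytes(4096)
changed = bytearray(original)
changed[3] = 1      # first word
changed[8] = 2      # second word, adjacent to the first, so both form one run
changed[4000] = 7   # an isolated word much later in the page
records = diff_page(original, bytes(changed))
```

Because the comparison works on whole words, a one byte change is recorded as an 8 byte run. That costs a few extra bytes per change but keeps the loop simple.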

An important reason why we don’t keep track of the unmodified data is that we don’t need it, and that we can’t actually trust the original data. Consider the case where we actually need to use the journal to recover. We do that by running through all of the transactions, and applying the diffs to the data. The problem is that we may fail midway through the recovery process, so the state of the data is not known. When applying a diff, if we use the original data, we might actually see data from a later transaction (which was applied, but we don’t know about it since we crashed before we could make a note of that). Because of this, we only use the modified data, which is safe to apply multiple times. Note that this assumes that modifying a page cannot corrupt the page. In other words, if I have a 4 KB page, and I write a value to the 3rd byte, it isn’t going to cause any change to any other byte. Aside from that, we don’t require that the bytes that we modified will be there on restart, because we’ll overwrite them until we are sure that we properly synced them.
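The "safe to apply multiple times" property can be sketched in a few lines. This assumes the (start, count, raw bytes) record shape described earlier and a hypothetical `apply_diff` function. The point is that applying a record only writes bytes and never reads what is already on the page.

```python
from typing import Iterable, Tuple


def apply_diff(page: bytearray, records: Iterable[Tuple[int, int, bytes]]) -> None:
    """Overwrite the recorded ranges of the page with the recorded bytes.

    Nothing here depends on the page's current content, so the outcome for
    the recorded ranges is the same no matter what state the page is in.
    """
    for start, count, raw in records:
        page[start:start + count] = raw


records = [(8, 8, b"ABCDEFGH")]

# Applying the same diff twice leaves the page exactly as applying it once.
page = bytearray(4096)
apply_diff(page, records)
after_first = bytes(page)
apply_diff(page, records)
after_second = bytes(page)

# A page in an unknown state (say, after a crash mid-recovery) still ends up
# with the right bytes in the recorded range, and the rest is left untouched.
unknown = bytearray(b"\xee" * 4096)
apply_diff(unknown, records)
```

A scheme that stored a delta against the original bytes (an XOR of old and new, for example) would not have this property, since the result would depend on what the page held before the diff was applied.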

Another aspect of the diff operation is that we aren’t actually all that worried about the data size (which is interesting, since we really want to reduce it). The reason is that we are going to throw all the diffed data into the compressor anyway. The idea is that even after the diff, we are still likely to find data to compress among the modifications in the transaction.

Currently, the steps to write a transaction to disk are:

- Get all the modified pages.

- For each of those, compute the difference between it and the previous version of that page.

- Compress all the diffs of all the pages.

- Write the compressed data to disk in a safe manner.
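Those four steps can be sketched end to end as follows. Everything here is an assumption made for illustration: the `HEADER` layout, the function names, `zlib` as a stand-in compressor, and `os.fsync` as a stand-in for an unbuffered write. It is not the actual journal format.

```python
import os
import struct
import zlib
from typing import Dict, Iterator, Tuple

WORD = 8
# Made-up record header: page number, start offset, byte count.
HEADER = struct.Struct("<QII")


def diff_runs(original: bytes, modified: bytes) -> Iterator[Tuple[int, bytes]]:
    """Yield (start, raw bytes) for each run of 8 byte words that changed."""
    run_start = None
    for offset in range(0, len(modified), WORD):
        changed = original[offset:offset + WORD] != modified[offset:offset + WORD]
        if changed and run_start is None:
            run_start = offset
        elif not changed and run_start is not None:
            yield run_start, bytes(modified[run_start:offset])
            run_start = None
    if run_start is not None:
        yield run_start, bytes(modified[run_start:])


def build_journal_entry(previous: Dict[int, bytes], modified: Dict[int, bytes]) -> bytes:
    parts = []
    for page_number in sorted(modified):                    # step 1: the modified pages
        old, new = previous[page_number], modified[page_number]
        for start, raw in diff_runs(old, new):              # step 2: diff against the previous version
            parts.append(HEADER.pack(page_number, start, len(raw)))
            parts.append(raw)
    return zlib.compress(b"".join(parts), 9)                # step 3: compress all the diffs together


def write_durably(path: str, entry: bytes) -> None:
    """Step 4: append the entry and force it to disk before returning."""
    with open(path, "ab") as journal:
        journal.write(entry)
        journal.flush()
        os.fsync(journal.fileno())
```

A caller would pass the previous and current versions of each modified page, keyed by page number, to `build_journal_entry`, and hand the result to `write_durably`.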