Voron Performance, the end is nigh (sequentially)

For the past few months, we have been doing a lot of work on performance for Voron. Performance for a storage engine is a very complex problem, composed of many competing issues.

For example, sequential writes vs. random writes, or the cost of writes vs. the cost of reads. In this post, I am going to focus specifically on the single scenario we have put most of our effort into: sequential writes.

Sequential writes are important because they represent the absolute sweet spot for a storage engine. In other words, this is the very first thing that needs to be fast, because if that ain’t fast, nothing else will be. And because this represents the absolute sweet spot, it is perfectly fine and very common to explicitly design your system to ensure that this is what you’ll use.

We have been doing a lot there. In particular, some of our changes included:

- Moving from the fsync model (very slow) to unbuffered, write-through writes.

- Moving to vectored writes, reducing system calls and the need for contiguous memory space.

- Writing our own immutable collection classes, meant specifically for what we’re doing.

- Reducing the number of tree searches.

- Optimizing the cost of copying data from the user’s stream to our own store.
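To make the first two items concrete, here is a minimal sketch of the difference between flushing after every write and opening the file in write-through mode and handing the OS several buffers in one vectored call. This is not Voron's code, which the post does not show. It assumes a POSIX system and uses `O_DSYNC` and `os.writev` as rough analogues; the function names and the page list are hypothetical. The unbuffered part (`O_DIRECT` on Linux) is left out because it requires sector-aligned buffers, which plain Python `bytes` do not guarantee.

```python
import os


def write_with_fsync(path, pages):
    """Buffered writes, each followed by an explicit fsync (the slow model)."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        for page in pages:
            os.write(fd, page)
            os.fsync(fd)  # one extra flush system call per page
    finally:
        os.close(fd)


def write_through_vectored(path, pages):
    """Open in write-through mode and pass all pages to the OS in one call."""
    # O_DSYNC asks that a write return only once the data is on stable
    # storage. Fall back to a plain open where the flag does not exist.
    flags = os.O_WRONLY | os.O_CREAT | os.O_TRUNC | getattr(os, "O_DSYNC", 0)
    fd = os.open(path, flags, 0o600)
    try:
        # writev accepts a list of separate buffers, so the pages do not
        # have to be copied into one contiguous block first.
        # Note: a production writer would also loop on partial writes.
        return os.writev(fd, pages)
    finally:
        os.close(fd)
```

With four 4 KB pages, the first function issues eight system calls for the data (four writes, four fsyncs), while the second issues one.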

There have probably been others, but those have been the major ones. Let us look at the numbers, shall we? I am going to compare us to Esent, since this is our current storage engine.

The test writes out 10 million items in 100,000 transactions, with 100 items per transaction. This is done in a purely sequential manner. Each item has a 16-byte key and a 128-byte value.
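For a sense of the workload's shape, here is a rough sketch of such a test loop. It is not the actual test suite: the zero-padded decimal key format, the function names, and the in-memory dict standing in for a storage engine are all assumptions for illustration. At 144 bytes per item, the full run amounts to about 1.44 GB of payload, or 14,400 bytes per transaction.

```python
import time

KEY_SIZE = 16     # bytes per key, as in the test description
VALUE_SIZE = 128  # bytes per value, as in the test description


def sequential_batches(total_items, items_per_tx):
    """Yield one list of (key, value) pairs per transaction, keys ascending."""
    value = b"v" * VALUE_SIZE
    for start in range(0, total_items, items_per_tx):
        stop = min(start + items_per_tx, total_items)
        # Zero-padded decimal keys sort in the order they are generated.
        yield [(b"%016d" % i, value) for i in range(start, stop)]


def run(total_items, items_per_tx, commit):
    """Feed every batch to `commit` and return items written per second."""
    started = time.perf_counter()
    for batch in sequential_batches(total_items, items_per_tx):
        commit(batch)
    elapsed = time.perf_counter() - started
    return total_items / elapsed if elapsed > 0 else float("inf")


if __name__ == "__main__":
    store = {}  # hypothetical stand-in for a real storage engine
    rate = run(100_000, 100, store.update)
    print(f"{rate:,.0f} items/sec into an in-memory dict")
```

Swapping `store.update` for a function that commits a real transaction gives the same measurement against an actual engine; the full test would use `run(10_000_000, 100, ...)`.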

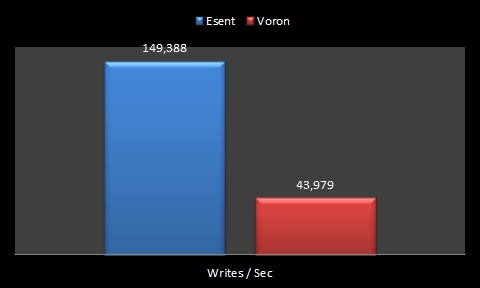

Here are the original code numbers.

Note that this was run on a W520 Lenovo with an SSD drive. The actual details don’t really matter, what matters is that we are comparing two runs happening on the same machine.

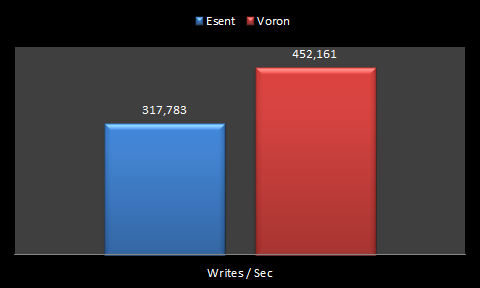

And now, let us compare Esent and Voron as they currently stand:

Yes, you see clearly.

The big jump in the Esent numbers happened because we also worked on the test suite and optimized things there, but the important thing is that we are now significantly faster than Esent.

In fact, we are fast enough that it took me a while to accept that we are actually doing it. But yes, you are seeing an order of magnitude improvement over what we used to have.

Feel free to take a moment to do a victory dance, that is what I’m doing right now.

However, note the title of this post. This is for single-threaded sequential inserts. The numbers are a lot worse for random writes, but we’ll deal with that in another post.

Comments

dancing and hoping you will also share some info on CPU load, GC impact etc. ... at some point.

Woohooo! :does a little dance:

Curious, what are the new Voron numbers against the original test suite?

Matt, I gave the original numbers.

Sorry, I meant - when things were being tested in the way that gave the ESENT perf of 149,388 writes/sec, what would be the equivalent Voron measurement? I would think somewhere around 200,000 ish?

Matt, Not really. Voron was stuck at much lower speeds because of fsync.

Well that is quite impressive.

That would be around 4 pages per tx, at 4521 tx/s. So roughly 70 MB/s in data, excluding journal writes. Which would amount to more than 140 MB/s disk transfer rate if the journal pages are uncompressed, or somewhere between 80-110 MB/s if the journal pages are compressed.

I should definitely get me an SSD soon :D

Alex, We are actually doing better since this was written :-)

Ayende, I think what Matt meant is that, just based on the graphs, it is not clear how much (or even if) Voron has improved. The first graph shows one test suite with one version of Voron, and the other shows a different test suite with a different version of Voron.

The only thing the graphs show is that ESENT is ~2x faster on the new test suite than the old... and I don't think anyone cares about that. What this series of blog posts is about is improving Voron and we all want to see how much faster Voron is now vs. Voron in the past. The graph as they are now, conceal this information, very odd to see.

Alexei, I understand now, and it makes sense, but we don't care very much about comparison to the past version. Sure, it would be nice to do a comparison, but it would mean doing some non-trivial work to run the old version of the test against the new version of the code, etc. What I care about is comparing to Esent.
